Restarting an MRS Cluster Component

While an MRS cluster is running, restart its components if you have modified component settings, encountered infrastructure resource faults, or detected service process errors.

Components in an MRS cluster support both standard restart and rolling restart.

- Standard restart: concurrently restarts all components or instances in the cluster, which may interrupt services.

- Rolling restart: restarts the required components or instances in batches to minimize or eliminate the impact on services. Compared with a standard restart, a rolling restart takes longer and may affect service throughput and performance. For instances in active/standby mode, the standby instance is restarted first, followed by the active instance.
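The ordering rule for a rolling restart can be illustrated with a minimal Python sketch. It is not how MRS implements the feature: the role labels (`standby`, `active`, `worker`), the instance names, and the `batch_size` parameter are hypothetical, and placing non-HA instances after the active/standby pair is an assumption made only for illustration.

```python
from typing import Dict, List


def plan_rolling_restart(instances: List[Dict[str, str]], batch_size: int = 2) -> List[List[str]]:
    """Return restart batches: standby instances, then active ones, then the rest.

    Each instance is a dict with hypothetical keys "name" and "role".
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    standby = [i["name"] for i in instances if i["role"] == "standby"]
    active = [i["name"] for i in instances if i["role"] == "active"]
    others = [i["name"] for i in instances if i["role"] not in ("standby", "active")]

    # Standby instances restart first, one at a time, followed by active ones.
    batches = [[name] for name in standby] + [[name] for name in active]

    # Remaining instances restart in fixed-size batches (assumed ordering).
    for start in range(0, len(others), batch_size):
        batches.append(others[start:start + batch_size])
    return batches
```

With one active and one standby instance plus three workers and `batch_size=2`, the plan contains four batches: the standby instance, the active instance, two workers, and the last worker.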

Table 4 describes the impact on services when a rolling restart is performed on components.

Restarting a cluster will stop the cluster components from providing services, which adversely affects the running of upper-layer applications or jobs. You are advised to perform rolling restarts during off-peak hours.

For details about whether services and instances in the current MRS cluster support rolling restart and the rolling restart parameters, see Component Restart Reference Information.

Notes and Constraints

- Perform a rolling restart during off-peak hours.

- If the service throughput of the Kafka service is high (over 100 MB/s) during a rolling restart, the restart will fail.

- Before an HBase rolling restart, check the current number of requests in HBase. If the requests per second on the native interface of any RegionServer exceed 10,000, increase the number of handlers to prevent RegionServer restart failures caused by heavy loads.

- Prefer a rolling restart of an instance or service, and select Only restart instances whose configurations have expired.
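The two numeric thresholds in the notes above can be checked before starting a rolling restart. The following Python sketch assumes the caller has already collected the current Kafka throughput and the per-RegionServer request rates from monitoring; the function name and its parameters are hypothetical, and only the thresholds come from this section.

```python
from typing import Dict, List

KAFKA_MAX_THROUGHPUT_MB_S = 100.0    # rolling restart fails above this throughput
HBASE_MAX_REQUESTS_PER_RS = 10_000   # requests per second on the native interface


def rolling_restart_warnings(
    kafka_throughput_mb_s: float,
    hbase_requests_per_regionserver: Dict[str, float],
) -> List[str]:
    """Return human-readable warnings; an empty list means no threshold is exceeded."""
    warnings = []
    if kafka_throughput_mb_s > KAFKA_MAX_THROUGHPUT_MB_S:
        warnings.append(
            f"Kafka throughput is {kafka_throughput_mb_s} MB/s (limit 100 MB/s): "
            "postpone the rolling restart."
        )
    for name, rps in sorted(hbase_requests_per_regionserver.items()):
        if rps > HBASE_MAX_REQUESTS_PER_RS:
            warnings.append(
                f"RegionServer {name} handles {rps} requests/s (limit 10,000): "
                "see the HBase note before restarting."
            )
    return warnings
```

An empty result does not guarantee a successful restart; it only means that neither documented threshold is exceeded at the time of the check.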

Impact on the System

- If the number of Core nodes in a cluster is less than six, services may be affected for a short period of time.

- Table 4 describes the impact of a component rolling restart.

Prerequisites

- The IAM users have been synchronized in advance. You can do this by clicking Synchronize next to IAM User Sync on the Dashboard page of the cluster details.

- You have logged in to MRS Manager. For how to log in, see Accessing MRS Manager.

Restarting an MRS Cluster Component

- Access the MRS cluster component management page.

  - Log in to the MRS console and click the cluster name to go to the cluster details page. Click Components.

  - If you are using Manager of MRS 3.x or later, log in to Manager and choose Cluster > Services.

  - If you are using Manager of MRS 2.x or earlier, log in to Manager and click Services.

- Click the name of the target component to go to the details page.

- On the service details page, expand the More drop-down list and select Restart Service or Service Rolling Restart.

- Enter the user password (required when you perform operations on Manager), confirm the operation impact, and click OK to restart the system.

If you select rolling restart, set the parameters listed in Table 1. (Required parameters may vary by version; set them based on the actual GUI.)

Figure 1 Performing a rolling restart on Manager

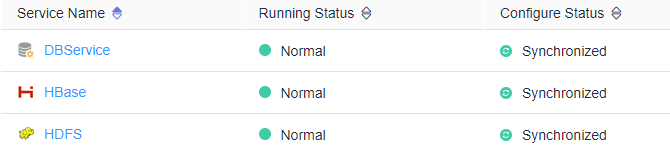

- After the restart or rolling restart of a component is successful, Running Status of the component is Normal and Configure Status is Synchronized.

Figure 2 MRS cluster components
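The success condition described in the last step can be expressed as a small check. This Python sketch is only an illustration: `fetch_status` is a hypothetical callable that returns the component's Running Status and Configure Status as strings (for example, values read manually from the page or from a monitoring tool of your choice); this document does not describe any API for retrieving them.

```python
import time
from typing import Callable, Tuple


def restart_succeeded(running_status: str, configure_status: str) -> bool:
    """True when the component shows the two values expected after a restart."""
    return running_status == "Normal" and configure_status == "Synchronized"


def wait_until_restarted(
    fetch_status: Callable[[], Tuple[str, str]],
    attempts: int = 30,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll fetch_status (hypothetical) until the restart succeeds or attempts run out."""
    for attempt in range(attempts):
        if restart_succeeded(*fetch_status()):
            return True
        if attempt < attempts - 1:
            sleep(interval_s)  # wait before the next poll
    return False
```

The `sleep` parameter is injectable so that the polling loop can be exercised in tests without real delays.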

Component Restart Reference Information

Table 2 lists whether each service and instance in an MRS cluster supports rolling restart.

| Service | Instance | Rolling Restart |
|---|---|---|
| Alluxio | AlluxioJobMaster | Yes |
| Alluxio | AlluxioMaster | Yes |
| ClickHouse | ClickHouseServer | Yes |
| ClickHouse | ClickHouseBalancer | Yes |
| CDL | CDLConnector | Yes |
| CDL | CDLService | Yes |
| Flink | FlinkResource | No |
| Flink | FlinkServer | No |
| Flume | Flume | Yes |
| Flume | MonitorServer | Yes |
| Guardian | TokenServer | Yes |
| HBase | HMaster | Yes |
| HBase | RegionServer | Yes |
| HBase | ThriftServer | Yes |
| HBase | RESTServer | Yes |
| HetuEngine | HSBroker | Yes |
| HetuEngine | HSConsole | Yes |
| HetuEngine | HSFabric | Yes |
| HetuEngine | QAS | Yes |
| HDFS | NameNode | Yes |
| HDFS | Zkfc | Yes |
| HDFS | JournalNode | Yes |
| HDFS | HttpFS | Yes |
| HDFS | DataNode | Yes |
| Hive | MetaStore | Yes |
| Hive | WebHCat | Yes |
| Hive | HiveServer | Yes |
| Hue | Hue | No |
| Impala | Impalad | No |
| Impala | StateStore | No |
| Impala | Catalog | No |
| IoTDB | IoTDBServer | Yes |
| Kafka | Broker | Yes |
| Kafka | KafkaUI | No |
| Kudu | KuduTserver | Yes |
| Kudu | KuduMaster | Yes |
| Loader | Sqoop | No |
| MapReduce | JobHistoryServer | Yes |
| Oozie | Oozie | No |
| Presto | Coordinator | Yes |
| Presto | Worker | Yes |
| Ranger | RangerAdmin | Yes |
| Ranger | UserSync | Yes |
| Ranger | TagSync | Yes |
| Spark | JobHistory | Yes |
| Spark | JDBCServer | Yes |
| Spark | SparkResource | Yes |
| Storm | Nimbus | Yes |
| Storm | UI | Yes |
| Storm | Supervisor | Yes |
| Storm | Logviewer | Yes |
| Tez | TezUI | No |
| YARN | ResourceManager | Yes |
| YARN | NodeManager | Yes |
| ZooKeeper | Quorumpeer | Yes |

Table 3 lists the restart duration of each service and the startup duration of its instances.

| Service | Restart Duration | Startup Duration | Remarks |
|---|---|---|---|
| IoTDB | 3 min | IoTDBServer: 3 min | - |
| CDL | 2 min | | - |
| ClickHouse | 4 min | | - |
| HDFS | 10 min + x | | x indicates the NameNode metadata loading duration. It takes about 2 minutes to load 10,000,000 files. For example, x is 10 minutes for 50 million files. The startup duration fluctuates with reporting of DataNode data blocks. |
| YARN | 5 min + x | | x indicates the time required for restoring ResourceManager reserved tasks. It takes about 1 minute to restore 10,000 reserved tasks. |
| MapReduce | 2 min + x | JobHistoryServer: 2 min + x | x indicates the scanning duration of historical tasks. It takes about 2.5 minutes to scan 100,000 tasks. |
| ZooKeeper | 2 min + x | quorumpeer: 2 min + x | x indicates the duration for loading znodes. It takes about 1 minute to load 1 million znodes. |
| Hive | 3.5 min | | - |
| Spark2x | 5 min | | - |
| Flink | 4 min | | - |
| Kafka | 2 min + x | | x indicates the data restoration duration. It takes about 2 minutes to start 20,000 partitions for a single instance. |
| Storm | 6 min | | - |
| Flume | 3 min | | - |
| Doris | 2 min | | - |
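The "base + x" durations in Table 3 can be turned into a rough estimate. The Python sketch below assumes that x scales linearly with the load figure given in the Remarks column; Table 3 confirms this only for the HDFS example (50 million files, x = 10 minutes), so treat the other results as approximations. The function and dictionary names are hypothetical.

```python
# service: (base minutes, minutes of x, per this many load units), taken from Table 3
RESTART_MODEL = {
    "HDFS": (10.0, 2.0, 10_000_000),      # files
    "YARN": (5.0, 1.0, 10_000),           # reserved tasks
    "MapReduce": (2.0, 2.5, 100_000),     # historical tasks
    "ZooKeeper": (2.0, 1.0, 1_000_000),   # znodes
    "Kafka": (2.0, 2.0, 20_000),          # partitions on a single instance
}


def estimate_restart_minutes(service: str, load: float) -> float:
    """Estimate the restart duration in minutes, assuming x grows linearly with load."""
    if service not in RESTART_MODEL:
        raise ValueError(f"no load-dependent model for service {service!r}")
    if load < 0:
        raise ValueError("load must be non-negative")
    base, minutes, per_units = RESTART_MODEL[service]
    return base + minutes * load / per_units
```

For example, `estimate_restart_minutes("HDFS", 50_000_000)` returns 20.0, which matches the 10 min + 10 min example given for HDFS.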

Table 4 describes the impact on the system during the rolling restart of components and instances.

| Component | Service Interruption | Impact on System |
|---|---|---|
| ClickHouse | During the rolling restart, if the submitted workloads can be completed within the timeout period (30 minutes by default), there is no impact. | Nodes undergoing a rolling restart reject all new requests. This affects single-replica services, the ON CLUSTER operation, and workloads that depend on the instances being restarted. If a request that is being executed is not completed within the timeout period (30 minutes by default), the request fails. |
| DBService | All services are normal during the rolling restart. | During the rolling restart, alarms indicating a heartbeat interruption between the active and standby DBService nodes may be reported. |
| Doris | Doris services are not interrupted during the rolling restart only when the following conditions are met: | During the rolling restart, the total resources decrease, which reduces the maximum memory and CPU resources available to jobs. In extreme cases, jobs may fail due to insufficient resources. If a job times out (30 minutes by default), retry the job. |
| Flink | All services are normal during the rolling restart. | The FlinkServer UI cannot be accessed during the rolling restart. |
| Flume | To prevent service interruptions and data loss, the following conditions must be met: | |
| Guardian | All services are normal during the rolling restart. | None |
| HBase | HBase read and write services are normal during the rolling restart. | |
| HDFS | | If a third-party client is used, the reliability of the third-party client during the rolling restart cannot be guaranteed. |
| HetuEngine | | |
| Hive | During the rolling restart, services with execution time longer than the decommissioning timeout period may fail. | |
| Kafka | During the rolling restart, reads and writes of Kafka topics with multiple replicas are normal, but operations on Kafka topics with only a single replica are interrupted. | |
| KrbServer | All services are normal during the rolling restart. | During the rolling restart, Kerberos authentication of a cluster may take a longer time. |
| LdapServer | All services are normal during the rolling restart. | During the rolling restart, Kerberos authentication of a cluster may take a longer time. |
| MapReduce | None | |
| Ranger | All services are normal during the rolling restart. | The RangerAdmin, RangerKMS, and PolicySync instances of Ranger are configured in active-active mode and can provide services in turn during the rolling restart. UserSync, however, supports only a single instance, so users cannot be synchronized while it restarts. Because the user synchronization period is 5 minutes and UserSync takes only a short time to restart, the UserSync restart has little impact on user synchronization. |
| Spark | Except the listed items, other services are not affected. | |
| YARN | | During the rolling restart of YARN, tasks running on YARN may experience exceptions due to excessive retries. |
| ZooKeeper | ZooKeeper read and write operations are normal during the rolling restart. | |

Helpful Links

- For details about how to start or stop a cluster component, see Starting and Stopping an MRS Cluster Component.

- For details about how to restart an MRS cluster, see Restarting an MRS Cluster.

- For details about how to restart a role instance, see Managing MRS Role Instances.
