PERF05-01 Optimizing Design

- Risk level

Medium

- Key strategies

- Fast path

Reduce the processing load of the dominant workload to improve response time. A piece of software can have many functions, but only a few of them are used frequently. These frequently used functions constitute the dominant workload. The fast path pattern identifies the dominant workload based on how often each function is used, then reduces the processing load of those functions or simplifies their execution path. Common fast paths include quick navigation keys and database indexes.
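The idea can be sketched in a few lines. The names below (`full_lookup`, `FastPathStore`, `dominant_keys`) are hypothetical and exist only for illustration; the sketch assumes an access log is available from which usage frequency can be counted.

```python
from collections import Counter


def full_lookup(records, key):
    """General path: a linear scan that works for any key."""
    for k, value in records:
        if k == key:
            return value
    return None


def dominant_keys(access_log, top_n):
    """Identify the dominant workload by usage frequency."""
    return [key for key, _ in Counter(access_log).most_common(top_n)]


class FastPathStore:
    """Hypothetical store that serves hot keys from a small index."""

    def __init__(self, records, hot_keys):
        self._records = records
        wanted = set(hot_keys)
        # Fast path: an index that covers only the dominant workload.
        self._hot_index = {k: v for k, v in records if k in wanted}

    def get(self, key):
        if key in self._hot_index:               # fast path
            return self._hot_index[key]
        return full_lookup(self._records, key)   # general path
```

Rarely used keys still work through the general path, so the fast path changes only the cost of the common case, not the behavior.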

- Prioritizing important tasks

Allocate resources to important tasks first. If not everything can be done in the available time, skip the least important tasks. This strategy handles the situation where instantaneous burst loads exceed the system capacity: the most important tasks are processed first. If the overload is not temporary, reduce the processing volume or upgrade the system. During overload, apply circuit breaking to non-critical features so that the main functions remain available.
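A minimal sketch of this selection step follows. The function name `plan_under_overload`, the task names, and the convention that a lower number means a more important task are all assumptions made for illustration.

```python
def plan_under_overload(tasks, capacity):
    """Split (priority, name) tasks into those to run and those to shed.

    Assumption: a lower priority number means a more important task.
    """
    ordered = sorted(tasks, key=lambda task: task[0])
    accepted = [name for _, name in ordered[:capacity]]
    shed = [name for _, name in ordered[capacity:]]
    return accepted, shed
```

For example, with a capacity of 2 and the tasks `[(2, "recommendations"), (0, "checkout"), (1, "search"), (3, "analytics")]`, the sketch keeps `checkout` and `search` and sheds the other two, which is where a circuit breaker for non-critical features would apply.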

- Aggregation

Combine the functions that are used together in most scenarios to reduce the interactions.

This pattern combines sub-functions that are often called together. Place related or tightly coupled functions in a single object, and use local interfaces instead of external or high-overhead interfaces (such as CORBA interfaces) that expose fine-grained objects. The aggregation pattern uses coarse-grained objects: combine frequently accessed data into an aggregate so that callers do not need a separate request for each piece of information. For example, the account class CustAcct can provide the access functions getName(), getAddress(), and getZip(). To create mailing labels, add a function genMailLableInfo() that returns all of this information in one call, which reduces the number of interactions.

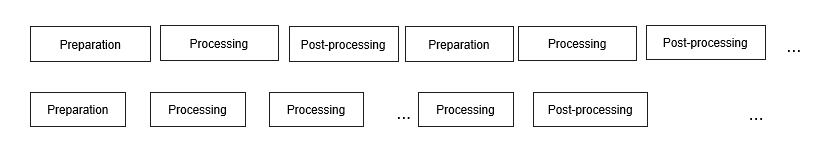
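The CustAcct example can be sketched as follows. The method names are taken from the text above; the fields and the `calls` counter are assumptions added only to make the number of interactions visible.

```python
from dataclasses import dataclass


@dataclass
class CustAcct:
    name: str
    address: str
    zip_code: str
    calls: int = 0  # illustrative counter of interface calls

    # Fine-grained accessors: one interaction per field.
    def getName(self):
        self.calls += 1
        return self.name

    def getAddress(self):
        self.calls += 1
        return self.address

    def getZip(self):
        self.calls += 1
        return self.zip_code

    # Coarse-grained accessor: one interaction for the whole label.
    def genMailLableInfo(self):
        self.calls += 1
        return {"name": self.name, "address": self.address, "zip": self.zip_code}
```

Building a label through the three accessors costs three calls, while `genMailLableInfo()` costs one. The saving matters most when each call crosses a process or network boundary.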

- Batch processing

Combine frequent service requests to reduce the overhead of request initialization, transmission, and termination. When this overhead is high, each request takes longer than the work itself requires. By combining requests into a batch, the overhead is paid once and shared among all requests in the batch, which improves processing efficiency. Batch saving in a database and Redis pipelines are common batch processing operations.

Batch processing model
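A minimal sketch of a client-side batcher follows. The `Batcher` class and its `send` callable are hypothetical stand-ins for a real bulk operation, such as a bulk insert or a pipelined command set.

```python
class Batcher:
    """Buffer items and hand them to `send` in groups of `batch_size`."""

    def __init__(self, send, batch_size):
        self._send = send              # called once per batch, not per item
        self._batch_size = batch_size
        self._pending = []

    def add(self, item):
        self._pending.append(item)
        if len(self._pending) >= self._batch_size:
            self.flush()

    def flush(self):
        """Send whatever is buffered; call this before shutdown."""
        if self._pending:
            self._send(list(self._pending))
            self._pending.clear()
```

With a batch size of 3, seven items result in three calls to `send` instead of seven. The trade-off is added latency for items that wait in the buffer, so a real batcher would usually also flush on a timer.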

- Bypass

Route requests that require extensive resources to other objects or other locations to reduce contention delay. This pattern is similar to taking an alternative route to bypass a traffic bottleneck and reach the destination. Solution 1: Divide the target object into finer-grained objects and distribute them to different physical locations. Solution 2: Create a separate thread for each data region. Each thread is responsible for updating only its assigned data region.

A process that depends on a single downstream process can be held back when that downstream process cannot keep up under high load, which creates a bottleneck. Use multiple downstream processes instead, with each process updating only its own data.
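Solution 2 can be sketched as follows. The function name `count_by_region` and the routing rule (`hash(key) % num_regions`) are assumptions for illustration; the point is that each thread owns one region, so no shared lock is needed during updates.

```python
import threading


def count_by_region(events, num_regions):
    """Count events per key, with one worker thread per data region."""
    regions = [{} for _ in range(num_regions)]
    inboxes = [[] for _ in range(num_regions)]

    # Route every event to the region that owns its key.
    for key in events:
        inboxes[hash(key) % num_regions].append(key)

    def worker(region, inbox):
        # Only this thread touches `region`, so there is no contention.
        for key in inbox:
            region[key] = region.get(key, 0) + 1

    threads = [
        threading.Thread(target=worker, args=(regions[i], inboxes[i]))
        for i in range(num_regions)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return regions
```

Because a key always maps to the same region, the per-region results can be merged afterward without conflicts.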

- Flexible schedule

Process the requests that require extensive resources at different time segments to reduce the contention delay.

When a large number of requests are sent at the same moment, demand for results surges and responses slow down, while at other times there are few or no requests. This situation happens when requests are processed at a specific frequency or at a specific time of day. Identify the functions that run on a regular schedule, then change their processing times, for example by distributing the start times randomly within a given time range.
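Random distribution of start times can be sketched as follows. The function name `jittered_start` and the parameter values in the usage note are hypothetical.

```python
import random


def jittered_start(base_seconds, window_seconds, rng=random):
    """Return a start time spread uniformly over [base, base + window]."""
    return base_seconds + rng.uniform(0, window_seconds)
```

For example, `jittered_start(3600, 600)` schedules a job somewhere in the ten minutes after the one-hour mark, so that many clients with the same nominal schedule do not all start at the same instant.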

- Space-time trade-off

Use more storage space to save execution time.

You can pre-store computed results or keep frequently accessed data in a form that makes later calculation cheap. You can also choose algorithms and data structures that spend memory to save time, for example, hash-based lookup. Another method is OLAP, which summarizes data in advance along a hierarchy and greatly reduces the time required for subsequent queries.

For example, when optimizing slow SQL statements, you can identify frequently accessed fields and create indexes on them to shorten access latency.
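As a small illustration of pre-stored results, the sketch below keeps a table of running totals so that any range sum is answered with one subtraction instead of a scan. The `RangeSum` class is hypothetical and is not tied to any particular database or OLAP product.

```python
from itertools import accumulate


class RangeSum:
    """Answer range-sum queries in constant time using extra storage."""

    def __init__(self, values):
        # Extra space: prefix[i] holds the sum of values[:i].
        self._prefix = [0, *accumulate(values)]

    def total(self, start, stop):
        """Return the sum of values[start:stop]."""
        return self._prefix[stop] - self._prefix[start]
```

The table costs one extra number per input value and must be rebuilt when the data changes, which is the usual price of this trade-off.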

- Handling valid workloads

Processing a large amount of unnecessary data places a heavy burden on processing capacity. This issue can arise when the processing design is oversimplified or when no one has analyzed which data actually needs to be processed. Analyze the data, filter it down to the data that must be processed, and redesign the processing solution accordingly.
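One common form of this filtering is to process only records that changed since the last run. The sketch below assumes each record carries an `updated_at` value; that field and the function names are hypothetical.

```python
def changed_since(records, last_sync):
    """Yield only the records modified after the last successful run."""
    for record in records:
        if record["updated_at"] > last_sync:
            yield record


def sync(records, last_sync, process):
    """Process the valid workload and return how many records were handled."""
    handled = 0
    for record in changed_since(records, last_sync):
        process(record)
        handled += 1
    return handled
```

The filter runs before the expensive `process` step, so unchanged records cost only a comparison.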

- Connections and synchronization for serial processing

Design close connections and effective synchronization measures for serial processing of threads or processes, to reduce the synchronous wait delay.

Divide a serial process into multiple threads that run in parallel, while ensuring that the threads still execute in the required order and with the required synchronization relationships. To achieve this, design the connections and synchronization between the threads carefully. Options include scheduled queries (polling), message synchronization, and event association. The chosen method should minimize the time that one stage spends waiting for another and meet the performance objectives.

Even with this strategy, the overall performance of the system can only reach the performance level of the weakest processing stage (ignoring the overhead of thread switching and semaphore operations).
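Message synchronization between stages can be sketched with bounded queues, as below. The names `stage`, `run_pipeline`, and `_DONE` are hypothetical; the sketch assumes one thread per stage, which preserves the order of items.

```python
import queue
import threading

_DONE = object()  # sentinel that tells each stage to stop


def stage(func, inbox, outbox):
    """Apply func to each message and pass the result downstream."""
    while True:
        item = inbox.get()            # blocks until upstream delivers
        if item is _DONE:
            outbox.put(_DONE)
            return
        outbox.put(func(item))


def run_pipeline(items, funcs, capacity=8):
    """Run funcs as pipeline stages connected by bounded queues."""
    queues = [queue.Queue(maxsize=capacity) for _ in range(len(funcs) + 1)]

    def feed():
        for item in items:
            queues[0].put(item)       # blocks when the first stage lags
        queues[0].put(_DONE)

    threads = [threading.Thread(target=feed)]
    threads += [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for t in threads:
        t.start()

    results = []
    while (item := queues[-1].get()) is not _DONE:
        results.append(item)
    for t in threads:
        t.join()
    return results
```

The bounded queues provide backpressure: a fast stage blocks on `put` when the next stage falls behind, so in steady state the pipeline produces results at the rate of its slowest stage.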
