What Do I Do If the Driver Is Unavailable After the Kernel Is Upgraded?

Updated on 2025-09-30 GMT+08:00

Symptom

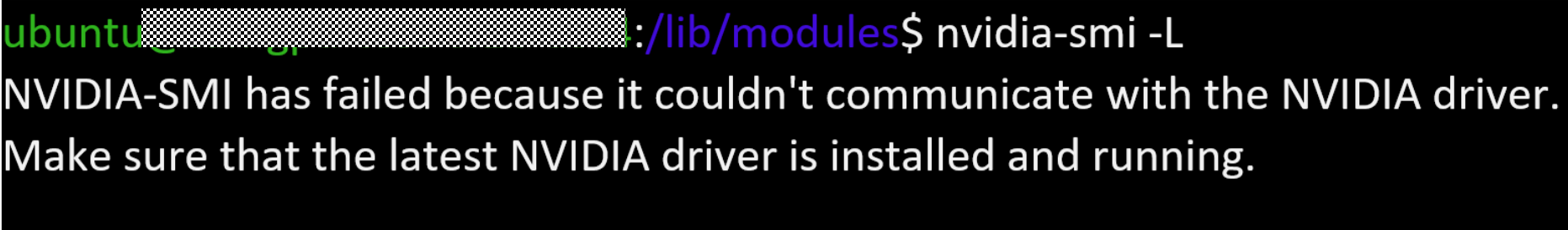

- When you run the nvidia-smi command, the error message "Failed to initialize NVML: Driver/library version mismatch" is displayed.

- When you run the nvidia-smi command, the error message "NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver" is displayed.
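
The two symptoms above can be told apart in a script by matching the text that nvidia-smi prints. The following is a minimal sketch, not part of the official procedure: the function name classify_nvidia_smi_output and the labels it prints are hypothetical, and the matching assumes the messages appear exactly as quoted above.

```shell
# Hypothetical helper: map nvidia-smi output to one of the two symptoms.
# The labels printed here are illustrative, not NVIDIA terminology.
classify_nvidia_smi_output() {
    case "$1" in
        *"Driver/library version mismatch"*)
            echo "version-mismatch" ;;
        *"couldn't communicate with the NVIDIA driver"*)
            echo "driver-unreachable" ;;
        *)
            echo "other" ;;
    esac
}

# Usage on a GPU-accelerated ECS (capture stdout and stderr together):
#   output="$(nvidia-smi 2>&1)"
#   classify_nvidia_smi_output "$output"
```

Either label points to the kernel version check described in the next section.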

Checking the Kernel Version

- Check the version of the running kernel, for example, by running the uname -r command.

- Run the command for your OS on the ECS to find the kernel version that the driver was installed for. The version is the directory name that follows modules/ in the returned path:

- CentOS: find /usr/lib/modules -name nvidia.ko

- Ubuntu: find /lib/modules -name nvidia.ko

If the current kernel version differs from the kernel version in the path returned by the find command, the kernel was upgraded after the driver was installed, and the driver is unavailable for the new kernel.
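
The comparison above can be sketched as a short script. This is an illustration under stated assumptions: the function name driver_built_for_kernel is hypothetical, uname -r is assumed as the way to read the running kernel version, and /lib/modules is assumed to be usable on both OSs (on CentOS, /lib is normally a symbolic link to /usr/lib).

```shell
# Hypothetical helper: succeed if an nvidia.ko file exists under the
# module directory of the given kernel version.
#   $1 = kernel version, $2 = modules root (defaults to /lib/modules)
driver_built_for_kernel() {
    kernel="$1"
    modules_dir="${2:-/lib/modules}"
    [ -n "$(find "$modules_dir/$kernel" -name nvidia.ko 2>/dev/null)" ]
}

# Compare against the running kernel (uname -r is an assumption here).
current="$(uname -r)"
if driver_built_for_kernel "$current"; then
    echo "nvidia.ko found for running kernel $current"
else
    echo "no nvidia.ko found for running kernel $current"
fi
```

If the script reports that no module was found for the running kernel while the find commands above return a path under a different kernel version, the situation matches the mismatch described in this section.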

Solution

- Run the following commands in sequence to unload the NVIDIA driver modules from the kernel:

rmmod nvidia_modeset

rmmod nvidia

- Run the nvidia-smi command to query the GPU information:

- If the command output is normal, the problem has been resolved.

- If the command still reports an error, refer to Why Is the GPU Driver Unavailable?
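
Before querying the GPU information, it can help to confirm that the rmmod commands actually unloaded the modules. The following is a minimal sketch, assuming the lsmod command is available on the ECS; the function name loaded_nvidia_modules is hypothetical.

```shell
# Hypothetical helper: read lsmod-style text on stdin and print the names
# of loaded modules that start with "nvidia" (the first column is the name).
loaded_nvidia_modules() {
    awk '$1 ~ /^nvidia/ { print $1 }'
}

# Usage on the ECS:
#   lsmod | loaded_nvidia_modules
# No output means that no module whose name starts with "nvidia" is loaded.
```

If the helper still prints nvidia or nvidia_modeset, the corresponding rmmod command did not take effect and can be checked for an error message.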
