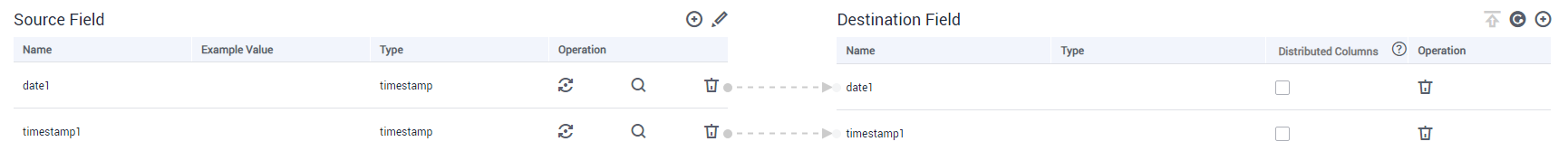

What Should I Do If a Hudi Read Job Fails Because the Field Mapping Page Has an Extra Column?

Cause: When Spark SQL writes data to a Hudi table, it automatically adds a column named col, of type array<string>, to the table schema. This column is then displayed on the field mapping page even though it does not belong to the source data.

Solution: Delete the col column from the field mapping. If auto table creation is enabled, also delete the column from the table creation SQL statement.
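The fix amounts to filtering one specific column out of the schema before it is mapped or used to create a table. The following is a minimal sketch, assuming the field mapping is represented as a list of (name, type) pairs. This representation and the helper names drop_extra_column and column_definitions are hypothetical and illustrative only; they are not part of any CDM or Hudi API. In practice the column is deleted on the field mapping page and in the SQL statement.

```python
from typing import List, Tuple

# Hypothetical representation of one field mapping entry: (column name, type)
Column = Tuple[str, str]

# The extra column that Spark SQL adds to the Hudi table schema
EXTRA_COLUMN: Column = ("col", "array<string>")


def drop_extra_column(columns: List[Column]) -> List[Column]:
    """Return the columns without the auto-added `col array<string>` entry.

    Name and type are compared case-insensitively, and spaces inside the
    type are ignored, so "ARRAY< STRING >" is also treated as the extra column.
    A column named col with any other type is kept.
    """
    return [
        (name, dtype)
        for name, dtype in columns
        if (name.lower(), dtype.replace(" ", "").lower()) != EXTRA_COLUMN
    ]


def column_definitions(columns: List[Column]) -> str:
    """Render a column list for a CREATE TABLE statement (illustrative only)."""
    return ",\n  ".join(f"`{name}` {dtype}" for name, dtype in columns)


if __name__ == "__main__":
    # Hypothetical Hudi table schema as read back after a Spark SQL write
    hudi_schema = [
        ("id", "bigint"),
        ("name", "string"),
        ("col", "array<string>"),
    ]
    cleaned = drop_extra_column(hudi_schema)
    print(cleaned)
    print(column_definitions(cleaned))
```

Matching on both the name and the type, rather than the name alone, avoids dropping a genuine business column that happens to be called col.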
