Workloads

Why Does the Service Performance Not Meet the Expectation?

CCI's underlying resources are shared by multiple tenants. To keep services stable, CCI rate-limits traffic to underlying resources such as disk I/O. For containers, read and write operations on the rootfs directory and the standard output (stdout) of workload logs are limited. If service performance does not meet your expectations, check the following possible causes:

- Service containers print a large number of log files to stdout streams.

CCI limits the forwarding traffic of standard output. If your service writes more than 1 MB of logs per second, use a log volume to report logs to Application Operations Management (AOM). For details, see Log Management. Alternatively, you can write logs to an SFS Turbo volume and run an open-source component such as Fluent Bit in a sidecar container to report them to your self-managed log center. If a large volume of logs is printed to stdout, this limit may degrade service performance.

- Service containers have high I/O read and write operations on the rootfs disk.

CCI limits the I/O of the container's system disk (rootfs). If a process performs heavy disk I/O (bandwidth > 6 Mbit/s, IOPS > 1,000) or is sensitive to disk I/O performance, do not run it in rootfs. For example, frequently printing logs to files in rootfs causes frequent read and write operations on it. Keep only infrequently read and written files and service configuration files in rootfs. For high-I/O file operations, use log volumes, FlexVolume volumes (created and deleted together with the pod), or persistent SFS Turbo volumes, depending on your service requirements. High I/O in rootfs may degrade service performance because of the disk I/O limit.

Rectify the fault in a timely manner to prevent the service performance from being affected.
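To make the two checks above concrete, the following is a minimal sketch, assuming you have already measured a container's stdout log rate and rootfs I/O with your own monitoring. The helper `check_io_profile` and its constant names are hypothetical; the threshold values are the ones quoted in this FAQ, and treating "either rootfs threshold exceeded" as high I/O is an assumption.

```python
from __future__ import annotations

# Thresholds quoted in this FAQ. Treating them as hard cutoffs is an assumption;
# confirm the actual limits for your region before relying on them.
STDOUT_LIMIT_MB_PER_S = 1.0          # stdout log rate above which a log volume is advised
ROOTFS_BANDWIDTH_LIMIT_MBIT_S = 6.0  # rootfs disk bandwidth guideline
ROOTFS_IOPS_LIMIT = 1000             # rootfs disk IOPS guideline


def check_io_profile(stdout_mb_per_s: float,
                     rootfs_mbit_per_s: float,
                     rootfs_iops: float) -> list[str]:
    """Return advice strings for a workload's measured I/O rates (hypothetical helper)."""
    advice: list[str] = []
    if stdout_mb_per_s > STDOUT_LIMIT_MB_PER_S:
        advice.append("stdout: report logs through a log volume (AOM) "
                      "or an SFS Turbo volume with a log-collector sidecar")
    # Assumption: exceeding either rootfs threshold counts as high I/O.
    if rootfs_mbit_per_s > ROOTFS_BANDWIDTH_LIMIT_MBIT_S or rootfs_iops > ROOTFS_IOPS_LIMIT:
        advice.append("rootfs: move high-I/O files to a log volume, "
                      "FlexVolume volume, or SFS Turbo volume")
    return advice


# Example: 2.5 MB/s of stdout logs, modest rootfs activity.
for line in check_io_profile(stdout_mb_per_s=2.5, rootfs_mbit_per_s=3, rootfs_iops=200):
    print(line)
```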

How Do I Set the Quantity of Pods?

- You can set the number of pods when creating a workload.

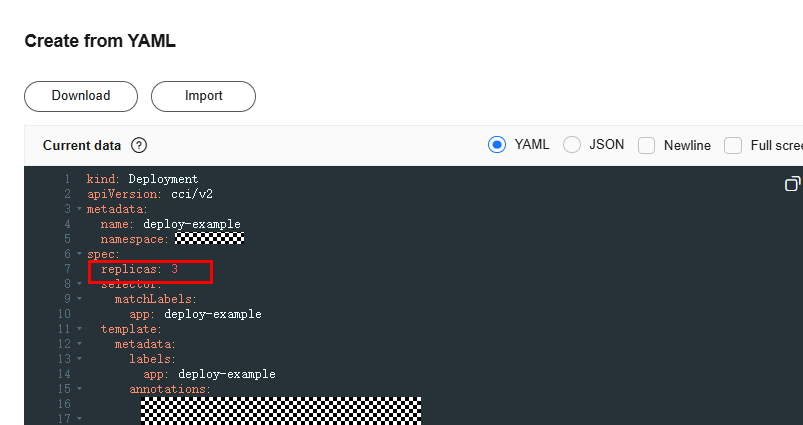

- When creating a workload using YAML, you can directly set the number of pods.

Figure 1 Setting the number of pods using YAML

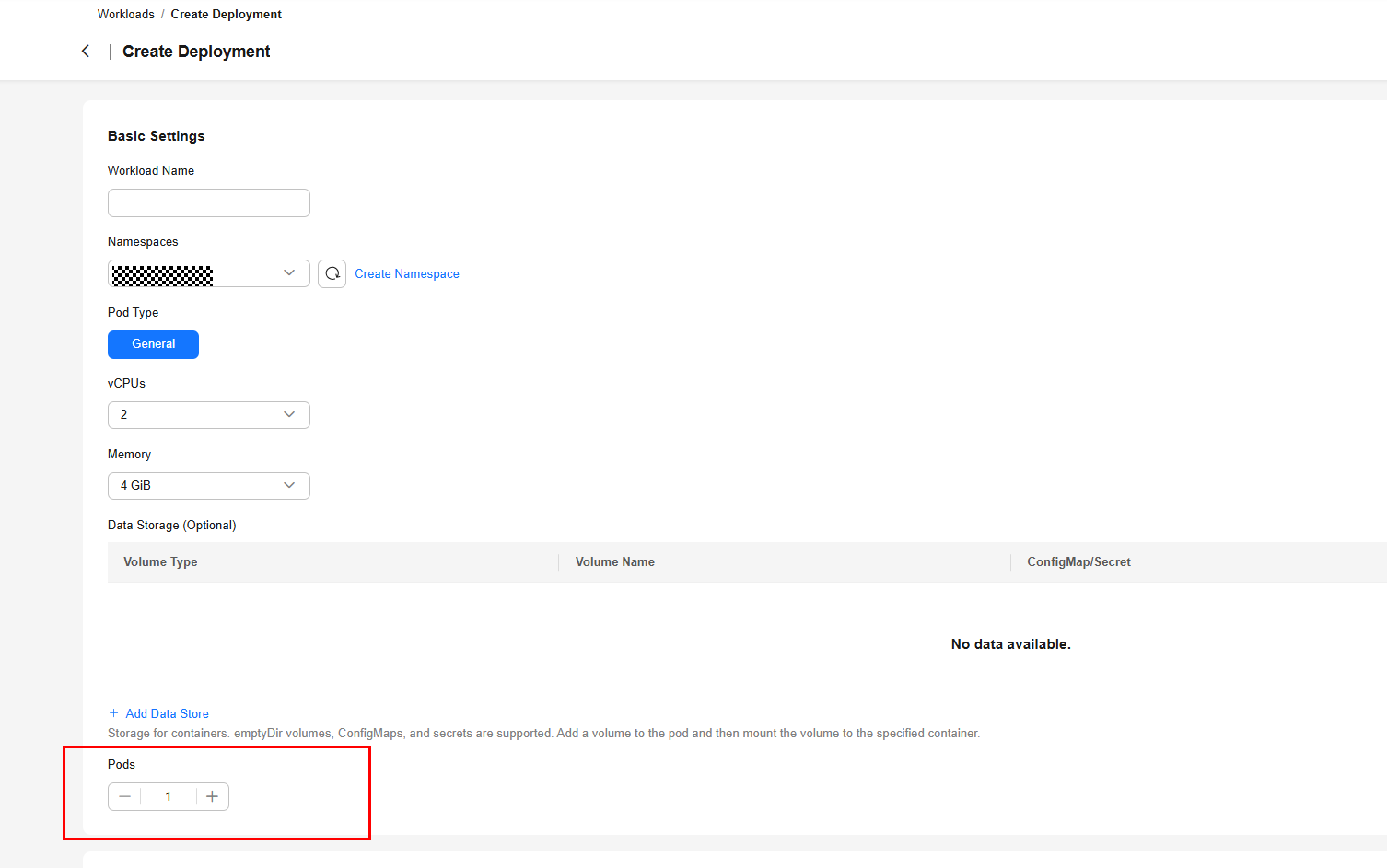

- When creating a workload on the console, you can specify the number of pods.

Figure 2 Setting the number of pods on the console


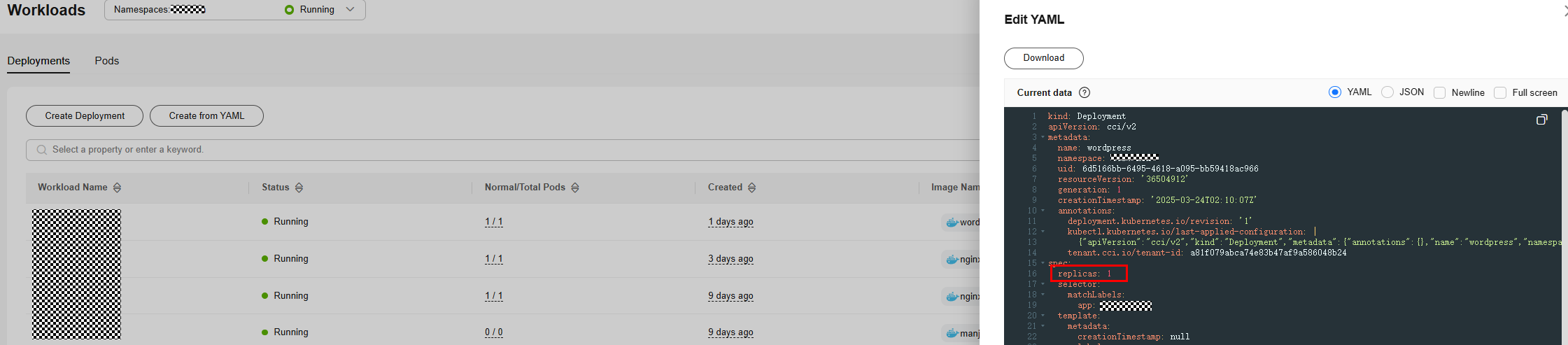

- After a workload is created, click Edit YAML and modify the replicas field.

Figure 3 Modifying the number of pods in the YAML file
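In YAML, the number of pods is the `spec.replicas` field of the workload. The sketch below builds an equivalent Kubernetes `apps/v1` Deployment as a Python dict and prints it as JSON, which shows where `replicas` sits relative to the selector and pod template. The helper names, the workload name `demo-app`, the image, and the CPU/memory values are illustrative assumptions; CCI may require other fields (for example, specific resource specifications) that this sketch does not validate.

```python
import copy
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment whose spec.replicas sets the pod count."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,                 # number of pods to run
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Illustrative resource values (assumption); use a
                        # CPU/memory specification that CCI accepts.
                        "resources": {
                            "limits": {"cpu": "500m", "memory": "1024Mi"},
                            "requests": {"cpu": "500m", "memory": "1024Mi"},
                        },
                    }],
                },
            },
        },
    }


def with_replicas(manifest: dict, replicas: int) -> dict:
    """Return a copy with a new replica count, like editing replicas in Edit YAML."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    updated = copy.deepcopy(manifest)
    updated["spec"]["replicas"] = replicas
    return updated


manifest = deployment_manifest("demo-app", "nginx:latest", replicas=2)
print(json.dumps(with_replicas(manifest, 3), indent=2))
```

`with_replicas` copies the manifest instead of mutating it, so the original definition stays available for comparison before you apply the change.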

How Do I Set Probes for a Workload?

CCI supports liveness, readiness, and startup probes. You can set them when creating a workload. For details, see Setting Health Check Parameters.
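The sketch below shows, in Kubernetes container-spec form, how the three probe types are typically combined, assuming HTTP GET probes. The paths `/healthz` and `/ready`, the port, and the timing values are hypothetical; check Setting Health Check Parameters for the probe types and fields CCI supports.

```python
def http_probe(path: str, port: int, period_s: int, failure_threshold: int) -> dict:
    """Build a Kubernetes-style HTTP GET probe."""
    return {
        "httpGet": {"path": path, "port": port},
        "periodSeconds": period_s,
        "failureThreshold": failure_threshold,
    }


def container_with_probes(name: str, image: str, port: int) -> dict:
    """Attach startup, liveness, and readiness probes to a container spec."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Allows up to 30 x 10 s = 300 s for startup; liveness and readiness
        # checks do not run until the startup probe succeeds.
        "startupProbe": http_probe("/healthz", port, period_s=10, failure_threshold=30),
        # After startup, restart the container if /healthz fails 3 times in a row.
        "livenessProbe": http_probe("/healthz", port, period_s=10, failure_threshold=3),
        # Mark the pod not ready (no Service traffic) while /ready is failing.
        "readinessProbe": http_probe("/ready", port, period_s=5, failure_threshold=3),
    }


def max_startup_seconds(probe: dict) -> int:
    """Upper bound on startup time the probe tolerates, ignoring initialDelaySeconds."""
    return probe["periodSeconds"] * probe["failureThreshold"]


container = container_with_probes("web", "nginx:latest", 80)
print(max_startup_seconds(container["startupProbe"]))
```

A startup probe with a generous budget lets a slow-starting application boot without loosening the liveness probe, which can then stay strict.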

How Do I Configure an Auto Scaling Policy?

CCI supports custom auto scaling policies. For details, see Scaling a Workload.
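For metric-based policies, it helps to know how a target value translates into a pod count. The following sketch implements the upstream Kubernetes HorizontalPodAutoscaler rule, desired = ceil(current replicas × current metric / target metric), with the default 10% tolerance and clamping to the minimum and maximum pod counts. This is an assumption about how a CCI policy behaves, not CCI's documented algorithm, and it omits stabilization windows and the handling of unready pods.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Upstream Kubernetes HPA rule: ceil(current * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the policy's configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 2 pods at 90% CPU against a 50% target: ceil(2 * 1.8) = 4 pods.
print(desired_replicas(2, 90, 50, min_replicas=1, max_replicas=10))  # prints 4
```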

What Do I Do If the Workload Created from the Sample Image Fails to Run?

If you have enabled SWR but have not pushed any images to it, SWR creates an image named sample. This image cannot run. Use an image from the Open Source Images tab to create the workload instead.

How Do I View Pods After I Call the API to Delete a Deployment?

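The propagationPolicy field in the API request for deleting a Deployment determines how the Deployment's pods are deleted. It can be set to Foreground or Background:

- Foreground: The pods are deleted first, and the Deployment is deleted after they are gone.
- Background: The Deployment is deleted immediately, and its pods are deleted afterward in the background. The pods may therefore remain visible for a short time after the API call returns.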
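The request below is a minimal sketch of such a deletion, assuming CCI exposes the Kubernetes-native Deployment path `/apis/apps/v1/namespaces/{namespace}/deployments/{name}` and accepts an IAM token in the `X-Auth-Token` header. The endpoint `https://cci.example.com`, the namespace, the Deployment name, and the token are placeholders; check the CCI API reference for the real endpoint and authentication. The request is only built, not sent.

```python
import json
import urllib.request

ALLOWED_POLICIES = ("Foreground", "Background")


def build_delete_deployment_request(endpoint: str, namespace: str, name: str,
                                    propagation_policy: str,
                                    token: str) -> urllib.request.Request:
    """Build a DELETE request whose DeleteOptions body sets propagationPolicy."""
    if propagation_policy not in ALLOWED_POLICIES:
        raise ValueError(f"propagation_policy must be one of {ALLOWED_POLICIES}")
    url = f"{endpoint}/apis/apps/v1/namespaces/{namespace}/deployments/{name}"
    body = {
        "kind": "DeleteOptions",
        "apiVersion": "v1",
        "propagationPolicy": propagation_policy,  # Foreground or Background
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="DELETE",
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
    )


req = build_delete_deployment_request(
    "https://cci.example.com", "demo-namespace", "demo-app", "Foreground", "<token>")
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it; not done here.
```

With Foreground, listing pods in the namespace right after the call shows them terminating while the Deployment still exists; with Background, the Deployment disappears first.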

Does CCI Support Container Startup in Privileged Mode?

Currently, CCI does not support running containers in privileged mode.

Instead, use security contexts for fine-grained permission control to keep the container runtime environment secure and reliable.
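As an illustration, the sketch below attaches a least-privilege Kubernetes `securityContext` to a container spec. The specific fields and the UID 1000 are assumptions chosen for illustration; CCI may accept only a subset of the upstream Kubernetes security context fields, so verify them against the CCI documentation before use.

```python
import copy

# Hypothetical least-privilege settings (Kubernetes field names); confirm
# which of these fields CCI accepts before relying on them.
RESTRICTED_SECURITY_CONTEXT = {
    "privileged": False,                # privileged mode is not supported on CCI anyway
    "allowPrivilegeEscalation": False,  # block gaining more privileges than the parent process
    "runAsNonRoot": True,               # kubelet refuses to start the container as UID 0
    "runAsUser": 1000,                  # illustrative non-root UID
    "capabilities": {"drop": ["ALL"]},  # start from no Linux capabilities
}


def with_security_context(container: dict,
                          context: dict = RESTRICTED_SECURITY_CONTEXT) -> dict:
    """Return a copy of the container spec with the given securityContext."""
    hardened = copy.deepcopy(container)
    hardened["securityContext"] = copy.deepcopy(context)
    return hardened


app = {"name": "app", "image": "nginx:latest"}
print(with_security_context(app)["securityContext"])
```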

Why Are Expenditures Still Deducted After I Delete a Workload?

If resources still appear on the My Resources page and expenditures are still deducted after you delete a workload, check whether any pods are still running in the namespace the workload belonged to. If there are running pods that you no longer need, delete them.
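To find such pods programmatically, you can list pods in the namespace and keep those in the Running phase. The sketch below assumes you already have the namespace's pod list as a Kubernetes `PodList` JSON object (for example, from `GET /api/v1/namespaces/{namespace}/pods`, assuming CCI exposes that Kubernetes path); the helper name and sample data are hypothetical.

```python
from __future__ import annotations


def running_pod_names(pod_list: dict) -> list[str]:
    """Return names of pods in phase Running from a Kubernetes PodList object."""
    return [
        pod["metadata"]["name"]
        for pod in pod_list.get("items", [])
        if pod.get("status", {}).get("phase") == "Running"
    ]


# Hypothetical PodList for a namespace whose Deployment was already deleted.
sample = {
    "kind": "PodList",
    "items": [
        {"metadata": {"name": "leftover-pod"}, "status": {"phase": "Running"}},
        {"metadata": {"name": "finished-job-pod"}, "status": {"phase": "Succeeded"}},
    ],
}
print(running_pod_names(sample))
```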
