Tool Calling

What Is Tool Calling?

Tool calling (also known as function calling or FC) links foundation models with external tools and APIs. It acts as a bridge between natural language and information systems by converting user requests into precise tool or API commands, effectively addressing their needs.

Working principle: Developers describe each tool's function and parameters to the model in natural language. During the dialog, the model decides whether a tool call is needed. When it is, the model returns the name of the matching tool function and its input parameters. The developer calls the tool and passes the result back to the model, which then summarizes the result or continues planning subtasks based on it.

Principles

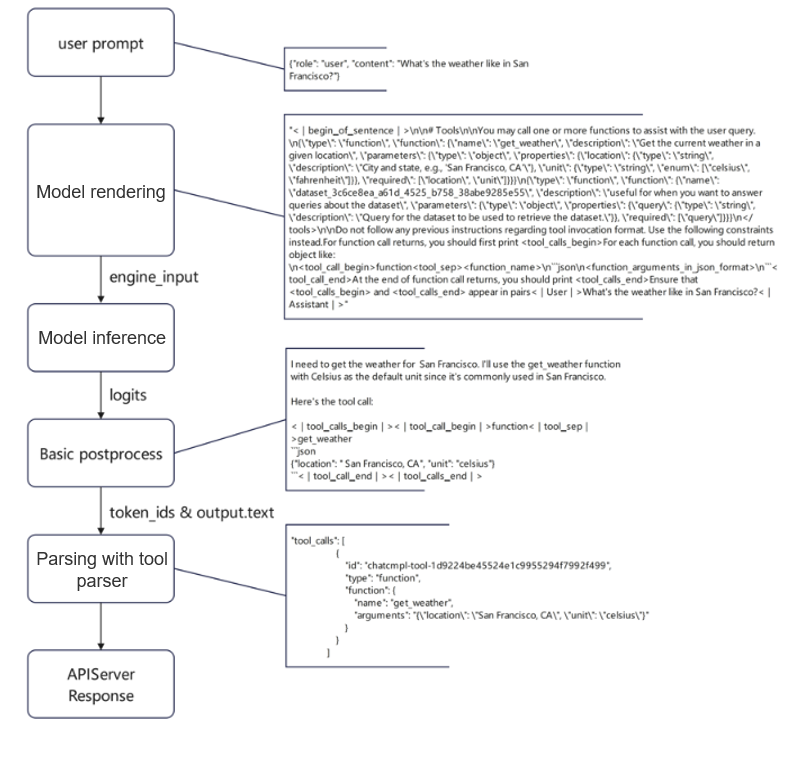

FC uses three components: the pre-processing module (serving_chat), the post-processing module (tool parser), and chat_template. The serving_chat module formats the user input using chat_template, while the tool parser processes the model's response. The figure above illustrates this process, which consists of the following steps:

- The system detects that the tool definition is transferred in the user input and the auto tool choice option is enabled.

- The system uses the chat template to render the user input, and constructs the tool information and user prompt in the model input.

- The model performs inference and completes the basic post-processing process.

- The tool parser parses the model output. If the model output contains tool call information, the tool parser parses the tool call information to the tool_calls field in the user response.
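The last step above can be sketched as follows. This is a simplified illustration, not vLLM's actual parser: it assumes the model wraps each call in Hermes-style `<tool_call>...</tool_call>` tags, and the function name `parse_tool_calls` and the `call-N` ID scheme are hypothetical.

```python
import json
import re

# Assumption: Hermes-style models emit <tool_call>{...}</tool_call> blocks.
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)

def parse_tool_calls(model_output: str) -> dict:
    """Split raw model text into plain content and a tool_calls list."""
    tool_calls = []
    for index, match in enumerate(TOOL_CALL_RE.finditer(model_output)):
        payload = json.loads(match.group(1))
        tool_calls.append({
            "id": f"call-{index}",  # hypothetical ID scheme
            "type": "function",
            "function": {
                "name": payload["name"],
                # OpenAI-style responses carry arguments as a JSON string.
                "arguments": json.dumps(payload.get("arguments", {})),
            },
        })
    # Whatever remains after removing the tagged blocks is ordinary content.
    content = TOOL_CALL_RE.sub("", model_output).strip()
    return {"content": content or None, "tool_calls": tool_calls}

raw = '<tool_call>\n{"name": "get_weather", "arguments": {"location": "Beijing"}}\n</tool_call>'
parsed = parse_tool_calls(raw)
print(parsed["tool_calls"][0]["function"]["name"])
```

If the output contains no tagged block, `tool_calls` stays empty and the text is returned unchanged as `content`.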

Specifications

| Model | Advantage | Description |
|---|---|---|
| Qwen3-32B/Qwen3-30B-A3B/Qwen3-235B-A22B/Qwen3-Coder-480B-A35B-Instruct | The performance and resource consumption are balanced, and the performance in MMLU (general knowledge) and C-Eval (Chinese evaluation) is excellent. | Qwen3-Coder-480B-A35B-Instruct tool_parser: qwen3_coder; Qwen3-32B/Qwen3-30B-A3B/Qwen3-235B-A22B tool_parser: hermes |

1. The models in the preceding table have been verified. The capabilities supported by other released models are the same as those of the community.

2. FC does not enhance model capabilities. The model inference capability affects the FC performance and accuracy.

Tool Choice Modes

Tool choice supports two modes: auto for automatic identification and named for specific function calls. It does not support the required mode for mandatory function calls.

Tool choice is incompatible with speculative inference.

| Mode | Supported | Description | Example |
|---|---|---|---|
| auto (recommended) | √ | The model automatically identifies whether the tool needs to be called. | "tool_choice": "auto" |
| named | √ | The system calls a specific function. | "tool_choice": {"type": "function", "function": {"name": "get_weather"}} |
| required | × | The model must perform a function call. | "tool_choice": "required" |
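As a minimal sketch of the two supported modes, the helper below builds the `tool_choice` value for a request and rejects anything else on the client side. The helper name `build_tool_choice` is hypothetical and not part of any API.

```python
from typing import Optional, Union

def build_tool_choice(mode: str, function_name: Optional[str] = None) -> Union[str, dict]:
    """Return the tool_choice value for a supported mode, or raise."""
    if mode == "auto":
        return "auto"
    if mode == "named":
        if not function_name:
            raise ValueError("named mode needs a function name")
        return {"type": "function", "function": {"name": function_name}}
    # "required" (and anything else) is rejected before the request is sent.
    raise ValueError(f"unsupported tool_choice mode: {mode}")

print(build_tool_choice("auto"))
print(build_tool_choice("named", "get_weather"))
```

Failing early in client code keeps an unsupported mode from reaching the server at all.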

Supported Processing Modes

| Mode | Supported | Description |
|---|---|---|
| Streaming output adaptation | √ | Enables incremental retrieval of tool call data to enhance responsiveness. |
| Multi-turn tool call | √ | Preserves conversational context across multiple tool calls, ensuring accurate execution and result fill-in. |
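With streaming output, a tool call arrives in fragments that the client has to stitch together. The sketch below assumes the OpenAI-compatible delta shape (an `index`, an optional `id`, and partial `function.name` / `function.arguments` strings) and models the deltas as plain dictionaries; the function name `merge_tool_call_deltas` is hypothetical.

```python
import json

def merge_tool_call_deltas(deltas):
    """Accumulate streamed tool-call fragments into complete calls."""
    calls = {}
    for delta in deltas:
        slot = calls.setdefault(delta["index"], {"id": None, "name": "", "arguments": ""})
        if delta.get("id"):
            slot["id"] = delta["id"]
        function = delta.get("function", {})
        # Name and arguments arrive as string fragments; concatenate them.
        slot["name"] += function.get("name") or ""
        slot["arguments"] += function.get("arguments") or ""
    return [calls[i] for i in sorted(calls)]

chunks = [
    {"index": 0, "id": "call-0", "function": {"name": "get_weather", "arguments": ""}},
    {"index": 0, "function": {"arguments": '{"location": '}},
    {"index": 0, "function": {"arguments": '"Beijing"}'}},
]
merged = merge_tool_call_deltas(chunks)
# Parse the arguments only after the stream has finished.
merged_arguments = json.loads(merged[0]["arguments"])
print(merged[0]["name"], merged_arguments)
```

The arguments string is valid JSON only once all fragments have arrived, which is why parsing is deferred to the end.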

Feature Compatibility

The table below lists the compatibility between function call and the existing key features of vLLM.

| Feature | Compatible | Description | Compatible Error Handling |
|---|---|---|---|
| Reasoning Outputs | √ | The chain-of-thought model generates detailed thought processes. For better performance during function calls, turning off this feature boosts efficiency and avoids errors when interpreting results due to unexpected outputs in reasoning_content. DeepSeek-R1: The chain of thought cannot be disabled. Qwen3: The chain of thought can be disabled. | - |
| Automatic Prefix Caching | √ | - | - |
| Chunked Prefill | √ | - | - |
| Speculative inference | √ | - | - |
| Graph mode | √ | - | - |

Auto mode's compatibility matches the previous section. Named mode has the same feature compatibility as guided decoding mode. Check the community feature compatibility matrix for specifics.

Basic Usage Process

- Prepare the environment.

Start the server with tool calling enabled. This example uses the Qwen3 model. You must use the hermes tool chat template from the vLLM examples directory.

    python -m vllm.entrypoints.openai.api_server \
        --model Qwen3/ \
        --chat-template=tool_chat_template_hermes.v1.jinja \
        --enable-auto-tool-choice \
        --tool-call-parser=hermes \
        --tool-parser-plugin=qwen3_tool_parser.py

- Define tools.

FC provides available tools to the model via the tools field in JSON format. The tools field includes information such as tool name, description, and parameter definitions. For details, see the tool parameter construction specifications.

Define the Tool Function

    def get_weather(location: str, unit: str = "celsius"):
        # unit is optional; the "celsius" default is an assumption for this example.
        return f"Getting the weather for {location} in {unit}..."

- Assume a tool function named get_weather is defined in the code to retrieve weather information for a specified location.

- location: The location for the weather query. This parameter is mandatory.

- unit: The temperature unit for the returned result. This parameter is optional.

- Note: This example simulates a weather query scenario. In real applications, you should call an actual weather API.

Define Tools

    "tools": [{
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {"type": "string", "description": "City and state, e.g., 'San Francisco, CA'"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
                },
                "required": ["location"]
            }
        }
    }]

- The tools field is a list, where each element represents a callable tool function. In this example, a tool named get_weather is defined.

- type: The type of the tool. This is a fixed value function, indicating a callable tool function.

- function: The function object that defines the tool's details, including name, description, and parameters.

- name: The function name, here it is get_weather.

- description: A description of the function, explaining its purpose.

- parameters: The required parameters for the function, defined as an object containing location and unit.

- location: Location information for the weather query. Type: string.

- unit: Temperature unit. Type: string (enum). Options: celsius and fahrenheit.

- required: Specifies required parameters. In this case, location is required.


- Send a request.

Include questions and required tools in your request. The model will detect and provide the necessary tools and their parameters based on these queries.

    from openai import OpenAI
    import json

    client = OpenAI(base_url="http://localhost:8000/v1", api_key="dummy")

    # User question
    messages = [{"role": "user", "content": "What's the weather like in San Francisco?"}]

    tools = [
        {
            # For details, see the tools defined in the "Define tools" step.
        }
    ]

    # Send a model request.
    response = client.chat.completions.create(
        model=client.models.list().data[0].id,
        messages=messages,
        tools=tools,
        tool_choice="auto"
    )

- Call external tools.

Use the details about tools and parameters provided by the model to call the relevant external tool or API and retrieve its output.

    # Extract the first tool call returned by the model.
    tool_call = response.choices[0].message.tool_calls[0]
    # Tool name
    tool_name = tool_call.function.name
    # Execute the tool based on the tool name.
    if tool_name == "get_weather":
        # Extract the arguments generated by the model.
        arguments = json.loads(tool_call.function.arguments)
        # Call the tool.
        tool_result = get_weather(**arguments)

- Obtain the list of tools called by the model from tool_calls returned by the model.

- Execute the tool based on the tool name. If the tool name is get_weather, extract user parameters and call the get_weather function to obtain the tool execution result.

- Fill in execution results and generate final response.

Return the tool execution result to the model as a message with role=tool. The model will then generate the final response based on this result.

    # Append the assistant message that contains the tool call, then the tool result.
    messages.append(response.choices[0].message)
    messages.append({
        "role": "tool",
        "tool_call_id": tool_call.id,
        "content": tool_result
    })
    # Call the model to generate the final response.
    final_response = client.chat.completions.create(
        model=client.models.list().data[0].id,
        messages=messages,
        tools=tools,
        tool_choice="auto"
    )
    print(final_response.choices[0].message.content)
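When more than one tool is registered, the call-and-fill-back steps can be routed through a lookup table instead of an if-chain. The following is a minimal sketch under stated assumptions: `TOOL_REGISTRY` and `run_tool_call` are hypothetical names, the `celsius` default is an assumption, and a `SimpleNamespace` stands in for the tool call object returned by the client so the snippet runs without a server.

```python
import json
from types import SimpleNamespace

def get_weather(location: str, unit: str = "celsius") -> str:
    return f"Getting the weather for {location} in {unit}..."

# Hypothetical registry mapping tool names to Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}

def run_tool_call(tool_call) -> dict:
    """Execute one tool call and return the role=tool message for the model."""
    name = tool_call.function.name
    if name not in TOOL_REGISTRY:
        result = f"Error: unknown tool '{name}'"
    else:
        try:
            arguments = json.loads(tool_call.function.arguments)
            result = TOOL_REGISTRY[name](**arguments)
        except (json.JSONDecodeError, TypeError) as exc:
            # Report bad arguments to the model instead of crashing.
            result = f"Error: invalid arguments for '{name}': {exc}"
    return {"role": "tool", "tool_call_id": tool_call.id, "content": str(result)}

# Stand-in for response.choices[0].message.tool_calls[0].
fake_call = SimpleNamespace(
    id="call-0",
    function=SimpleNamespace(name="get_weather", arguments='{"location": "San Francisco, CA"}'),
)
tool_message = run_tool_call(fake_call)
print(tool_message["content"])
```

Returning errors as tool messages gives the model a chance to correct its arguments on the next turn.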

Complete Code Example

    from openai import OpenAI
    import json

    client = OpenAI(base_url="http://localhost:8000/v1", api_key="dummy")

    def get_weather(location: str, unit: str):
        return f"Getting the weather for {location} in {unit}..."

    tool_functions = {"get_weather": get_weather}

    tools = [{
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {"type": "string", "description": "City and state, e.g., 'San Francisco, CA'"},
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
                },
                "required": ["location", "unit"]
            }
        }
    }]

    response = client.chat.completions.create(
        model=client.models.list().data[0].id,
        messages=[{"role": "user", "content": "What's the weather like in San Francisco?"}],
        tools=tools,
        tool_choice="auto"
    )

    tool_call = response.choices[0].message.tool_calls[0].function
    print(f"Function called: {tool_call.name}")
    print(f"Arguments: {tool_call.arguments}")
    print(f"Result: {get_weather(**json.loads(tool_call.arguments))}")

Example output:

Function called: get_weather

Arguments: {"location": "San Francisco, CA", "unit": "fahrenheit"}

Result: Getting the weather for San Francisco, CA in fahrenheit...

Prompt Best Practices

The performance of Function Calling (FC) largely depends on the reasoning capabilities of the underlying model. To achieve efficient tool invocation, you should define prompts with clear and concise task descriptions, paired with well-structured tool function definitions. When using FC, follow these principles:

- Keep task definitions simple and direct. Avoid including irrelevant or excessive information that may distract the model.

- Avoid using the model for tasks that can be handled by code, as model reasoning is inherently probabilistic.

The table below lists common mistakes and recommended fixes.

| Category | Issue | Incorrect Example | Corrected Example |
|---|---|---|---|
| Function definition | Poor function naming and vague description | `{"type": "function", "function": {"name": "func1", "description": "Utility function"}}` | `{"type": "function", "function": {"name": "CreateTask", "description": "Creates a task for the user and returns the task ID when a new work item is needed."}}` |
| Parameter definition | Redundant structure and repetition | `{"time": {"type": "object", "description": "City", "properties": {"city": {"description": "City"}}}}` | `{"time": {"type": "string", "description": "City"}}` |
| Parameter definition | Unnecessary input parameters with fixed values | `{"time": {"type": "object", "description": "City", "properties": {"city": {"description": "Always pass Hangzhou"}}}}` | If an input parameter is fixed and not necessary, it should be removed and handled directly in code. |
| Prompt | Prompt complexity leading to redundant tool calls | System prompt: You are communicating with user Marvin. You need to first query the user ID, then use it to create a task... | System prompt: You are communicating with user Marvin (ID=123). You can use the user ID to create a task... |
| Prompt | Ambiguous task definitions | System prompt: You can use the ID to find the user and get the task ID. (The model struggles to tell the two IDs apart, which can lead to incorrect usage by the model.) | System prompt: Each user has a unique user ID; each task has a task ID. You can use the user ID to retrieve user information and obtain all associated task IDs. |
| Prompt | Mismatch between task definition and tool function | Tool function requires location and date. Prompt: Query the weather in Beijing. (The model fails to call the function if the task lacks date details or uses the default date value, leading to incorrect results.) | Prompt: Query the weather in Beijing on July 30, 2025. |
| Prompt | Format conflicts | If the system prompt specifies a return format that conflicts with the function call, it may cause tool call failures. | Remove any formatting instructions that interfere with function execution. |

Best Practices of Tool Function Definition

The model needs correct tool function definitions to work properly. These definitions must follow the JSON Schema standards. Build the tools object using these guidelines:

Structure of tools

    "tools": [
        {
            "type": "function",
            "function": {
                "name": "...",          // Function name (lowercase letters and underscores)
                "description": "...",   // Function description
                "parameters": { ... }   // Function parameters (in JSON Schema format)
            }
        }
    ]

- type: The type of the tool. This is a fixed value function, indicating a callable tool function.

- function: Function object, which is used to define the name, description, and parameters of a tool function.

Description

function

| Field | Type | Mandatory | Description |
|---|---|---|---|
| name | string | Yes | Function name, which is unique. Use lowercase letters and underscores (_). |
| description | string | Yes | A description of the function, explaining its purpose. |
| parameters | object | Yes | Function parameters, which must comply with the JSON Schema format. |

parameters

parameters must comply with the JSON Schema format.

    "parameters": {
        "type": "object",
        "properties": {
            "name": {
                "type": "string | number | boolean | object | array | integer",
                "description": "Attribute description"
            }
        },
        "required": [
            "Mandatory parameters"
        ]
    }

- type: The value must be object.

- properties: All supported attributes and their types.

- name: Parameter name, which must be an English string and must be unique.

- type: The value must comply with the JSON Schema specifications. The supported types include string, number, boolean, integer, object, and array.

- required: Parameters that are mandatory in the function.

- Other parameters vary depending on the value of type. For details, see the following table.

| Type | Example |
|---|---|
| String, integer, number, and boolean | N/A |
| Object | |
| Array | |
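For the object and array types, standard JSON Schema nests further keywords: an object property carries its own `properties` (and optionally `required`), and an array property describes its elements under `items`. The snippet below is an illustrative sketch only; the parameter names `address`, `city`, `zip_code`, and `item_ids` are invented for this example.

```python
import json

# Hypothetical parameters block mixing an object property and an array property.
parameters = {
    "type": "object",
    "properties": {
        "address": {
            "type": "object",
            "description": "Delivery address",
            # Nested object: define its fields under "properties".
            "properties": {
                "city": {"type": "string", "description": "City name"},
                "zip_code": {"type": "string", "description": "Postal code"},
            },
            "required": ["city"],
        },
        "item_ids": {
            "type": "array",
            "description": "IDs of the items to ship",
            # Array: describe the element type under "items".
            "items": {"type": "integer"},
        },
    },
    "required": ["address", "item_ids"],
}

print(json.dumps(parameters, indent=2))
```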

Complete Example

    "tools": [
        {
            "type": "function",
            "function": {
                "name": "get_weather",
                "description": "Get weather information for a specified location",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "City and state, e.g., 'San Francisco, CA'"
                        }
                    },
                    "required": [
                        "location"
                    ]
                }
            }
        }
    ]

Notes

- Case-sensitive: All field names and parameter names are case-sensitive (lowercase is recommended).

- Schema compliance: All definitions must adhere to JSON Schema standards and should be verifiable through JSON Schema validators.
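Before sending a request, the rules in this section can be checked in code. The sketch below is a lightweight structural check, not a full JSON Schema validator; the function name `check_tool` and the exact rule set are assumptions drawn from the field descriptions above.

```python
import re

# Lowercase letters and underscores only, as recommended for function names.
NAME_RE = re.compile(r"^[a-z_]+$")

def check_tool(tool: dict) -> list:
    """Return a list of problems found in one tool definition (empty if none)."""
    problems = []
    if tool.get("type") != "function":
        problems.append("type must be 'function'")
    function = tool.get("function", {})
    if not NAME_RE.match(function.get("name", "")):
        problems.append("name should use lowercase letters and underscores")
    if not function.get("description"):
        problems.append("description is missing")
    parameters = function.get("parameters", {})
    if parameters.get("type") != "object":
        problems.append("parameters.type must be 'object'")
    properties = parameters.get("properties", {})
    for required_name in parameters.get("required", []):
        if required_name not in properties:
            problems.append(f"required parameter '{required_name}' is not defined")
    return problems

good_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get weather information for a specified location",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string", "description": "City"}},
            "required": ["location"],
        },
    },
}
bad_tool = {
    "type": "function",
    "function": {
        "name": "GetWeather",
        "parameters": {"type": "object", "properties": {}, "required": ["location"]},
    },
}
print(check_tool(good_tool))
print(check_tool(bad_tool))
```

For full compliance checking, a dedicated JSON Schema validator remains the better choice; this sketch only catches the most common slips.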

Best Practices

- Core Guidelines for Tool Descriptions
  - Tool descriptions should clearly articulate the tool's capabilities, parameter meanings and impact, applicable scenarios (or restricted use cases), and any constraints (like input length limits). Each tool should be described in 3 to 4 concise sentences.
  - Prioritize completeness in function and parameter definitions; examples are supplementary and should be added cautiously, especially when used with reasoning models.
- Function Design Principles
  - Naming and parameters: Function names should be intuitive (like parse_product_info). Parameter descriptions should include both format (like city: string) and business meaning (like Full name of the target city). Clearly specify expected output (like Return weather data in JSON format).
  - System prompt: Use system prompts to define call conditions (like "Trigger get_product_detail when the user asks about product information").
  - Engineering design:
    - Principle of least astonishment: Use enumerated types (like StatusEnum) to avoid invalid inputs and ensure logical clarity.
    - Clarity principle: Prompts should be phrased clearly enough for humans to make intuitive decisions.
  - Call optimization:
    - Pass known parameters implicitly through code (for example, submit_order should not require repeated declaration of user_id).
    - Merge fixed-process functions (for example, combine query_location and mark_location into query_and_mark_location).
  - Quantity and performance: Limit the number of functions to 20 or fewer. Use debugging tools to iteratively refine function schemas. For complex scenarios, consider leveraging fine-tuning to improve accuracy.
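The call optimization guideline about passing known parameters through code can be sketched with `functools.partial`. Everything here is illustrative: the `submit_order` signature, the `product_id` and `quantity` parameters, and the `bind_session_tools` helper are assumptions, since the source only names the function and `user_id`.

```python
from functools import partial

def submit_order(user_id: str, product_id: str, quantity: int) -> str:
    # Hypothetical business function; a real one would call an order service.
    return f"order for user {user_id}: {quantity} x {product_id}"

# The schema exposed to the model omits user_id, which the session already knows.
SUBMIT_ORDER_TOOL = {
    "type": "function",
    "function": {
        "name": "submit_order",
        "description": "Submit an order for the current user.",
        "parameters": {
            "type": "object",
            "properties": {
                "product_id": {"type": "string", "description": "Product identifier"},
                "quantity": {"type": "integer", "description": "Number of units"},
            },
            "required": ["product_id", "quantity"],
        },
    },
}

def bind_session_tools(user_id: str) -> dict:
    """Bind session-level values so the model never has to supply them."""
    return {"submit_order": partial(submit_order, user_id)}

tools_for_session = bind_session_tools("123")
# The model only generates product_id and quantity.
result = tools_for_session["submit_order"](product_id="sku-1", quantity=2)
print(result)
```

Keeping `user_id` out of the schema removes one value the model could get wrong and shortens the tool definition.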

Request practice

- Tool calls

Example request:

    {
        "model": "deepseek",
        "messages": [
            {"role": "user", "content": "Obtain the weather in Beijing"}
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",
                    "description": "Query weather",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {"type": "string", "description": "City"},
                            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
                        },
                        "required": ["location", "unit"]
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "send_email",
                    "description": "Send an email",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "userInput": {"type": "string", "description": "Email content"}
                        },
                        "required": ["userInput"]
                    }
                }
            }
        ],
        "tool_choice": "auto",
        "temperature": 0,
        "stream": false
    }

Request result:

    {
        "id": "1530/chat-c7277abfbc724677a570c03c7541edd7",
        "object": "chat.completion",
        "created": 1753423691,
        "model": "deepseek",
        "choices": [
            {
                "index": 0,
                "message": {
                    "role": "assistant",
                    "content": null,
                    "reasoning_content": null,
                    "tool_calls": [
                        {
                            "id": "chatcmpl-tool-6714630cc3fc4551a156aa48715d5139",
                            "type": "function",
                            "function": {
                                "name": "get_weather",
                                "arguments": "{\"location\":\"Beijing\",\"unit\":\"celsius\"}"
                            }
                        }
                    ]
                },
                "logprobs": null,
                "finish_reason": "tool_calls",
                "stop_reason": null
            }
        ],
        "usage": {"prompt_tokens": 309, "total_tokens": 359, "completion_tokens": 50},
        "prompt_logprobs": null
    }

- Tool summary

Example request:

    {
        "model": "deepseek",
        "messages": [
            {"role": "user", "content": "Obtain the weather in Beijing"},
            {
                "role": "assistant",
                "tool_calls": [
                    {
                        "id": "chatcmpl-tool-fc6986a3dc014e80a5d3e091c60648d9",
                        "type": "function",
                        "function": {
                            "name": "get_weather",
                            "arguments": "{\"location\": \"Beijing\", \"unit\": \"celsius\"}"
                        }
                    }
                ]
            },
            {
                "role": "tool",
                "tool_call_id": "chatcmpl-tool-fc6986a3dc014e80a5d3e091c60648d9",
                "content": "Beijing's temperature today ranges from 20 to 50 degrees.",
                "name": "get_weather"
            }
        ],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",
                    "description": "Query weather",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {"type": "string", "description": "City"},
                            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
                        },
                        "required": ["location", "unit"]
                    }
                }
            },
            {
                "type": "function",
                "function": {
                    "name": "send_email",
                    "description": "Send an email",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "userInput": {"type": "string", "description": "Email content"}
                        },
                        "required": ["userInput"]
                    }
                }
            }
        ],
        "tool_choice": "auto",
        "temperature": 0,
        "stream": false
    }

Request result:

    {
        "id": "1530/chat-2966628beae0430b872b994f7ef0f9b4",
        "object": "chat.completion",
        "created": 1753423849,
        "model": "deepseek",
        "choices": [
            {
                "index": 0,
                "message": {
                    "role": "assistant",
                    "content": "Beijing's temperature today ranges from 20 to 50 degrees.",
                    "reasoning_content": null,
                    "tool_calls": []
                },
                "logprobs": null,
                "finish_reason": "stop",
                "stop_reason": null
            }
        ],
        "usage": {"prompt_tokens": 387, "total_tokens": 398, "completion_tokens": 11},
        "prompt_logprobs": null
    }

Exception Handling

JSON format error tolerance mechanism

If the JSON returned by the model is slightly malformed, use the json-repair library for fault tolerance.

    import json_repair

    # The closing brace is missing, so json.loads would reject this string.
    invalid_json = '{"location": "Beijing", "unit": "°C"'
    valid_json = json_repair.loads(invalid_json)

Tool Call Exception

If the model fails to call the tool function properly because of the prompt or function definition, improve both using best practices.

Model Return Error

FC relies on the model's capabilities. Because of model hallucination, its output might not match expectations, causing the tool to fail.

If tool calls fail only occasionally in a given scenario, you can add a retry mechanism to improve reliability.
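A retry mechanism can be as small as the sketch below, which repeats the request until the returned arguments parse as JSON. The names `call_with_retry` and `request_fn` are hypothetical, and the tool call is modeled as a plain dictionary with `name` and `arguments` keys so the example runs without a server.

```python
import json

def call_with_retry(request_fn, max_attempts: int = 3):
    """Repeat request_fn until it yields a tool call with parseable arguments."""
    last_error = None
    for _ in range(max_attempts):
        try:
            tool_call = request_fn()
            arguments = json.loads(tool_call["arguments"])
            return tool_call["name"], arguments
        except (KeyError, TypeError, json.JSONDecodeError) as exc:
            last_error = exc  # remember the failure and try again
    raise RuntimeError(f"no valid tool call after {max_attempts} attempts") from last_error

# Simulated model responses: the first is cut off, the second is valid.
responses = iter([
    {"name": "get_weather", "arguments": '{"location": "Beij'},
    {"name": "get_weather", "arguments": '{"location": "Beijing"}'},
])
name, arguments = call_with_retry(lambda: next(responses))
print(name, arguments)
```

In a real client, `request_fn` would wrap the chat completion call; capping the attempts keeps a persistent failure from looping forever.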

Q&A

Q: Why does DeepSeek-R1-0528 return the <result> tag in content in non-streaming mode?

A: This issue stems from the model's automatic tag generation. It does not impact FC, so you can safely disregard it.

Q: Why does the </think> tag occasionally appear in reasoning_content when deep thinking is enabled in chain-of-thought models such as DeepSeek-R1?

A: The DeepSeek-R1 model sometimes outputs unnecessary </think> tags due to a known issue. This can cut off parts of the chain-of-thought process. While there is no fix yet, adding a retry may help.

Q: Why does the returned reasoning_content contain the <tool_calls_begin> tag when deep thinking is enabled in the chain-of-thought models such as DeepSeek-R1?

A: Special characters are used in the preset prompt template of FC, which does not affect the normal use of FC. You can ignore the special characters.

Q: When FC is used and only one function call is expected, why are multiple function calls returned?

A: The model decides how many function calls to return. Extra calls often appear when the prompt or the tool function definitions are unclear. To fix this, improve your prompts and tool definitions using the recommended practices.

Q: When FC is used, why can tool_calls not be parsed after max_tokens is configured?

A: If the value of max_tokens is too small, the model output may be truncated and the tool_calls content cannot be properly parsed. Therefore, you need to set max_tokens to a proper value.
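Truncation can be detected in code before trusting the parsed result. The sketch below assumes the OpenAI-compatible convention that `finish_reason` is `"length"` when generation stops at the max_tokens limit; the helper name `tool_calls_or_error` is hypothetical, and the responses are plain dictionaries.

```python
def tool_calls_or_error(response: dict) -> list:
    """Return tool_calls, or raise if the output was cut off by max_tokens."""
    choice = response["choices"][0]
    # Assumption: "length" marks output truncated at the token limit.
    if choice.get("finish_reason") == "length":
        raise ValueError("output truncated by max_tokens; increase max_tokens and retry")
    return choice["message"].get("tool_calls") or []

complete = {
    "choices": [{
        "finish_reason": "tool_calls",
        "message": {"tool_calls": [{"function": {"name": "get_weather", "arguments": "{}"}}]},
    }]
}
truncated = {"choices": [{"finish_reason": "length", "message": {"tool_calls": []}}]}

print(len(tool_calls_or_error(complete)))
```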
