MRS Service Scenarios

MRS consists of multiple big data components, and you can select the cluster type that best fits your service requirements, data types, reliability expectations, and resource budget.

You can buy a cluster quickly from a preset cluster template, or buy one manually by selecting the component list and configuring advanced settings.

| Type | Scenario | Core component |
|---|---|---|
| Hadoop analysis cluster | Hadoop cluster uses components in the open source Hadoop ecosystem to analyze and query vast amounts of data. For example, use YARN to manage cluster resources, Hive and Spark to provide offline storage and computing of large-scale distributed data, Spark Streaming and Flink to offer streaming data computing, and Tez to provide a distributed computing framework of directed acyclic graphs (DAGs). | Hadoop, Hive, Spark, Tez, Flink, ZooKeeper, and Ranger |
| HBase query cluster | An HBase cluster uses Hadoop and HBase components to provide a column-oriented distributed cloud storage system featuring enhanced reliability, great performance, and elastic scalability. It applies to the storage and distributed computing of massive amounts of data. You can use HBase to build a storage system capable of storing TB- or even PB-level data. With HBase, you can filter and analyze data with ease and get responses in milliseconds, rapidly mining data value. | Hadoop, HBase, ZooKeeper, and Ranger |
| ClickHouse cluster | ClickHouse is a columnar database management system for online analysis. It features ultimate compression ratio and fast query performance. It is widely used in Internet advertisement, app and web traffic analysis, telecom, finance, and IoT fields. | ClickHouse and ZooKeeper |
| Real-time analysis cluster | Real-time analysis clusters use Hadoop, Kafka, Flink, and ClickHouse components to provide a system for collection, real-time analysis, and query of data at scale. | Hadoop, Kafka, Flink, ClickHouse, ZooKeeper, and Ranger |

Big data is ubiquitous in our lives. Huawei Cloud MRS is suitable for big data processing in industries such as the Internet of Things (IoT), e-commerce, finance, manufacturing, healthcare, and energy.

The following are some typical service scenarios of big data processing:

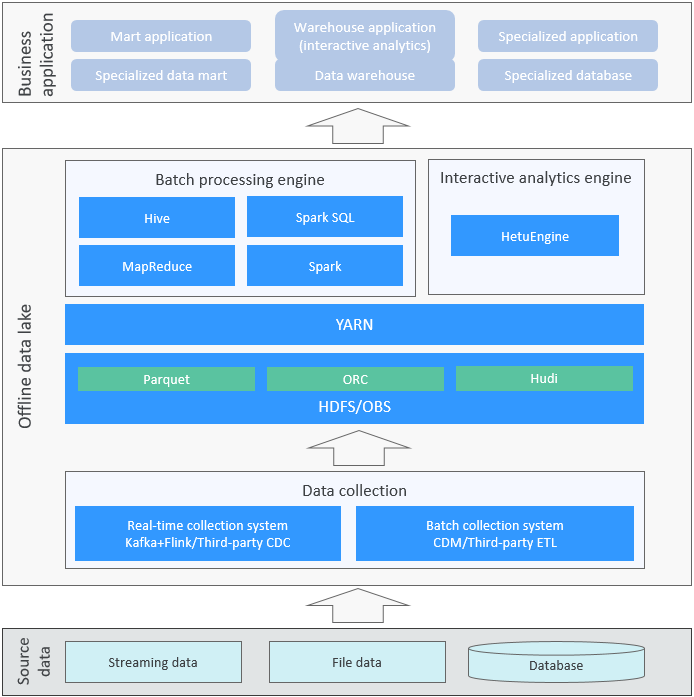

Offline data processing involves the analysis and processing of large volumes of data to generate results for various data applications.

Offline data processing is not time-sensitive, but it handles large data volumes and therefore consumes substantial compute and storage resources. Generally, offline processing is implemented through Hive/Spark SQL or MapReduce/Spark.

- Massive data storage

HDFS supports distributed storage of TB- and PB-level data in clusters. It uses a replication mechanism (three replicas by default) to ensure data reliability and is well-suited for storing logs and unstructured data.

- Batch processing framework

Complex computing tasks can be divided into two phases: "Map" and "Reduce". This framework supports large-scale parallel computing, such as sorting, aggregation, and text analysis.
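
The two phases can be illustrated with a minimal, single-process sketch in plain Python. It is not the Hadoop MapReduce API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample log lines are assumptions made for illustration, and a real cluster would run the map and reduce tasks in parallel across nodes.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key, as a framework does between the phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate all values that share the same key."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over the input lines."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


# Hypothetical log lines used only as sample input.
sample_lines = ["error disk full", "warn disk slow", "error network down"]
counts = word_count(sample_lines)
print(counts)
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` depends only on its own key, both phases can be distributed, which is what makes the model suitable for sorting, aggregation, and text analysis at scale.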

- Source data: includes streaming data, batch file data, and databases.

- Real-time collection system: collects data in real time, for example, using Kafka, Flink, and third-party CDC tools.

- Batch collection system: collects offline data in batches using DataArts Studio-CDM and third-party ETL tools.

- Batch processing engine: implements high-performance execution of offline batch processing jobs.

- Hive: a conventional SQL batch processing engine used to process SQL-like batch processing jobs. Its performance is stable in the case of large amounts of data, but its processing speed is slow.

- MapReduce: a conventional batch processing engine used to process non-SQL batch processing jobs, especially batch processing jobs of data mining and machine learning. It is widely used and has stable performance in the case of massive amounts of data, but its processing speed is slow.

- Spark SQL: a new SQL batch processing engine used to process SQL-like batch processing jobs. It is applicable to scenarios with huge amounts of data, and its processing speed is fast.

- Spark: a new batch processing engine used to process non-SQL batch processing jobs, especially batch processing jobs of data mining and machine learning. It is applicable to scenarios with massive amounts of data, and its processing speed is fast.

- YARN: resource scheduling engine that provides resource scheduling capabilities for various batch processing engines. This engine is the basis of multi-tenant resource allocation.

- HDFS/OBS: distributed file system that provides data storage for various batch processing engines. It can store data in various file formats, such as Parquet, ORC, and Hudi.

- Interactive analytics engine: implements interactive queries using HetuEngine. It is suitable for scenarios with massive amounts of data, medium and high concurrency, and multi-tenant shared queries.

- Service application: queries and uses batch processing results. It is customized and developed by upper-layer services.

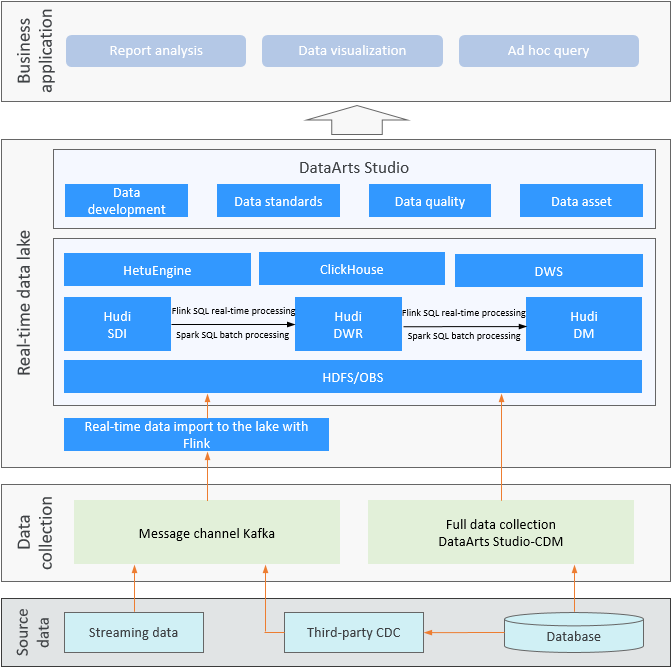

In a real-time data lake, the traditional T+1 data processing mode of offline big data processing (also called offline data lake) is enhanced to achieve minute-level data processing.

The core of a real-time data lake is the data lake. You can implement end-to-end data processing, including real-time data ingestion to a data lake, converged batch and stream data processing within a data lake, and real-time data queries.

- Supports data update and automatic merge.

- Supports streaming processing and reads new data in real time.

- Supports read and write of incremental data. The processing of new data does not require full table scan.
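
The update and incremental-read capabilities listed above can be sketched with a toy in-memory table. This is not the Hudi API: `ToyLakeTable`, `upsert`, and `read_incremental` are hypothetical names, and the commit log is a deliberately simplified stand-in for a real table timeline.

```python
class ToyLakeTable:
    """In-memory stand-in for a lake table with upserts and a commit log."""

    def __init__(self):
        self.rows = {}     # record key -> latest payload
        self.commits = []  # commits[i] lists the keys written by commit i + 1

    def upsert(self, records):
        """Write one batch as a commit; existing keys are updated in place."""
        self.rows.update(records)
        self.commits.append(list(records))
        return len(self.commits)  # commit number, starting at 1

    def read_incremental(self, since_commit):
        """Return rows changed after since_commit by walking only newer commits."""
        changed = {}
        for keys in self.commits[since_commit:]:
            for key in keys:
                changed[key] = self.rows[key]
        return changed


table = ToyLakeTable()
first = table.upsert({"order-1": {"status": "created"},
                      "order-2": {"status": "created"}})
table.upsert({"order-1": {"status": "paid"}})          # update an existing key
latest = table.upsert({"order-3": {"status": "created"}})

# A downstream job that already processed the first commit reads only the changes.
changes = table.read_incremental(first)
print(changes)
```

The downstream reader touches only the keys recorded in commits after its checkpoint, so `order-2`, which has not changed, is never visited.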

- Source data: TP databases or streaming message data that can be written to Kafka.

- Data collection: synchronizes data or imports data to the data lake in real time.

- Kafka: real-time message pipeline used to receive real-time streaming data or changed TP data collected by third parties.

- DataArts Studio-CDM: migrates all historical data. After all historical data is migrated, CDL is used to synchronize incremental data in real time.

- Real-time data lake: implements high-performance, real-time job processing.

- Hudi: a data lake storage format that provides the ability to update and delete data and consume new data on top of HDFS.

- Flink: real-time stream processing engine that can implement converged batch and stream processing based on the real-time incremental query capabilities of Hudi.

- Spark SQL: batch processing engine that implements Hudi-based batch processing. You can select this engine if real-time service processing is not required.

- HDFS/OBS: distributed file system, which provides data storage for various batch processing engines and can store data in various formats.

- Data Lake Insight (DLI): used for efficient access to processed data in the lake.

- HetuEngine: provides efficient interactive analysis of data within the lake.

- ClickHouse: MPP database, which is used to store and query data in the mart and provides efficient aggregation analysis for large wide tables.

- DWS: MPP database, which provides traditional data warehouse capabilities.

- DataArts Studio: a tool set for big data development.

It provides a UI for visualized data development, data standards design, data quality job design, and data asset display and search, significantly improving job development efficiency on the data lake.

- Business application: queries and uses data in the real-time data lake. It is customized and developed by upper-layer services.

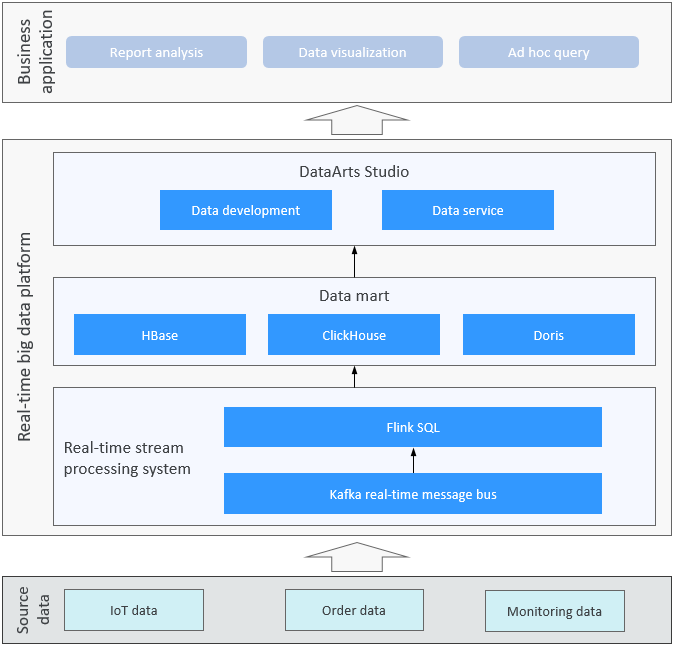

In big data scenarios, real-time stream processing is as widely used as batch processing. Compared with batch processing, it delivers results much sooner, which lets services respond to new data more quickly.

A real-time stream processing system must offer low latency, good scalability, and high availability. It can reduce the data processing latency of traditional batch processing services from days or hours to minutes or even seconds.

- Data is collected in real time and sent to the processing system as streams.

- The stream processing engine can continuously process received data in real time.

- The stream processing engine continuously generates calculation results based on received data.

- The system can interconnect with multiple data storage systems and deliver processing results to the data destination.

- The data storage system allows continuous data write and low-latency data query in real time.
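
The continuous "receive, process, emit" behavior described above can be sketched with a Python generator that counts events in fixed (tumbling) time windows. This is an illustration only, not Flink or Kafka code: `tumbling_window_counts` and the sample events are assumptions, and the sketch assumes events arrive in timestamp order.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) each time a window closes.

    Assumes events arrive ordered by timestamp. Real engines also handle
    late and out-of-order data, which this sketch ignores.
    """
    current_start = None
    counts = Counter()
    for timestamp, key in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_start is None:
            current_start = window_start
        elif window_start != current_start:
            # The previous window is complete: emit its result downstream.
            yield current_start, dict(counts)
            current_start, counts = window_start, Counter()
        counts[key] += 1
    if current_start is not None:
        # Flush the last open window when the input ends.
        yield current_start, dict(counts)


# Hypothetical (timestamp_in_seconds, event_type) stream.
events = [(0, "click"), (3, "view"), (9, "click"), (12, "click"), (25, "view")]
results = list(tumbling_window_counts(events, window_seconds=10))
for window_start, window_counts in results:
    print(window_start, window_counts)
```

Because the function is a generator, each window's result is produced as soon as the next window begins, rather than after the whole input has been read.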

- Source data: streaming message data written to Kafka, that is, data streams that grow continuously.

- Real-time stream processing system: synchronizes data or imports data to the data lake in real time.

- Kafka: real-time message pipeline, which is used to receive real-time streaming data and serves as the unified data source for real-time stream processing.

- Flink SQL: real-time stream processing. It reads data from Kafka in real time, performs complex real-time computations, and sends the results to downstream systems, achieving millisecond-level real-time stream processing.

- Data mart: enables efficient access to the data processed through real-time stream processing.

- HBase: exact query engine, which provides high-concurrency and low-latency access to massive amounts of data. It can quickly retrieve target data from huge amounts of data based on globally unique indexes.

- ClickHouse: MPP database, which is used to store and query data in the mart and provides efficient aggregation analysis for large wide tables.

- Doris: MPP database, which provides a real-time data warehouse.

- DataArts Studio: a tool set for big data development.

- Data development: provides visualized task development and scheduling capabilities for real-time stream data, and supports real-time processing tasks.

- Data service: allows you to develop and open APIs for data services. Queries in the data mart are defined as data service APIs to provide API-based data service capabilities for upper-layer applications.

- Business application: queries and uses data in the real-time data mart. It is customized and developed by upper-layer services.

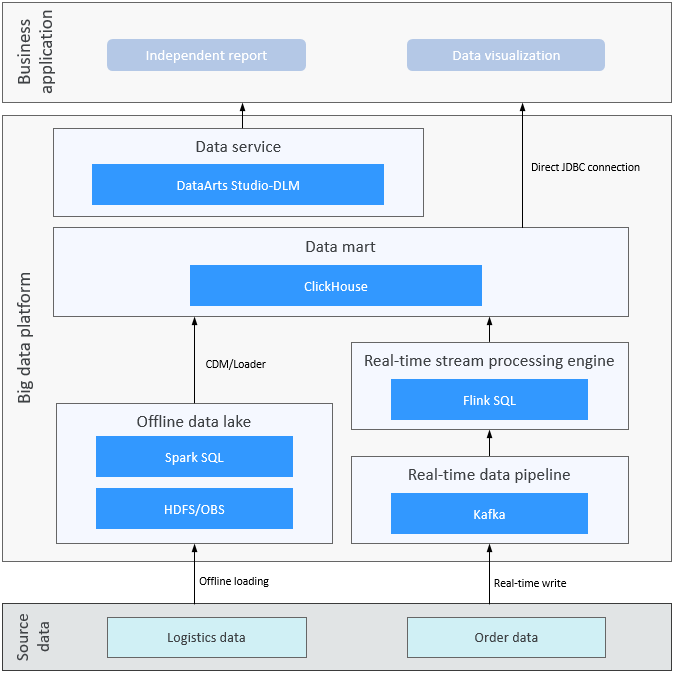

In traditional data mart implementations, real-time data and offline data are typically separated, and metrics are often pre-aggregated for custom development, which can lead to inflexibility, low timeliness, and increased complexity.

The ClickHouse-based real-time data mart solution normalizes offline and real-time data. Multi-dimensional aggregation queries can be performed within seconds on detailed data without pre-aggregation. This solution offers real-time processing, high efficiency, and good flexibility.

ClickHouse is a high-performance, column-oriented database management system (DBMS) for online analytical processing (OLAP). It can process hundreds of millions of rows per second on a single node and scales out in distributed deployments.

- Real-time data write, sub-second aggregation query delay, and high timeliness

- No preprocessing required and data query based on detailed wide tables, which is cost-effective

- Multi-dimensional flexible query with different combinations and full self-service metric query, providing high flexibility
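
The idea of querying a detailed wide table directly, with the dimension combination chosen at query time instead of being pre-aggregated, can be sketched in plain Python. This is not ClickHouse code: the `aggregate` helper, the column names, and the sample rows are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical detail rows of a wide table: one row per transaction.
detail_rows = [
    {"region": "east", "channel": "app", "device": "ios", "amount": 120},
    {"region": "east", "channel": "web", "device": "pc", "amount": 80},
    {"region": "west", "channel": "app", "device": "android", "amount": 50},
    {"region": "east", "channel": "app", "device": "android", "amount": 30},
]


def aggregate(rows, dimensions, measure):
    """Sum a measure grouped by any combination of dimensions chosen at query time."""
    totals = defaultdict(int)
    for row in rows:
        group_key = tuple(row[name] for name in dimensions)
        totals[group_key] += row[measure]
    return dict(totals)


# The same detail data answers different questions without a pre-built summary table.
by_region = aggregate(detail_rows, ["region"], "amount")
by_region_channel = aggregate(detail_rows, ["region", "channel"], "amount")
print(by_region)
print(by_region_channel)
```

A pre-aggregated design would need one summary table per dimension combination. Here any new combination, for example `["channel", "device"]`, is just another call against the same detail rows.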

- Source data: data generated by service systems, which can be offline file data, relational database data, or real-time streaming data.

- Big data platform: core data processing platform for the real-time wide table data mart, which consists of modules such as offline data processing, real-time data processing, and the wide table data mart.

- Real-time message pipeline: receives real-time streaming data and serves as a unified pipeline for real-time data ingestion.

- Real-time stream processing engine: reads data from Kafka in real time, performs complex real-time computations, and sends the results to downstream systems, achieving millisecond-level real-time stream processing.

- Offline data lake: collects and processes offline data from service systems, processes wide tables, uses HDFS or OBS for data storage, and uses Spark SQL as the data processing engine.

- Wide table data mart: stores and queries mart data, and provides efficient aggregation and analysis of large wide tables. ClickHouse is the core component of the wide table data mart.

- Data service: allows you to develop and open APIs for data services. Queries in the data mart are defined as data service APIs to provide API-based data service capabilities for upper-layer applications.

- Direct JDBC connection: ClickHouse provides standard JDBC APIs. When BI tools are interconnected, you can also use the JDBC APIs to connect to ClickHouse for data query.

- Business application: queries and uses data in the real-time data mart. It is customized and developed by upper-layer services.
