Collecting Logs Using LTS

CCI 2.0 works with Log Tank Service (LTS) to collect application logs and report them to LTS so that you can use them for troubleshooting.

Log Collection Reliability

A log system records data from every stage of a service component's lifecycle, including startup, initialization, runtime, exit, and exceptions. It is primarily used in O&M scenarios, for example, to check component status and analyze the causes of faults.

Standard streams (stdout and stderr) and local log files use non-persistent storage, so log data may be lost due to the following risks:

- Log rotation and compression may delete old files.

- Temporary storage volumes are cleared when Kubernetes pods are terminated.

- The OS automatically cleans up files when node storage space runs low.

The Cloud Native Log Collection add-on uses techniques such as multi-level buffering, priority queues, and resumable uploads to improve log collection reliability. However, logs may still be lost in the following situations:

- The service log throughput surpasses the collector's processing capacity.

- The service pod is abruptly terminated and reclaimed by CCE.

- The log collector pod experiences exceptions.

The following are recommended best practices for cloud native log management. Evaluate them against your service requirements before applying them.

- Use dedicated, highly reliable streams to record critical service data (for example, financial transactions) and store the data in persistent storage.

- Avoid storing sensitive information such as customer details, payment credentials, and session tokens in logs.
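In practice, the second practice means masking values before they are written to stdout or a log file, because anything written there may be collected and reported to LTS. The following minimal sketch uses Python's standard logging module. The key names session_token and card_number in SENSITIVE_PATTERNS are hypothetical examples, as are the redact and RedactFilter names. Adapt them to your own log format.

```python
import logging
import re
import sys

# Hypothetical key=value patterns; adapt them to the fields your service logs.
SENSITIVE_PATTERNS = [
    re.compile(r"(session_token=)\S+"),
    re.compile(r"(card_number=)\d+"),
]


def redact(text: str) -> str:
    """Replace the value of each sensitive key=value pair with ***."""
    for pattern in SENSITIVE_PATTERNS:
        text = pattern.sub(r"\1***", text)
    return text


class RedactFilter(logging.Filter):
    """Mask sensitive values before a handler formats and writes the record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = None  # the message is already fully formatted
        return True


if __name__ == "__main__":
    handler = logging.StreamHandler(sys.stdout)  # stdout.log can be collected by LTS
    handler.addFilter(RedactFilter())
    logger = logging.getLogger("app")
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.info("user=42 session_token=%s", "abc123")  # prints: user=42 session_token=***
```

Attaching the filter to the handler masks every record that reaches that handler, including records propagated from child loggers. You do not have to rely on each call site to remember to mask values.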

Constraints

- Logs cannot be collected from directories where system, device, cgroup, or tmpfs file systems are mounted.

- A single-line log that exceeds 250 KB will not be collected.

- Regular expression matching is supported only when a full path including the complete file name is specified.

- Each log file name must be unique within a container. If multiple files have the same name, logs are collected from only one of them.

- Updates to the log collection configuration do not take effect in a pod that is already running. After updating the configuration, restart the pod for the change to take effect.

- A directory to be logged must exist before the container starts. Logs in a directory created after the container starts, including the logs of files in that directory, cannot be collected.

- If a file name exceeds 190 characters, logs of that file cannot be collected.

- Logs of init containers cannot be collected.

- Logs of Kunpeng pods cannot be collected.
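Some of these constraints can be checked before a workload is deployed. The following sketch flags three problems: file names that are too long, duplicate file names within one container, and lines larger than 250 KB. It assumes that 1 KB = 1,024 bytes and that the 190-character limit applies to the file name without its directory. check_paths and oversized_lines are hypothetical helpers, not part of CCI or LTS.

```python
import os
from collections import Counter

MAX_FILE_NAME_CHARS = 190       # longer file names are not collected
MAX_LINE_BYTES = 250 * 1024     # assumption: 250 KB = 250 x 1,024 bytes


def check_paths(paths: list[str]) -> list[str]:
    """Return problems found in the log file paths configured for one container."""
    problems = []
    names = [os.path.basename(p) for p in paths]
    for path, name in zip(paths, names):
        if len(name) > MAX_FILE_NAME_CHARS:
            problems.append(f"{path}: file name longer than {MAX_FILE_NAME_CHARS} characters")
    # Only one of several same-named files in a container is collected.
    for name, count in Counter(names).items():
        if count > 1:
            problems.append(f"{name}: duplicate file name in container ({count} files)")
    return problems


def oversized_lines(lines: list[bytes]) -> list[int]:
    """Return 0-based indexes of lines (newline excluded) above the size limit."""
    return [i for i, line in enumerate(lines) if len(line) > MAX_LINE_BYTES]


if __name__ == "__main__":
    print(check_paths(["/root/out.log", "/data/emptydir-volume/out.log"]))
```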

Step 1 Create a Log Group

- Log in to the management console and choose Management & Deployment > Log Tank Service to go to the LTS console.

- On the Log Management page, click Create Log Group.

- On the displayed page, set log group parameters by referring to Table 1.

Table 1 Log group parameters

Log Group Name

A log group is the basic unit for LTS to manage logs. It is used to classify log streams. If you need to collect a large number of logs, separate them into different log groups by log type and give each log group an easily identifiable name.

LTS automatically generates a default log group name. You are advised to customize it based on your service. You can also change it after the log group is created. The naming rules are as follows:

- Enter 1 to 64 characters, including only letters, digits, hyphens (-), underscores (_), and periods (.). Do not start with a period or underscore or end with a period.

- Each log group name must be unique.

Enterprise Project Name

Enterprise projects allow you to manage cloud resources and users by project.

By default, the default enterprise project is used. You are advised to select an enterprise project that fits your service needs. To see all available options, click View Enterprise Projects.

- You can use enterprise projects only after enabling the enterprise project function. For details, see Enabling the Enterprise Project Function.

- You can move resources from one enterprise project to another. For details, see Removing Resources from an Enterprise Project.

Log Retention (Days)

Specify the log retention period for the log group, that is, the number of days that logs are stored in LTS after they are reported.

By default, logs are retained for 30 days. You can set the retention period to one to 365 days.

LTS periodically deletes logs based on the configured log retention period. For example, if you set the period to 30 days, LTS retains the reported logs for 30 days and then deletes them.

NOTE: The log retention period can be extended to 1,095 days. This feature is available only to whitelisted users. To enable it, submit a service ticket.

- By default, logs are retained for seven days. You can set the retention period to one to seven days. The logs that exceed the retention period will be automatically deleted. You can transfer logs to OBS buckets for long-term storage.

- Raw logs reported to LTS will be deleted in the early morning of the next day after the log retention period expires.

Tag

Tag the log group as required. Click Add and enter a tag key and value. If you enable Apply to Log Stream, the tag will be applied to all log streams in the log group. To add more tags, repeat this step. A maximum of 20 tags can be added.

Tag key restrictions:

- A tag key can contain letters, digits, spaces, and special characters (_.:=+-@), but cannot start or end with a space or start with _sys_.

- A tag key can contain up to 128 characters.

- Each tag key must be unique.

Tag value restrictions:

- A tag value can contain letters, digits, spaces, and the following special characters: _.:=+-@

- A tag value can contain up to 255 characters.

Tag policies:

If your organization has configured tag policies for LTS, follow the policies when adding tags to log groups, log streams, log ingestion configurations, host groups, and alarm rules. Non-compliant tags may cause the creation of these resources to fail. Contact your administrator to learn more about the tag policies. For details about tag policies, see Overview of a Tag Policy. For details about tag management, see Managing Tags.

Deleting a tag:

Click Delete in the Operation column of the tag.

WARNING: Deleted tags cannot be recovered.

If a tag is used by a transfer task, you need to modify the task configuration after deleting the tag.

Remark

Enter remarks. A maximum of 1,024 characters are allowed.

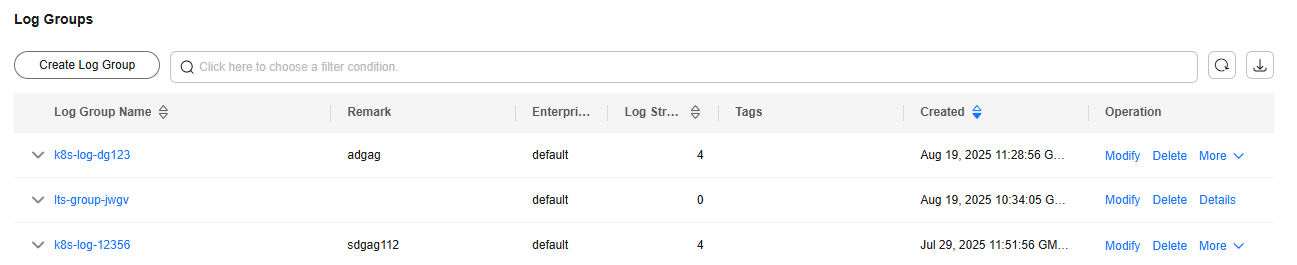

- Click OK. The created log group will be displayed in the log group list.

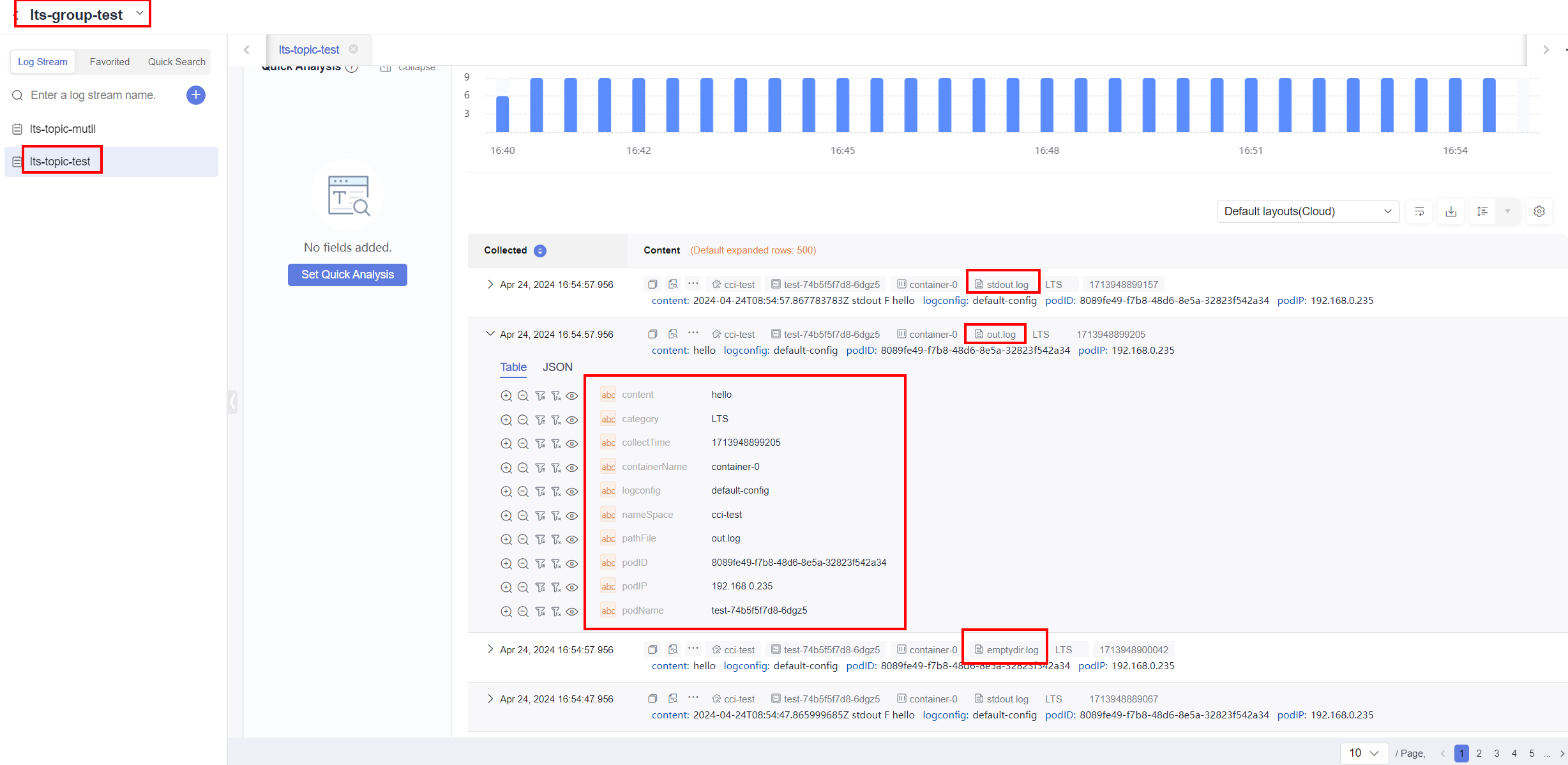

Figure 1 Log group

- In the log group list, view information such as the log group name, tags, and log streams.

- Click the log group name to access the log details page.
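You can check the naming and tag rules in Table 1 in code before filling in the console. A minimal sketch follows. Table 1 does not state three points, so the sketch assumes them: "letters" means ASCII letters, a tag key must be non-empty, and an empty tag value is allowed. The function names are hypothetical.

```python
import string

NAME_CHARS = set(string.ascii_letters + string.digits + "-_.")
TAG_CHARS = set(string.ascii_letters + string.digits + " _.:=+-@")


def is_valid_log_group_name(name: str) -> bool:
    """1 to 64 allowed characters; no leading '.' or '_'; no trailing '.'."""
    return (
        1 <= len(name) <= 64
        and set(name) <= NAME_CHARS
        and name[0] not in "._"
        and not name.endswith(".")
    )


def is_valid_tag_key(key: str) -> bool:
    """Up to 128 allowed characters; no leading/trailing space; no '_sys_' prefix."""
    return (
        1 <= len(key) <= 128
        and set(key) <= TAG_CHARS
        and key == key.strip(" ")
        and not key.startswith("_sys_")
    )


def is_valid_tag_value(value: str) -> bool:
    """Up to 255 allowed characters."""
    return len(value) <= 255 and set(value) <= TAG_CHARS


def validate_tags(tags: dict[str, str]) -> list[str]:
    """Return the problems found; a dict already guarantees unique keys."""
    problems = []
    if len(tags) > 20:
        problems.append("more than 20 tags")
    for key, value in tags.items():
        if not is_valid_tag_key(key):
            problems.append(f"invalid tag key: {key!r}")
        if not is_valid_tag_value(value):
            problems.append(f"invalid tag value for {key!r}: {value!r}")
    return problems


if __name__ == "__main__":
    print(is_valid_log_group_name("app-logs_prod.v1"), validate_tags({"env": "prod"}))
```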

Step 2 Create a Log Stream

- On the LTS console, click the icon on the left of a log group name.

- In the upper left corner of the displayed page, click Create Log Stream and then enter a log stream name. The log stream name:

- Can contain only letters, numbers, underscores (_), hyphens (-), and periods (.). The name cannot start with a period or underscore, or end with a period.

- Can contain 1 to 64 characters.

Collected logs are sent to the created log stream. If there are a large number of logs, you can create multiple log streams and give them descriptive names so that logs can be found quickly.

- Select an enterprise project. You can click View Enterprise Projects to view all enterprise projects.

- If you enable Log Retention Duration on this page, you can set the log retention duration specifically for the log stream. If you disable it, the log stream will inherit the log retention setting of the log group.

- Anonymous write is disabled by default. It is intended for log reporting from Android, iOS, applets, and browsers. If you enable it, the log stream is granted anonymous write permission and no authentication is performed, which may result in dirty data.

- Set the tag in the Key=Value format, for example, a=b.

- Enter remarks. A maximum of 1,024 characters are allowed.

- Click OK. In the log stream list, you can view information such as the log stream name and operations.

- You can view the log stream billing information. For details, see Price Calculator.

- The function of reporting SDRs by log stream is in Friendly User Test (FUT). You can submit a service ticket to enable it.
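The Key=Value tag format and the retention inheritance rule above can be expressed as two small helpers. This is only a sketch: parse_tag and effective_retention_days are hypothetical names, and splitting at the first = is an assumption. The tag key rules in Step 1 allow = inside a key, so a key containing = cannot be expressed unambiguously in this format.

```python
from typing import Optional


def parse_tag(text: str) -> tuple[str, str]:
    """Split a Key=Value tag at the first '=' (assumed parsing rule)."""
    key, sep, value = text.partition("=")
    if not sep or not key:
        raise ValueError(f"expected Key=Value, got {text!r}")
    return key, value


def effective_retention_days(group_days: int, stream_days: Optional[int]) -> int:
    """Return the stream's own retention if enabled, else inherit the group's."""
    # stream_days is None when Log Retention Duration is disabled for the stream.
    return group_days if stream_days is None else stream_days


if __name__ == "__main__":
    print(parse_tag("a=b"))                     # ('a', 'b')
    print(effective_retention_days(30, None))   # 30
```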

Step 3 Obtain the Log Group ID and Log Stream ID

- In the navigation pane, choose Log Management.

- Select the log group created in Step 1 Create a Log Group and click More > Details in the Operation column.

- Copy the log group ID.

- Click the icon on the left of the log group name.

- Select the log stream created in Step 2 Create a Log Stream and click Details in the Operation column.

- Copy the log stream ID.
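The two IDs copied in this step go into the lts-log-info field of the logconfigs.logging.openvessel.io annotation used in Step 4. That annotation value is a JSON string, so generating it with a JSON serializer avoids stray comments and quoting mistakes. A minimal sketch follows, assuming a single configuration entry. build_logconfig is a hypothetical helper, and its parameter names are illustrative.

```python
import json


def build_logconfig(log_group_id: str, log_stream_id: str,
                    container_files: dict[str, list[str]],
                    regulation: str = "",
                    config_name: str = "default-config") -> str:
    """Return a comment-free JSON value for logconfigs.logging.openvessel.io."""
    config = {
        config_name: {
            # Several paths for one container are joined with ';'.
            "container_files": {
                container: ";".join(paths) for container, paths in container_files.items()
            },
            "regulation": regulation,
            # Maps the log group ID to the log stream ID copied in this step.
            "lts-log-info": {log_group_id: log_stream_id},
        }
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(build_logconfig(
        "<log-group-ID>", "<log-stream-ID>",
        {"container-0": ["stdout.log", "/root/out.log", "/data/emptydir-volume/*.log"]},
    ))
```

json.dumps escapes backslashes automatically. If you pass a regular expression as a raw Python string, for example r"\d+", the output contains the doubled \\d form used in the JSON example in Step 4.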

Step 4 Create a Deployment on the CCE Console

- Log in to the CCE console.

- In the navigation pane, choose Workloads. Then click the Deployments tab.

- Click Create from YAML to create a Deployment using YAML or JSON.

- Method 1: Use YAML to create a Deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test
  labels:
    bursting.cci.io/burst-to-cci: enforce
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      annotations:
        logconf.k8s.io/fluent-bit-log-type: lts   # (Mandatory) LTS is used to collect logs.
        logconfigs.logging.openvessel.io: |
          {
            "default-config": {
              # You can set the log collection paths of multiple containers. stdout.log indicates standard output. /root/out.log contains the text logs in rootfs (volumes included). /data/emptydir-xxx/*.log indicates the directories in rootfs (volumes included).
              "container_files": {
                "container-0": "stdout.log;/root/out.log;/data/emptydir-volume/*.log"
              },
              "regulation": "", #Regular expression for matching multi-line logs. For details, see Regular Expression Matching Rules.
              "lts-log-info": { #Configure a log group and a log stream.
                "<log-group-ID>": "<log-stream-ID>" #Replace <log-group-ID> and <log-stream-ID> with those obtained in Step 3.
              }
            }
          }
        resource.cci.io/pod-size-specs: 2.00_8.0
      labels:
        app: test
        sys_enterprise_project_id: "0"
    spec:
      containers:
        - image: busybox:latest
          imagePullPolicy: IfNotPresent
          command: ['sh', '-c', "while true; do echo hello; touch /root/out.log; echo hello >> /root/out.log; touch /data/emptydir-volume/emptydir.log; echo hello >> /data/emptydir-volume/emptydir.log; sleep 10; done"]
          lifecycle: {}
          volumeMounts:
            - name: emptydir-volume
              mountPath: /data/emptydir-volume
            - name: emptydir-memory-volume
              mountPath: /data/emptydir-memory-volume
          name: container-0
          resources:
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 100m
              memory: 100Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: Default
      volumes:
        - name: emptydir-volume
          emptyDir: {}
        - name: emptydir-memory-volume
          emptyDir:
            sizeLimit: 1Gi
            medium: Memory
```

Table 2 Key parameters

| Parameter | Mandatory | Type | Description |
|---|---|---|---|
| logconf.k8s.io/fluent-bit-log-type | Yes | String | Description: Log collection mode. Constraints: This parameter is mandatory. Value: lts |
| logconfigs.logging.openvessel.io | Yes | String | Description: Log collection configuration |

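When using YAML, delete the # comments inside the logconfigs.logging.openvessel.io value. That value is a YAML literal block (|), so # is not treated as a YAML comment there and would otherwise become part of the JSON string.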

- Method 2: Use JSON to create a Deployment.

```json
{
  "default-config": {
    // You can set the log collection paths of multiple containers. stdout.log indicates standard output. /root/out.log contains the text logs in rootfs (volumes included). /data/emptydir-xxx/*.log indicates the directories in rootfs (volumes included).
    "container_files": {
      "container-0": "stdout.log;/root/out.log;/data/emptydir-volume/*.log",
      "container-1": "stdout.log"
    },
    // Regular expression matching rule for collecting multi-line logs. For details about regular expression matching rules, see https://docs.fluentbit.io/manual/pipeline/parsers/configuring-parser.
    "regulation": "",
    // Configure a log group and a log stream.
    "lts-log-info": {
      // Replace <log-group-ID> and <log-stream-ID> with those obtained in Step 3.
      "<log-group-ID>": "<log-stream-ID>"
    }
  },
  "multi-config": {
    // You can set the log collection paths of multiple containers. stdout.log indicates standard output. /root/out.log contains the text logs in rootfs (volumes included). /data/emptydir-xxx/*.log indicates the directories in rootfs (volumes included).
    "container_files": {
      "container-0": "stdout.log;/root/out.log;/data/emptydir-memory-volume/*.log"
    },
    // Regular expression matching rule for collecting multi-line logs
    "regulation": "/(?<log>\\d+-\\d+-\\d+ \\d+:\\d+:\\d+.*)/",
    // Configure the same log group and log stream.
    "lts-log-info": {
      // Replace <log-group-ID> and <log-stream-ID> with those obtained in Step 3.
      "<log-group-ID>": "<log-stream-ID>"
    }
  }
}
```

When you create a workload using YAML or JSON, add the log group ID and log stream ID obtained in Step 3 Obtain the Log Group ID and Log Stream ID to the lts-log-info parameter, for example, "lts-log-info": {"log-group-ID": "log-stream-ID"}. As with the YAML example, delete the // comments before use, because JSON does not support comments.

- Replace log-group-ID with the log group ID obtained in Step 3 Obtain the Log Group ID and Log Stream ID.

- Replace log-stream-ID with the log stream ID obtained in Step 3 Obtain the Log Group ID and Log Stream ID.


- Click OK.

- Click the created Deployment to view its status.
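The regulation field in multi-config is a pattern for the first line of each log record. The following minimal sketch shows that grouping, assuming first-line semantics like those of Fluent Bit's multiline parsers. A line that matches starts a new record, and any other line is appended to the previous record. Python's re module does not accept the (?<log>...) named-group syntax used in the annotation, so the pattern below is rewritten with (?P<log>...). group_multiline is a hypothetical helper, not part of the log collection add-on, and the handling of lines that appear before any match is an assumption.

```python
import re

# multi-config "regulation" without the surrounding slashes, with the
# (?<log>...) named group rewritten in Python's (?P<log>...) syntax.
FIRST_LINE = re.compile(r"(?P<log>\d+-\d+-\d+ \d+:\d+:\d+.*)")


def group_multiline(lines: list[str]) -> list[str]:
    """Group raw lines into records, assuming the pattern marks each first line."""
    records: list[str] = []
    for line in lines:
        if FIRST_LINE.search(line) or not records:
            # A timestamped line (or a leading line with no record yet) starts a record.
            records.append(line)
        else:
            # Continuation lines such as stack-trace frames join the current record.
            records[-1] += "\n" + line
    return records


if __name__ == "__main__":
    sample = [
        "2024-05-01 10:00:00 ERROR request failed",
        "Traceback (most recent call last):",
        "  at handler()",
        "2024-05-01 10:00:05 INFO retry succeeded",
    ]
    for record in group_multiline(sample):
        print(repr(record))
```

Without such a pattern (regulation set to ""), each line of a stack trace would typically be reported as a separate log entry. That makes multi-line errors harder to search in LTS.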
