Ingress Overview

In Kubernetes, managing service access is key to efficient and secure traffic flow within and into a cluster. Kubernetes Services forward traffic over TCP and UDP (Layer 4) and are mainly used for service discovery and load balancing within a cluster. However, Services do not process HTTP or HTTPS at the application layer, so they cannot apply complex routing rules, domain name-based access control, or TLS termination. To address this, Kubernetes provides Ingresses, another way to access Services, which support HTTP and HTTPS. An Ingress is an independent resource in a Kubernetes cluster that lets you define custom forwarding rules based on domain names and paths to route traffic in a fine-grained manner.

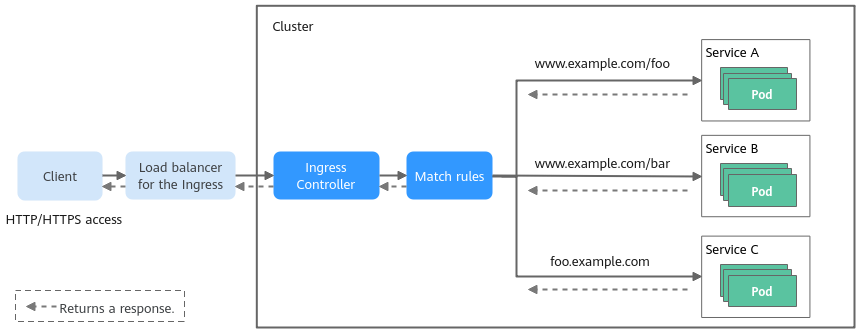

In Kubernetes, an Ingress is a collection of rules and does not directly process traffic. It depends on the Ingress Controller to forward traffic.

- Ingress object: a set of access rules that forward requests to specified Services based on domain names or paths. Ingress objects can be created, deleted, modified, and queried through APIs.

- Ingress Controller: the component that actually forwards requests. It watches resource objects such as Ingresses, Services, Endpoints, Secrets (mainly TLS certificates and keys), Nodes, and ConfigMaps for changes in real time, parses the rules defined in Ingresses, and forwards requests to the target backend Services.
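Because an Ingress object is only data, the first thing a controller does is flatten its rules into a routing table. The sketch below assumes an Ingress that follows the networking.k8s.io/v1 schema (`spec.rules[].host`, `http.paths[].path`, `pathType`, `backend.service.name`, `backend.service.port.number`); the host, Service names, and ports are hypothetical, and only numeric Service ports are handled.

```python
# Minimal sketch: parse an Ingress object (as a client would return it) into
# flat routes. Field names follow networking.k8s.io/v1; the host, Service
# names, and ports below are hypothetical examples.
from typing import NamedTuple


class Route(NamedTuple):
    host: str
    path: str
    path_type: str  # "Prefix", "Exact", or "ImplementationSpecific"
    service: str
    port: int


ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "demo-ingress", "namespace": "default"},
    "spec": {
        "rules": [
            {
                "host": "shop.example.com",
                "http": {
                    "paths": [
                        {"path": "/api", "pathType": "Prefix",
                         "backend": {"service": {"name": "api-svc", "port": {"number": 8080}}}},
                        {"path": "/", "pathType": "Prefix",
                         "backend": {"service": {"name": "web-svc", "port": {"number": 80}}}},
                    ]
                },
            }
        ]
    },
}


def parse_rules(obj: dict) -> list[Route]:
    """Flatten spec.rules[].http.paths[] into (host, path, Service, port) routes."""
    routes = []
    for rule in obj["spec"].get("rules", []):
        host = rule.get("host", "")  # an empty host matches any Host header
        for p in rule.get("http", {}).get("paths", []):
            svc = p["backend"]["service"]
            # Assumption: the Service port is given by number, not by name.
            routes.append(Route(host, p.get("path", "/"), p["pathType"],
                                svc["name"], svc["port"]["number"]))
    return routes


for route in parse_rules(ingress):
    print(route)
```

A real controller would run this parsing every time its watch reports a change to an Ingress, rather than once at startup.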

Workflow

Ingresses can route external HTTP or HTTPS traffic to Services in the cluster. The implementation process is described as follows:

- An external client initiates an HTTP or HTTPS request.

- The request reaches the load balancer managed by the Ingress, and the load balancer forwards the request to the Ingress Controller in the cluster.

- After receiving the request, the Ingress Controller matches it against the rules defined in the Ingress object based on the request's domain name and path, and forwards it to the Service specified by the matched rule. The Service selects its backend pods using its label selector.

- The Service forwards the request to the pod based on the load balancing algorithm (such as round robin).

- The pod processes the request and returns a response. The response passes through the Service, Ingress Controller, and load balancer and is finally returned to the client.
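The matching and load-balancing steps above can be sketched as plain functions. This is an illustration under stated assumptions, not how any controller is implemented: hosts are matched exactly, `Prefix` and `Exact` path types are supported with the longest path winning, and a Service spreads requests over its pods by round robin. All hosts, Services, labels, and pod IPs are hypothetical.

```python
# Minimal sketch of the request workflow. Assumptions (not from the source):
# exact host matching, "Prefix"/"Exact" path types with the longest path
# winning, and round robin over pod IPs. Names and IPs are hypothetical.
# In a real cluster, kube-proxy (iptables/IPVS) or the Ingress Controller
# itself balances traffic, not code like this.
from itertools import cycle
from typing import NamedTuple, Optional


class Route(NamedTuple):
    host: str
    path: str
    path_type: str  # "Prefix" or "Exact"
    service: str
    port: int


def path_matches(route: Route, path: str) -> bool:
    if route.path_type == "Exact":
        return path == route.path
    # Prefix matching compares whole "/"-separated elements, so "/api"
    # matches "/api" and "/api/v1" but not "/apix".
    want = [s for s in route.path.split("/") if s]
    got = [s for s in path.split("/") if s]
    return got[:len(want)] == want


def match_service(routes: list[Route], host: str, path: str) -> Optional[str]:
    """Pick the Service of the longest matching rule for this host."""
    hits = [r for r in routes if r.host in ("", host) and path_matches(r, path)]
    if not hits:
        return None
    return max(hits, key=lambda r: len(r.path)).service


routes = [
    Route("shop.example.com", "/api", "Prefix", "api-svc", 8080),
    Route("shop.example.com", "/", "Prefix", "web-svc", 80),
]
pods = [
    {"ip": "10.0.0.11", "labels": {"app": "api"}},
    {"ip": "10.0.0.12", "labels": {"app": "api"}},
    {"ip": "10.0.0.21", "labels": {"app": "web"}},
]
selectors = {"api-svc": {"app": "api"}, "web-svc": {"app": "web"}}


def select_pods(service: str) -> list[str]:
    """A Service finds its pods through its label selector."""
    sel = selectors[service]
    return [p["ip"] for p in pods
            if all(p["labels"].get(k) == v for k, v in sel.items())]


# One round-robin iterator per Service.
balancers = {svc: cycle(select_pods(svc)) for svc in selectors}


def handle(host: str, path: str) -> Optional[str]:
    """Return the pod IP that would receive the request, or None if no rule matches."""
    svc = match_service(routes, host, path)
    return next(balancers[svc]) if svc else None
```

Calling `handle("shop.example.com", "/api/orders")` repeatedly alternates between the two `api-svc` pods, while `/index.html` falls through to the `/` rule and reaches the single `web-svc` pod.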

Ingress Controller Types

- LoadBalancer Ingress Controller is deployed on the master nodes (which are fully hosted) and bound to a load balancer provided by ELB in the VPC where the cluster runs. Traffic is forwarded according to the load balancer settings, and all forwarding policies are configured and executed on the load balancer. For details, see How LoadBalancer Ingress Controller Works.

- NGINX Ingress Controller is deployed on the worker nodes (which are fully hosted) and provides external access through container ports. The Nginx component forwards external traffic to Services in the cluster, so forwarding takes place within the cluster. For details, see How NGINX Ingress Controller Works.

| Feature | LoadBalancer Ingress Controller | NGINX Ingress Controller |
|---|---|---|
| O&M | No O&M required | Self-installation, upgrade, and maintenance |
| Performance | One Ingress supports only one load balancer. | Multiple Ingresses can share one load balancer. |
| | Enterprise-grade load balancers are used to provide high performance and high availability. Service forwarding is not affected in upgrade and failure scenarios. | Performance varies depending on the resource configuration of pods. |
| | Dynamic loading is supported. | |
| Component deployment | Master nodes (fully hosted by CCE Autopilot) | Worker nodes (fully hosted by CCE Autopilot). The Nginx component incurs additional costs. |
| Route redirection | Supported | Supported |
| SSL configuration | Supported | Supported |
| Using an Ingress as a proxy for backend services | Supported | Supported. This option can be implemented through the backend-protocol: "HTTPS" annotation. |
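As a rough illustration of the last two table rows for NGINX Ingress Controller, the sketch below builds an Ingress manifest that terminates TLS with a Secret and uses the open-source ingress-nginx annotation `nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"` to talk HTTPS to the backend. The IngressClass name `nginx`, the host, the Service, the port, and the Secret name are all assumptions; check the class name actually used in your cluster.

```python
# Minimal sketch: build an NGINX Ingress manifest with TLS and an HTTPS
# backend, then print it as JSON. The IngressClass "nginx", host, Service,
# port, and Secret names are hypothetical.
import json


def nginx_ingress(name: str, host: str, service: str, port: int, tls_secret: str) -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # ingress-nginx annotation: proxy to the backend pods over HTTPS
                "nginx.ingress.kubernetes.io/backend-protocol": "HTTPS",
            },
        },
        "spec": {
            "ingressClassName": "nginx",  # assumed IngressClass name
            # TLS is terminated at the Ingress with the certificate in this Secret
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


manifest = nginx_ingress("secure-ingress", "secure.example.com", "backend-svc", 443, "secure-tls")
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON manifests, the printed output could be saved to a file and applied with `kubectl apply -f`.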

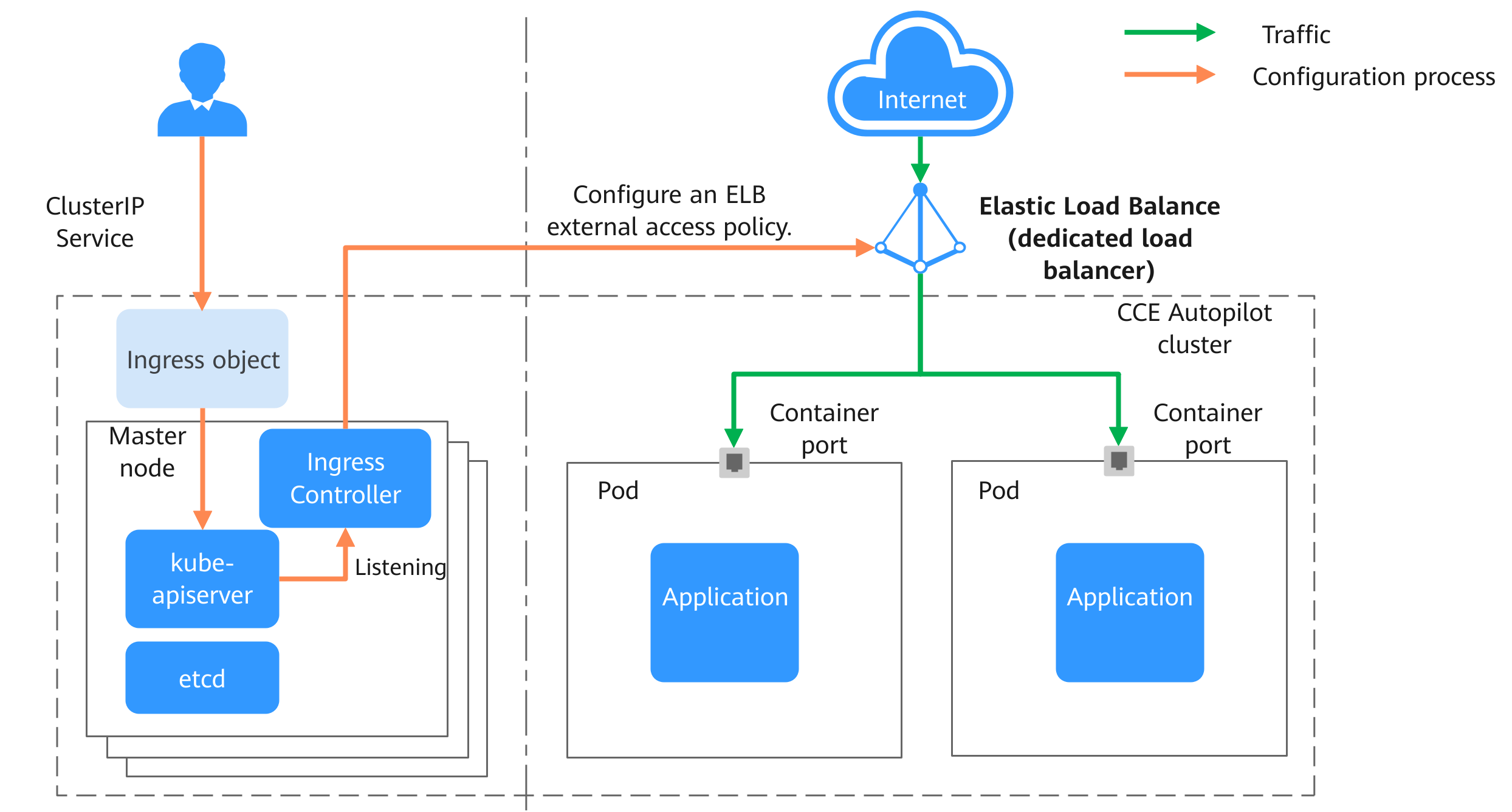

How LoadBalancer Ingress Controller Works

CCE Autopilot clusters can only use dedicated load balancers to connect to pods. When creating an Ingress for external access to the cluster, you can use the load balancer to access a ClusterIP Service and use pods as the backend servers of the load balancer listener. In this way, external traffic can directly access the pods in the cluster over container ports. Figure 2 shows how LoadBalancer Ingress Controller works. The implementation process is described as follows:

- A user creates an Ingress and configures a traffic access rule in the Ingress, including the load balancer, access path, SSL, and backend Service port.

- When LoadBalancer Ingress Controller detects that the Ingress changes, it reconfigures the listener and backend server route on the ELB based on the traffic access rule.

- When a user attempts to access a workload, the load balancer forwards the traffic to the target workload based on the configured forwarding rule.
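The reconciliation step above can be pictured as a diff between what the load balancer currently has and what the Ingress now asks for. The sketch below uses a hypothetical data model, not the ELB API: each forwarding policy maps a (host, path) pair to a backend server group whose members are pod IP and container port pairs, reflecting that pods are attached to the dedicated load balancer directly.

```python
# Hypothetical data model of LoadBalancer Ingress reconciliation; this is NOT
# the ELB API. A forwarding policy maps (host, path) to a backend server group
# whose members are "pod_ip:container_port" strings.
from typing import NamedTuple


class Policy(NamedTuple):
    host: str
    path: str
    backends: frozenset  # {"pod_ip:container_port", ...}


def desired_policies(routes, pods_by_service):
    """routes: iterable of (host, path, service, container_port) tuples."""
    return {
        (host, path): Policy(
            host, path,
            frozenset(f"{ip}:{port}" for ip in pods_by_service.get(svc, [])),
        )
        for host, path, svc, port in routes
    }


def reconcile(current, desired):
    """Diff current vs desired policies into add/update/delete actions."""
    to_add = [desired[k] for k in sorted(desired.keys() - current.keys())]
    to_delete = [current[k] for k in sorted(current.keys() - desired.keys())]
    to_update = [desired[k] for k in sorted(desired.keys() & current.keys())
                 if desired[k] != current[k]]
    return to_add, to_update, to_delete
```

For example, adding an `/api` path and scaling the existing `/` workload from one pod to two yields one policy to add, one backend server group to update, and nothing to delete. Pushing only the diff, rather than rebuilding the listener, is one way a controller could avoid disturbing unchanged routes.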

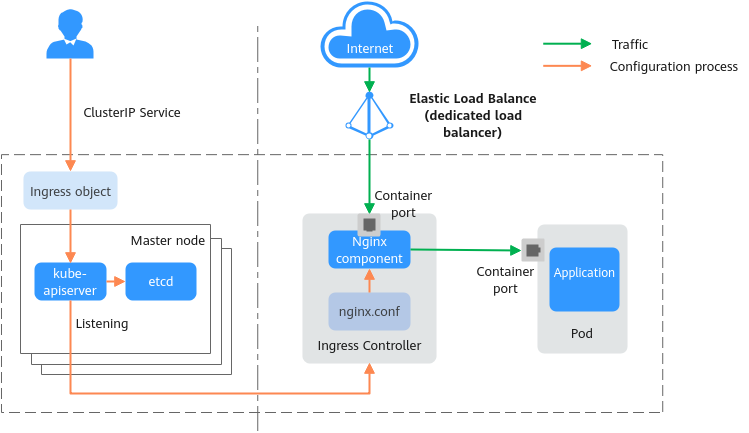

How NGINX Ingress Controller Works

When creating an Nginx Ingress in a CCE Autopilot cluster, you can select a LoadBalancer Service (using a dedicated load balancer) or a ClusterIP Service. Before creating an Nginx Ingress, ensure that NGINX Ingress Controller has been installed in the cluster by following the instructions in NGINX Ingress Controller. Nginx Ingresses use load balancers provided by ELB as the traffic entry point and use NGINX Ingress Controller to implement load balancing and access control.

NGINX Ingress Controller uses the charts and images provided by the open-source community, so issues may occur during use. CCE periodically synchronizes with the community version to fix known vulnerabilities. Before using it, check whether it meets your service requirements.

NGINX Ingress Controller is deployed on the worker nodes (fully hosted by CCE Autopilot), and O&M and the Nginx component incur additional costs. Figure 3 shows how NGINX Ingress Controller works. The implementation process is described as follows:

- After you update Ingress objects, NGINX Ingress Controller writes the forwarding rules defined in them into the nginx.conf configuration file of Nginx.

- The built-in Nginx component reloads the updated configuration file so that the new forwarding rules take effect.

- When the cluster is accessed, traffic is first forwarded by the load balancer to the Nginx component in the cluster, which then forwards it to each workload according to the forwarding rules.
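The write-and-reload cycle above can be approximated as "render rules to text, reload only if the text changed". The sketch is deliberately simplified and its layout is an assumption: the real NGINX Ingress Controller uses a much richer template (upstreams built from pod endpoints, Lua-based balancing, TLS, annotations), whereas here each rule becomes a `location` block that proxies to a Service name and port.

```python
# Simplified sketch of turning Ingress rules into nginx.conf text. The layout
# and the proxy_pass to a Service name are illustrative assumptions, not the
# template that NGINX Ingress Controller actually uses.


def render_nginx_conf(routes):
    """routes: iterable of (host, path, service, port) tuples."""
    by_host = {}
    for host, path, svc, port in routes:
        by_host.setdefault(host, []).append((path, svc, port))
    lines = ["http {"]
    for host, paths in sorted(by_host.items()):
        lines += ["    server {", "        listen 80;", f"        server_name {host};"]
        # nginx picks the longest matching prefix location; sorting only aids reading.
        for path, svc, port in sorted(paths, key=lambda p: len(p[0]), reverse=True):
            lines += [
                f"        location {path} {{",
                f"            proxy_pass http://{svc}:{port};",
                "        }",
            ]
        lines.append("    }")
    lines.append("}")
    return "\n".join(lines)


def needs_reload(old_conf: str, new_conf: str) -> bool:
    """Reload Nginx (for example with `nginx -s reload`) only when the file changed."""
    return old_conf != new_conf
```

Skipping the reload when the rendered text is unchanged matters because each reload restarts Nginx worker processes, which can affect long-lived connections.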
