Continuous Back Pressure and Full GC Occur When Flink Interconnects with HBase

Symptom

If the source of a Flink task is Kafka and the sink is a component whose performance is lower than that of Kafka, such as HBase, the following symptoms may occur:

- Symptom 1: The job is interrupted for a period of time and checkpoints fail repeatedly. An error message similar to the following may be displayed:

Caused by: org.apache.hadoop.ipc.RemoteException: File does not exist: /flink/checkpoints/xxxx does not have any open files.

- Symptom 2: After the job runs for a period of time, a TaskManager fails. The following error message may be displayed:

"org.apache.flink.util.FlinkException: The TaskExecutor is shutting down" or "the remote taskmanager has been lost".

- Symptom 3: Severe back pressure occurs on the first operator of the job.

Possible Causes

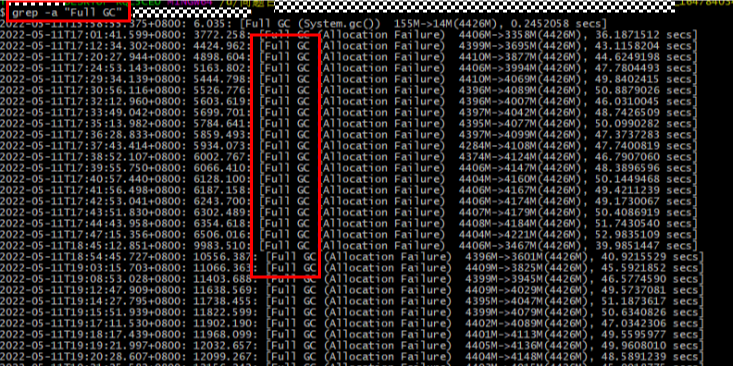

- Check whether a large number of full GC logs are generated.

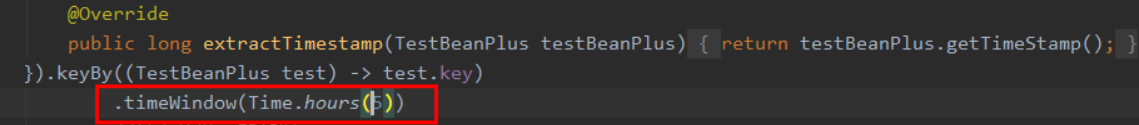

- Check whether the service uses a wide window. For example, if the window width is set to hours, the data buffered for each window may use up the memory.

- Check whether the performance of the sink component is poor.

For example, when data is written to a component with lower write performance, such as HBase, the sink cannot keep up with the source and back pressure builds up across the entire job.
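The wide-window cause can be made concrete with a rough estimate. The sketch below assumes a window that buffers every record until it fires. The input rate, record size, and window length are hypothetical figures chosen only for illustration, and the real state size also depends on serialization and the state backend.

```java
public class WindowBufferEstimate {

    /** Approximate raw bytes held by a window that buffers every record until it fires. */
    static long bufferedBytes(long recordsPerSecond, long bytesPerRecord, long windowSeconds) {
        // multiplyExact throws on overflow instead of silently wrapping around.
        return Math.multiplyExact(
                Math.multiplyExact(recordsPerSecond, bytesPerRecord), windowSeconds);
    }

    public static void main(String[] args) {
        long recordsPerSecond = 10_000; // hypothetical input rate
        long bytesPerRecord = 500;      // hypothetical average record size
        long windowSeconds = 3_600;     // one-hour window

        long bytes = bufferedBytes(recordsPerSecond, bytesPerRecord, windowSeconds);
        System.out.printf("Approx. buffered data per window: %.1f GiB%n",
                bytes / (1024.0 * 1024 * 1024));
    }
}
```

With these assumed figures, a single window holds about 18 GB of raw records, which is likely to exceed the heap of a typical TaskManager and trigger repeated full GC.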

Solution

- Increase the startup memory of the TaskManager.

- Optimize the window function to prevent data from being stacked in the memory.

- Optimize the sink components or increase the sink concurrency to prevent back pressure.
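One common way to keep window data from being stacked in memory is incremental aggregation: each record is folded into a small accumulator when it arrives, so the state grows with the number of keys rather than the number of records. In Flink this is typically done with a `ReduceFunction` or `AggregateFunction` instead of a window function that buffers every element. The plain-Java sketch below only illustrates the idea and has no Flink dependency. The names `IncrementalWindowSketch`, `HourlyAverage`, `add`, `average`, and `stateSize` are hypothetical, and window firing and state cleanup are omitted.

```java
import java.util.HashMap;
import java.util.Map;

public class IncrementalWindowSketch {

    /** Running sum and count: the only state kept per key and window. */
    static final class Accumulator {
        long count;
        double sum;
    }

    /** Averages values per key over one-hour tumbling windows without buffering records. */
    static final class HourlyAverage {
        private static final long WINDOW_MILLIS = 3_600_000L; // one hour

        // Keyed by "key@windowStart": one small accumulator per key and window.
        private final Map<String, Accumulator> state = new HashMap<>();

        private static String slot(String key, long timestampMillis) {
            long windowStart = timestampMillis - Math.floorMod(timestampMillis, WINDOW_MILLIS);
            return key + "@" + windowStart;
        }

        /** Folds one record into its accumulator; the record itself is not retained. */
        void add(String key, long timestampMillis, double value) {
            Accumulator acc = state.computeIfAbsent(slot(key, timestampMillis), k -> new Accumulator());
            acc.count++;
            acc.sum += value;
        }

        /** Returns the average for the window containing the timestamp, or NaN if it is empty. */
        double average(String key, long timestampMillis) {
            Accumulator acc = state.get(slot(key, timestampMillis));
            return acc == null ? Double.NaN : acc.sum / acc.count;
        }

        int stateSize() {
            return state.size();
        }
    }

    public static void main(String[] args) {
        HourlyAverage window = new HourlyAverage();
        for (int i = 0; i < 1_000_000; i++) {
            window.add("sensor-1", i, i % 10); // all timestamps fall within the first hour
        }
        System.out.println("accumulators held: " + window.stateSize());
        System.out.println("average: " + window.average("sensor-1", 0L));
    }
}
```

In this sketch, one million records for a single key leave one accumulator in memory rather than one million buffered records. If the sink is still the bottleneck after the window is optimized, raising the parallelism of the sink operator (for example with `setParallelism` on the sink in the DataStream API) spreads the writes across more subtasks.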
