Interconnecting ClickHouse with HDFS (MRS 3.3.0-LTS and later)

Scenarios

Connect ClickHouse in security mode to HDFS in security mode to read and write files. Functions same as those provided in the open source community are available after ClickHouse in normal mode is connected to HDFS in normal mode.

Notes and Constraints

- This section applies only to MRS 3.3.0-LTS or later.

- ClickHouse and HDFS clusters must be consistently configured in either security mode (Kerberos authentication enabled) or normal mode (Kerberos authentication disabled).

Prerequisites

- The ClickHouse client has been installed in a directory, for example, /opt/client.

- A user, for example, clickhouseuser, who has permissions on ClickHouse tables and has the permission to access HDFS has been created on FusionInsight Manager.

- A corresponding directory exists in HDFS. The HDFS engine of ClickHouse only works with files but does not create or delete directories.

- When ClickHouse accesses HDFS across clusters, a user, for example, hdfsuser, who has the permission to access HDFS has been created on FusionInsight Manager in the cluster where HDFS is located.

- You have obtained the HDFS cluster domain name by logging in to FusionInsight Manager and choosing System > Permission > Domain and Mutual Trust.

- ClickHouse cannot connect to encrypted HDFS directories.

Interconnecting with HDFS in the Local Cluster

- Log in to FusionInsight Manager, choose Cluster > Services > HDFS, click Configurations and then All Configurations, search for hadoop.rpc.protection and change its value to Authentication, save the settings, and restart the HDFS service.

- Choose System > User, select clickhouseuser, and choose More > Download Authentication Credential.

For first-time authentication, change the initial password before downloading the authentication credential file. Otherwise, the security authentication will fail.

- Decompress the downloaded authentication credential package and change the name of file user.keytab to clickhouse_to_hdfs.keytab.

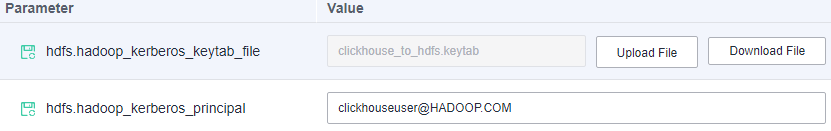

- Log in to FusionInsight Manager of the ClickHouse cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. Choose ClickHouseServer(Role) > Engine. Click Upload File next to hdfs.hadoop_kerberos_keytab_file. Then upload the authentication credential file in 3. Set hdfs.hadoop_kerberos_principal to a value in the format of Username@Domain name, for example, clickhouseuser@HADOOP.COM.

If you are connecting ClickHouse to the HDFS in HA mode, perform the following steps:

If you are connecting ClickHouse to the HDFS in HA mode, perform the following steps:- Log in to FusionInsight Manager, choose Cluster > Services > HDFS > Instances, click any instance name, and click hdfs-site.xml in the Configuration File area of the Dashboard tab.

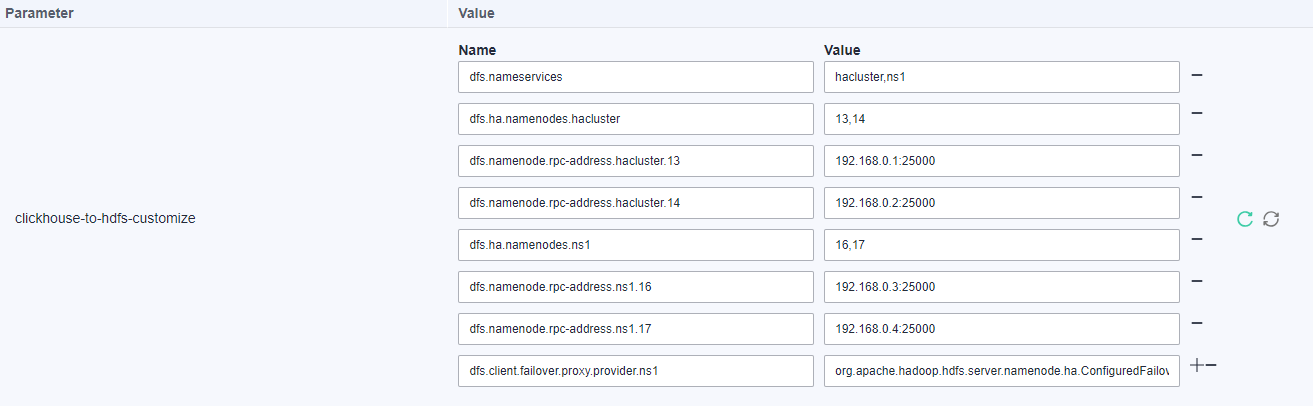

- Obtain the values of required parameters from hdfs-site.xml. For example, if two NameServices are configured for HDFS, one is hacluster and the other is ns1, you need to obtain the values of the following parameters:

dfs.nameservices, dfs.ha.namenodes.*, dfs.namenode.rpc-address.*.* and dfs.client.failover.proxy.provider.* (change its value to org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider)

- Value of dfs.namenode.rpc-address.*.* can be obtained from the HDFS. If the value is a hostname, change it to the service IP address corresponding to the hostname.

- When configuring clickhouse-to-hdfs-customize parameters, use lowercase letters for NameServices (regardless of the original letter case of the NameService). For example, if a NameService of the HDFS cluster is NS1, convert it to ns1 in the clickhouse-to-hdfs-customize file.

The following is an example:

<property> <name>dfs.nameservices</name> <value>hacluster,ns1</value> </property> <property> <name>dfs.namenode.rpc-address.hacluster.13</name> <value>192.168.0.1:25000</value> </property> <property> <name>dfs.namenode.rpc-address.hacluster.14</name> <value>192.168.0.2:25000</value> </property> <property> <name>dfs.ha.namenodes.hacluster</name> <value>13,14</value> </property> <name>dfs.namenode.rpc-address.ns1.16</name> <value>192.168.0.3:25000</value> </property> <property> <name>dfs.namenode.rpc-address.ns1.17</name> <value>192.168.0.4:25000</value> </property> <property> <name>dfs.ha.namenodes.ns1</name> <value>16,17</value> </property> <property> <name>dfs.client.failover.proxy.provider.ns1</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property> <property> <name>dfs.client.failover.proxy.provider.hacluster</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property>

- On FusionInsight Manager, choose Cluster > Services > ClickHouse. On the displayed page, click Configurations and then All Configurations. Choose ClickHouseServer(Role) > Engine, and add the parameter values obtained in 4.b to the custom configuration clickhouse-to-hdfs-customize.

- Save the configuration and restart ClickHouse.

- Log in to the node where the client is installed as the client installation user.

Connect to the ClickHouse server using the cluster client. For details, see ClickHouse Client Practices.

- Run the following command to connect ClickHouse to HDFS:

- Run the following command to connect to the HDFS component in HA mode:

CREATE TABLE default.hdfs_engine_table (`name` String, `value` UInt32) ENGINE = HDFS('hdfs://{nameservice}/tmp/secure_ck.txt', 'TSV')To obtain the value of nameservice, perform the following operations:

On FusionInsight Manager, choose Cluster > Services > HDFS > NameService Management and obtain the value of NameService. The nameservice used in the table creation statement must be in lowercase (regardless of whether the value in the HDFS cluster is in lowercase). For example, if the value in the HDFS cluster is NS1, the value in the table creation statement must be ns1.

- Run the following command to connect to the HDFS component in non-HA mode:

CREATE TABLE default.hdfs_engine_table (`name` String, `value` UInt32) ENGINE = HDFS('hdfs://{namenode_ip}:{dfs.namenode.rpc.port}/tmp/secure_ck.txt', 'TSV')Table 1 Parameter description Parameter

Description

namenode_ip

IP address of the NameNode instance node

On FusionInsight Manager, choose Cluster > Services > HDFS. On the displayed page, click the Instances tab, and obtain the service IP address of the active NameNode.

dfs.namenode.rpc.port

RPC port used by the NameNode to process all client requests.

On FusionInsight Manager, choose Cluster > Services > HDFS. On the displayed page, click Configurations and then All Configurations, and search for dfs.namenode.rpc.port to obtain its value.

hdfs://{namenode_ip}:{dfs.namenode.rpc.port}/tmp/

HDFS file path to be accessed

If multiple files need to be accessed, add an asterisk (*) to the end of the folder, for example, hdfs://{namenode_ip}:{dfs.namenode.rpc.port}/tmp/*.

- Run the following command to connect to the HDFS component in HA mode:

- Write data. For details, see Writing ClickHouse Data to HDFS.

Interconnecting with HDFS in Another Cluster

- Log in to FusionInsight Manager of the HDFS cluster, choose Cluster > Services > HDFS. On the displayed page, click Configuration and then All Configurations. Search for hadoop.rpc.protection, change its value to Authentication, save the settings, and restart the HDFS service.

- Log in to FusionInsight Manager of the ClickHouse cluster and choose System > Permission > Domain and Mutual Trust. Configure mutual trust or unilateral mutual trust with the HDFS cluster. To configure unilateral mutual trust, configure mutual trust with the HDFS cluster only in the ClickHouse cluster.

- Log in to FusionInsight Manager of the HDFS cluster and choose System > User. On the page that is displayed, select hdfsuser, click More, and select Download Authentication Credential.

For first-time authentication, change the initial password before downloading the authentication credential file. Otherwise, the security authentication will fail.

- Decompress the downloaded authentication credential package and change the name of file user.keytab to clickhouse_to_hdfs.keytab.

- Log in to FusionInsight Manager of the ClickHouse cluster, choose Cluster > Services > ClickHouse. On the displayed page, click Configurations and then All Configurations. Choose ClickHouseServer(Role) > Engine. Click Upload File next to hdfs.hadoop_kerberos_keytab_file to upload the authentication credential file obtained in step 3. Set hdfs.hadoop_kerberos_principal to a value in the format of Username@Domain name, for example, hdfsuser@HDFS_HADOOP.COM.

If you are connecting ClickHouse to the HDFS in HA mode, perform the following steps:

- Log in to FusionInsight Manager of the HDFS cluster, choose Cluster > Services > HDFS > Instances, click any instance name, and click hdfs-site.xml in the Configuration File area of the Dashboard tab.

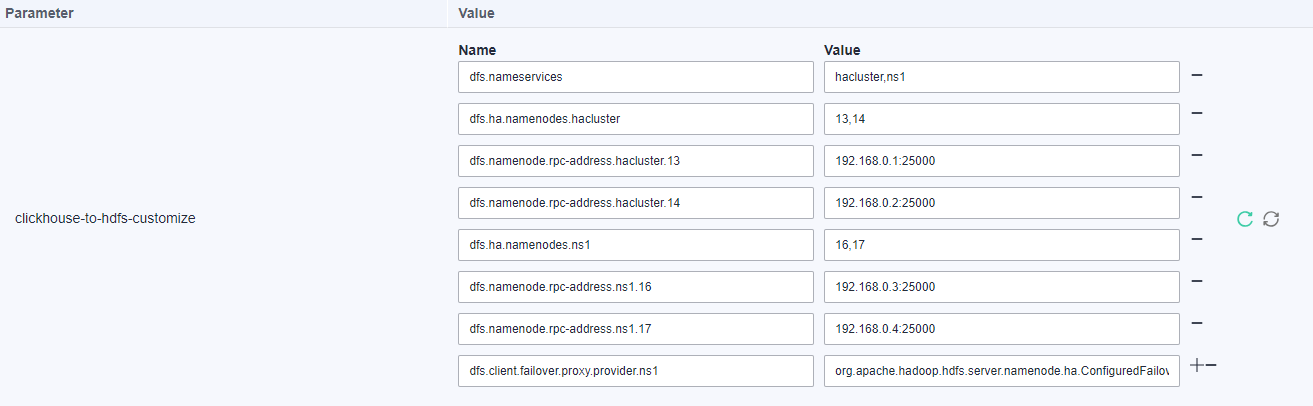

- Obtain the values of the following parameters from hdfs-site.xml. For example, if two NameServices are configured for HDFS, one is hacluster and the other is ns1, you need to obtain the values of the following parameters:

dfs.nameservices, dfs.ha.namenodes.*, dfs.namenode.rpc-address.*.* and dfs.client.failover.proxy.provider.* (change the value to org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider)

- Value of dfs.namenode.rpc-address.*.* can be obtained from the HDFS. If the value is a hostname, change it to the service IP address corresponding to the hostname.

- When configuring clickhouse-to-hdfs-customize parameters, use lowercase letters for NameServices (regardless of the original letter case of the NameService). For example, if a NameService of the HDFS cluster is NS1, convert it to ns1 in the clickhouse-to-hdfs-customize file.

The following is an example:

<property> <name>dfs.nameservices</name> <value>hacluster,ns1</value> </property> <property> <name>dfs.namenode.rpc-address.hacluster.13</name> <value>192.168.0.1:25000</value> </property> <property> <name>dfs.namenode.rpc-address.hacluster.14</name> <value>192.168.0.2:25000</value> </property> <property> <name>dfs.ha.namenodes.hacluster</name> <value>13,14</value> </property> <name>dfs.namenode.rpc-address.ns1.16</name> <value>192.168.0.3:25000</value> </property> <property> <name>dfs.namenode.rpc-address.ns1.17</name> <value>192.168.0.4:25000</value> </property> <property> <name>dfs.ha.namenodes.ns1</name> <value>16,17</value> </property> <property> <name>dfs.client.failover.proxy.provider.ns1</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property> <property> <name>dfs.client.failover.proxy.provider.hacluster</name> <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value> </property>

- On FusionInsight Manager of the ClickHouse cluster, choose Cluster > Services > ClickHouse. On the displayed page, click Configurations and then All Configurations. Choose ClickHouseServer(Role) > Engine, and add the parameter values obtained in step 5.b to the custom configuration clickhouse-to-hdfs-customize.

- Save the configuration and restart ClickHouse.

- Log in to the node where the client is installed as the client installation user.

Connect to the ClickHouse server using the cluster client. For details, see ClickHouse Client Practices.

- Connect to the HDFS component.

- Run the following command to connect to the HDFS component in HA mode:

CREATE TABLE default.hdfs_engine_table (`name` String, `value` UInt32) ENGINE = HDFS('hdfs://{nameservice}/tmp/secure_ck.txt', 'TSV')To obtain the value of nameservice, perform the following operations:

On FusionInsight Manager, choose Cluster > Services > HDFS > NameService Management and obtain the value of NameService. The nameservice used in the table creation statement must be in lowercase (regardless of whether the value in the HDFS cluster is in lowercase). For example, if the value in the HDFS cluster is NS1, the value in the table creation statement must be in lowercase ns1.

- Run the following command to connect to the HDFS component in non-HA mode:

CREATE TABLE default.hdfs_engine_table (`name` String, `value` UInt32) ENGINE = HDFS('hdfs://{namenode_ip}:{dfs.namenode.rpc.port}/tmp/secure_ck.txt', 'TSV')

- Run the following command to connect to the HDFS component in HA mode:

- Write data. For details, see Writing ClickHouse Data to HDFS.

Writing ClickHouse Data to HDFS

To write ClickHouse data to HDFS, perform the following steps:

For example, write data to the secure_ck.txt file in the /tmp directory of HDFS.

- Create an HDFS table.

CREATE TABLE hdfs_engine_table (name String, value UInt32) ENGINE=HDFS('hdfs://{namenode_ip}:{dfs.namenode.rpc.port}/tmp/secure_ck.txt', 'TSV') - Write data to the HDFS data file.

INSERT INTO hdfs_engine_table VALUES ('one', 1), ('two', 2), ('three', 3) - Query the HDFS data file.

SELECT * FROM hdfs_engine_table LIMIT 2

The result is as follows:

┌─name─┬─value─┐ │ one │ 1 │ │ two │ 2 │ └────┴─── ─┘

- If you use ClickHouse to write data in an HDFS engine table and the target data file does not exist, a data file will be automatically generated.

- ClickHouse does not support deletion, modification, and appending of HDFS engine table data.

- After the HDFS engine table is deleted from ClickHouse, data files in the HDFS remain unchanged.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot