Migrating ClickHouse Data from One MRS Cluster to Another

Scenarios

Scenario 1: As the number of MRS ClickHouse services increases, the storage and compute resources of clusters cannot meet service requirements. Thus, the clusters need to be split so that part of user service and database data can be migrated to new clusters.

Scenario 2: The data center where the backend hosts of MRS ClickHouse clusters are located needs to be migrated, so does the ClickHouse cluster data.

To meet the migration requirements, MRS provides a one-click data migration tool for migrating the databases, table objects (DDL), and service data of ClickHouse from a source cluster to the new cluster.

Notes and Constraints

This section applies only to MRS 3.2.0-LTS or later.

Migration Mechanism

- Replicated*MergeTree table migration

In this migration solution, ClickHouse uses ZooKeeper to automatically synchronize the data of Replicated*MergeTree tables of different replicas in the same shard. The logical procedure is as follows:

First, add the ZooKeeper information of the source cluster to the configuration file of the destination cluster as the auxiliary ZooKeeper. Then, in the destination cluster, create a temporary table that has the same ZooKeeper path and structure as the source cluster but different replicas from it. After the temporary table is created, data in the source cluster will be automatically synchronized to the temporary table. Once data synchronization is complete, copy data from the temporary table to the formal table.Figure 1 Replicated*MergeTree table migration architecture

- Distributed table migration

During the migration of a replicated table, its metadata in the source cluster is exported and changed to the ZooKeeper path and replica of the destination cluster. Then, you can create a table in the destination cluster based on the modified metadata.

- Non-replicated table and materialized view migration

To migrate data in the non-replicated tables and materialized views, you can call the remote function.

The preceding migration operations are encapsulated using the migration tool script. In this way, you can modify the related configuration files and run the migration scripts to complete the migration by one click. For details, see the procedure description.

Prerequisites

- The status of the ClickHouse cluster you want to migrate is normal, and the source and destination clusters must be both in security mode or normal mode. If the clusters are in normal mode, contact O&M personnel for migration.

- A destination ClickHouse cluster of MRS 3.1.3 or later has been created for data migration. The cluster must be in security mode. The number of ClickHouseServer instances in the ClickHouse cluster is greater than or equal to that in the source cluster.

- Currently, logical clusters support only data migration to clusters with the same number of replicas.

Constraints

- Only table data and table object metadata (DDL) can be migrated. SQL statements like ETL need to be manually migrated.

- To ensure data consistency before and after the migration, stop the ClickHouse service of the source cluster before the migration. For details, see the procedure description.

- If the table of the source cluster is deleted during the migration, this issue can only be solved manually.

Procedure

|

Step |

Description |

|---|---|

|

Step 1: Connect the source cluster to the destination cluster. |

This step ensures that the source and target ClickHouse clusters as well as their nodes can communicate with each other. |

|

By doing so, ZooKeeper in the source cluster can be used as the auxiliary ZooKeeper during data migration. |

|

|

You can run the corresponding script to migrate metadata such as the database name, table name, and table structure of the ClickHouse database and tables in the source cluster to the destination cluster. |

|

|

You can run the corresponding script to migrate the ClickHouse database and table data from the source cluster to the destination cluster. |

Connecting the Source Cluster to the Destination Cluster

- Connect the source cluster to the destination cluster so that the ClickHouse instance nodes in the two clusters can communicate with each other.

- Add the hosts information of the source cluster to the configuration of all nodes in the destination cluster, and add the hosts information of the destination cluster to the configuration of all nodes in the source cluster.

- Log in to FusionInsight Manager of the source ClickHouse cluster, choose Cluster > ClickHouse, click the Instance tab, and view the service IP address of the ClickHouseServer instance node.

- Log in to any ClickHouseServer node using SSH and run the following command to check the hosts configurations of the ClickHouse instance in the source cluster:

The following figure shows the host configurations of the ClickHouse instance:

- Log in to FusionInsight Manager of the destination ClickHouse cluster, choose Cluster > ClickHouse, click the Instance tab, and view the service IP address of the ClickHouseServer instance node in the destination cluster.

- Log in to all ClickHouse nodes in the destination cluster as user root and run the following command to modify the /etc/hosts configuration of the nodes:

vi /etc/hosts

Copy the host information of the ClickHouse instance of the source cluster obtained in 2.b to the hosts file.

- Repeat 2.a to 2.d to add the node IP addresses of the destination cluster to the hosts files of the source cluster.

- Configure system mutual trust between the source cluster and the destination cluster. For details, see Configuring Cross-Manager Mutual Trust Between Clusters.

If both the source and destination clusters are in normal mode, you do not need to configure mutual trust.

Adding the ZooKeeper Information of the Source Cluster to the Configuration File of the Destination Cluster

- Log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ZooKeeper, and click the Instance tab. On the displayed page, view the service IP addresses of the ZooKeeper quorumpeer instance, as shown in Figure 3.

- Log in to FusionInsight Manager of the destination cluster, choose Cluster > Services > ClickHouse and click Configurations and then All Configurations. On the displayed page, search for the clickhouse-config-customize parameter.

- Add ZooKeeper instance information of the source cluster to the clickhouse-config-customize parameter by referring to Table 2.

Table 2 Configurations of clickhouse-config-customize Parameter

Value

auxiliary_zookeepers.zookeeper2.node[1].host

Service IP address of the first ZooKeeper quorumpeer instance in the source cluster obtained in 1. Currently, only the IP address of the ZooKeeper instance can be configured.

auxiliary_zookeepers.zookeeper2.node[1].port

2181

auxiliary_zookeepers.zookeeper2.node[2].host

Service IP address of the second ZooKeeper quorumpeer instance in the source cluster obtained in 1. Currently, only the IP address of the ZooKeeper instance can be configured.

auxiliary_zookeepers.zookeeper2.node[2].port

2181

auxiliary_zookeepers.zookeeper2.node[3].host

Service IP address of the third ZooKeeper quorumpeer instance in the source cluster obtained in 1. Currently, only the IP address of the ZooKeeper instance can be configured.

auxiliary_zookeepers.zookeeper2.node[3].port

2181

- After the configuration is complete, click Save. In the displayed dialog box, click OK.

- Log in to any ClickHouseServer node of the destination cluster as user root. Run the following command to view the ClickHouseServer instance information:

ps -ef |grep clickhouse

Obtain the value of --config-file, that is, the configuration file directory of the ClickHouseServer, from the query result.Figure 4 Obtaining the configuration file directory of ClickHouseServer

- Run the corresponding command to check whether the information about <auxiliary_zookeepers> is added in the ClickHouse configuration file config.xml.

cat Directory of the config.xml file obtained in 5

- If both the source and destination clusters are in security mode, go to 7.

- If both the source and destination clusters are in normal mode, go to 9.

Figure 5 Viewing the added ZooKeeper information of the source cluster

- In the configuration file directory obtained in 5, run the following command to obtain the ZooKeeper authentication information of the source cluster:

cat ENV_VARS | grep ZK

Obtain the values of ZK_SERVER_FQDN, ZK_USER_PRINCIPAL and ZK_USER_REALM.

- Log in to FusionInsight Manager of the destination cluster, choose Cluster > Services > ClickHouse, click Configurations and then All Configurations. In the navigation pane on the left, choose ClickHouseServer(Role) > Backup and set the parameters by referring to the following table.

Table 3 Configuring the cluster authentication information Parameter

Value

AUXILIARY_ZK_SERVER_FQDN

Value of ZK_SERVER_FQDN obtained in 7

AUXILIARY_ZK_SERVER_PRINCIPAL

Value of ZK_USER_PRINCIPAL obtained in 7

AUXILIARY_ZK_SERVER_REALM

Value of ZK_USER_REALM obtained in 7

METADATA_COLLECTION_TIMEOUT

180.

This parameter specifies the timeout interval for waiting for the completion of metadata backup on other nodes, in seconds.

Figure 6 Configuring the cluster authentication information

- Select Storage under ClickHouseServer(Role), and change the value of max_partition_size_to_drop and max_table_size_to_drop to 0.

Select Performance under ClickHouseServer(Role), and change the value of max_server_memory_usage_to_ram_ratio to 0.5 and the value of background_fetches_pool_size to 8.

- Click Save. In the dialog box that is displayed, click OK.

- On the ClickHouse service page, click the Instance tab. On this tab page, select the ClickHouseServer instance from the instance list, and choose More > Restart Instance in the Operation column to restart the ClickHouseServer instance.

Migrating Metadata of Databases and Tables in the Source ClickHouse Cluster to the Destination Cluster

- Log in to FusionInsight Manager of the source and destination clusters, and create the username and password required for the migration. The procedure is as follows:

- Log in to Manager and choose System > Permission > Role. On the displayed page, click Create Role.

- Specify Role Name, for example, ckrole. In the Configure Resource Permission area, click the cluster name. On the displayed service list page, click the ClickHouse service.

- Select SUPER_USER_GROUP and click OK.

- Choose System. On the navigation pane on the left, choose Permission > User and click Create.

- Select Human-Machine for User Type and set Password and Confirm Password to the password of the user.

- Username: The username cannot contain hyphens (-). Otherwise, the authentication will fail.

- Password: The password cannot contain special characters $, ., and #. Otherwise, the authentication will fail.

- In the Role area, click Add . In the displayed dialog box, select the role name in 1.b and click OK to add the role. Then, click OK.

- After the user is created, click the user name in the upper right corner to log out of the system. Log in to FusionInsight Manager as the new user and change its password as prompted.

- Download the ClickHouse client and install it as user omm to the destination cluster.

- Log in to the client node as user omm, go to the Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-metadata-migration directory, and configure migration information. Run the following command to modify the example_config.yaml configuration file by referring to Table 4:

cd Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-metadata-migration

vi example_config.yaml

After the configuration is modified, you must delete all comment with number sign(#) and retain only valid configurations. Otherwise, an error may occur during script migration.

Table 4 Parameters in the example_config.yaml file Configuration Item

Sub-item

Value and Description

source_cluster

host

IP address of any ClickHouseServer node in the source cluster.

cluster_name

Name of the source ClickHouse cluster. You can log in to the ClickHouse client by referring to ClickHouse Client Practices and run the following command to obtain the value. If the source cluster name has not been changed, the default value is default_cluster.

select cluster,shard_num,replica_num,host_name from system.clusters;

https_port

- For security mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for https_port.

- For normal mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for http_port.

zookeeper_root_path

To obtain the value, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for clickhouse.zookeeper.root.path.

system

System parameter. Retain the default value.

databases

Optional.

- If this parameter is specified, data in the specified database of the source ClickHouse cluster is migrated. You can specify multiple databases. The following configuration is for your reference:

databases: - "database" - "database_1"Data in the database and database_1 databases of the source cluster is migrated.

- If this parameter is not specified, table data of all databases in the source ClickHouse cluster is migrated. Leave the databases parameter empty. The following is an example:

databases:

Table information of all databases in the source ClickHouse cluster is migrated.

tables

Optional. The value is in the format of Database name.Table name. The database name must be in the databases parameter list.

- If this parameter is specified, data in specified tables in the source ClickHouse cluster database is migrated. You can configure multiple tables. The following configuration is for your reference:

tables: - "database.table_1" - "database_1.table_2"Data in table_1 of database and table_2 of database_1 of the source cluster is migrated.

- If this parameter is not specified and the databases parameter is specified, all table data in the databases database is migrated. If the databases parameter is not specified, all table data in all databases of the source ClickHouse cluster is migrated. The following configuration is for your reference:

tables:

destination_cluster

host

IP address of any ClickHouseServer node in the destination cluster.

cluster_name

Name of the destination ClickHouse cluster. You can log in to the ClickHouse client by referring to ClickHouse Client Practices and run the following command to obtain the value. If the destination cluster name has not been changed, the default value is default_cluster.

select cluster,shard_num,replica_num,host_name from system.clusters;

user

Username created in 1 for logging in to FusionInsight Manager of the destination ClickHouse cluster.

https_port

- For security mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for https_port.

- For normal mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for http_port.

zookeeper_root_path

To obtain the value, log in to FusionInsight Manager of the destination cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for clickhouse.zookeeper.root.path.

system

System parameter. Retain the default value.

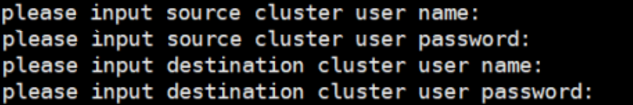

- Run the following command to migrate data and wait until the script execution is complete:

./clickhouse_migrate_metadata.sh -f yaml_file

Enter the usernames and passwords of the source and destination clusters.

If metadata migration fails, perform the following steps:

- Locate the failure cause. Specifically, check whether any parameters in the configuration file are incorrectly configured.

- If yes, reconfigure the parameters and perform metadata migration.

- If no, go to 2.

- Set the names of the tables that fail to be migrated in the metadata migration configuration file based on the databases and tables parameters in Table 4 and run the metadata migration command again. If the migration fails, contact O&M personnel.

Migrating Data of the Databases and Tables in the Source ClickHouse Cluster to the Destination Cluster

- Log in to the ClickHouse client node in the destination cluster as user omm and go to Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-data-migration.

cd Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-data-migration

- Run the following command to modify the example_config.yaml configuration file by referring to Table 5:

vi example_config.yaml

After the configuration is modified, you must delete all comment with number sign(#) and retain only valid configurations. Otherwise, an error may occur during script migration.Table 5 Parameters in example_config.yaml Configuration Item

Sub-item

Value and Description

source_cluster

host

IP address of any ClickHouseServer node in the source cluster.

cluster_name

Name of the source ClickHouse cluster. You can log in to the ClickHouse client by referring to ClickHouse Client Practices and run the following command to obtain the value. If the source cluster name has not been changed, the default value is default_cluster.

select cluster,shard_num,replica_num,host_name from system.clusters;

user

Username created in 1 for logging in to FusionInsight Manager of the source ClickHouse cluster.

https_port

- For security mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for https_port.

- For normal mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for http_port.

tcp_port

To obtain the value, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for tcp_port_secure if the cluster is in security mode. Otherwise, search for tcp_port.

zookeeper_root_path

To obtain the value, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for clickhouse.zookeeper.root.path.

system

System parameter. Retain the default value.

databases

Optional.

- If this parameter is specified, data in the specified database of the source ClickHouse cluster is migrated. You can specify multiple databases. The following configuration is for your reference:

databases: - "database" - "database_1"Data in the database and database_1 databases of the source cluster is migrated.

- If this parameter is not specified, table data of all databases in the source ClickHouse cluster is migrated. Leave the databases parameter empty. The following is an example:

databases:

Table information of all databases in the source ClickHouse cluster is migrated.

tables

Optional. The value is in the format of Database name.Table name. The database name must be in the databases parameter list.

- If this parameter is specified, data in specified tables in the source ClickHouse cluster database is migrated. You can configure multiple tables. The following configuration is for your reference:

tables: - "database.table_1" - "database_1.table_2"Data in table_1 of database and table_2 of database_1 of the source cluster is migrated.

- If this parameter is not specified and the databases parameter is specified, all table data in the databases database is migrated. If the databases parameter is not specified, all table data in all databases of the source ClickHouse cluster is migrated. The following configuration is for your reference:

tables:

destination_cluster

host

IP address of any ClickHouseServer node in the destination cluster.

cluster_name

Name of the destination ClickHouse cluster. You can log in to the ClickHouse client by referring to ClickHouse Client Practices and run the following command to obtain the value. If the destination cluster name has not been changed, the default value is default_cluster.

select cluster,shard_num,replica_num,host_name from system.clusters;

user

Username created in 1 for logging in to FusionInsight Manager of the destination ClickHouse cluster.

https_port

- For security mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for https_port.

- For normal mode, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ClickHouse > Configurations > All Configurations, and search for http_port.

tcp_port

To obtain the value, log in to FusionInsight Manager of the destination cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for tcp_port_secure if the cluster is in security mode. Otherwise, search for tcp_port.

zookeeper_root_path

To obtain the value, log in to FusionInsight Manager of the destination cluster, choose Cluster > Services > ClickHouse, and click Configurations and then All Configurations. In the displayed page, search for clickhouse.zookeeper.root.path.

system

System parameter. Retain the default value.

auxiliary_zookeepers

name

ZooKeeper name of the source ClickHouse cluster configured in 3, for example, zookeeper2.

hosts

IP address of the ZooKeeper instance of the source ClickHouse. To obtain the IP address, log in to FusionInsight Manager of the source cluster, choose Cluster > Services > ZooKeeper, and click the Instances tab. On the displayed page, view the service IP addresses of the ZooKeeper quorumpeer instance.

The format is as follows:hosts: - "192.168.1.2" - "192.168.1.3" - "192.168.1.4"port

2181

execution_procedure

-

This parameter is left blank by default, indicating that the script is executed once to synchronize service data. Value options are firststep and secondstep.

- firststep: Only the temporary replication table is created. The auxiliary ZooKeeper can synchronize data from the original cluster to the temporary table in real time.

- secondstep: data in the temporary replication table is attached to the local table of the destination cluster.

CAUTION:

If this parameter is set to secondstep, O&M personnel and users need to confirm that ClickHouse-related services have been stopped before script execution.

onereplica_use_auxiliaryzookeeper

-

- If this parameter is set to 1, temporary tables are created for only one replica of each shard.

- If this parameter is set to 0, temporary tables are created for two replicas of each shard.

migrate_timeout

-

ClickHouse data migration timeout. If the migration duration exceeds the specified value, the migration is considered complete and the temporary table clearing starts. The default value is 1440, in minutes. Set this parameter based on the data volume.

NOTE:This parameter applies to MRS 3.3.0-LTS.1.1.

- Stop the ClickHouse service of the source cluster.

- Run the following command to migrate data and wait until the script execution is complete:

./clickhouse_migrate_data.sh -f yaml_file

Enter the usernames and passwords of the source and destination clusters.

- After the script is executed successfully, perform the following steps to check whether the migrated data in the source cluster is consistent with that in the destination cluster based on the migration result logs:

Log in to the ClickHouse client node in the destination cluster and go to the Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-data-migration/comparison_result directory.

Compare the following result file information to check the data consistency between the source cluster and the destination cluster:

- source_cluster_table_info: statistics of data migrated from the source cluster

- destination_cluster_table_info: statistics of data migrated to the destination cluster

- compare_result_file.txt: data consistency comparison result before and after migration

If the data is inconsistent before and after the migration, clear the data in the table of the destination cluster and migrate the data in the table separately or manually.

In addition, you can log in to the ClickHouse databases of the source and destination clusters to manually check whether the number of table data records and partitions are consistent.

- Log in to FusionInsight Manager of the destination cluster and delete the ZooKeeper information added to clickhouse-config-customize in 2.

Click Save. In the displayed dialog box, click OK.

- After data migration is complete, switch services to the target ClickHouse cluster.

- Go to Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-data-migration and Client installation directory/ClickHouse/clickhouse_migration_tool/clickhouse-metadata-migration on the ClickHouse node in the destination cluster.

vi example_config.yaml

Delete the password from the configuration file to prevent password leakage.

If service data migration fails, perform the following steps:

- Locate the failure cause. Specifically, check whether any parameters in the configuration file are incorrectly configured.

- If yes, reconfigure the parameters and perform service data migration.

- If no, go to 2.

- Run the drop table table_name command to delete the data tables related to the table from the node that fails to be migrated in the destination cluster.

- Run the show create table table_name command to query the table creation statements related to the table in the source cluster and create the table in the destination cluster again.

- Set the names of the tables that fail to be migrated in the service data migration configuration file based on the databases and tables parameters in Table 5 and run the service data migration command again. If the command fails to execute, contact O&M personnel.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot