Using MRS Spark SQL to Access GaussDB(DWS)

Application Scenarios

You can use MRS to quickly build and operate a full-stack cloud-native big data platform on Huawei Cloud. Big data components, such as HDFS, Hive, HBase, and Spark, are available on the platform for analyzing enterprise data at scale.

Spark SQL lets you process structured data using a SQL-like language. With Spark SQL, you can access different databases, extract data from them, process the data, and load it into different data stores.

This practice demonstrates how to use MRS Spark SQL to access GaussDB(DWS) data.

Solution Architecture

Figure 1 describes the application running architecture of Spark.

- An application runs in the cluster as a collection of processes, and Driver coordinates its execution.

- To run an application, Driver connects to the cluster manager (such as Standalone, Mesos, or YARN) to request executor resources and start ExecutorBackend. The cluster manager schedules resources among applications. Meanwhile, Driver schedules DAGs, divides stages, and generates tasks for the application.

- Spark then sends the application code (the code passed to SparkContext, defined in a JAR or Python file) to the executors.

- After all tasks are finished, the user application stops running.

Notes and Constraints

- This practice applies only to MRS 3.x and later.

- To ensure network connectivity, the AZ, VPC, and security group of the GaussDB(DWS) cluster must be the same as those of the MRS cluster.

Prerequisites

- A GaussDB(DWS) cluster has been created. For details, see Creating a GaussDB(DWS) Cluster.

- You have obtained the IP address, port number, database name, username, and password for connecting to the GaussDB(DWS) database. The user must have read and write permissions on the GaussDB(DWS) tables.
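The connection details listed above are later combined into a JDBC URL of the form `jdbc:gaussdb://IP address:port/database name`, as used in the data source table step of this practice. The following is a minimal sketch of how the URL can be assembled. The function name `build_jdbc_url` is hypothetical, and the sample values are the example values from this practice, not values you should reuse.

```shell
#!/bin/sh
# Hypothetical helper (not part of MRS or GaussDB(DWS)):
# assemble the GaussDB(DWS) JDBC URL from its parts.
# $1: IP address, $2: port number, $3: database name
build_jdbc_url() {
  printf 'jdbc:gaussdb://%s:%s/%s\n' "$1" "$2" "$3"
}

# Example values from this practice; replace them with your own.
build_jdbc_url 192.168.0.228 8000 dws_test
```

Assembling the URL in one place makes it easier to spot a wrong port or database name before the URL is used in Spark SQL.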

Creating an MRS Cluster

- Create an MRS cluster.

Create an MRS cluster that contains the Spark component. For details, see Buying a Custom Cluster.

- If Kerberos authentication is enabled for the cluster, log in to FusionInsight Manager, choose System > Permission > User, and add the human-machine user sparkuser to the user groups hadoop (primary) and hive.

Add the ADD JAR permission by referring to Adding a Ranger Access Permission Policy for Spark2x.

If Kerberos authentication is disabled for the MRS cluster, you do not need to add the user.

- Install an MRS cluster client.

Install the MRS cluster client if it has not been installed. For details, see Installing a Client.

Configuring MRS Spark SQL to Access GaussDB(DWS) Tables

- Prepare data and create databases and tables in the GaussDB(DWS) cluster.

- Log in to the GaussDB(DWS) console and click Log In in the Operation column of the cluster.

- Log in to the default database gaussdb of the cluster and run the following command to create the dws_test database:

CREATE DATABASE dws_test;

- Connect to the created database and run the following commands to create the dws_data schema and the dws_order table:

CREATE SCHEMA dws_data;

CREATE TABLE dws_data.dws_order

( order_id VARCHAR,

order_channel VARCHAR,

order_time VARCHAR,

cust_code VARCHAR,

pay_amount DOUBLE PRECISION,

real_pay DOUBLE PRECISION );

- Run the following commands to insert data into the dws_order table:

INSERT INTO dws_data.dws_order VALUES ('202306270001', 'webShop', '2023-06-27 10:00:00', 'CUST1', 1000, 1000);

INSERT INTO dws_data.dws_order VALUES ('202306270002', 'webShop', '2023-06-27 11:00:00', 'CUST2', 5000, 5000);

- Run the following command to query the table and verify that the data has been inserted:

SELECT * FROM dws_data.dws_order;

- Download the JDBC driver of the GaussDB(DWS) database and upload it to the MRS cluster.

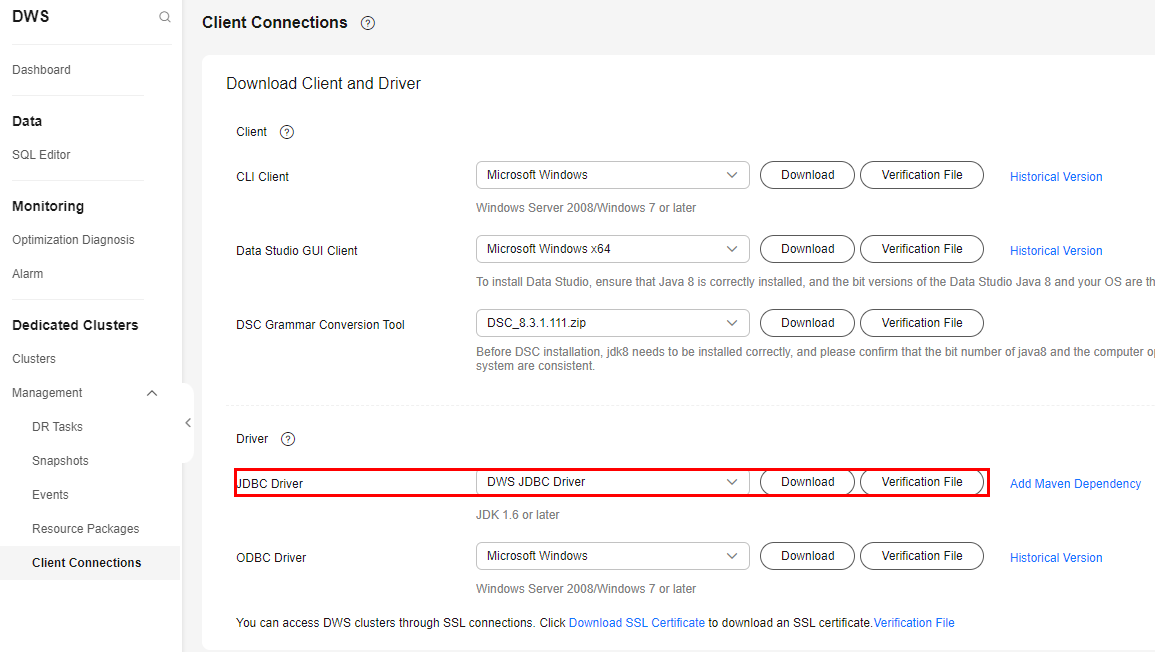

- Log in to the GaussDB(DWS) console, choose Management > Client Connections in the navigation pane on the left, and download the JDBC driver.

Figure 2 Downloading the JDBC driver

- Decompress the package to obtain the gsjdbc200.jar file and upload it to the active Master node of the MRS cluster, for example, to the /tmp directory.

- Log in to the active Master node as user root and run the following commands:

cd Client installation directory

source bigdata_env

kinit sparkuser    # Change the password upon the first authentication. Skip this command if Kerberos authentication is disabled.

hdfs dfs -put /tmp/gsjdbc200.jar /tmp
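The upload commands above differ only in whether `kinit` is needed. The following is a minimal sketch that prints the command sequence for either case so that you can review it before running anything. The function name `print_upload_commands` and the sample directory `/opt/client` are assumptions for illustration; use your actual client installation directory.

```shell
#!/bin/sh
# Hypothetical helper: print the commands for uploading the JDBC driver to HDFS.
# $1: client installation directory (/opt/client below is an assumed example)
# $2: "true" if Kerberos authentication is enabled for the MRS cluster
print_upload_commands() {
  echo "cd $1"
  echo "source bigdata_env"
  # kinit is needed only when Kerberos authentication is enabled.
  if [ "$2" = "true" ]; then
    echo "kinit sparkuser"
  fi
  echo "hdfs dfs -put /tmp/gsjdbc200.jar /tmp"
}

# Dry run: only prints the commands, does not execute them.
print_upload_commands /opt/client true
```

Printing rather than executing keeps the sketch safe to run on any machine, including one without the MRS client installed.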


- Create a data source table in MRS Spark and access the GaussDB(DWS) table.

- Log in to the Spark client node and run the following commands:

cd Client installation directory

source ./bigdata_env

kinit sparkuser

spark-sql --master yarn

- Run the following command to add the driver JAR package:

add jar hdfs://hacluster/tmp/gsjdbc200.jar;

- Run the following commands to create a data source table in Spark and access GaussDB(DWS) data:

CREATE TABLE IF NOT EXISTS spk_dws_order

USING JDBC OPTIONS (

'url'='jdbc:gaussdb://192.168.0.228:8000/dws_test',

'driver'='com.huawei.gauss200.jdbc.Driver',

'dbtable'='dws_data.dws_order',

'user'='dbadmin',

'password'='xxx');

- Run the following command to query the Spark table:

SELECT * FROM spk_dws_order;

Verify that the returned data is the same as the data inserted into the GaussDB(DWS) table in step 1.
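The data source table definition used in this procedure hard-codes the connection details, including the password. The following is a minimal sketch that renders the same CREATE TABLE statement from environment variables instead. The function name `render_spark_ddl` and the variable names `DWS_HOST`, `DWS_PORT`, `DWS_DATABASE`, `DWS_USER`, and `DWS_PASSWORD` are assumptions for illustration; the defaults are the example values from this practice.

```shell
#!/bin/sh
# Hypothetical helper: print the CREATE TABLE statement for the Spark data source table.
# Connection values are read from environment variables (assumed names) and
# fall back to the example values used in this practice.
# Note: values containing a single quote would break the generated SQL.
render_spark_ddl() {
  cat <<EOF
CREATE TABLE IF NOT EXISTS spk_dws_order
USING JDBC OPTIONS (
  'url'='jdbc:gaussdb://${DWS_HOST:-192.168.0.228}:${DWS_PORT:-8000}/${DWS_DATABASE:-dws_test}',
  'driver'='com.huawei.gauss200.jdbc.Driver',
  'dbtable'='dws_data.dws_order',
  'user'='${DWS_USER:-dbadmin}',
  'password'='${DWS_PASSWORD:-xxx}');
EOF
}

# Print the statement so that it can be reviewed or saved to a file.
render_spark_ddl
```

One option is to save the `add jar` statement together with this output to a file and run the file with `spark-sql --master yarn -f`, which keeps the password out of the interactive session history.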
