MaaS Standard API V1

MaaS provides powerful real-time inference. You can call built-in model services directly or deploy models on dedicated instances. This chapter describes the specifications for calling chat APIs.

Constraints

This function is only available in the CN-Hong Kong region.

API Information

| Parameter | Description | Example Value |
|---|---|---|
| API Host | API URL for calling the model service. | https://api.modelarts-maas.com/v1/chat/completions |
| model | model parameter in an API call | You can obtain the model parameter value using either of the following methods: |

Models Supported by Built-in Commercial Services

|

Model |

Version |

Supported Region |

Value of model |

Sequence Length |

Function Call |

|---|---|---|---|---|---|

|

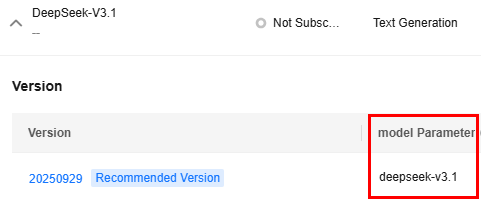

DeepSeek |

DeepSeek-V3.1 |

CN-Hong Kong |

deepseek-v3.1 |

131,072 |

Supported |

|

DeepSeek-R1-64K |

CN-Hong Kong |

DeepSeek-R1 |

65,536 |

Supported |

|

|

DeepSeek-V3-64K |

CN-Hong Kong |

DeepSeek-V3 |

65,536 |

Supported |

|

|

DeepSeek-V3.2-Exp |

CN-Hong Kong |

deepseek-v3.2-exp |

163,840 |

Supported |

|

|

Qwen 3 |

Qwen3-32B-32K |

CN-Hong Kong |

qwen3-32b |

32,768 |

Not supported |
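
The table can be mirrored in client code so that a request is checked against a model's limits before it is sent. The following is a minimal sketch, not part of the MaaS API: ModelInfo, MODELS, and clamp_max_tokens are hypothetical names, the values are copied from the table above, and the rule that max_tokens should not exceed the sequence length is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    sequence_length: int  # "Sequence Length" column
    function_call: bool   # "Function Call" column


# Keys are the "Value of model" column, copied from the table above.
MODELS = {
    "deepseek-v3.1": ModelInfo(131_072, True),
    "DeepSeek-R1": ModelInfo(65_536, True),
    "DeepSeek-V3": ModelInfo(65_536, True),
    "deepseek-v3.2-exp": ModelInfo(163_840, True),
    "qwen3-32b": ModelInfo(32_768, False),
}


def clamp_max_tokens(model: str, requested: int) -> int:
    """Clamp a requested max_tokens to the model's sequence length.

    Assumption: max_tokens should never exceed the sequence length.
    Raises KeyError for a model that is not listed in the table.
    """
    return min(requested, MODELS[model].sequence_length)


print(MODELS["qwen3-32b"].function_call)
print(clamp_max_tokens("DeepSeek-R1", 100_000))
```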

Chain of Thought (CoT)

CoT refers to the model's ability to generate a series of intermediate reasoning steps when solving complex problems. This capability allows the model not only to provide the final answer but also to demonstrate its reasoning process, thereby enhancing the model's explainability and transparency.

Only DeepSeek-V3.1 and DeepSeek-V3.2-Exp support enabling or disabling the CoT.

The constraints for DeepSeek-V3.1 are as follows:

- Function calling is incompatible with the CoT; it is not recommended to use them simultaneously.

- Enabling the CoT does not support prefix continuation.

- The ability to truncate only the content and not the CoT is ineffective.

- After enabling the CoT, guided_choice is unavailable, and reasoning_content is incompatible with guided_decoding.

The constraints for DeepSeek-V3.2-Exp are as follows:

- Function calling is incompatible with the CoT; it is not recommended to use them simultaneously.

- The ability to truncate only the content and not the CoT is ineffective.

- Prefix continuation and guided_choice are not supported.
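
The constraints above can be checked on the client side before a request is sent. The sketch below is illustrative only: cot_warnings is a hypothetical helper, and the tools field name for function calling is an assumption based on OpenAI-compatible APIs, since this page does not name that field. It covers only the constraints that can be detected from the request body.

```python
# Models that support enabling or disabling the CoT, per the section above.
COT_TOGGLE_MODELS = {"deepseek-v3.1", "deepseek-v3.2-exp"}


def cot_warnings(payload: dict) -> list:
    """Return human-readable warnings for a request body (empty if none)."""
    warnings = []
    kwargs = payload.get("chat_template_kwargs") or {}
    thinking = bool(kwargs.get("thinking", False))
    model = payload.get("model", "")

    if "thinking" in kwargs and model not in COT_TOGGLE_MODELS:
        warnings.append(f"{model} does not support enabling or disabling the CoT")
    # Assumption: function calling is requested through a "tools" field.
    if thinking and payload.get("tools"):
        warnings.append("function calling is not recommended together with the CoT")
    if thinking and "guided_choice" in payload:
        warnings.append("guided_choice is unavailable when the CoT is enabled")
    return warnings


request = {
    "model": "deepseek-v3.1",
    "messages": [{"role": "user", "content": "Hello"}],
    "chat_template_kwargs": {"thinking": True},
    "guided_choice": ["yes", "no"],
}
for warning in cot_warnings(request):
    print(warning)
```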

Creating a Chat Request

- Authentication description

MaaS inference services support API key authentication. The authentication header is in the following format:

'Authorization': 'Bearer API key of the region where the service is deployed'

- The request and response parameters are as follows.

Table 2 Request parameters

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| model | Yes | None | Str | Model name for calling. For details about the value, see Table 1. |
| messages | Yes | N/A | Array | Input question. role shows the role, and content shows the dialog content. Example: "messages": [{"role": "system", "content": "You are a helpful AI assistant."}, {"role": "user", "content": "Which number is larger, 9.11 or 9.8?"}]<br>For more information, see Table 3. |
| stream_options | No | None | Object | Specifies whether to display the number of used tokens during streaming output. This parameter is only valid when stream is set to True. Set stream_options to {"include_usage": true} to print the number of tokens used. For more information, see Table 4. |
| max_tokens | No | None | Int | Maximum number of tokens that can be generated for the current task, including tokens generated by the model and reasoning tokens for deep thinking. |
| top_k | No | -1 | Int | Size of the candidate set that is sampled from during generation. For example, setting it to 50 means only the 50 highest-scoring tokens are sampled at each step. A larger size increases randomness; a smaller one makes the output more predictable. |
| top_p | No | 1.0 | Float | Nucleus sampling. It keeps the smallest set of highest-probability tokens whose cumulative probability reaches the threshold p and discards the rest. The probabilities of the kept tokens are then renormalized before sampling.<br>Lower settings reduce word options, making outputs focused and cautious. Higher settings expand word choices, creating varied and creative outputs.<br>Adjust either temperature or top_p separately for best results, not both at once.<br>Value range: 0 to 1. The value 1 indicates that all tokens are considered. |
| temperature | No | 1.0 | Float | Model sampling temperature. The higher the value, the more random the model output; the lower the value, the more deterministic the output.<br>Adjust either temperature or top_p separately for best results, not both at once.<br>Recommended value of temperature: 0.6 for DeepSeek-R1, DeepSeek-V3, and Qwen3 series, and 0.2 for Qwen2.5-VL series. |
| stop | No | None | None/Str/List | A list of strings used to stop generation. The output does not contain the stop strings.<br>For example, if the value is set to ["You", "Good"], text generation will stop once either You or Good is reached. |
| stream | No | False | Bool | Controls whether to enable streaming inference. The default value is False, indicating that streaming inference is disabled. |
| n | No | 1 | Int | Number of responses generated for each input message.<br>- If beam_search is not used, the recommended value range of n is 1 ≤ n ≤ 10. If n is greater than 1, ensure that greedy_sample is not used for sampling, that is, top_k is greater than 1 and temperature is greater than 0.<br>- If beam_search is used, the recommended value range of n is 1 < n ≤ 10. If n is 1, the inference request will fail.<br>NOTE:<br>- For optimal performance, keep n at 10 or below. Large values of n can significantly slow down processing. Insufficient video RAM may cause inference requests to fail.<br>- You cannot set n higher than 1 for DeepSeek-R1 and DeepSeek-V3. |
| use_beam_search | No | False | Bool | Controls whether to use beam_search to replace sampling.<br>When this parameter is used, the following parameters must be configured as required:<br>- n: > 1<br>- top_p: 1.0<br>- top_k: -1<br>- temperature: 0.0<br>NOTE: You cannot set n higher than 1 for DeepSeek-R1 and DeepSeek-V3. |
| presence_penalty | No | 0.0 | Float | Applies rewards or penalties based on the presence of new words in the generated text. The value range is [-2.0, 2.0]. |
| frequency_penalty | No | 0.0 | Float | Applies rewards or penalties based on the frequency of each word in the generated text. The value range is [-2.0, 2.0]. |
| length_penalty | No | 1.0 | Float | Imposes a larger penalty on longer sequences in a beam search process.<br>When this parameter is used, the following parameters must be configured as required:<br>- top_k: -1<br>- use_beam_search: true<br>- best_of: > 1<br>NOTE: You cannot set length_penalty for DeepSeek-R1 and DeepSeek-V3. |
| chat_template_kwargs.thinking | No | false | Bool | Specifies whether to enable the CoT. The CoT is disabled by default. Only DeepSeek-V3.1 and DeepSeek-V3.2-Exp are supported. For details about the constraints, see Chain of Thought (CoT).<br>Example of enabling the CoT: {"model": "deepseek-v3.1", "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "Hello"}], "chat_template_kwargs": {"thinking": true}} |

Table 3 Request parameter messages

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| role | Yes | None | Str | Different roles correspond to different message types.<br>- system: developer-entered instructions like response formats and roles for the model to follow.<br>- user: user-entered messages including prompts and context information.<br>- assistant: responses generated by the model.<br>- tool: information returned by the tool when the model calls it. |
| content | Yes | None | Str | - When role is set to system, this parameter indicates the AI model's personality. Example: {"role": "system", "content": "You are a helpful AI assistant."}<br>- When role is set to user, this parameter indicates the question asked by the user. Example: {"role": "user", "content": "Which number is larger, 9.11 or 9.8?"}<br>- When role is set to assistant, this parameter indicates the content output by the AI model. Example: {"role": "assistant", "content": "9.11 is larger than 9.8."}<br>- When role is set to tool, this parameter indicates the responses returned by the tool when the model calls it. Example: {"role": "tool", "content": "The weather in Shanghai is sunny today. The temperature is 10°C."} |
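
The message layout in Table 3 and several of the parameter constraints in Table 2 can be combined into a small client-side helper. The sketch below is an illustration under stated assumptions, not an official client: build_payload and parameter_errors are hypothetical names, the defaults are copied from Table 2, and the DeepSeek-R1 and DeepSeek-V3 model names are compared case-insensitively because this page uses both spellings.

```python
# Defaults copied from Table 2.
DEFAULTS = {"n": 1, "top_p": 1.0, "top_k": -1, "temperature": 1.0,
            "stream": False, "use_beam_search": False}
# Models for which n cannot be higher than 1 (compared in lowercase).
SINGLE_RESPONSE_MODELS = {"deepseek-r1", "deepseek-v3"}


def build_payload(model, user_text, system_text=None, **params):
    """Assemble a request body using the role/content layout of Table 3."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages, **params}


def parameter_errors(payload):
    """Return violations of the Table 2 constraints (empty list if none)."""
    def get(name):
        return payload.get(name, DEFAULTS[name])

    errors = []
    if "stream_options" in payload and not get("stream"):
        errors.append("stream_options is only valid when stream is true")
    if get("n") > 1 and payload["model"].lower() in SINGLE_RESPONSE_MODELS:
        errors.append("n cannot be higher than 1 for this model")
    if get("use_beam_search"):
        if get("n") <= 1:
            errors.append("use_beam_search requires n > 1")
        for name, required in (("top_p", 1.0), ("top_k", -1), ("temperature", 0.0)):
            if get(name) != required:
                errors.append(f"use_beam_search requires {name} = {required}")
    return errors


payload = build_payload("qwen3-32b", "Hello",
                        system_text="You are a helpful AI assistant.",
                        use_beam_search=True, n=2, temperature=0.0)
print(parameter_errors(payload))
```

Returning a list of messages instead of raising on the first problem lets a caller report every violation at once.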

Table 4 Request parameter stream_options

| Parameter | Mandatory | Default Value | Type | Description |
|---|---|---|---|---|
| include_usage | No | true | Bool | Specifies whether the streaming response outputs token usage information.<br>- true: Each chunk outputs a usage field that shows the total token usage.<br>- false: The token usage is not displayed. |
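
When include_usage is enabled, the usage field can be read from the streamed chunks. The sketch below is a rough illustration that assumes the stream uses the OpenAI-style server-sent events framing, that is, lines of the form data: {json} ending with data: [DONE]. This page does not describe the wire format, so treat that framing, the last_usage helper, and the sample lines as assumptions.

```python
import json
from typing import Iterable, Optional


def last_usage(lines: Iterable[str]) -> Optional[dict]:
    """Return the last non-empty usage object found in a stream of SSE lines."""
    usage = None
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":  # assumed end-of-stream marker
            break
        chunk = json.loads(data)
        if chunk.get("usage"):
            usage = chunk["usage"]
    return usage


# Hand-written sample lines for demonstration; not captured from the service.
SAMPLE = [
    'data: {"choices": [{"index": 0, "delta": {"content": "Hi"}}], "usage": null}',
    "",
    'data: {"choices": [], "usage": {"prompt_tokens": 5, "completion_tokens": 1, "total_tokens": 6}}',
    "data: [DONE]",
]
print(last_usage(SAMPLE))
```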

Table 5 Response parameters

| Parameter | Type | Description |
|---|---|---|
| id | Str | Unique ID of the request. |
| object | Str | Object type. The value chat.completion indicates that a multi-turn dialog response is returned. |
| created | Int | Timestamp. |
| model | Str | Model name for calling. |
| choices | Array | Model output, including the index and message parameters. In message:<br>- content is the model's final reply.<br>- reasoning_content is the model's deep thinking content (for DeepSeek models only). |
| usage | Object | Statistics on tokens consumed by the request:<br>- This parameter is returned by default for non-streaming requests.<br>- This parameter is returned by default for streaming requests. Each chunk outputs a usage field that shows the token usage.<br>Parameters:<br>- prompt_tokens: number of input tokens.<br>- completion_tokens: number of output tokens.<br>- total_tokens: total number of tokens. |
| prompt_logprobs | Float | Log probability. You can use this to measure the model's confidence in its output or to explore other options the model provides. |
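
The fields in Table 5 can be read from a parsed response as follows. This is a minimal sketch: split_reply is a hypothetical helper, and it assumes the response body has already been decoded from JSON into a Python dict. Because reasoning_content is returned for DeepSeek models only, the helper treats it as optional.

```python
from typing import Optional, Tuple


def split_reply(response: dict, index: int = 0) -> Tuple[str, Optional[str]]:
    """Return (content, reasoning_content) for one entry of choices."""
    message = response["choices"][index]["message"]
    content = message.get("content") or ""
    reasoning = message.get("reasoning_content")  # absent for models without CoT output
    return content, reasoning


# Shortened, hand-written response used only to exercise the helper.
example = {
    "object": "chat.completion",
    "choices": [
        {"index": 0,
         "message": {"role": "assistant",
                     "content": "9.8 is larger.",
                     "reasoning_content": "Compare the tenths place: 8 > 1."}}
    ],
}
answer, thoughts = split_reply(example)
print(answer)
print(thoughts)
```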

DeepSeek-V3 Text Generation (Non-Streaming) Request Example

- REST API request example:

- Python request example:

```python
import json

import requests

if __name__ == '__main__':
    url = "https://api.modelarts-maas.com/v1/chat/completions"  # API address
    api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

    # Send a request.
    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {api_key}'
    }
    data = {
        "model": "deepseek-v3",  # Model name
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Hello"}
        ]
    }
    # verify=False skips TLS certificate verification; remove it outside of testing.
    response = requests.post(url, headers=headers, data=json.dumps(data), verify=False)

    # Print result.
    print(response.status_code)
    print(response.text)
```

- cURL request example:

```bash
curl -X POST "https://api.modelarts-maas.com/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $MAAS_API_KEY" \
  -d '{
    "model": "deepseek-v3",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Hello"}
    ]
  }'
```

- OpenAI SDK request example (Python):

```python
from openai import OpenAI

base_url = "https://api.modelarts-maas.com/v1"  # API address
api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

client = OpenAI(api_key=api_key, base_url=base_url)

response = client.chat.completions.create(
    model="deepseek-v3",  # Model name
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Hello"}
    ]
)

print(response.choices[0].message.content)
```

DeepSeek-V3 Text Generation (Streaming) Request Example

- Python request example:

```python
from openai import OpenAI

base_url = "https://api.modelarts-maas.com/v1"  # API address
api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

client = OpenAI(api_key=api_key, base_url=base_url)

response = client.chat.completions.create(
    model="deepseek-v3",  # Model name
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Hello"}
    ],
    stream=True
)

for chunk in response:
    # Skip chunks that carry no choices.
    if not chunk.choices:
        continue
    # delta.content can be None; print an empty string instead of "None".
    print(chunk.choices[0].delta.content or "", end="")
```

- cURL request example:

```bash
curl -X POST "https://api.modelarts-maas.com/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $MAAS_API_KEY" \
  -d '{
    "model": "deepseek-v3",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Hello"}
    ],
    "stream": true,
    "stream_options": { "include_usage": true }
  }'
```
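
To keep the streamed text instead of only printing it, the chunks can be accumulated into one string. The sketch below shows one way to do this under the same assumptions as the Python streaming example: a chunk may have an empty choices list, and delta.content may be None. collect_text and fake_chunk are hypothetical names, and fake_chunk only imitates the attribute layout of an SDK chunk so that the snippet runs without a network call.

```python
from types import SimpleNamespace


def collect_text(chunks) -> str:
    """Join the delta.content pieces of a stream into the full reply."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # chunk without choices, nothing to append
        piece = chunk.choices[0].delta.content
        if piece:  # skips None and empty strings
            parts.append(piece)
    return "".join(parts)


def fake_chunk(content=None, has_choice=True):
    """Build a stand-in object shaped like an SDK chunk (demo only)."""
    if not has_choice:
        return SimpleNamespace(choices=[])
    delta = SimpleNamespace(content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


demo = [fake_chunk("Hel"), fake_chunk(None), fake_chunk("lo"), fake_chunk(has_choice=False)]
print(collect_text(demo))
```

With a real stream, the object returned by client.chat.completions.create(..., stream=True) would be passed to collect_text in place of demo.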

DeepSeek-V3.1 Text Generation (Non-Streaming) Request Example

- REST API request example:

- Python request example:

```python
import json

import requests

if __name__ == '__main__':
    url = "https://api.modelarts-maas.com/v1/chat/completions"  # API address
    api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

    # Send a request.
    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {api_key}'
    }
    data = {
        "model": "deepseek-v3.1",  # Model
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Hello"}
        ],
        "chat_template_kwargs": {
            "thinking": True  # Specifies whether to enable deep thinking. It is disabled by default.
        }
    }
    # verify=False skips TLS certificate verification; remove it outside of testing.
    response = requests.post(url, headers=headers, data=json.dumps(data), verify=False)

    # Print result.
    print(response.status_code)
    print(response.text)
```

- cURL request example:

```bash
curl -X POST "https://api.modelarts-maas.com/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $MAAS_API_KEY" \
  -d '{
    "model": "deepseek-v3.1",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Hello"}
    ],
    "chat_template_kwargs": { "thinking": true }
  }'
```

- OpenAI SDK request example (Python):

```python
from openai import OpenAI

base_url = "https://api.modelarts-maas.com/v1"  # API address
api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

client = OpenAI(api_key=api_key, base_url=base_url)

response = client.chat.completions.create(
    model="deepseek-v3.1",  # Model
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Hello"},
    ],
    # chat_template_kwargs is not a standard SDK argument, so it is passed through extra_body.
    extra_body={
        "chat_template_kwargs": {
            "thinking": True  # Specifies whether to enable deep thinking. It is disabled by default.
        }
    }
)

print(response.choices[0].message.content)
```

Qwen2.5-VL-7B Image Understanding (Non-Streaming) Request Example

- REST API request example:

- Python request example:

```python
import base64
import json

import requests


# Convert the image to the Base64 encoding format.
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


base64_image = encode_image("test.png")

if __name__ == '__main__':
    url = "https://api.modelarts-maas.com/v1/chat/completions"  # API address
    api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.

    # Send a request.
    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {api_key}'
    }
    data = {
        "model": "qwen2.5-vl-7b",  # Model
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Describe the content in the image."},
                    {
                        "type": "image_url",
                        # The image/{format} part of the data URL must match the actual
                        # image format. The f prefix marks a Python f-string.
                        # PNG image: f"data:image/png;base64,{base64_image}"
                        # JPEG image: f"data:image/jpeg;base64,{base64_image}"
                        # WEBP image: f"data:image/webp;base64,{base64_image}"
                        "image_url": {"url": f"data:image/png;base64,{base64_image}"}
                    }
                ]
            }
        ]
    }
    # verify=False skips TLS certificate verification; remove it outside of testing.
    response = requests.post(url, headers=headers, data=json.dumps(data), verify=False)

    # Print result.
    print(response.status_code)
    print(response.text)
```

- cURL request example:

```bash
# BASE64_IMAGE must hold the Base64-encoded image, for example:
#   BASE64_IMAGE=$(base64 -w 0 test.png)   # GNU coreutils
# The variable is spliced in outside the single quotes so that the shell expands it.
curl -X POST "https://api.modelarts-maas.com/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $MAAS_API_KEY" \
  -d '{
    "model": "qwen2.5-vl-7b",
    "messages": [
      {
        "role": "user",
        "content": [
          {"type": "text", "text": "Describe the content in the image."},
          {"type": "image_url", "image_url": {"url": "data:image/png;base64,'"$BASE64_IMAGE"'"}}
        ]
      }
    ]
  }'
```

- OpenAI SDK request example (Python):

```python
import base64

from openai import OpenAI

base_url = "https://api.modelarts-maas.com/v1"  # API address
api_key = "MAAS_API_KEY"  # Replace MAAS_API_KEY with the obtained API key.


# Convert the image to the Base64 encoding format.
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


base64_image = encode_image("test.png")

client = OpenAI(api_key=api_key, base_url=base_url)

response = client.chat.completions.create(
    model="qwen2.5-vl-7b",  # Model
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Describe the content in the image."},
                {
                    "type": "image_url",
                    # The image/{format} part of the data URL must match the actual
                    # image format. The f prefix marks a Python f-string.
                    # PNG image: f"data:image/png;base64,{base64_image}"
                    # JPEG image: f"data:image/jpeg;base64,{base64_image}"
                    # WEBP image: f"data:image/webp;base64,{base64_image}"
                    "image_url": {"url": f"data:image/png;base64,{base64_image}"}
                }
            ]
        }
    ]
)

print(response.choices[0].message.content)
```
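
The format comments in the image examples can be turned into a small helper that picks the image/{format} prefix from the file extension. This is a sketch rather than part of any SDK: MIME_BY_SUFFIX, data_url_from_bytes, and image_data_url are hypothetical names, and the mapping covers only the PNG, JPEG, and WEBP formats mentioned in those comments.

```python
import base64
from pathlib import Path

# Formats taken from the comments in the request examples.
MIME_BY_SUFFIX = {
    ".png": "image/png",
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".webp": "image/webp",
}


def data_url_from_bytes(data: bytes, suffix: str) -> str:
    """Build a data URL for raw image bytes with the given file suffix."""
    mime = MIME_BY_SUFFIX.get(suffix.lower())
    if mime is None:
        raise ValueError(f"unsupported image suffix: {suffix}")
    encoded = base64.b64encode(data).decode("utf-8")
    return f"data:{mime};base64,{encoded}"


def image_data_url(image_path: str) -> str:
    """Read an image file and return it as a data URL."""
    path = Path(image_path)
    return data_url_from_bytes(path.read_bytes(), path.suffix)


# Demonstration with placeholder bytes instead of a real image file.
print(data_url_from_bytes(b"abc", ".png"))
```

The returned string can be used as the url value of an image_url content item.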

Response Example

```json
{
  "id": "chat-71406e38b0d248c9b284709f8435****",
  "object": "chat.completion",
  "created": 1740809549,
  "model": "DeepSeek-R1",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "\n\n Compare 9.11 and 9.8.:\n\n1. **Compare the integer part**: The integer part of both is 9, which is equal.\n2. **Compare the tenths place**:\n - The tenths place of 9.11 is **1**\n - 9.8 can be considered as 9.80, and its tenths place is **8**\n - **8 > 1**, so 9.8 is larger.\n\n**Conclusion**:\n**9.8 > 9.11**\n(When comparing decimals, line up the digits and compare them directly.)",
        "reasoning_content": "Well, I now need to compare 9.11 and 9.8 which is larger. First of all, I have to recall the method of comparing decimals. When comparing decimals, start by comparing the integer parts. If the integer parts are the same, compare the tenths and hundredths of the decimal parts in sequence until the larger number is determined. \n\n The integer parts of the two numbers are both 9, so they are the same. Next, compare the tenths. The tenth digit of 9.11 is 1, while the tenth digit of 9.8 is 8. This can be problematic, as some people might directly treat 9.8 as 9.80, or focus on comparing the digits in the tenths place. \n\n Now, comparing the tenths place, 9.8 has an 8, while 9.11 has a 1. Clearly, 8 is greater than 1. So, should we conclude that 9.8 is greater than 9.11? \n\n However, it is important to note that some people might incorrectly assume that the more decimal places a number has, the larger its value. But this is not true; for instance, 0.9 is greater than 0.8999. Thus, having more decimal places does not necessarily mean a larger value. \n\n Additionally, the decimal parts of the two numbers can be aligned to have the same number of digits for comparison. For instance, 9.8 can be written as 9.80, where the tenths place is 8 and the hundredths place is 0. On the other hand, for 9.11, the tenths place is 1 and the hundredths place is 1. Since 8 in the tenths place is greater than 1, 9.80 (which is 9.8) is greater than 9.11. \n\n Therefore, the final conclusion is that 9.8 is larger than 9.11.\n",
        "tool_calls": []
      },
      "logprobs": null,
      "finish_reason": "stop",
      "stop_reason": null
    }
  ],
  "usage": {
    "prompt_tokens": 21,
    "total_tokens": 437,
    "completion_tokens": 416
  },
  "prompt_logprobs": null
}
```
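
The usage numbers in this response add up as 21 + 416 = 437, that is, prompt_tokens plus completion_tokens equals total_tokens. The sketch below checks that relationship for a usage object. usage_adds_up is a hypothetical helper, and the relationship is an observation from this example rather than a guarantee stated on this page.

```python
def usage_adds_up(usage: dict) -> bool:
    """Check that prompt_tokens + completion_tokens equals total_tokens."""
    return usage["prompt_tokens"] + usage["completion_tokens"] == usage["total_tokens"]


# Values copied from the response example.
usage = {"prompt_tokens": 21, "total_tokens": 437, "completion_tokens": 416}
print(usage_adds_up(usage))
```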
