Scaling Model Service Instances in ModelArts Studio (MaaS)

Demand on LLM inference services can vary widely, so model services need flexible scaling to absorb load changes while maintaining high availability and efficient resource use.

MaaS enables manual scaling of model service instances without disrupting service operation.

Prerequisites

A model has been deployed in MaaS.

Constraints

This function is available only in the CN-Hong Kong region.

The number of instances can only be changed when the model service is in the Running or Alarm state.
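The state constraint can be read as a simple precondition check. The following is a minimal sketch, not a MaaS API: the helper name `can_scale` and the use of plain strings for service states are assumptions made for illustration. Only the Running and Alarm state names come from this page.

```python
# Hypothetical helper for illustration; not part of any MaaS SDK.
# Only "Running" and "Alarm" are taken from the constraint above.
SCALABLE_STATES = {"Running", "Alarm"}


def can_scale(service_state: str) -> bool:
    """Return True if the instance count may be changed in this state."""
    return service_state in SCALABLE_STATES


if __name__ == "__main__":
    # "SomeOtherState" is an arbitrary placeholder, not a documented state.
    for state in ("Running", "Alarm", "SomeOtherState"):
        print(state, can_scale(state))
```

A check like this is useful mainly as a guard in your own tooling or runbooks, so that a scaling attempt is skipped when the service is in any other state.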

Billing

Adding model service instances increases your use of the built-in MaaS services, and the associated compute, storage, and token costs accumulate accordingly. For details, see Model Inference Billing Items.

Scaling Instances

- Log in to the ModelArts Studio (MaaS) console and select the target region on the top navigation bar.

- In the navigation pane on the left, choose Real-Time Inference.

- On the Real-Time Inference page, click the My Services tab. Choose More > Scale in the Operation column of the target service.

- Perform the following operations as required.

- Scale-out: Increase the number of instances as required and click OK. In the Scale Service dialog box, click OK.

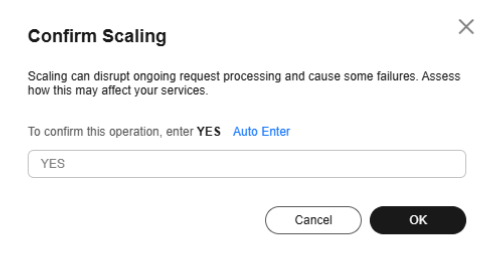

- Scale-in: Reduce the number of instances as required and click OK. In the Confirm Scaling dialog box, confirm the information, enter YES, and click OK.

Figure 1 Confirm Scaling

- On the My Services tab, click the service name to open its details page and verify that the change has taken effect.
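The difference between the two branches of the procedure can be sketched in a few lines: a scale-in requires the extra typed confirmation (YES), while a scale-out does not. This is an illustrative model of the console flow only. The function names `scaling_direction` and `requires_yes_confirmation` are hypothetical and do not correspond to any MaaS API.

```python
# Illustrative model of the console flow described above.
# All names here are hypothetical; this does not call MaaS.

def scaling_direction(current: int, target: int) -> str:
    """Classify a change in instance count."""
    if target > current:
        return "scale-out"
    if target < current:
        return "scale-in"
    return "unchanged"


def requires_yes_confirmation(current: int, target: int) -> bool:
    """Per the steps above, only scale-in asks the user to enter YES."""
    return scaling_direction(current, target) == "scale-in"


if __name__ == "__main__":
    print(scaling_direction(2, 4), requires_yes_confirmation(2, 4))
    print(scaling_direction(4, 2), requires_yes_confirmation(4, 2))
```

The asymmetry is deliberate in the console flow: removing instances reduces serving capacity, so it is gated behind an explicit confirmation.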

Follow-Up Operations

- Calling a Model Service in ModelArts Studio (MaaS): After a model service is scaled in or out, you can call it from other service environments for prediction.

- Viewing the Call Data and Monitoring Metrics of Real-Time Inference on ModelArts Studio (MaaS): MaaS provides call statistics. You can view the call data details of a model service in a specified period and monitor the service usage and resource consumption.
