How Do I Automatically Restore Services When Cluster Node Faults Occur?

AI servers will inevitably experience hardware failures. As resource pools grow in large-scale compute scenarios, the likelihood of such failures increases. These issues can disrupt services on affected nodes.

When a fault is detected on a Lite Cluster node, the fault details are reported to both the Kubernetes Node object and AOM. For details, see Reporting Cluster Node Faults. ModelArts detects faults and sends notifications, and your services use this information to recover quickly within Kubernetes.

The following shows the process of automatically recovering services based on the faulty node information.

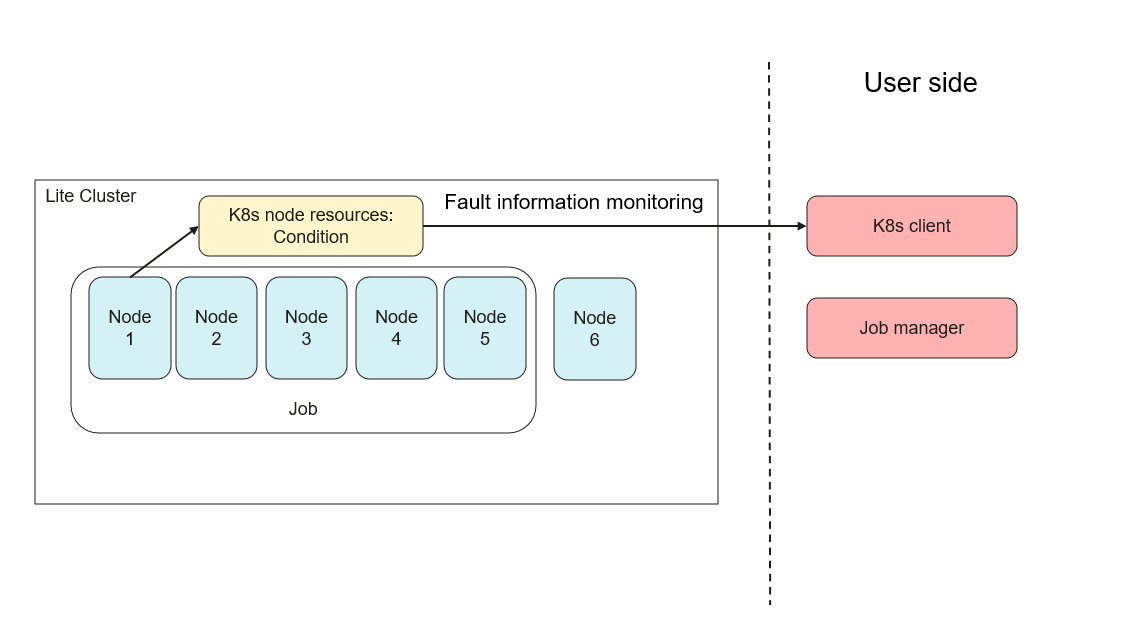

Step 1: Obtaining the Information About the Faulty Node (The Kubernetes Watch is used as an example.)

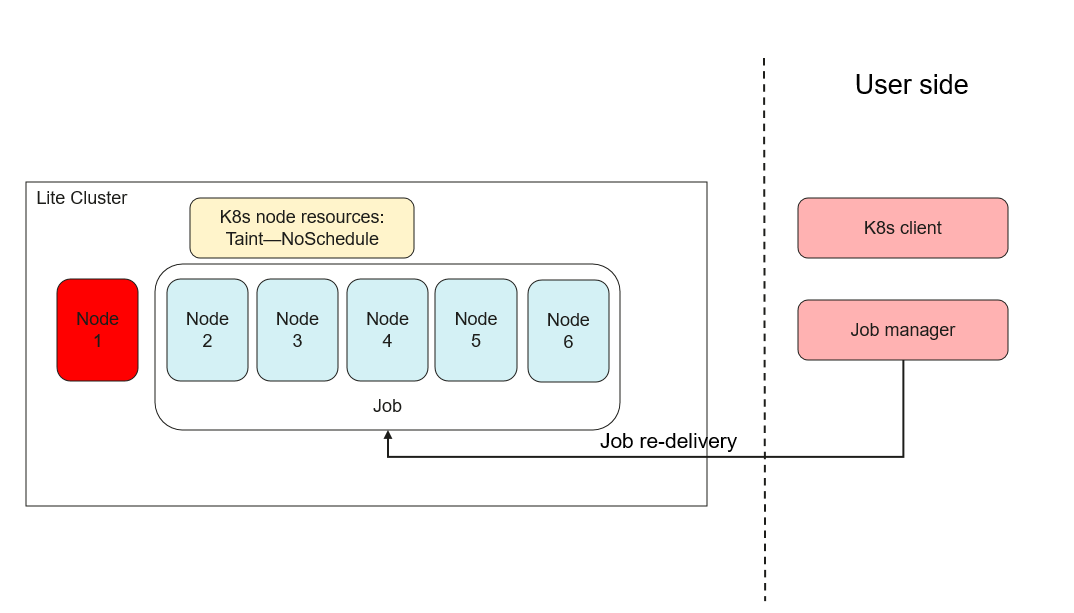

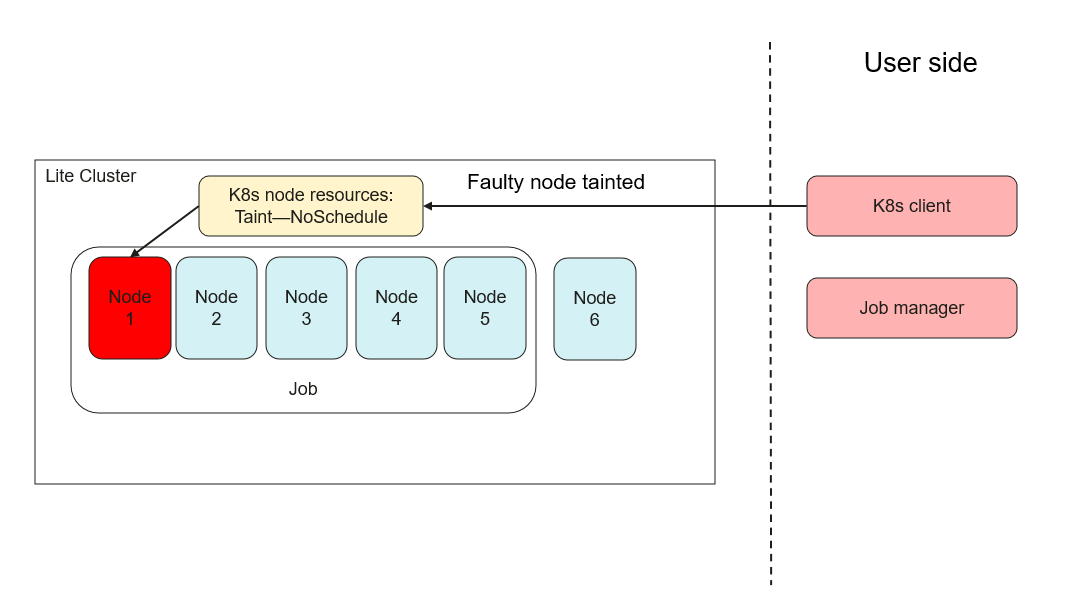

Step 2: Isolating the Faulty Node in the Cluster Using Taints

After services are automatically recovered, rectify the faulty node. For details, see How Do I Locate and Rectify a Node Fault in a Cluster Resource Pool?.

Reporting Cluster Node Faults

For ModelArts Lite resource pools, the node-agent component is deployed on each node in DaemonSet mode.

The node-agent and gpu device-plugin components are installed for GPU resource pools. The node-agent and npu device-plugin components are installed for NPU resource pools.

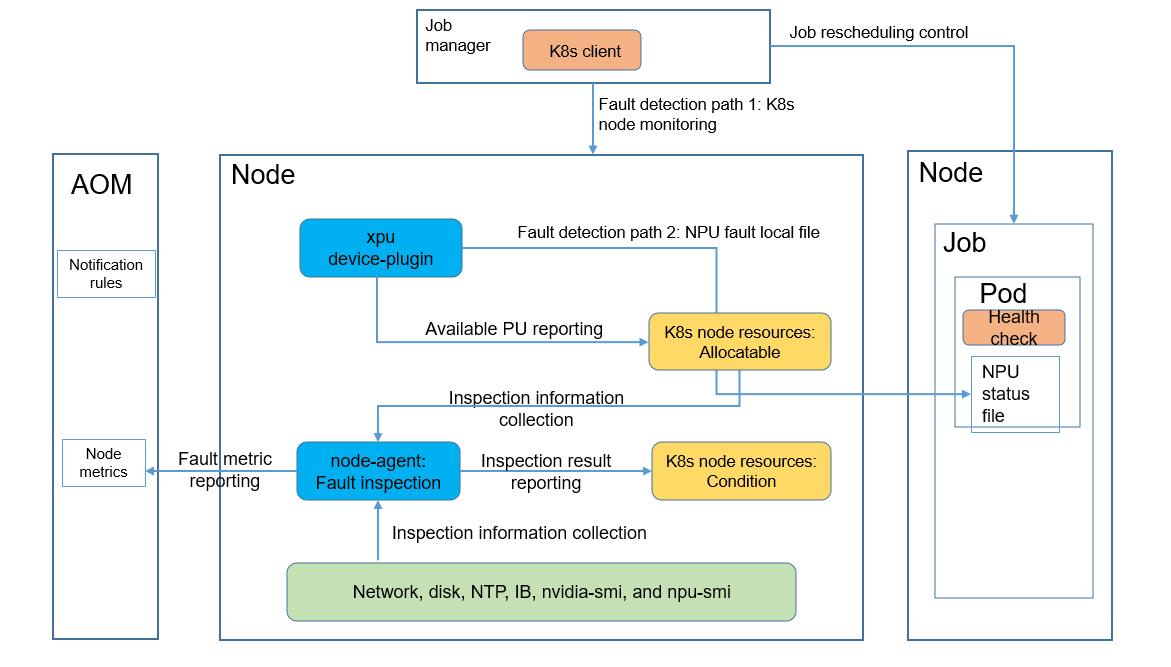

Figure 1 shows how node faults are reported.

Fault detection: device-plugin detects XPU (GPU or NPU) faults, and node-agent checks the AI runtime environment. device-plugin obtains chip faults from the driver and reports the number of available processing units (PUs) to K8s NodeAllocatable in real time. For NPUs, device-plugin also automatically mounts NPU status files into the Kubernetes pods that use NPUs, for health checks. node-agent collects fault information using health check scripts, aggregates the detection results, and writes them to K8s NodeCondition.

Fault notification: After collecting the check result, node-agent periodically reports node fault metrics to AOM. For details about how to configure timely notification, see How Do I Locate and Rectify a Node Fault in a Cluster Resource Pool?.

For details about K8s NodeAllocatable and Condition, see Kubernetes Node Status.
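As a rough illustration of how the NodeAllocatable signal could be consumed, the sketch below compares a node's capacity with its allocatable count for one device resource. It assumes that capacity keeps reporting the total number of devices while allocatable drops when a device becomes unavailable. The resource name example.com/npu, the counts, and the helper unhealthyDevices are hypothetical and are not part of ModelArts.

```go
package main

import "fmt"

// unhealthyDevices returns how many devices of the given resource are counted
// in capacity but are missing from allocatable. It never returns a negative value.
func unhealthyDevices(capacity, allocatable map[string]int64, resource string) int64 {
	diff := capacity[resource] - allocatable[resource]
	if diff < 0 {
		return 0
	}
	return diff
}

func main() {
	// Hypothetical resource name and counts, for illustration only.
	const resource = "example.com/npu"
	capacity := map[string]int64{resource: 8}
	allocatable := map[string]int64{resource: 7}
	fmt.Printf("%d device(s) unavailable\n", unhealthyDevices(capacity, allocatable, resource))
}
```

With real nodes, the two maps would be filled from the capacity and allocatable fields of the node status.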

Example of K8s NodeCondition:

{
    "type": "NT_NPU_CARD_LOSE",
    "status": "False",
    "lastHeartbeatTime": null,
    "lastTransitionTime": "2024-09-27T10:45:55Z",
    "reason": "os_task_name:npu-card-lose",
    "message": "ok"
}

The value of status can be False, True, or Unknown. In the preceding example, if status is True, the node has a fault whose type is NT_NPU_CARD_LOSE. For details about all fault types, see How Do I Locate and Rectify a Node Fault in a Cluster Resource Pool?.
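The rule above can be sketched in Go as follows. Standard conditions such as Ready use True to mean healthy, so this sketch only treats a condition as a fault when its type is in a caller-supplied set of fault types. The helper activeFaults, the trimmed nodeCondition struct, and the sample data are illustrative assumptions, not part of node-agent.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// nodeCondition mirrors only the NodeCondition fields used in this sketch.
type nodeCondition struct {
	Type   string `json:"type"`
	Status string `json:"status"`
	Reason string `json:"reason"`
}

// activeFaults parses a JSON array of conditions and returns the types that
// are listed in faultTypes and whose status is "True".
func activeFaults(raw []byte, faultTypes map[string]bool) ([]string, error) {
	var conds []nodeCondition
	if err := json.Unmarshal(raw, &conds); err != nil {
		return nil, err
	}
	var faults []string
	for _, c := range conds {
		if faultTypes[c.Type] && c.Status == "True" {
			faults = append(faults, c.Type)
		}
	}
	return faults, nil
}

func main() {
	// Sample data: Ready=True is healthy and must not be reported as a fault.
	raw := []byte(`[
		{"type": "Ready", "status": "True"},
		{"type": "NT_NPU_CARD_LOSE", "status": "True", "reason": "os_task_name:npu-card-lose"}
	]`)
	faults, err := activeFaults(raw, map[string]bool{"NT_NPU_CARD_LOSE": true})
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println(faults) // prints [NT_NPU_CARD_LOSE]
}
```

Passing the fault types explicitly keeps the check independent of any naming convention. The set would be filled from the documented list of fault types.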

Step 1: Obtaining the Information About the Faulty Node

You can access the cluster through the Kubernetes API and obtain details about its nodes. The following is a Go example that uses client-go:

package main

import (
	"context"
	"fmt"
	"time"

	v1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/tools/clientcmd"
)

func main() {
	// Load the kubeconfig. clientcmd.RecommendedHomeFile is $HOME/.kube/config. You can change the path as required.
	config, err := clientcmd.BuildConfigFromFlags("", clientcmd.RecommendedHomeFile)
	if err != nil {
		fmt.Printf("Failed to load kubeconfig: %v\n", err)
		return
	}
	// Create a clientset client.
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		fmt.Printf("Failed to create clientset: %v\n", err)
		return
	}
	// Create a watcher on the nodes in the cluster.
	watcher, err := clientset.CoreV1().Nodes().Watch(context.TODO(), metav1.ListOptions{})
	if err != nil {
		fmt.Printf("Failed to create watcher: %v\n", err)
		return
	}
	defer watcher.Stop()
	termCh := make(chan struct{}, 1)
	// Obtain node fault information in event-driven mode.
	go func() {
		for {
			select {
			case <-termCh:
				return
			case event, ok := <-watcher.ResultChan():
				if !ok {
					fmt.Println("Watcher channel closed")
					return
				}
				// Error events carry a *metav1.Status rather than a *v1.Node, so check the type.
				node, isNode := event.Object.(*v1.Node)
				if !isNode {
					fmt.Printf("Unexpected object in event of type %v\n", event.Type)
					continue
				}
				fmt.Printf("Event type: %v, Node name: %s\n", event.Type, node.Name)
				for _, v := range node.Status.Conditions {
					fmt.Printf("Node Condition Type: %v, Type Status: %v\n", v.Type, v.Status)
				}
			}
		}
	}()
	// Alternatively, obtain all nodes.
	nodes, err := clientset.CoreV1().Nodes().List(context.TODO(), metav1.ListOptions{})
	if err != nil {
		fmt.Printf("Failed to list nodes: %v\n", err)
		return
	}
	fmt.Printf("There are %d nodes in the cluster\n", len(nodes.Items))
	time.Sleep(10 * time.Second)
	// Send the end signal to stop the watch goroutine.
	termCh <- struct{}{}
}

Step 2: Isolating the Faulty Node in the Cluster Using Taints

If the service is a training job, you can configure resumable training based on checkpoints so that the job resumes from the latest checkpoint instead of starting over.
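The isolation named in this step's heading could look roughly like the sketch below, which builds the request body for adding a NoSchedule taint to a faulty node. NoSchedule is a standard Kubernetes taint effect. The taint key example.com/node-fault, the value, and the helpers withTaint and taintPatch are hypothetical. A merge patch replaces the whole spec.taints list, so the sketch starts from the node's current taints instead of sending only the new one.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// taint mirrors the key, value, and effect fields of a Kubernetes node taint.
type taint struct {
	Key    string `json:"key"`
	Value  string `json:"value,omitempty"`
	Effect string `json:"effect"`
}

// withTaint returns the current taints plus t. If a taint with the same key
// and effect already exists, the list is returned unchanged.
func withTaint(taints []taint, t taint) []taint {
	for _, cur := range taints {
		if cur.Key == t.Key && cur.Effect == t.Effect {
			return taints
		}
	}
	return append(append([]taint{}, taints...), t)
}

// taintPatch builds a merge-patch body that sets spec.taints on a node.
func taintPatch(taints []taint) ([]byte, error) {
	return json.Marshal(map[string]interface{}{
		"spec": map[string]interface{}{"taints": taints},
	})
}

func main() {
	// Hypothetical taint key and value, for illustration only.
	fault := taint{Key: "example.com/node-fault", Value: "NT_NPU_CARD_LOSE", Effect: "NoSchedule"}
	body, err := taintPatch(withTaint(nil, fault))
	if err != nil {
		fmt.Println("marshal error:", err)
		return
	}
	fmt.Println(string(body))
}
```

With client-go, a body like this could be sent through the Patch method of the Nodes client using the merge patch type. From the command line, kubectl taint nodes <node-name> <key>=<value>:NoSchedule has a similar effect. NoSchedule only keeps new pods off the node. Evicting pods that are already running would need the NoExecute effect instead.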
