How Do I Upgrade the Engine Version of a DLI Job?

DLI offers Spark and Flink compute engines, providing a one-stop serverless converged processing and analysis service for stream processing, batch processing, and interactive analysis. Currently, the recommended versions are Flink 1.15 for the Flink compute engine and Spark 3.3.1 for the Spark compute engine.

The following describes how to upgrade the engine version of a DLI job.

- SQL job:

You cannot configure the engine version for SQL jobs. To run SQL jobs on a newer engine, create a new queue. The new queue automatically uses the latest version of the Spark engine.

- Flink OpenSource SQL job:

- Log in to the DLI management console.

- In the navigation pane on the left, choose Job Management > Flink Jobs. In the job list, locate the desired Flink OpenSource SQL job.

- Click Edit in the Operation column.

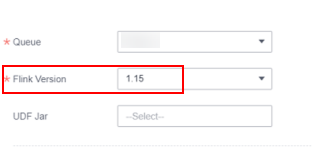

- On the Running Parameters tab, select a new Flink version for Flink Version.

When running a job on Flink 1.15 or later, you need to configure agency information on the Runtime Configuration tab: set the key flink.dli.job.agency.name to the name of the agency. Otherwise, the job may not run properly. For details about customizing DLI agency permissions, see Customizing DLI Agency Permissions.

For the syntax of Flink 1.15, see Flink 1.15 Syntax Overview.
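As an illustration of the agency setting described above, the following minimal Python sketch builds the entry to paste into Runtime Configuration. It assumes the entry is written in key=value form. The helper name flink_agency_entry and the agency name dli_custom_agency are hypothetical and used only for this example. Only the key flink.dli.job.agency.name comes from this page.

```python
# Key named in this page for Flink 1.15 or later.
FLINK_AGENCY_KEY = "flink.dli.job.agency.name"


def flink_agency_entry(agency_name: str) -> str:
    """Return a key=value line for Runtime Configuration (assumed format)."""
    name = agency_name.strip()
    if not name:
        raise ValueError("agency name must not be empty")
    return f"{FLINK_AGENCY_KEY}={name}"


# "dli_custom_agency" is a hypothetical agency name; use your own.
print(flink_agency_entry("dli_custom_agency"))
# prints: flink.dli.job.agency.name=dli_custom_agency
```

Replace the example agency name with the agency you created for DLI before using the generated line.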

- Flink Jar job:

- Log in to the DLI management console.

- In the navigation pane on the left, choose Job Management > Flink Jobs. In the job list, locate the desired Flink Jar job.

- Click Edit in the Operation column.

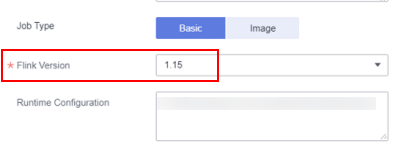

- In the parameter configuration area, select a new Flink version for Flink Version.

When running a job on Flink 1.15 or later, you need to configure agency information in Runtime Configuration: set the key flink.dli.job.agency.name to the name of the agency. Otherwise, the job may not run properly. For details about customizing DLI agency permissions, see Customizing DLI Agency Permissions.

For the syntax of Flink 1.15, see Flink 1.15 Syntax Overview.

- Spark Jar job:

- Log in to the DLI management console.

- In the navigation pane on the left, choose Job Management > Spark Jobs. In the job list, locate the desired Spark Jar job.

- Click Edit in the Operation column.

- In the parameter configuration area, select a new Spark version for Spark Version.

When running a job on Spark 3.3.1 or later, you need to configure a custom agency name in Spark Arguments(--conf). Otherwise, the job may not run properly. For details about customizing DLI agency permissions, see Customizing DLI Agency Permissions.
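The version thresholds on this page (agency configuration is needed for Flink 1.15 or later and for Spark 3.3.1 or later) can be summarized in a short Python sketch. This is only an illustration for checking a planned upgrade target, not part of DLI. The names MIN_AGENCY_VERSION, parse_version, and needs_agency are hypothetical, and version strings are assumed to be purely numeric and dot-separated.

```python
# Minimum engine versions that require agency configuration, per this page.
MIN_AGENCY_VERSION = {
    "flink": (1, 15),
    "spark": (3, 3, 1),
}


def parse_version(version: str) -> tuple:
    """Convert a string such as '3.3.1' into a tuple of ints: (3, 3, 1)."""
    return tuple(int(part) for part in version.split("."))


def needs_agency(engine: str, version: str) -> bool:
    """Return True if the target engine version requires an agency setting."""
    threshold = MIN_AGENCY_VERSION[engine.lower()]
    # Tuples compare numerically, so 1.15 is correctly newer than 1.7.
    return parse_version(version) >= threshold


print(needs_agency("flink", "1.12"))   # prints: False
print(needs_agency("flink", "1.15"))   # prints: True
print(needs_agency("spark", "3.3.1"))  # prints: True
```

Comparing integer tuples rather than raw strings avoids ordering mistakes such as treating "1.7" as newer than "1.15".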
