Creating a Hive Table in ZSTD Compression Format

Scenario

Compressed files save storage space and speed up both reading data from disks and transmitting data over networks. Hive supports compression formats such as SNAPPY, ZLIB, Gzip, Bzip2, and ZSTD.

Zstandard (ZSTD) is an open-source lossless data compression algorithm. It outperforms other Hadoop compression formats in terms of compression performance and compression ratio. This section describes how to create a Hive table in ZSTD compression format. Hive supports ZSTD-compressed files in ORC, RCFile, TextFile, JsonFile, Parquet, SequenceFile, and CSV formats.

For details about other compression formats supported by Hive tables, see Table 1.

Table 1 Compression formats supported by Hive tables

| Compression Format | Description | Supported Hive Table Storage Formats | Applicable Scenario |
|---|---|---|---|
| SNAPPY | SNAPPY offers very high compression and decompression speeds but only a moderate compression ratio. | TextFile, RCFile, SequenceFile, Parquet, and ORC | Suitable for scenarios requiring fast compression and decompression. |
| ZLIB | ZLIB is an efficient, reliable, and widely used data compression library. Based on the DEFLATE algorithm, it supports multiple compression levels and streaming processing. | TextFile, RCFile, SequenceFile, Parquet, and ORC | Suitable for scenarios requiring a high compression ratio. |
| Gzip | Gzip is a widely used compression algorithm with a high compression ratio but relatively slow compression and decompression. Gzip works based on ZLIB and compresses data with the DEFLATE algorithm, but Gzip-compressed files contain some additional metadata. | | Suitable for storage scenarios requiring a high compression ratio. |
| Bzip2 | Compared with Gzip, Bzip2 offers a higher compression ratio but slower compression and decompression. | TextFile and SequenceFile | Suitable for scenarios requiring a higher compression ratio where slower compression and decompression are acceptable. |
| LZO | Lempel-Ziv-Oberhumer (LZO) is a fast compression algorithm that provides a high compression ratio and fast decompression. It is applicable to the Hadoop ecosystem. | TextFile, RCFile, SequenceFile, and Parquet | Suitable for scenarios where the Hadoop ecosystem is used and fast decompression is required. |
| ZSTD | ZSTD is an open-source lossless data compression algorithm. It outperforms the other compression formats supported by Hadoop in terms of compression performance and compression ratio. | ORC, RCFile, TextFile, JsonFile, Parquet, SequenceFile, and CSV | Suitable for scenarios requiring high compression performance and a high compression ratio. |
| ZSTD_JNI | ZSTD_JNI is a native implementation of the ZSTD compression algorithm. Compared with ZSTD, ZSTD_JNI offers higher read/write efficiency and a higher compression ratio, and lets you specify the compression level and the compression mode for data columns in specific formats. For details, see Using the ZSTD_JNI Compression Algorithm to Compress Hive ORC Tables. | ORC | Suitable for scenarios requiring better performance and a higher compression ratio. |
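Some of the trade-offs in Table 1 can be observed locally for the codecs that Python's standard library ships: raw DEFLATE, ZLIB, Gzip, and Bzip2. SNAPPY, LZO, and ZSTD need third-party packages and are left out. The following is a minimal sketch, not a benchmark of Hive: the sample rows are hypothetical, and sizes and timings depend on your data and machine. It also makes the Gzip metadata overhead visible, because the raw DEFLATE, ZLIB, and Gzip outputs wrap the same DEFLATE stream in progressively larger headers and trailers.

```python
import bz2
import gzip
import time
import zlib
from typing import Callable, Dict, Tuple


def raw_deflate(data: bytes, level: int = 9) -> bytes:
    """DEFLATE-compress data with no container (no ZLIB or Gzip header/trailer)."""
    comp = zlib.compressobj(level, zlib.DEFLATED, -zlib.MAX_WBITS)  # negative wbits = raw stream
    return comp.compress(data) + comp.flush()


# Codecs from Table 1 that Python's standard library provides, all at level 9.
CODECS: Dict[str, Callable[[bytes], bytes]] = {
    "deflate (raw)": raw_deflate,
    "zlib": lambda d: zlib.compress(d, 9),                # 2-byte header + Adler-32 trailer
    "gzip": lambda d: gzip.compress(d, compresslevel=9),  # 10-byte header + CRC-32/size trailer
    "bzip2": lambda d: bz2.compress(d, compresslevel=9),
}


def compare_codecs(data: bytes) -> Dict[str, Tuple[int, float]]:
    """Return {codec name: (compressed size in bytes, compression time in seconds)}."""
    results = {}
    for name, compress in CODECS.items():
        start = time.perf_counter()
        size = len(compress(data))
        results[name] = (size, time.perf_counter() - start)
    return results


if __name__ == "__main__":
    # Hypothetical sample: repetitive "id,name" rows similar to the tables in this section.
    sample = b"".join(b"%d,name_%d\n" % (i, i % 100) for i in range(100_000))
    for name, (size, secs) in compare_codecs(sample).items():
        print(f"{name:14} {size:>9} bytes  ratio {len(sample) / size:5.1f}  {secs * 1000:7.1f} ms")
```

Expect the Gzip output to be a few bytes larger than the ZLIB output for the same input: both contain the same DEFLATE stream, but Gzip adds a larger header and a CRC-32 and length trailer. Whether Bzip2 beats Gzip on ratio depends on the data.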

Prerequisites

- The cluster client has been installed. For details about how to install the client, see Installing a Client.

- A Hive service user has been created and granted the permission to create Hive tables. For example, the user has been added to the hive (primary group) and hadoop user groups. For details about how to create a Hive user, see Creating a Hive User and Binding the User to a Role.

Creating a Hive Table in ZSTD Compression Format

- Log in to the node where the client is installed as the client installation user.

- Go to the client installation directory.

cd /opt/hadoopclient

- Configure environment variables.

source bigdata_env

- Authenticate the user. If Kerberos authentication is not enabled, skip this step.

kinit Hive service user

- Log in to the Hive client.

beeline
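If you repeat these steps often, they can be scripted. The sketch below, assuming a bash shell on the client node, builds a script that runs the same commands and passes HiveQL to Beeline through its -e option. The helper build_client_script and the principal hiveuser are hypothetical; kinit still prompts for the password, and you should confirm that your Beeline version accepts several semicolon-separated statements in a single -e argument.

```python
import shlex
from typing import List, Optional


def build_client_script(principal: Optional[str], statements: List[str],
                        client_dir: str = "/opt/hadoopclient") -> str:
    """Return a bash script that repeats the client login steps and runs HiveQL.

    Hypothetical helper: principal=None means Kerberos authentication is
    disabled, so the kinit step is skipped, as in the procedure above.
    """
    lines = [
        "#!/bin/bash",
        "set -e",                          # stop at the first failing command
        f"cd {shlex.quote(client_dir)}",   # client installation directory
        "source bigdata_env",              # configure environment variables
    ]
    if principal:
        lines.append(f"kinit {shlex.quote(principal)}")  # prompts for the password
    # Quote the HiveQL so the shell passes it to Beeline as one -e argument.
    lines.append(f"beeline -e {shlex.quote(' '.join(statements))}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_client_script("hiveuser", ["show tables;"]))
```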

- Create a table compressed in ZSTD format. SQL operations on ZSTD-compressed tables, such as insertion, deletion, query, and aggregation, are the same as those on other compressed tables.

- To create a table in ORC format, specify TBLPROPERTIES("orc.compress"="zstd").

create table tab_1(id string,name string) stored as orc TBLPROPERTIES("orc.compress"="zstd");

- To create a table in Parquet format, specify TBLPROPERTIES("parquet.compression"="zstd").

create table tab_2(id string,name string) stored as parquet TBLPROPERTIES("parquet.compression"="zstd");

To make ZSTD the default compression format for Parquet tables, run the following command on the Hive Beeline client:

set hive.parquet.default.compression.codec=zstd;

This setting applies to the current session only.
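The ORC and Parquet statements in this step differ only in the storage format and the table property that selects the codec. As a minimal sketch, the hypothetical helper create_zstd_table_ddl below generates either statement from the two property names given in this section. It does not validate or quote table and column names, and it rejects other storage formats, which rely on the session settings in Table 2 instead.

```python
from typing import Dict

# Table property that selects ZSTD for each storage format, as given in this step.
ZSTD_TABLE_PROPERTY = {
    "orc": "orc.compress",
    "parquet": "parquet.compression",
}


def create_zstd_table_ddl(table: str, columns: Dict[str, str], stored_as: str) -> str:
    """Return a CREATE TABLE statement that sets the ZSTD codec as a table property."""
    fmt = stored_as.lower()
    if fmt not in ZSTD_TABLE_PROPERTY:
        raise ValueError(f"{stored_as}: use the session-level codec settings instead")
    cols = ",".join(f"{name} {col_type}" for name, col_type in columns.items())
    prop = ZSTD_TABLE_PROPERTY[fmt]
    return f'create table {table}({cols}) stored as {fmt} TBLPROPERTIES("{prop}"="zstd");'


if __name__ == "__main__":
    print(create_zstd_table_ddl("tab_1", {"id": "string", "name": "string"}, "ORC"))
```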

- To create a table in any other storage format (for example, TextFile), set the compression codec to org.apache.hadoop.io.compress.ZStandardCodec as follows:

- Run the following commands to set the parameters in Table 2:

set Parameter name=Parameter value;

Example:

set hive.exec.compress.output=true;

Table 2 Setting the compression algorithm of Hive tables to ZSTD

| Parameter | Description | Value |
|---|---|---|
| hive.exec.compress.output | Whether to compress the output when Hive executes a query. true: the output is compressed. false (default value): the output is not compressed. | true |
| mapreduce.map.output.compress | Whether to compress the output in the Map phase. true: the output in the Map phase is compressed. false (default value): the output in the Map phase is not compressed. | true |
| mapreduce.map.output.compress.codec | Compression algorithm for the intermediate output of the Map phase. It minimizes the size of data stored on disks and transmitted over networks, improving overall data processing efficiency. | org.apache.hadoop.io.compress.ZStandardCodec |
| mapreduce.output.fileoutputformat.compress | Whether to compress the final output of MapReduce jobs. true: the final output is compressed. false (default value): the final output is not compressed. | true |
| mapreduce.output.fileoutputformat.compress.codec | Compression algorithm for the final output of MapReduce jobs. It reduces storage space and network transmission overhead. | org.apache.hadoop.io.compress.ZStandardCodec |
| hive.exec.compress.intermediate | Whether to compress intermediate results when Hive executes a query. true: intermediate results are compressed. false (default value): intermediate results are not compressed. | true |

- Create a Hive table.

create table tab_3(id string,name string) stored as textfile;
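For storage formats without a codec table property, the sub-steps above amount to the SET statements from Table 2 followed by an ordinary CREATE TABLE. The hypothetical helper zstd_session_statements below produces that list so it can be pasted into Beeline or passed to a script; the values are copied from Table 2. Because these are session settings, they take effect when data is written later in the same session (for example, by an INSERT into tab_3); the CREATE TABLE statement itself writes no data.

```python
from typing import Dict, List

ZSTD_CODEC = "org.apache.hadoop.io.compress.ZStandardCodec"

# Parameter values copied from Table 2, in table order.
ZSTD_SESSION_SETTINGS = {
    "hive.exec.compress.output": "true",
    "mapreduce.map.output.compress": "true",
    "mapreduce.map.output.compress.codec": ZSTD_CODEC,
    "mapreduce.output.fileoutputformat.compress": "true",
    "mapreduce.output.fileoutputformat.compress.codec": ZSTD_CODEC,
    "hive.exec.compress.intermediate": "true",
}


def zstd_session_statements(table: str, columns: Dict[str, str],
                            stored_as: str = "textfile") -> List[str]:
    """Return the SET statements from Table 2 followed by a plain CREATE TABLE."""
    stmts = [f"set {key}={value};" for key, value in ZSTD_SESSION_SETTINGS.items()]
    cols = ",".join(f"{name} {col_type}" for name, col_type in columns.items())
    stmts.append(f"create table {table}({cols}) stored as {stored_as};")
    return stmts


if __name__ == "__main__":
    print("\n".join(zstd_session_statements("tab_3", {"id": "string", "name": "string"})))
```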


- View the table information.

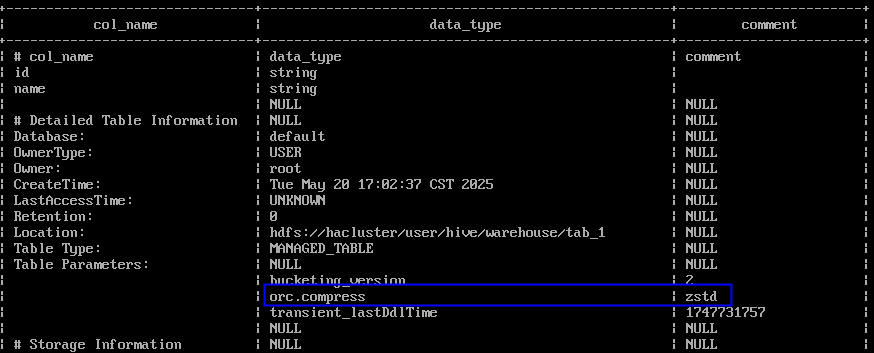

desc formatted tab_1;The command output displays the compression format. The Hive table in ORC format in Figure 1 is compressed in zstd format.
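To check the codec from a script instead of reading the output by eye, you can search the desc formatted output for the table property. The sketch below assumes Beeline's default table-style output, in which each row is a line of |-separated cells and a property name is followed by its value; the sample rows in the usage are hypothetical, so compare them with your actual output. It applies to tables that record the codec as a table property, such as tab_1 and tab_2. For tab_3 the codec comes from session settings, so it is not stored as a table property.

```python
from typing import Optional


def find_table_property(desc_output: str, key: str) -> Optional[str]:
    """Return the value that follows `key` in |-separated desc formatted output, or None."""
    for line in desc_output.splitlines():
        # Split a row such as "|  | orc.compress | zstd |" into its non-empty cells.
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        cells = [cell for cell in cells if cell]
        if key in cells:
            idx = cells.index(key)
            if idx + 1 < len(cells):
                return cells[idx + 1]
    return None


if __name__ == "__main__":
    # Hypothetical excerpt of the Table Parameters section for tab_1.
    sample = "| Table Parameters: | NULL | NULL |\n|  | orc.compress | zstd |"
    print(find_table_property(sample, "orc.compress"))
```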
