Configuring the Column Statistics Histogram for Higher CBO Accuracy

Scenario

Typically, Spark SQL statements are optimized using heuristic optimization rules. Such rules are provided only based on the characteristics of the logical plan but the characteristics of the data (the execution cost of the operator) are not considered. Spark 2.2 introduces cost-based optimizer (CBO). CBO gathers statistics on tables and columns, and uses this information to estimate the number of output records and byte size for each operator based on the input dataset. These estimates determine the cost of executing each operator.

CBO adjusts the execution plan to minimize the end-to-end query time. The idea is as follows:

- Filter out irrelevant data as early as possible.

- Minimize the cost of each operator.

The CBO optimization process is divided into two steps:

- Collect statistics.

- Estimate the output data set of a specific operator based on the input dataset.

Table-level statistics include the number of records and the total size of table data files.

Column-level statistics include the number of unique values, maximum value, minimum value, number of null values, average length, maximum length, and histogram.

After the statistics are obtained, the execution cost of the operator can be estimated. Common operators include the Filter and Join operators.

Histogram is a type of column statistics. It can clearly describe the distribution of column data. The column data is distributed to a specified number of bins that are displayed in ascending order by size. The upper and lower limits of each bin are calculated. The amount of data in all bins is the same (a contour histogram). With the detailed distribution of data, the cost estimation of each operator is more accurate and the optimization effect is better.

This feature can be enabled by using the following parameter.

spark.sql.statistics.histogram.enabled: specifies whether to enable the histogram function. The default value is false.

Configuring Parameters

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark > Configurations, click All Configurations, and search for the following parameters and adjust their values:

Parameter

Description

Example Value

spark.sql.cbo.enabled

Whether to enable the cost-based optimizer (CBO). CBO is a query optimization policy that collects and uses table statistics to generate more efficient query plans.

- true: Spark uses the CBO to generate query plans.

- false: Spark uses the rule-based optimizer to generate query plans.

false

spark.sql.cbo.joinReorder.enabled

Whether to enable the join reordering function of the CBO. Join reordering reorders joins in a query to generate more efficient query plans.

- true: Spark uses the CBO to reorder joins.

- false: Spark uses the default join order, which is usually determined by the sequence of tables in the query.

false

spark.sql.cbo.joinReorder.dp.threshold

Maximum number of join nodes allowed in the Dynamic Programming (DP) algorithm when CBO performs join reordering.

Value range: no less than 1

12

spark.sql.cbo.joinReorder.card.weight

The weight of a dimension (row count) in the cost comparison of the reconnected execution plan by the CBO. The weight is calculated as follows: Number of rows x Weight + File size x (1 – Weight).

Value range: 0 to 1

0.7

spark.sql.statistics.size.autoUpdate.enabled

Whether to automatically update the table size in the table statistics. If this parameter is enabled, Spark automatically updates the table size at a proper time.

If there are a large number of data files in a table, this operation consumes a lot of resources and slows down data operations.

- true: Spark automatically updates the table statistics.

- false: Spark does not automatically update the table statistics. You need to do it manually.

false

spark.sql.statistics.histogram.enabled

After this function is enabled, a histogram is generated when column information is collected. Histograms can improve estimation accuracy, but collecting histogram information requires additional workload.

- true: Spark collects and uses histogram statistics.

- false: Spark does not collect histogram statistics.

false

spark.sql.statistics.histogram.numBins

The number of bins in histogram statistics. Histogram is a statistical method of data distribution. It divides data into several bins to help the query optimizer estimate the query cost more accurately.

Value range: no less than 2

254

spark.sql.statistics.ndv.maxError

Maximum estimation error allowed by the HyperLogLog++ algorithm when column-level statistics are generated. A smaller error indicates a more accurate estimation, but a higher calculation cost.

Value range: 0 to 1

0.05

spark.sql.statistics.percentile.accuracy

Accuracy of percentile estimation when generating equal height histograms. A larger value indicates more accuracy. The estimated error value can be obtained using 1.0/Percentile estimation accuracy.

Value range: no less than 1

10000

A histogram takes effect in CBO only when the following conditions are met:- spark.sql.statistics.histogram.enabled: The default value is false. Change the value to true to enable the histogram function.

- spark.sql.cbo.enabled: The default value is false. Change the value to true to enable CBO.

- spark.sql.cbo.joinReorder.enabled: The default value is false. Change the value to true to enable connection reordering.

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

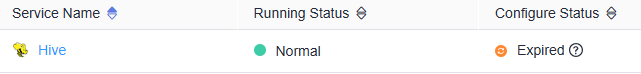

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you use the Spark client to submit tasks, you need to download the client again for the modification of the cluster parameters spark.sql.cbo.enabled, spark.sql.cbo.joinReorder.enabled, spark.sql.cbo.joinReorder.dp.threshold, spark.sql.cbo.joinReorder.card.weight, spark.sql.statistics.size.autoUpdate.enabled, spark.sql.statistics.histogram.enabled, spark.sql.statistics.histogram.numBins, spark.sql.statistics.ndv.maxError and spark.sql.statistics.percentile.accuracy to take effect. For details, see Using an MRS Client.

Components are unavailable during the restart, affecting upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that the operation does not have adverse impact.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot