Configuring the Compression Format of a Parquet Table

Scenarios

In Spark, Parquet is a widely used format for storing and processing data. Parquet tables use efficient compression and encoding schemes that reduce storage usage and accelerate data retrieval.

The compression format of a Parquet table can be configured as follows:

- For a partitioned Parquet table, set the parquet.compression table property to specify the compression format. For example, specify tblproperties("parquet.compression"="snappy") in the table creation statement.

- For a non-partitioned Parquet table, set the spark.sql.parquet.compression.codec parameter to specify the compression format. Setting parquet.compression has no effect in this case, because parquet.compression takes its value from spark.sql.parquet.compression.codec. If spark.sql.parquet.compression.codec is not configured, its default value, snappy, is used.

Therefore, the spark.sql.parquet.compression.codec parameter can only be used to set the compression format of a non-partitioned Parquet table.
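The two rules above can be summarized in a small Python sketch. It only models the behavior described in this section and does not call Spark. The helper names effective_parquet_codec and partitioned_table_ddl, and the table and column names in the usage lines, are hypothetical. The fallback for a partitioned table that has no parquet.compression property is an assumption, because this section does not state it.

```python
from typing import List, Optional, Tuple

# Default codec when spark.sql.parquet.compression.codec is not configured.
DEFAULT_CODEC = "snappy"


def effective_parquet_codec(
    partitioned: bool,
    table_property: Optional[str] = None,
    session_codec: Optional[str] = None,
) -> str:
    """Model which compression format applies, following the rules above.

    - Partitioned table: the parquet.compression table property is used.
    - Non-partitioned table: parquet.compression is ignored and
      spark.sql.parquet.compression.codec (default snappy) is used.
    Assumption (not stated in the source): a partitioned table without
    parquet.compression falls back to spark.sql.parquet.compression.codec.
    """
    if partitioned and table_property:
        return table_property.lower()
    return (session_codec or DEFAULT_CODEC).lower()


def partitioned_table_ddl(
    table: str,
    columns: List[Tuple[str, str]],
    partition_columns: List[Tuple[str, str]],
    codec: str,
) -> str:
    """Build a Hive-format CREATE TABLE statement for a partitioned Parquet
    table that sets the parquet.compression table property."""
    cols = ", ".join(f"{name} {dtype}" for name, dtype in columns)
    parts = ", ".join(f"{name} {dtype}" for name, dtype in partition_columns)
    return (
        f"CREATE TABLE {table} ({cols}) "
        f"PARTITIONED BY ({parts}) "
        "STORED AS PARQUET "
        f'TBLPROPERTIES ("parquet.compression"="{codec}")'
    )


# Usage with hypothetical table and column names.
ddl = partitioned_table_ddl(
    "sales_demo", [("id", "INT"), ("amount", "DOUBLE")], [("dt", "STRING")], "snappy"
)
print(ddl)
# Non-partitioned table: the table property is ignored, so the default applies.
print(effective_parquet_codec(partitioned=False, table_property="gzip"))  # snappy
```

The statement string can then be run with whatever Spark SQL entry point you normally use; the helper itself only assembles text.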

Configuration parameters

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the parameter listed in Table 1, and modify its value as required.

Table 1 Parameter description

Parameter: spark.sql.parquet.compression.codec

Description: Sets the compression format of a non-partitioned Parquet table. Possible values are as follows:

- none: No compression is applied. Use this setting when storage space is not a concern.

- uncompressed: No compression is applied (same effect as none). Data can be read quickly, but it consumes a large amount of storage space.

- snappy: Fast compression with a moderate compression ratio, suitable for most scenarios. This is the default value.

- gzip: High compression ratio, but relatively slow compression and decompression.

- lz4: Fast compression and decompression with a moderate compression ratio.

- zstd: High compression ratio with fast compression and decompression, which makes it suitable for scenarios where both storage efficiency and performance are important.

Example value: snappy
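Besides the cluster-wide setting in FusionInsight Manager, spark.sql.parquet.compression.codec is a Spark SQL configuration that can also be set for a single session with a SET statement, which can be a convenient way to compare the values in Table 1 before changing the cluster setting. The hypothetical Python helper codec_set_statement below only builds such a statement and checks the value against Table 1; it does not connect to Spark, and your Spark version may accept values beyond those listed.

```python
# Values listed in Table 1 for spark.sql.parquet.compression.codec.
# This set mirrors the table only; it is not read from Spark.
SUPPORTED_CODECS = {"none", "uncompressed", "snappy", "gzip", "lz4", "zstd"}


def codec_set_statement(codec: str) -> str:
    """Return a session-level Spark SQL SET statement for the codec.

    Hypothetical helper: trims and lowercases the value, and rejects values
    that are not listed in Table 1.
    """
    value = codec.strip().lower()
    if value not in SUPPORTED_CODECS:
        raise ValueError(
            f"unsupported codec {codec!r}; expected one of {sorted(SUPPORTED_CODECS)}"
        )
    return f"SET spark.sql.parquet.compression.codec={value}"


stmt = codec_set_statement("ZSTD")
print(stmt)  # SET spark.sql.parquet.compression.codec=zstd
```

A session-level SET affects only the current session; the cluster-wide value configured in the steps that follow remains the default for other jobs.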

- After modifying the parameter, click Save, perform the operations as prompted, and wait until the settings are saved successfully.

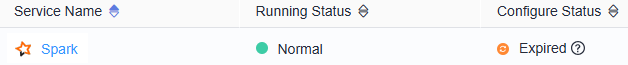

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you submit tasks using the Spark client, download the client again after modifying the cluster parameter spark.sql.parquet.compression.codec so that the new value takes effect. For details, see Using an MRS Client.

Components are unavailable during the restart, affecting upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that the operation does not have adverse impact.
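After downloading the client again, you may want to confirm that it picked up the new codec. Assuming the client keeps Spark defaults in a spark-defaults.conf file in its conf directory (the exact path depends on your client installation and is not given here), the hypothetical Python helper read_spark_default below reads one property from that file's content. It uses a simplified version of the Java properties syntax and ignores line continuations and escape sequences.

```python
import re
from typing import Optional

# Simplified property-line syntax: the key ends at the first whitespace, '='
# or ':'; an optional '=' or ':' separator (with surrounding spaces) follows.
_PROPERTY_LINE = re.compile(r"^([^=:\s]+)\s*[=:]?\s*(.*)$")


def read_spark_default(conf_text: str, key: str) -> Optional[str]:
    """Return the value of `key` in spark-defaults.conf content, or None if absent."""
    value = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines starting with '#' or '!'.
        if not line or line[0] in "#!":
            continue
        match = _PROPERTY_LINE.match(line)
        if match and match.group(1) == key:
            # A later entry for the same key overrides an earlier one.
            value = match.group(2).strip()
    return value


# Illustrative file content; in practice, read spark-defaults.conf from the
# conf directory of the downloaded client.
sample = """
# Spark client defaults (illustrative)
spark.master yarn
spark.sql.parquet.compression.codec zstd
"""
print(read_spark_default(sample, "spark.sql.parquet.compression.codec"))  # zstd
```

If the helper returns an old value or None, the client was probably downloaded before the cluster configuration was saved.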
