Configuring the Size of the Spark Event Queue

Scenarios

Spark features such as the UI, EventLog, and dynamic resource scheduling are driven by events. Events such as SparkListenerJobStart and SparkListenerJobEnd record each important step in the execution of an application.

Each event is placed in a queue when it occurs. When a SparkContext object is created, the Driver starts a thread that takes events from the queue in order and sends each one to every Listener, which then processes it.

If either of the following messages appears in the Driver log, the event queue has overflowed.

- Common application:

Dropping SparkListenerEvent because no remaining room in event queue. This likely means one of the SparkListeners is too slow and cannot keep up with the rate at which tasks are being started by the scheduler.

- Spark Streaming application:

Dropping StreamingListenerEvent because no remaining room in event queue. This likely means one of the StreamingListeners is too slow and cannot keep up with the rate at which events are being started by the scheduler.
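To check a Driver log for these two messages, a small script along the following lines may help. This is a minimal sketch: the function name and the sample log lines are hypothetical, and only the message text quoted above is taken from Spark.

```python
import re

# Matches the two overflow messages quoted above and captures the event type.
DROP_PATTERN = re.compile(
    r"Dropping (SparkListenerEvent|StreamingListenerEvent) "
    r"because no remaining room in event queue"
)


def count_dropped_event_messages(log_lines):
    """Return a dict mapping each event type to its number of matching lines."""
    counts = {"SparkListenerEvent": 0, "StreamingListenerEvent": 0}
    for line in log_lines:
        match = DROP_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Hypothetical log excerpt, for illustration only.
sample_log = [
    "some unrelated log line",
    "Dropping SparkListenerEvent because no remaining room in event queue.",
    "Dropping StreamingListenerEvent because no remaining room in event queue.",
    "Dropping SparkListenerEvent because no remaining room in event queue.",
]

counts = count_dropped_event_messages(sample_log)
```

A nonzero count for either event type indicates that the corresponding queue has overflowed at least once.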

When events are added to the queue faster than they are read, the queue overflows and the overflowing events are lost, which affects the UI, EventLog, and dynamic resource scheduling. The queue size is therefore configurable, and you can set a value that suits the memory size of the driver.
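The overflow behavior can be illustrated with a bounded queue that drops new events once it is full. The following is a simplified model using Python's standard `queue` module, not Spark's actual implementation. The helper names `post_events` and `drain` are hypothetical.

```python
import queue


def post_events(event_queue, events):
    """Try to enqueue each event without blocking; return the dropped ones."""
    dropped = []
    for event in events:
        try:
            event_queue.put_nowait(event)
        except queue.Full:
            # No remaining room: the new event is lost.
            dropped.append(event)
    return dropped


def drain(event_queue, listeners):
    """Deliver every queued event, in order, to each listener callback."""
    delivered = 0
    while True:
        try:
            event = event_queue.get_nowait()
        except queue.Empty:
            return delivered
        for listener in listeners:
            listener(event)
        delivered += 1


# A queue with room for 3 events receives 5 before anything is read.
bus = queue.Queue(maxsize=3)
events = [f"event-{i}" for i in range(5)]
dropped = post_events(bus, events)

received = []
delivered = drain(bus, [received.append])
```

In this model the last two events are dropped because nothing read from the queue while they were being posted. A larger `maxsize` would have retained them at the cost of holding more events in memory.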

Procedure

- Log in to FusionInsight Manager.

For details, see Accessing FusionInsight Manager.

- Choose Cluster > Services > Spark2x or Spark, click Configurations and then All Configurations, search for the following parameter, and adjust its value.

Table 1 Parameter description

| Parameter | Description | Example Value |
| --- | --- | --- |
| spark.scheduler.listenerbus.eventqueue.capacity | The maximum number of events that the event queue can hold. If events are produced faster than they can be consumed, new events will be dropped, and some listeners may not be able to receive all events. Increasing the event queue capacity can reduce the risk of event loss, but may increase memory usage. Therefore, you need to set the parameter based on the actual requirements and the driver memory size. | 1000000 |

- After the parameter settings are modified, click Save, perform operations as prompted, and wait until the settings are saved successfully.

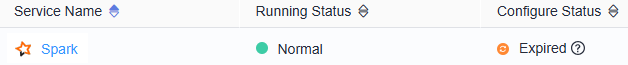

- After the Spark server configurations are updated, if Configure Status is Expired, restart the component for the configurations to take effect.

Figure 1 Modifying Spark configurations

On the Spark dashboard page, choose More > Restart Service or Service Rolling Restart, enter the administrator password, and wait until the service restarts.

If you use the Spark client to submit tasks, after the cluster parameter spark.scheduler.listenerbus.eventqueue.capacity is modified, you need to download the client again for the configuration to take effect. For details, see Using an MRS Client.

Components are unavailable during the restart, which affects upper-layer services in the cluster. To minimize the impact, perform this operation during off-peak hours or after confirming that it has no adverse impact.
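When weighing a value for spark.scheduler.listenerbus.eventqueue.capacity against the driver memory size, a rough worst-case estimate of the memory held by a full queue can be useful. The sketch below is arithmetic only. The per-event size is a hypothetical placeholder, not a figure from Spark, because real event sizes vary by event type and workload.

```python
def estimate_queue_memory_mib(capacity, assumed_bytes_per_event):
    """Estimate the memory (MiB) held by a completely full event queue."""
    return capacity * assumed_bytes_per_event / (1024 * 1024)


# Hypothetical average event size; measure your own workload before relying on it.
ASSUMED_BYTES_PER_EVENT = 1024

# Example value from Table 1.
capacity = 1_000_000

estimate = estimate_queue_memory_mib(capacity, ASSUMED_BYTES_PER_EVENT)
print(f"Full queue of {capacity} events: about {estimate:.1f} MiB")
```

Under this assumption, a full queue of 1000000 events would hold roughly 977 MiB, so the driver memory needs matching headroom. The queue reaches this size only when listeners fall far behind.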
