Solution Overview

Scenarios

In recent years, AI has developed rapidly and been applied in many fields, such as autonomous driving, large models, AI-generated content (AIGC), and scientific AI. Implementing AI requires substantial infrastructure resources, including high-performance compute and high-speed storage and networking. Only when the compute, storage, and network performance of the AI infrastructure are all strong can AI compute power develop in a balanced way.

From classic AI to today's large models and autonomous driving, the parameter counts and compute requirements of AI models have grown exponentially, bringing new challenges to the storage infrastructure.

- High-throughput data access: As enterprises use more GPUs and NPUs, they expect the storage system to deliver enough throughput to fully use the compute performance of those GPUs and NPUs. Specifically, training data should be read faster to reduce the time that compute resources spend waiting on I/O, and checkpoints should be saved and loaded as quickly as possible to reduce training interruption time.

- Shared data access through file interfaces: AI architectures require large-scale compute clusters (GPU and NPU servers). The servers in a cluster access data from a unified data source, that is, a shared storage space. Shared data access ensures data consistency across servers and avoids the data redundancy that arises when each server keeps its own copy. PyTorch, a popular open-source deep learning framework in the AI ecosystem, is a good example: it accesses data through file interfaces by default, and AI algorithm developers are used to working with file interfaces. File interfaces are therefore the most developer-friendly way to access shared storage.
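The two requirements above can be illustrated with a minimal Python sketch that uses only ordinary file I/O. It assumes the shared file system is mounted as a directory on every compute node. The `.bin` sample suffix, the helper names, and the use of `pickle` as a stand-in for a framework's own checkpoint format are all assumptions made for illustration and are not part of the solution.

```python
import os
import pickle
import tempfile
import time
from pathlib import Path
from typing import List


def list_samples(data_dir: Path, suffix: str = ".bin") -> List[Path]:
    """Return the sample files under data_dir in a stable, sorted order."""
    return sorted(p for p in data_dir.rglob(f"*{suffix}") if p.is_file())


def read_sample(path: Path) -> bytes:
    """Read one sample through the plain file interface."""
    with path.open("rb") as f:
        return f.read()


def save_checkpoint(state: dict, ckpt_path: Path) -> float:
    """Write a checkpoint atomically and return the elapsed time in seconds."""
    start = time.perf_counter()
    ckpt_path.parent.mkdir(parents=True, exist_ok=True)
    tmp_path = ckpt_path.with_suffix(ckpt_path.suffix + ".tmp")
    with tmp_path.open("wb") as f:
        pickle.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes reach storage before renaming
    os.replace(tmp_path, ckpt_path)  # readers never observe a half-written file
    return time.perf_counter() - start


def load_checkpoint(ckpt_path: Path) -> dict:
    """Load a checkpoint previously written by save_checkpoint."""
    with ckpt_path.open("rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    # A temporary directory stands in for the shared mount point (assumption).
    with tempfile.TemporaryDirectory() as mount:
        root = Path(mount)
        (root / "dataset").mkdir()
        (root / "dataset" / "sample_0.bin").write_bytes(b"\x00" * 1024)

        for sample in list_samples(root / "dataset"):
            print(sample.name, len(read_sample(sample)), "bytes")

        seconds = save_checkpoint({"step": 1}, root / "ckpt" / "step_1.ckpt")
        print(f"checkpoint saved in {seconds:.4f} s")
        print(load_checkpoint(root / "ckpt" / "step_1.ckpt"))
```

On a real cluster, the temporary directory would be replaced by the directory where the shared file system is mounted, and the elapsed time returned by `save_checkpoint` gives a rough view of how long training is interrupted by each checkpoint save.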

To learn more about this solution, or if you have any questions when using it, seek support through Solution Consultation.

Solution Architecture

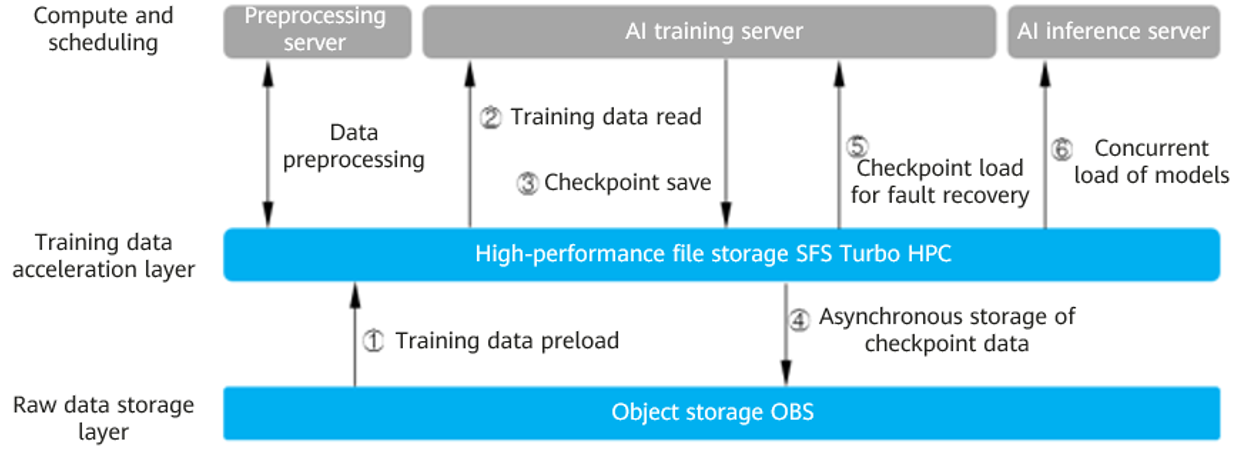

To address the problems faced in AI training, Huawei Cloud provides an AI cloud storage solution based on Object Storage Service (OBS) and Scalable File Service Turbo (SFS Turbo). As shown in Figure 1, SFS Turbo works with OBS: you can use SFS Turbo HPC file systems to speed up access to data in OBS and asynchronously store generated data to OBS for low-cost, long-term persistent storage.
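The division of labor between the two services can be pictured with a toy Python model: a fast tier serves reads and writes, loads missing data from a durable tier on first access, and exports new data to the durable tier in the background. This is a conceptual sketch only. The `TieredStore` class and its methods are hypothetical names, plain dictionaries stand in for both services, and nothing here reflects how SFS Turbo or OBS is implemented or accessed.

```python
import queue
import threading
from typing import Dict


class TieredStore:
    """Toy model: a fast tier in front of a slower, durable tier."""

    def __init__(self, object_store: Dict[str, bytes]) -> None:
        self.object_store = object_store   # stands in for the object storage tier
        self.cache: Dict[str, bytes] = {}  # stands in for the fast file system tier
        self._pending: queue.Queue = queue.Queue()
        self._lock = threading.Lock()
        self._worker = threading.Thread(target=self._export_loop, daemon=True)
        self._worker.start()

    def read(self, key: str) -> bytes:
        """Serve from the fast tier, loading from the durable tier on a miss."""
        with self._lock:
            if key not in self.cache:
                self.cache[key] = self.object_store[key]
            return self.cache[key]

    def write(self, key: str, data: bytes) -> None:
        """Write to the fast tier and return at once; export happens later."""
        with self._lock:
            self.cache[key] = data
        self._pending.put(key)

    def _export_loop(self) -> None:
        """Background worker that copies queued keys to the durable tier."""
        while True:
            key = self._pending.get()
            if key is None:  # sentinel pushed by close()
                self._pending.task_done()
                break
            with self._lock:
                self.object_store[key] = self.cache[key]
            self._pending.task_done()

    def flush(self) -> None:
        """Block until every queued export has completed."""
        self._pending.join()

    def close(self) -> None:
        """Stop the background worker after it drains the queue."""
        self._pending.put(None)
        self._worker.join()


if __name__ == "__main__":
    durable = {"dataset/part-0": b"training data"}
    store = TieredStore(durable)
    print(store.read("dataset/part-0"))       # first read loads into the fast tier
    store.write("ckpt/step-1", b"weights")    # returns without waiting for export
    store.flush()
    print(sorted(durable))
    store.close()
```

The point of the sketch is that `write` returns before the export finishes, so in this model the training loop does not wait on the slower tier.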

Solution Advantages

Table 1 describes the advantages of the Huawei Cloud AI cloud storage solution.

| No. | Advantage | Description |
|---|---|---|
| 1 | Decoupling storage and compute improves resource utilization. | GPU and NPU compute power is decoupled from SFS Turbo storage. Compute and storage resources can be expanded separately as needed, improving resource utilization. |
| 2 | High-performance SFS Turbo accelerates the training process. | |
| 3 | Asynchronous data import and export do not take up training time. No external migration tools need to be deployed. | |
| 4 | Automatic flow between hot and cold data reduces storage costs. | |
| 5 | Compatibility with multiple AI development platforms and ecosystems. | Mainstream AI application frameworks (such as PyTorch and MindSpore), Kubernetes containers, and algorithm development can all access shared data in SFS Turbo through file semantics. No adaptation development is needed. |
