Processing Datasets

Introduction to Data Processing

ModelArts Studio provides a data processing function that covers data processing, data synthesis, data labeling, and data combination. As the core of data engineering, this function ensures that the original data meets service requirements and model training standards.

- Data processing

Use dedicated processing operators to preprocess data so that it meets model training standards and service requirements. Each dataset type has operators designed to remove noise and redundant information, which improves data quality. You can also create custom operators to process data flexibly for specific service scenarios and model requirements. This further streamlines processing and improves the accuracy and robustness of models.

- Data synthesis

Process the original data with either a preset or a custom data instruction, and generate new data over a specified number of epochs. This extends the dataset to some extent and enhances the diversity and generalization capability of the trained model.

- Data labeling

Add accurate labels to unlabeled datasets to ensure high-quality data required for model training. The platform supports both manual annotation and AI pre-annotation. You can choose an appropriate annotation method based on your needs. The quality of data labeling directly impacts the training effectiveness and accuracy of the model.

- Data combination

Data combination merges multiple datasets into a single processed dataset according to a specified ratio. A proper ratio ensures the diversity, balance, and representativeness of the dataset and avoids issues caused by uneven data distribution.
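The idea of ratio-based combination can be pictured with a minimal Python sketch. It is not the platform's implementation: the function name `combine_datasets`, its parameters, and the sampling strategy (random sampling without replacement) are assumptions made for illustration only.

```python
import random


def combine_datasets(datasets, ratios, total, seed=0):
    """Sample from several datasets so the result follows the given ratio.

    datasets: list of lists of samples (hypothetical in-memory datasets)
    ratios:   one positive weight per dataset, e.g. [3, 1] for a 3:1 mix
    total:    desired number of samples in the combined dataset
    """
    if len(datasets) != len(ratios):
        raise ValueError("need exactly one ratio per dataset")
    if any(r <= 0 for r in ratios):
        raise ValueError("ratios must be positive")

    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    weight_sum = sum(ratios)
    combined = []
    for data, ratio in zip(datasets, ratios):
        # Share of the final dataset that this source should contribute.
        quota = round(total * ratio / weight_sum)
        if quota > len(data):
            raise ValueError("dataset too small for the requested quota")
        combined.extend(rng.sample(data, quota))

    rng.shuffle(combined)  # avoid grouping samples by source
    return combined


general = [f"general-{i}" for i in range(30)]
domain = [f"domain-{i}" for i in range(30)]
mixed = combine_datasets([general, domain], ratios=[3, 1], total=40)
```

With a 3:1 ratio and 40 samples in total, the first dataset contributes 30 samples and the second contributes 10.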

Through data processing, the platform removes noisy data and standardizes data formats, which improves the overall quality of datasets. Processing is tailored to data types and service scenarios so that model training receives high-quality input and model performance improves.

Procedure

- Log in to ModelArts Studio and access the desired workspace.

- In the navigation pane, choose Data Engineering > Data Processing. On the Processing Tasks page, click Create Processing Job in the upper right corner.

- Select the dataset to be processed and click Next Step. The processing operator selection page is displayed.

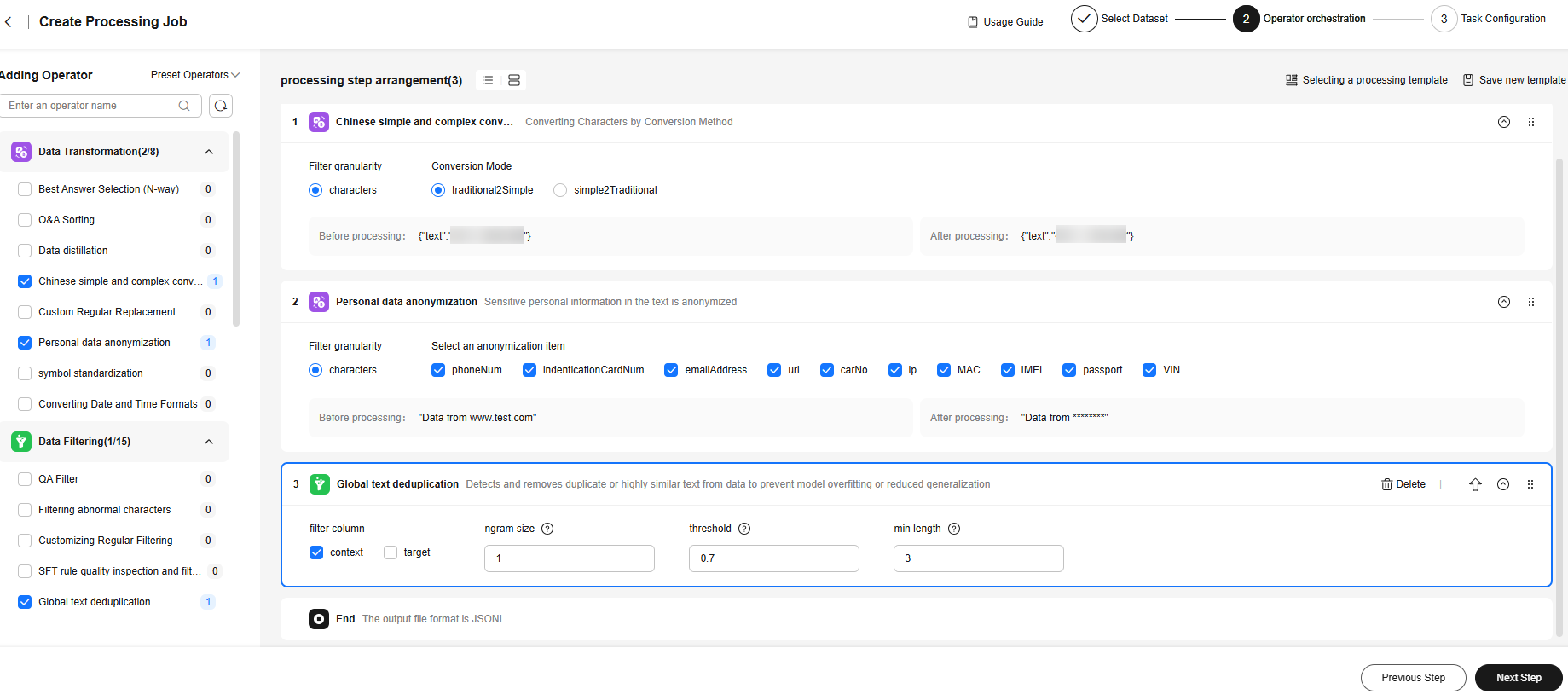

- Select the processing operators to be used for data processing. For example, use the "Chinese simple and complex conversion" operator to unify simplified and traditional Chinese characters, use the "Personal data anonymization" operator to anonymize sensitive data such as websites and phone numbers, and use the "Q&A pair deduplication" operator to remove highly similar text from a dataset. For details about the data processing operators supported by ModelArts Studio, see Text Processing Operators. Click Next in the lower right corner of the page.

- In the Adding Operator pane on the left, select the required operators.

- On the processing step orchestration page on the right, set operator parameters. You can drag the icon on the right to adjust the operator execution sequence.

If an operator parameter requires you to select a model, you need to purchase and deploy that model in ModelArts Studio first.

- During orchestration, you can click Save new template in the upper right corner to save the current orchestration process as a template. During the creation of subsequent data processing tasks, you can select a processing template.

If you select a processing template, the processing steps that you have already orchestrated will be deleted.

Figure 1 Selecting a processing template

Figure 2 Selecting a data processing operator

To customize a processing operator, click Manage Processing Operators in the upper right corner of the Processing Tasks page. On the displayed page, click Create Custom Operator in the upper right corner. For details, see Custom Dataset Processing Operators. The created custom operator can be selected and called on the processing operator selection page.

- After the processing steps are orchestrated, click Next to go to the Task Configuration page.

- Resource Allocation

Click the icon to expand the resource configuration and set task resources. You can also customize parameters: click Add Parameters and enter the parameter name and value.

Figure 3 Resource Allocation

Table 1 describes the parameters.

Table 1 Parameter configuration

| Parameter | Description |
| --- | --- |
| numExecutors | Number of executors. The default value is 2. An executor is a process running on a worker node. It executes tasks and returns the calculation results to the driver. Each core in an executor runs one task at a time, so adding executors (if they are available) lets more tasks run concurrently and improves efficiency. numExecutors x executorMemory must be greater than or equal to 4 and less than or equal to 16. |
| executorCores | Number of CPU cores used by each executor process. The default value is 2. Multiple cores in an executor can run multiple tasks at the same time, which increases task concurrency. However, because all cores share the memory of an executor, you need to balance the memory and the number of cores. numExecutors x executorMemory must be greater than or equal to 4 and less than or equal to 16. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| executorMemory | Memory size used by each executor process. The default value is 4. The executor memory is used for task execution and communication. You can increase the memory for a job that requires a large amount of resources, and run small jobs concurrently with less memory. The ratio of executorCores to executorMemory must be in the range of 1:2 to 1:4. |
| driverCores | Number of CPU cores used by the driver process. The default value is 2. The driver schedules jobs and communicates with executors. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |
| driverMemory | Memory used by the driver process. The default value is 4. The driver schedules jobs and communicates with executors. Increase the driver memory when the number of tasks and their level of parallelism increase. The ratio of driverCores to driverMemory must be in the range of 1:2 to 1:4. |
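The constraints in Table 1 can be checked before a task is submitted. The following Python sketch is only an illustration of those rules: the function `check_resources` is hypothetical and not part of ModelArts Studio, and it assumes that a cores-to-memory ratio between 1:2 and 1:4 means the memory value is two to four times the core count.

```python
def check_resources(num_executors=2, executor_cores=2, executor_memory=4,
                    driver_cores=2, driver_memory=4):
    """Return a list of violated Table 1 constraints (empty if all pass).

    Defaults mirror the default values listed in Table 1.
    """
    problems = []

    # numExecutors x executorMemory must be between 4 and 16 (inclusive).
    product = num_executors * executor_memory
    if not 4 <= product <= 16:
        problems.append(
            f"numExecutors x executorMemory is {product}, expected 4 to 16")

    # Assumed reading of "1:2 to 1:4": memory is 2x to 4x the core count.
    if not 2 * executor_cores <= executor_memory <= 4 * executor_cores:
        problems.append("executorCores:executorMemory is outside 1:2 to 1:4")

    if not 2 * driver_cores <= driver_memory <= 4 * driver_cores:
        problems.append("driverCores:driverMemory is outside 1:2 to 1:4")

    return problems


default_check = check_resources()                 # defaults from Table 1
too_many = check_resources(num_executors=5)       # 5 x 4 = 20 exceeds 16
low_memory = check_resources(executor_cores=4)    # 4:4 is a 1:1 ratio
```

The default values satisfy every constraint, while raising numExecutors to 5 or executorCores to 4 without adjusting the memory each violates one rule.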

- Automatically Generate Processing Dataset

Select and configure the information about the generated dataset, as shown in Figure 4. Click OK in the lower right corner. The platform starts the data processing task. After the processing task is successfully executed, a processed dataset is automatically generated.

- (Optional) Extended Info


- Click Start Process in the lower right corner of the page to return to the Processing Tasks page. On this page, you can view the status of the dataset processing task. If the status is Dataset generated successfully, the data processing is successful.

- To view the processed dataset, choose Data Engineering > Data Management > Datasets, and click the Processed Dataset tab.
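To make the operators named in the operator selection step more concrete, the following Python sketch imitates two of them on plain strings. It is not how the platform operators work internally: the regular expressions, the placeholder tokens, the 11-digit phone number pattern, the masking approach, and the 0.9 similarity threshold are all assumptions chosen for illustration.

```python
import re
from difflib import SequenceMatcher

URL_RE = re.compile(r"https?://\S+")
PHONE_RE = re.compile(r"\b\d{11}\b")  # assumed format: 11-digit phone numbers


def anonymize(text):
    """Mask websites and phone numbers with placeholder tokens."""
    text = URL_RE.sub("<URL>", text)  # mask URLs first so their digits are untouched
    return PHONE_RE.sub("<PHONE>", text)


def dedupe(texts, threshold=0.9):
    """Keep a text only if it is not highly similar to one already kept."""
    kept = []
    for text in texts:
        if all(SequenceMatcher(None, text, k).ratio() < threshold for k in kept):
            kept.append(text)
    return kept


masked = anonymize("Call 13800138000 or visit https://example.com/page now")
unique = dedupe([
    "How do I reset my password?",
    "How do I reset my password??",
    "What is the refund policy?",
])
```

The pairwise comparison in `dedupe` is quadratic in the number of texts, so it suits only small samples. A production-scale deduplication operator would typically rely on hashing or indexing instead.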
