Migrating Data Between Elasticsearch Clusters Using Huawei Cloud Logstash

You can use a Logstash cluster created using Huawei Cloud's Cloud Search Service (CSS) to migrate data between Elasticsearch clusters.

Scenarios

Huawei Cloud Logstash is a fully managed data ingestion and processing service. It is compatible with open-source Logstash and can be used for data migration between Elasticsearch clusters.

You can use Huawei Cloud Logstash to migrate data from Huawei Cloud Elasticsearch, self-built Elasticsearch, or third-party Elasticsearch to Huawei Cloud Elasticsearch. This solution applies to the following scenarios:

- Cross-version migration: Migrate data between different Elasticsearch versions while keeping the data available and consistent in the new version, leveraging the compatibility and flexibility of Logstash. This approach suits migrations that span major versions, for example, from 6.X to 7.X.

- Cluster merging: Utilize Logstash to transfer and consolidate data from multiple Elasticsearch clusters into a single Elasticsearch cluster, enabling unified management and analysis of data from different Elasticsearch clusters.

- Cloud migration: Migrate an on-premises Elasticsearch service to the cloud to enjoy the benefits of cloud services, such as scalability, ease-of-maintenance, and cost-effectiveness.

- Changing the service provider: An enterprise currently using a third-party Elasticsearch service wishes to switch to Huawei Cloud for reasons such as cost, performance, or other strategic considerations.

Solution Architecture

Figure 1 shows how to migrate data between Elasticsearch clusters using Huawei Cloud Logstash.

- Input: Huawei Cloud Logstash receives data from Huawei Cloud Elasticsearch, self-built Elasticsearch, or third-party Elasticsearch.

The procedure for migrating data from Huawei Cloud Elasticsearch, self-built Elasticsearch, or third-party Elasticsearch to Huawei Cloud Elasticsearch remains the same. The only difference is the access address of the source cluster. For details, see Obtaining Elasticsearch Cluster Information.

- Filter: Huawei Cloud Logstash cleanses and converts data.

- Output: Huawei Cloud Logstash outputs data to the destination system, for example, Huawei Cloud Elasticsearch.

Two migration modes are supported:

- Full data migration: Use Logstash to perform a complete data migration. This method is suitable for the initial phase of migration or scenarios where data integrity must be ensured.

- Incremental data migration: Configure Logstash to perform incremental queries and migrate only index data with incremental fields. This method is suitable for scenarios requiring continuous data synchronization or real-time data updates.

Advantages

- Cross-version compatibility: This solution supports migration between Elasticsearch clusters of different versions.

- Efficient data processing: Logstash supports batch read and write operations, significantly enhancing data migration efficiency.

- Concurrent synchronization technology: The slice concurrent synchronization technology can be utilized to boost data migration speed and performance, especially when handling large volumes of data.

- Simple configuration: Huawei Cloud Logstash offers a straightforward and intuitive configuration process, allowing you to input, process, and output data through configuration files.

- Powerful data processing: Logstash includes various built-in filters to clean, convert, and enrich data during migration.

- Flexible migration policies: You can choose between full migration or incremental migration based on service requirements to optimize storage usage and migration time.

Impact on Performance

- If the resource usage of the source cluster is high, reduce the size parameter to slow down data retrieval, or perform the migration during off-peak hours, to limit the impact on the performance of the source cluster.

- If the resource usage of the source cluster is low, keep the default settings of the Scroll API and monitor the load of the source cluster. Then tune the size and slices parameters based on the load to optimize migration efficiency and resource utilization.
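The tuning guidance above can be sketched as a small helper. This is only an illustration: the CPU thresholds, the minimum page size, and the names ScrollSettings and adjust_for_load are assumptions made for this example and are not part of CSS or Logstash. The only facts taken from this page are that size is the number of hits per query and that a slices value from 2 to 8 is recommended.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScrollSettings:
    size: int    # hits returned per scroll page (the `size` option)
    slices: int  # number of concurrent sliced scrolls (the `slices` option)

    @property
    def docs_per_round(self) -> int:
        # Each slice fetches one page of up to `size` hits at a time.
        return self.size * self.slices


# Hypothetical thresholds, chosen only for illustration.
HIGH_CPU_PERCENT = 80.0
LOW_CPU_PERCENT = 40.0
MIN_SIZE = 100


def adjust_for_load(current: ScrollSettings, source_cpu_percent: float) -> ScrollSettings:
    """Return gentler settings under high load and more aggressive ones under low load."""
    if source_cpu_percent >= HIGH_CPU_PERCENT:
        # Halve the page size to slow down data retrieval.
        return ScrollSettings(size=max(MIN_SIZE, current.size // 2), slices=current.slices)
    if source_cpu_percent <= LOW_CPU_PERCENT:
        # Add one slice, staying within the recommended range of 2 to 8.
        return ScrollSettings(size=current.size, slices=min(8, max(2, current.slices + 1)))
    return current
```

For example, starting from size 3000 and slices 3, a reading of 90% CPU on the source cluster would yield size 1500 and slices 3 under these assumed thresholds. Treat the result as a starting point and keep monitoring the source cluster.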

Constraints

During cluster migration, do not add, delete, or modify indexes in the source cluster, or the source and destination clusters will have inconsistent data after the migration.

Prerequisites

- The source and destination Elasticsearch clusters are available.

- You have created a CSS Logstash cluster (for example, Logstash-ES) and confirmed that Logstash and the Elasticsearch clusters involved are deployed in the same VPC and the network between them is connected.

- If the source cluster, Logstash, and destination cluster are in different VPCs, establish a VPC peering connection between them. For details, see VPC Peering Connection Overview.

- To migrate an on-premises Elasticsearch cluster to Huawei Cloud, you can configure public network access for the on-premises Elasticsearch cluster.

- To migrate a third-party Elasticsearch cluster to Huawei Cloud, you need to establish a VPN or Direct Connect connection between the third party's internal data center and Huawei Cloud.

- Ensure that _source has been enabled for indexes in the cluster.

By default, _source is enabled. You can run the GET {index}/_search command to check whether it is enabled. If the returned index information contains _source, it is enabled.
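The _source check can also be automated. The following is a minimal sketch that inspects a response from GET {index}/_search that has already been parsed into a dictionary. The function name source_enabled and the sample response are made up for this example. An index with no documents returns no hits, so a False result for an empty index does not prove that _source is disabled.

```python
from typing import Any, Mapping


def source_enabled(search_response: Mapping[str, Any]) -> bool:
    """Return True if at least one hit in a _search response carries a _source field."""
    hits = search_response.get("hits", {}).get("hits", [])
    return any("_source" in hit for hit in hits)


# Abbreviated, made-up response in the shape returned by GET {index}/_search.
sample_response = {
    "hits": {
        "total": {"value": 1, "relation": "eq"},
        "hits": [
            {"_index": "my_index", "_id": "1", "_score": 1.0, "_source": {"title": "example"}}
        ],
    }
}

print(source_enabled(sample_response))  # prints True
```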

Procedure

- Obtaining Elasticsearch Cluster Information.

- (Optional) Migrating the Index Structure: Migrate an Elasticsearch cluster's index template and index structure using scripts.

- Verifying Connectivity Between Clusters: Verify the connectivity between the Logstash cluster and the source Elasticsearch cluster.

- Migrate the source Elasticsearch cluster using Logstash.

- Using Logstash to Perform Full Data Migration is recommended at the initial stage of cluster migration or in scenarios where data integrity needs to be ensured.

- Using Logstash to Incrementally Migrate Cluster Data is recommended for scenarios that require continuous data synchronization or real-time data updates.

- Deleting a Logstash Cluster: After the cluster migration is complete, release the Logstash cluster in a timely manner.

Obtaining Elasticsearch Cluster Information

Before migrating a cluster, you need to obtain necessary cluster information for configuring a migration task.

| Cluster Source | | Required Information | How to Obtain |
|---|---|---|---|
| Source cluster | Huawei Cloud Elasticsearch cluster | | |
| | Self-built Elasticsearch cluster | | Contact the service administrator to obtain the information. |
| | Third-party Elasticsearch cluster | | Contact the service administrator to obtain the information. |
| Destination cluster | Huawei Cloud Elasticsearch cluster | | |

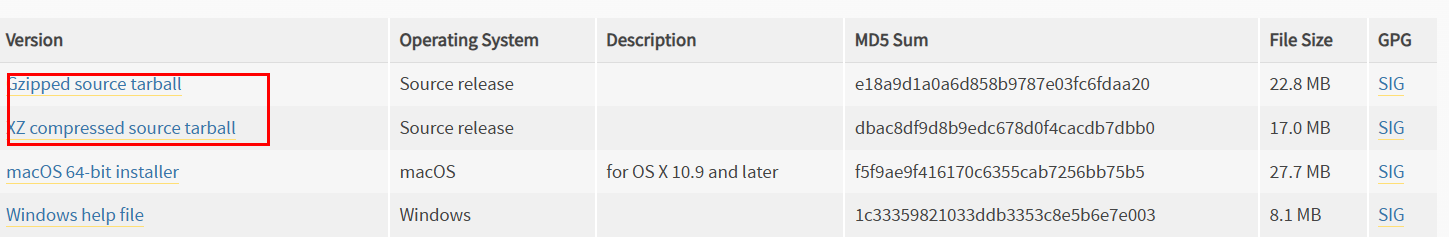

The method of obtaining the cluster information varies depending on the source cluster. This section describes how to obtain information about the Huawei Cloud Elasticsearch cluster.

- Log in to the CSS management console.

- In the navigation pane on the left, choose .

- In the cluster list, find the destination cluster and obtain the cluster name and address.

Figure 2 Obtaining cluster information

(Optional) Migrating the Index Structure

If you plan to manually create an index structure in the destination Elasticsearch cluster, skip this section. This section describes how to migrate an Elasticsearch cluster's index template and index structure using scripts.

- Create an ECS to migrate the metadata of the source cluster.

- Create an ECS. Select CentOS as the OS of the ECS and 2U4G as its flavor. The ECS must be in the same VPC and security group as the CSS cluster.

- Test the connectivity between the ECS and the source and destination clusters.

Run the curl http://{ip}:{port} command on the ECS to test the connectivity. If 200 is returned, the connection is successful.

ip indicates the access address of the source or destination cluster, and port indicates the port number. The default port number is 9200. Use the actual port number of the cluster.

- Install Python for executing the migration script.

Select a proper Python installation method based on the ECS (executor) environment.

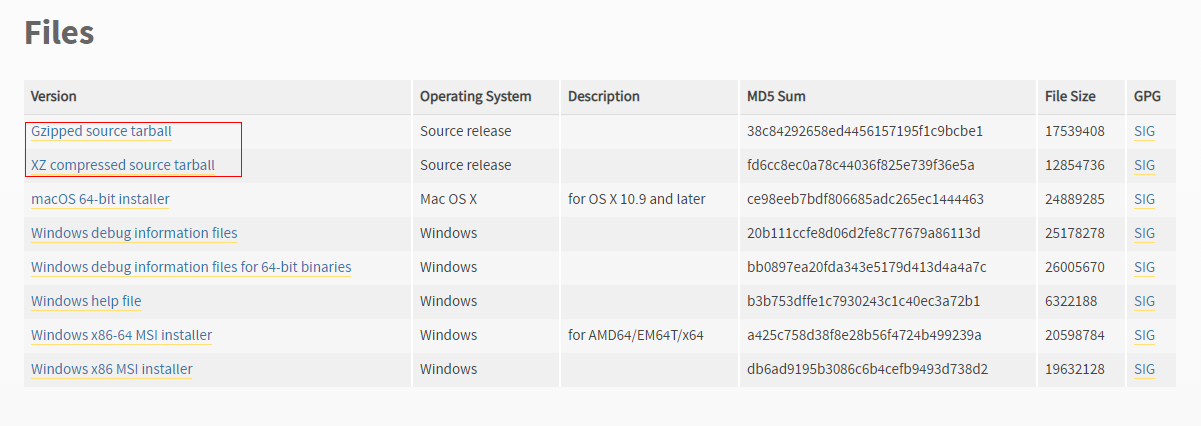

Table 2 Python installation methods

| Python Version | Internet Connection | Operation Guide |
|---|---|---|
| Python3 | Yes | |
| | No | |
| Python2 | Yes | |
| | No | |

- Prepare the index migration script of the source Elasticsearch cluster.

- Run the following commands to migrate the index template and index structure of the Elasticsearch cluster:

    python migrateTemplate.py
    python migrateMapping.py
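The curl connectivity test in the steps above can also be scripted on the ECS once Python is installed. The following is a minimal sketch using only the Python 3 standard library. The function names cluster_url and is_reachable are made up for this example, and it assumes a non-security-mode cluster reachable over HTTP. A security-mode cluster typically rejects unauthenticated requests, which this sketch reports as unreachable.

```python
import urllib.error
import urllib.request


def cluster_url(ip: str, port: int = 9200, scheme: str = "http") -> str:
    """Build the cluster root URL, equivalent to http://{ip}:{port} in the curl command."""
    return f"{scheme}://{ip}:{port}"


def is_reachable(url: str, timeout_seconds: float = 5.0) -> bool:
    """Return True if an HTTP GET on the cluster root answers with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts, DNS failures, and HTTP error statuses.
        return False
```

Call is_reachable(cluster_url(ip)) once for the source cluster and once for the destination cluster, replacing ip with the access addresses obtained earlier.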

Verifying Connectivity Between Clusters

Before starting a migration task, verify the network connectivity between Logstash and the source Elasticsearch cluster.

- Go to the Configuration Center page of Logstash.

- Log in to the CSS management console.

- In the navigation pane on the left, choose Clusters > Logstash.

- In the cluster list, click the name of the target cluster. The cluster information page is displayed.

- Click the Configuration Center tab.

- On the Configuration Center page, click Test Connectivity.

- In the dialog box that is displayed, enter the IP address and port number of the source cluster and click Test.

Figure 5 Testing connectivity

If Available is displayed, the network is connected. If the network is disconnected, configure routes for the Logstash cluster to connect the clusters. For details, see Configuring Routes for a Logstash Cluster.

Using Logstash to Perform Full Data Migration

At the initial stage of cluster migration, or in scenarios where guaranteeing data integrity takes top priority, it is recommended to use Logstash for full data migration. This approach migrates the entire Elasticsearch cluster's data in one go.

- Go to the Configuration Center page of Logstash.

- Log in to the CSS management console.

- In the navigation pane on the left, choose Clusters > Logstash.

- In the cluster list, click the name of the target cluster. The cluster information page is displayed.

- Click the Configuration Center tab.

- On the Configuration Center page, click Create in the upper right corner. On the Create Configuration File page, edit the configuration file for the full Elasticsearch cluster migration.

- Selecting a cluster template: Expand the system template list, select elasticsearch, and click Apply in the Operation column.

- Setting the name of the configuration file: Set Name, for example, es-es-all.

- Editing the configuration file: Enter the migration configuration plan of the Elasticsearch cluster in Configuration File Content. For details about how to obtain the cluster information, see Obtaining Elasticsearch Cluster Information. The following is an example of the configuration file:

    input{
        elasticsearch{
            # Address for accessing the source Elasticsearch cluster.
            hosts => ["xx.xx.xx.xx:9200", "xx.xx.xx.xx:9200"]
            # Username and password for accessing the source cluster. You do not need to configure them for a non-security-mode cluster.
            # user => "css_logstash"
            # password => "*****"
            # Configure the indexes to be migrated. Use commas (,) to separate multiple indexes. You can use wildcard characters, for example, index*.
            index => "*_202102"
            docinfo => true
            slices => 3
            size => 3000
            # If the source cluster is accessed through HTTPS, you need to configure the following information:
            # HTTPS access certificate of the cluster. Retain the following for the CSS cluster:
            # ca_file => "/rds/datastore/logstash/v7.10.0/package/logstash-7.10.0/extend/certs" # for 7.10.0
            # Whether to enable HTTPS communication. Set this parameter to true for a cluster accessed through HTTPS.
            #ssl => true
        }
    }

    # Remove specified fields added by Logstash.
    filter {
        mutate {
            remove_field => ["@version"]
        }
    }

    output{
        elasticsearch{
            # Access address of the destination Elasticsearch cluster.
            hosts => ["xx.xx.xx.xx:9200","xx.xx.xx.xx:9200"]
            # Username and password for accessing the destination cluster. You do not need to configure them for a non-security-mode cluster.
            # user => "css_logstash"
            # password => "*****"
            # Configure the index of the target cluster. The following configuration indicates that the index name is the same as that of the source end.
            index => "%{[@metadata][_index]}"
            document_type => "%{[@metadata][_type]}"
            document_id => "%{[@metadata][_id]}"
            # If the destination cluster is accessed through HTTPS, you need to configure the following information:
            # HTTPS access certificate of the cluster. Retain the following for the CSS cluster:
            #cacert => "/rds/datastore/logstash/v7.10.0/package/logstash-7.10.0/extend/certs" # for 7.10.0
            # Whether to enable HTTPS communication. Set this parameter to true for a cluster accessed through HTTPS.
            #ssl => true
            # Whether to verify the Elasticsearch certificate on the server. Set this parameter to false, indicating that the certificate is not verified.
            #ssl_certificate_verification => false
        }
    }

Table 3 Configuration items for full migration

| Item | Description |
|---|---|
| input | |
| hosts | Access address of the source cluster. If the cluster has multiple access nodes, separate them with commas (,). |
| user | Username for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| password | Password for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| index | Source indexes to be fully migrated. Use commas (,) to separate multiple indexes. Wildcards are supported, for example, index*. |
| docinfo | Whether to include document metadata (index, type, and ID) in each event. The value must be true so that the output can reference the [@metadata] fields. |
| slices | Number of slices of the query to consume concurrently using sliced scrolls, which can improve overall throughput in some cases. A value from 2 to 8 is recommended. |
| size | Maximum number of hits returned for each query. |
| output | |
| hosts | Access address of the destination cluster. If the cluster has multiple nodes, separate them with commas (,). |
| user | Username for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| password | Password for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| index | Name of the index in the destination cluster. It can be customized, for example, logstash-%{+yyyy.MM.dd}. |
| document_type | Document type. Ensure that the document type on the destination end is the same as that on the source end. |
| document_id | Document ID in the index. It is advisable to keep document IDs consistent between the source and destination clusters. To have document IDs generated automatically, use the number sign (#) to comment out this item. |

- Click Next to configure Logstash pipeline parameters.

In this example, retain the default values. For details about how to set the parameters, see .

- Click OK.

On the Configuration Center page, you can check the created configuration file. If its status changes to Available, it has been successfully created.

- Execute the full migration task.

- In the configuration file list, select configuration file es-es-all and click Start in the upper left corner.

- In the Start Logstash dialog box, select Keepalive if necessary. In this example, Keepalive is not enabled.

When Keepalive is enabled, a daemon process is configured on each node. If the Logstash service becomes faulty, the daemon process will try to rectify the fault and restart the service, ensuring that the Logstash pipelines run efficiently and reliably. You are advised to enable Keepalive for services running long-term. Do not enable it for services running only short-term, or your migration tasks may fail due to a lack of source data.

- Click OK to execute the configuration file and thereby start the Logstash full migration task.

You can view the started configuration file in the pipeline list.

- After data migration is complete, check data consistency.

- Method 1: Use PuTTY to log in to the VM used for the migration and run the python checkIndices.py command to compare the data.

- Method 2: Run the GET _cat/indices command on the Kibana console of the source and destination clusters, separately, to check whether their indexes are consistent.
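Method 2 can be partly automated by comparing document counts. The following is a minimal sketch, not the checkIndices.py script mentioned in Method 1. It assumes you have run GET _cat/indices?format=json on both clusters and parsed each response into a list of dictionaries. The function names and the sample rows are made up for this example.

```python
from typing import Dict, Iterable, List, Mapping, Optional, Tuple


def doc_counts(cat_indices_rows: Iterable[Mapping[str, Optional[str]]]) -> Dict[str, int]:
    """Map index name to document count, skipping system indexes that start with a dot."""
    counts: Dict[str, int] = {}
    for row in cat_indices_rows:
        name = row["index"]
        if name.startswith("."):
            continue
        # docs.count is returned as a string and can be null for closed indexes.
        counts[name] = int(row.get("docs.count") or 0)
    return counts


def find_mismatches(
    source_rows: Iterable[Mapping[str, Optional[str]]],
    destination_rows: Iterable[Mapping[str, Optional[str]]],
) -> List[Tuple[str, int, Optional[int]]]:
    """Return (index, source count, destination count) for every index that differs.

    The destination count is None when the index is missing from the destination.
    """
    source = doc_counts(source_rows)
    destination = doc_counts(destination_rows)
    return [
        (name, count, destination.get(name))
        for name, count in sorted(source.items())
        if destination.get(name) != count
    ]


# Made-up sample rows in the shape returned by GET _cat/indices?format=json.
source_rows = [
    {"index": "orders_202102", "docs.count": "120"},
    {"index": ".kibana_1", "docs.count": "5"},
    {"index": "users_202102", "docs.count": "40"},
]
destination_rows = [
    {"index": "orders_202102", "docs.count": "120"},
    {"index": "users_202102", "docs.count": "38"},
]

print(find_mismatches(source_rows, destination_rows))  # prints [('users_202102', 40, 38)]
```

Matching document counts are a necessary check rather than proof of identical content, so spot-check a few documents as well.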

Using Logstash to Incrementally Migrate Cluster Data

In scenarios that require continuous data synchronization or real-time data updates, it is recommended to use Logstash for incremental data migration. This method involves configuring incremental queries in Logstash so that only index data with incremental fields is migrated.

- Go to the Configuration Center page of Logstash.

- Log in to the CSS management console.

- In the navigation pane on the left, choose Clusters > Logstash.

- In the cluster list, click the name of the target cluster. The cluster information page is displayed.

- Click the Configuration Center tab.

- On the Configuration Center page, click Create in the upper right corner. On the Create Configuration File page, edit the configuration file for the incremental migration.

- Selecting a cluster template: Expand the system template list, select elasticsearch, and click Apply in the Operation column.

- Setting the name of the configuration file: Set Name, for example, es-es-inc.

- Editing the configuration file: Enter the migration configuration plan of the Elasticsearch cluster in Configuration File Content. The following is an example of the configuration file:

The incremental migration configuration varies according to the index and must be provided based on the index analysis. For details about how to obtain the cluster information, see Obtaining Elasticsearch Cluster Information.

    input{
        elasticsearch{
            # Access address of the source Elasticsearch cluster. You do not need to add a protocol. If you add the HTTPS protocol, an error will be reported.
            hosts => ["xx.xx.xx.xx:9200"]
            # Username and password for accessing the source cluster. You do not need to configure them for a non-security-mode cluster.
            user => "css_logstash"
            password => "******"
            # Configure incremental migration indexes.
            index => "*_202102"
            # Configure incremental migration query statements.
            query => '{"query":{"bool":{"should":[{"range":{"postsDate":{"from":"2021-05-25 00:00:00"}}}]}}}'
            docinfo => true
            size => 1000
            # If the source cluster is accessed through HTTPS, you need to configure the following information:
            # HTTPS access certificate of the cluster. Retain the following for the CSS cluster:
            # ca_file => "/rds/datastore/logstash/v7.10.0/package/logstash-7.10.0/extend/certs" # for 7.10.0
            # Whether to enable HTTPS communication. Set this parameter to true for HTTPS-based cluster access.
            #ssl => true
        }
    }

    filter {
        mutate {
            remove_field => ["@timestamp", "@version"]
        }
    }

    output{
        elasticsearch{
            # Access address of the destination cluster.
            hosts => ["xx.xx.xx.xx:9200","xx.xx.xx.xx:9200"]
            # Username and password for accessing the destination cluster. You do not need to configure them for a non-security-mode cluster.
            #user => "admin"
            #password => "******"
            # Configure the index of the target cluster. The following configuration indicates that the index name is the same as that of the source end.
            index => "%{[@metadata][_index]}"
            document_type => "%{[@metadata][_type]}"
            document_id => "%{[@metadata][_id]}"
            # If the destination cluster is accessed through HTTPS, you need to configure the following information:
            # HTTPS access certificate of the cluster. Retain the default value for the CSS cluster.
            #cacert => "/rds/datastore/logstash/v7.10.0/package/logstash-7.10.0/extend/certs" # for 7.10.0
            # Whether to enable HTTPS communication. Set this parameter to true for HTTPS-based cluster access.
            #ssl => true
            # Whether to verify the Elasticsearch certificate on the server. Set this parameter to false, indicating that the certificate is not verified.
            #ssl_certificate_verification => false
        }
        #stdout { codec => rubydebug { metadata => true }}
    }

Table 4 Incremental migration configuration items

| Configuration | Description |
|---|---|
| hosts | Access addresses of the source and destination clusters. If a cluster has multiple nodes, enter all their access addresses. |
| user | Username for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| password | Password for accessing the cluster. For a non-security-mode cluster, use # to comment out this item. |
| index | Indexes to be incrementally migrated. One configuration file supports the incremental migration of only one index. |
| query | Identifier of incremental data. Typically, it is an Elasticsearch DSL statement that must be analyzed in advance. postsDate indicates the time field in the service data. For example, {"query":{"bool":{"should":[{"range":{"postsDate":{"from":"2021-05-25 00:00:00"}}}]}}} migrates data added from 2021-05-25 onward. For each subsequent incremental migration, update the date value in the query. If the time field in the source indexes stores timestamps, convert the date to a timestamp here. Verify the query in advance. |
| scroll | If there is massive data on the source end, you can use the scroll function to obtain data in batches to prevent Logstash memory overflow. The default value is 1m. The interval cannot be too long. Otherwise, data may be lost. |

- Execute the incremental migration task.

- In the configuration file list, select configuration file es-es-inc and click Start in the upper left corner.

- In the Start Logstash dialog box, select Keepalive if necessary. In this example, Keepalive is not enabled.

When Keepalive is enabled, a daemon process is configured on each node. If the Logstash service becomes faulty, the daemon process will try to rectify the fault and restart the service, ensuring that the Logstash pipelines run efficiently and reliably. You are advised to enable Keepalive for services running long-term. Do not enable it for services running only short-term, or your migration tasks may fail due to a lack of source data.

- Click OK to execute the configuration file and thereby start the Logstash incremental migration task.

You can view the started configuration file in the pipeline list.

- After data migration is complete, check data consistency.

- Method 1: Use PuTTY to log in to the VM used for the migration and run the python checkIndices.py command to compare the data.

- Method 2: Run the GET _cat/indices command on the Kibana console of the source and destination clusters, separately, to check whether their indexes are consistent.
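Because the date in the query option has to change for every incremental run, it can help to generate the query string instead of editing it by hand. The following is a minimal sketch. The function name incremental_query is made up for this example, and the field name postsDate and the date format are taken from the sample configuration above, so adjust both to your own index.

```python
import json
from datetime import datetime


def incremental_query(time_field: str, since: datetime) -> str:
    """Build the JSON for the `query` option, selecting documents from `since` onward."""
    body = {
        "query": {
            "bool": {
                "should": [
                    {"range": {time_field: {"from": since.strftime("%Y-%m-%d %H:%M:%S")}}}
                ]
            }
        }
    }
    # Compact separators reproduce the single-line form used in the configuration file.
    return json.dumps(body, separators=(",", ":"))


print(incremental_query("postsDate", datetime(2021, 5, 25)))
# prints {"query":{"bool":{"should":[{"range":{"postsDate":{"from":"2021-05-25 00:00:00"}}}]}}}
```

Paste the printed string between single quotation marks as the value of query, and verify it against the source cluster before starting the migration task.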

Deleting a Logstash Cluster

After the migration is complete, release the Logstash cluster in a timely manner to save resources and avoid unnecessary fees.

- Log in to the CSS management console.

- In the navigation pane on the left, choose Clusters > Logstash.

- In the Logstash cluster list, select the Logstash cluster Logstash-ES and click More > Delete in the Operation column. In the confirmation dialog box, manually type in DELETE, and click OK.
