Managing Environment Variables of a Training Container

What Is an Environment Variable

This section describes environment variables preset in a training container. The environment variables include:

- Path environment variables

- Environment variables of a distributed training job

- NVIDIA Collective Communications Library (NCCL) environment variables

- OBS environment variables

- Environment variables of the pip source

- Environment variables of the API Gateway address

- Environment variables of job metadata

Notes and Constraints

When defining custom environment variables, avoid using names that start with MA_ to prevent conflicts with system environment variables.
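The naming rule above can be checked before a job is submitted. The following is a minimal sketch, not part of ModelArts: the helper name `find_conflicting_names` and the sample variable names are hypothetical, and the only rule applied is the `MA_` prefix mentioned in this section.

```python
# Prefix reserved for system environment variables, per the note above.
RESERVED_PREFIX = "MA_"


def find_conflicting_names(custom_vars):
    """Return the custom variable names that start with the reserved prefix."""
    return sorted(name for name in custom_vars if name.startswith(RESERVED_PREFIX))


# Hypothetical custom variables a user might plan to add to a training job.
custom = {"MA_BATCH_SIZE": "32", "TRAIN_BATCH_SIZE": "32"}
print(find_conflicting_names(custom))  # ['MA_BATCH_SIZE']
```

Renaming any reported variable (for example, to `TRAIN_BATCH_SIZE`) avoids the conflict.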

Configuring Environment Variables

When you create a training job, you can add environment variables or modify environment variables preset in the training container.

To ensure data security, do not enter sensitive information, such as plaintext passwords.

Environment Variables Preset in a Training Container

Table 1, Table 2, Table 3, Table 4, Table 5, Table 6, and Table 7 list environment variables preset in a training container.

The environment variable values are examples only.

| Variable | Description | Example | Default Value |
|---|---|---|---|
| PATH | Executable file paths | PATH=/usr/local/bin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin | Not fixed |
| LD_LIBRARY_PATH | Dynamic load library paths | LD_LIBRARY_PATH=/usr/local/seccomponent/lib:/usr/local/cuda/lib64:/usr/local/cuda/compat:/root/miniconda3/lib:/usr/local/lib:/usr/local/nvidia/lib64 | Not fixed |
| LIBRARY_PATH | Static library paths | LIBRARY_PATH=/usr/local/cuda/lib64/stubs | Not fixed |
| MA_HOME | Main directory of a training job | MA_HOME=/home/ma-user | /home/ma-user |
| MA_JOB_DIR | Parent directory of the training algorithm folder | MA_JOB_DIR=/home/ma-user/modelarts/user-job-dir | /home/ma-user/modelarts/user-job-dir |
| MA_MOUNT_PATH | Path mounted to a ModelArts training container, which is used to temporarily store training algorithms, algorithm input, algorithm output, and logs | MA_MOUNT_PATH=/home/ma-user/modelarts | /home/ma-user/modelarts |
| MA_LOG_DIR | Training log directory | MA_LOG_DIR=/home/ma-user/modelarts/log | /home/ma-user/modelarts/log |
| MA_SCRIPT_INTERPRETER | Training script interpreter | MA_SCRIPT_INTERPRETER= | Not set |
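A training script can read the path variables listed above instead of hard-coding directories. The sketch below is illustrative only: the fallback values are copied from the Default Value column, the helper names `resolve_paths` and `log_file_path` are hypothetical, and a real container may report different values.

```python
import os
import posixpath

# Fallbacks copied from the Default Value column above; a real container may differ.
PATH_DEFAULTS = {
    "MA_HOME": "/home/ma-user",
    "MA_JOB_DIR": "/home/ma-user/modelarts/user-job-dir",
    "MA_MOUNT_PATH": "/home/ma-user/modelarts",
    "MA_LOG_DIR": "/home/ma-user/modelarts/log",
}


def resolve_paths(env=None):
    """Return the path variables, falling back to the documented defaults."""
    env = os.environ if env is None else env
    return {name: env.get(name, default) for name, default in PATH_DEFAULTS.items()}


def log_file_path(filename, env=None):
    """Hypothetical helper: build a file path under the training log directory."""
    return posixpath.join(resolve_paths(env)["MA_LOG_DIR"], filename)


if __name__ == "__main__":
    for name, value in resolve_paths().items():
        print(f"{name}={value}")
```

Passing a plain dictionary as `env` makes the helpers easy to exercise outside a training container.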

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_CURRENT_IP | IP address of a job container. | MA_CURRENT_IP=192.168.23.38 | Not fixed |
| MA_NUM_GPUS | Number of accelerator cards in a job container. | MA_NUM_GPUS=8 | 0 |
| MA_TASK_NAME | Name of a job container. | MA_TASK_NAME=worker | worker |
| MA_NUM_HOSTS | Number of instances, obtained automatically from the Compute Nodes setting. | MA_NUM_HOSTS=4 | 1 |
| VC_TASK_INDEX | Container index, starting from 0. This parameter has no effect in single-node training. In multi-node training jobs, you can use it to decide which algorithm logic each container runs. | VC_TASK_INDEX=0 | 0 |
| VC_WORKER_NUM | Number of instances required for a training job. | VC_WORKER_NUM=4 | 1 |
| VC_WORKER_HOSTS | Domain name of each node for multi-node training. The domain names are listed in node order and separated by commas (,). You can obtain the IP address of a node through domain name resolution. | VC_WORKER_HOSTS=modelarts-job-a0978141-1712-4f9b-8a83-000000000000-worker-0.modelarts-job-a0978141-1712-4f9b-8a83-000000000000,modelarts-job-a0978141-1712-4f9b-8a83-000000000000-worker-1.modelarts-job-a0978141-1712-4f9b-8a83-000000000000,modelarts-job-a0978141-1712-4f9b-8a83-000000000000-worker-2.modelarts-job-a0978141-1712-4f9b-8a83-000000000000,modelarts-job-a0978141-1712-4f9b-8a83-000000000000-worker-3.modelarts-job-a0978141-1712-4f9b-8a83-000000000000 | Not fixed |
| ${MA_VJ_NAME}-${MA_TASK_NAME}-N.${MA_VJ_NAME} | Communication domain name of a node, where N is the instance index, starting from 0. For example, the communication domain name of node 0 is ${MA_VJ_NAME}-${MA_TASK_NAME}-0.${MA_VJ_NAME}. WARNING: This pattern does not apply to supernode resource pools. For all resource pools, including supernode ones, obtain the communication domain names of all nodes directly from VC_WORKER_HOSTS. | With four instances, the domain names are: ${MA_VJ_NAME}-${MA_TASK_NAME}-0.${MA_VJ_NAME}, ${MA_VJ_NAME}-${MA_TASK_NAME}-1.${MA_VJ_NAME}, ${MA_VJ_NAME}-${MA_TASK_NAME}-2.${MA_VJ_NAME}, ${MA_VJ_NAME}-${MA_TASK_NAME}-3.${MA_VJ_NAME} | Not fixed |
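The distributed-job variables above are typically combined to work out where a container sits in the cluster. The following sketch shows one way to do that under stated assumptions: the helper names are hypothetical, the first entry of VC_WORKER_HOSTS is treated as the rendezvous (master) node, and one process is launched per accelerator card. Neither convention is prescribed by this document.

```python
import os


def read_cluster_layout(env=None):
    """Collect the distributed-job variables into one dictionary."""
    env = os.environ if env is None else env
    # VC_WORKER_HOSTS is a comma-separated list of node domain names.
    hosts = [h for h in env.get("VC_WORKER_HOSTS", "").split(",") if h]
    num_nodes = int(env.get("MA_NUM_HOSTS", "1"))
    node_index = int(env.get("VC_TASK_INDEX", "0"))
    gpus_per_node = int(env.get("MA_NUM_GPUS", "0"))
    # Assumption: one process per accelerator card, at least one per node.
    procs_per_node = max(gpus_per_node, 1)
    return {
        "hosts": hosts,
        "num_nodes": num_nodes,
        "node_index": node_index,
        "procs_per_node": procs_per_node,
        # Assumption: the first listed host acts as the rendezvous node.
        "master_host": hosts[0] if hosts else "127.0.0.1",
        "world_size": num_nodes * procs_per_node,
    }


def global_rank(layout, local_rank):
    """Global rank of the process with the given local rank on this node."""
    return layout["node_index"] * layout["procs_per_node"] + local_rank


if __name__ == "__main__":
    print(read_cluster_layout())
```

Reading node names from VC_WORKER_HOSTS, rather than building them from the domain name pattern, keeps the sketch usable on supernode resource pools as well.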

| Variable | Description | Example | Default Value |
|---|---|---|---|
| NCCL_VERSION | NCCL version | NCCL_VERSION=2.7.8 | Not fixed |
| NCCL_DEBUG | NCCL log level | NCCL_DEBUG=INFO | INFO |
| NCCL_IB_HCA | InfiniBand NIC to use for communication (a leading ^ excludes the listed device) | NCCL_IB_HCA=^mlx5_bond_0 | Not fixed |
| NCCL_IB_TIMEOUT | InfiniBand transmission timeout interval | NCCL_IB_TIMEOUT=18 | 18 |
| NCCL_IB_RETRY_CNT | Maximum number of InfiniBand transmission retries | NCCL_IB_RETRY_CNT=15 | 15 |
| NCCL_IB_GID_INDEX | Global ID index used in RoCE mode | NCCL_IB_GID_INDEX=3 | 3 |
| NCCL_IB_TC | InfiniBand traffic class | NCCL_IB_TC=128 | 128 |
| NCCL_SOCKET_IFNAME | IP interface to use for communication | NCCL_SOCKET_IFNAME=bond0,eth0 | Not fixed |
| NCCL_NET_PLUGIN | Network plug-in used by NCCL | NCCL_NET_PLUGIN=none | none |

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_S3_ENDPOINT | OBS address. Ensure that applications can properly connect to the specified OBS address. | N/A | Not fixed |
| S3_VERIFY_SSL | Specifies whether to verify the SSL certificate when accessing OBS. 0: The SSL certificate is not verified. 1: The SSL certificate is verified. | S3_VERIFY_SSL=0 | 0 |
| S3_USE_HTTPS | Specifies whether to use HTTPS to access OBS. 1: HTTPS is used. 0: HTTP is used. | S3_USE_HTTPS=1 | 1 |
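The OBS variables above can be turned into connection settings for whichever client the training code uses. This is a sketch under assumptions: the helper name `obs_connection_settings` is hypothetical, the fallbacks are taken from the Default Value column, and because the format of MA_S3_ENDPOINT is not specified here, a scheme is added only when the value does not already contain one.

```python
import os


def obs_connection_settings(env=None):
    """Derive endpoint URL and SSL flags from the OBS environment variables."""
    env = os.environ if env is None else env
    endpoint = env.get("MA_S3_ENDPOINT", "")
    # Fallbacks follow the Default Value column: HTTPS on, certificate check off.
    use_https = env.get("S3_USE_HTTPS", "1") == "1"
    verify_ssl = env.get("S3_VERIFY_SSL", "0") == "1"
    # Assumption: the endpoint may be a bare host name; add a scheme only if missing.
    if endpoint and "://" not in endpoint:
        scheme = "https" if use_https else "http"
        endpoint = f"{scheme}://{endpoint}"
    return {"endpoint_url": endpoint, "use_https": use_https, "verify_ssl": verify_ssl}


if __name__ == "__main__":
    print(obs_connection_settings())
```

The returned dictionary is deliberately client-agnostic, so the values can be passed to whatever OBS or S3-compatible library the job already depends on.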

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_PIP_HOST | Domain name of the pip source | MA_PIP_HOST=repo.example.com | Domain name of the Huawei internal pip source |
| MA_PIP_URL | Address of the pip source | MA_PIP_URL=http://repo.example.com/repository/pypi/simple/ | Address of the Huawei internal pip source |
| MA_APIGW_ENDPOINT | ModelArts API Gateway address | MA_APIGW_ENDPOINT=https://modelarts.region.xxx.example.com | Not fixed |
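If a training script installs extra packages at startup, the pip source variables above can be passed to pip explicitly. The sketch below only builds the command line. The helper name `pip_install_command` is hypothetical, and whether the container's pip is already configured to use this source is not stated in this document, so passing the options explicitly is an assumption. `--index-url` and `--trusted-host` are standard pip options.

```python
import os
import sys


def pip_install_command(package, env=None):
    """Build a pip install command that targets the preset pip source."""
    env = os.environ if env is None else env
    cmd = [sys.executable, "-m", "pip", "install"]
    url = env.get("MA_PIP_URL")
    host = env.get("MA_PIP_HOST")
    if url:
        cmd += ["--index-url", url]
    if host:
        # Needed when the source is served over plain HTTP, as in the example value.
        cmd += ["--trusted-host", host]
    cmd.append(package)
    return cmd


if __name__ == "__main__":
    # Print the command instead of running it; "numpy" is only a placeholder package.
    print(" ".join(pip_install_command("numpy")))
```

To run the installation, the returned list can be passed to `subprocess.run(cmd, check=True)`.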

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_CURRENT_INSTANCE_NAME | Name of the current node for multi-node training | MA_CURRENT_INSTANCE_NAME=modelarts-job-a0978141-1712-4f9b-8a83-000000000000-worker-1 | Not fixed |

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_SKIP_IMAGE_DETECT | Specifies whether to enable ModelArts precheck. 1 or not set: Precheck is enabled. 0: Precheck is disabled. Enabling precheck is good practice because it detects node and driver faults before they affect services. This parameter is not set by default, so precheck is enabled. | 1 | Not set |

| Variable | Description | Example | Default Value |
|---|---|---|---|
| MA_HANG_DETECT_TIME | Suspension detection time. The job is considered suspended if its process I/O does not change for this period. Value range: 10 to 720. Unit: minute. Default value: 30. | 30 | 30 |
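Because MA_HANG_DETECT_TIME accepts only values from 10 to 720 minutes, a value can be validated before it is added to a training job. The sketch below is a hypothetical client-side check, not a ModelArts API. How the platform itself handles out-of-range values is not described here.

```python
# Limits and default taken from the table above (unit: minute).
HANG_DETECT_MIN = 10
HANG_DETECT_MAX = 720
HANG_DETECT_DEFAULT = 30


def parse_hang_detect_minutes(raw):
    """Return the detection time in minutes, or raise ValueError if out of range."""
    if raw is None or raw == "":
        return HANG_DETECT_DEFAULT
    minutes = int(raw)
    if not HANG_DETECT_MIN <= minutes <= HANG_DETECT_MAX:
        raise ValueError(
            f"MA_HANG_DETECT_TIME must be between {HANG_DETECT_MIN} and "
            f"{HANG_DETECT_MAX} minutes, got {minutes}"
        )
    return minutes


print(parse_hang_detect_minutes("45"))  # 45
```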

How Do I View Training Environment Variables?

Environment variables may be injected into the container or processes, depending on the service. Environment variables injected into processes are invisible in Cloud Shell.

Of the two methods below, the first (the boot command) is recommended because it shows all environment variables.

- View training environment variables using the boot command.

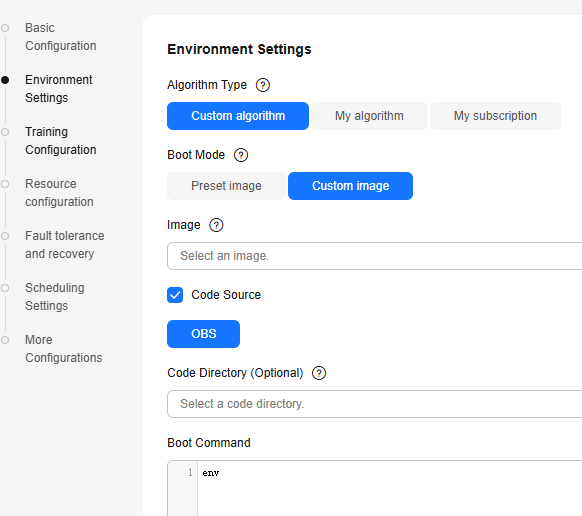

When creating a training job, select Custom algorithm for Algorithm Type and Custom image for Boot Mode, enter env for Boot Command, and retain default settings for other parameters.

Figure 1 Boot Command

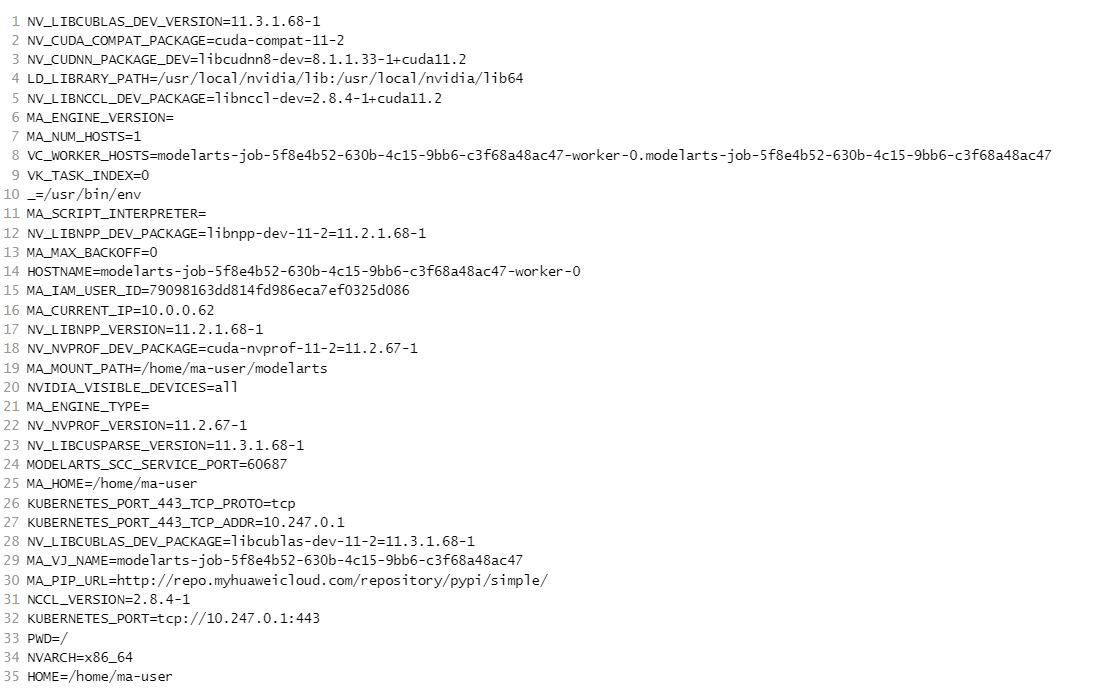

After the training job is complete, check the Logs tab on the training job details page. The logs contain information about all environment variables.

Figure 2 Viewing logs

- Check training environment variables using Cloud Shell.

Run the env command on Cloud Shell to obtain the environment variables.

This method cannot obtain environment variables that the training platform injects into processes, such as VC_TASK_INDEX, VC_WORKER_NUM, and VC_WORKER_HOSTS in supernode scenarios. For this reason, it is not recommended.
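As a complement to the two methods above, a training script can print the variables it actually sees, which should include variables injected into the process. This is a minimal sketch with assumptions: the helper name `select_platform_variables` is hypothetical, the prefix list is chosen only to match the variables in this section, and standard output is assumed to appear on the Logs tab in the same way as the output of the env boot command.

```python
import os

# Prefixes of the preset variables described in this section (an illustrative choice).
PREFIXES = ("MA_", "VC_", "NCCL_", "S3_")


def select_platform_variables(env=None, prefixes=PREFIXES):
    """Return the variables whose names start with one of the given prefixes."""
    env = os.environ if env is None else env
    return {name: env[name] for name in sorted(env) if name.startswith(prefixes)}


if __name__ == "__main__":
    # Printed to standard output so that the values appear in the training logs.
    for name, value in select_platform_variables().items():
        print(f"{name}={value}")
```

Filtering by prefix keeps unrelated variables, which may hold sensitive values, out of the logs.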
