Preventing the Location from Being Specified When a Hive Internal Table Is Created

Scenario

When creating a Hive table, you can set the table location to an HDFS or OBS directory (in the decoupled storage-compute scenario). To prevent a location from being specified for Hive internal tables, configure the hive.internaltable.notallowlocation parameter. After the parameter is enabled, a newly created Hive internal table is stored in the default warehouse directory /user/hive/warehouse and cannot point to any other directory. If a location is specified when an internal table is created, the table fails to be created.

After this function is enabled, if the database already contains a table that points to a directory other than the default warehouse directory, exercise caution when creating databases, migrating table scripts, and rebuilding metadata to prevent errors.

Procedure

- Log in to FusionInsight Manager, choose Cluster > Services > Hive, click Configurations, and click All Configurations.

- Choose HiveServer(Role) > Customization, add a custom parameter to the hive-site.xml file, and set Name to hive.internaltable.notallowlocation. The parameter values can be:

- true: The table location cannot be specified when a Hive internal table is created.

- false (default value): The table location can be specified when a Hive internal table is created.

Set this parameter to true.
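For reference, the custom parameter corresponds to an entry like the following in hive-site.xml. This is a minimal sketch that assumes the standard Hadoop property layout; FusionInsight Manager writes the file itself, so you do not need to edit it by hand.

```xml
<!-- Sketch of the resulting hive-site.xml entry (assumed standard property layout) -->
<property>
  <name>hive.internaltable.notallowlocation</name>
  <!-- true: a location cannot be specified when an internal table is created -->
  <value>true</value>
</property>
```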

- Click Save. Click Instances, select all Hive instances, choose More > Restart Instance, enter the user password, and click OK to restart all Hive instances.

- Determine whether to enable this function on the Spark/Spark2x client.

- If yes, download and install the Spark/Spark2x client again.

- If no, no further action is required.

- Log in to the node where the client is installed as the client installation user.

For details about how to download and install the cluster client, see Installing an MRS Cluster Client.

- Configure environment variables and authenticate the user.

Go to the client installation directory.

cd Client installation directory

Load the environment variables.

source bigdata_env

Authenticate the user. Skip this step for clusters with Kerberos authentication disabled.

kinit Hive service user

- Log in to the Hive client.

beeline

- Create a Hive internal table.

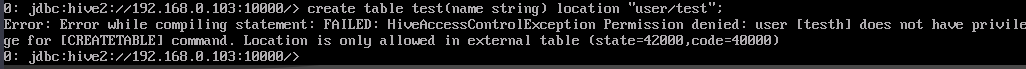

To test the setting, create an internal table with a specified location, for example, /user/test in HDFS, by running the following command:

create table test(name string) location "/user/test";

If the command reports an error and the table fails to be created, the restriction on specifying a location for Hive internal tables has taken effect.

Delete the location parameter from the command and run the command again to create the Hive table.
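The check can be sketched as the following Beeline session. It assumes that hive.internaltable.notallowlocation is set to true and reuses the example table name test and path /user/test from this procedure. The DESCRIBE FORMATTED statement is an optional extra check that is not part of the original steps.

```sql
-- Expected to fail while hive.internaltable.notallowlocation is true,
-- because an internal table is given an explicit location.
CREATE TABLE test (name STRING) LOCATION '/user/test';

-- Without the LOCATION clause, the table is created in the default
-- warehouse directory.
CREATE TABLE test (name STRING);

-- Optional check: the Location field in the output should point to a
-- path under /user/hive/warehouse.
DESCRIBE FORMATTED test;
```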
