Configuring the HA Function for SAP NetWeaver (Distributed HA Deployment)

Scenarios

To protect SAP NetWeaver against a single point of failure and improve its availability, configure HA for the active and standby ASCS nodes of SAP NetWeaver. This operation is required only in the distributed HA deployment scenario.

Prerequisites

- Mutual trust has been established between the active and standby ASCS nodes.

- The OS firewall has been disabled. For details, see section Modifying OS Configurations.

- After installing the SAP NetWeaver instances, add mappings between the virtual IP addresses and virtual hostnames to the hosts file so that the active and standby ASCS nodes can communicate with each other.

- Log in to the active and standby ASCS nodes one by one and modify the /etc/hosts file:

vi /etc/hosts

- Map each virtual hostname to its virtual IP address. Example:

10.0.3.52 netweaver-0001
10.0.3.196 netweaver-0002
10.0.3.220 ascsha
10.0.3.2 ersha

ascsha indicates the virtual hostname of the active ASCS node and ersha indicates the virtual hostname of the standby ASCS node. Virtual hostnames can be customized.
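The hosts-file edit can also be scripted so that it gives the same result when repeated on each node. The following is a minimal shell sketch, assuming a POSIX-compatible shell with awk and mktemp; set_host_entry is a hypothetical helper, not part of the SAP or Huawei Cloud tooling. It removes the hostname from any existing mapping and then appends the virtual IP address, so running it twice leaves a single entry. Note that it rewrites whitespace on non-comment lines as single spaces.

```shell
# set_host_entry FILE IP HOSTNAME (hypothetical helper)
# Removes HOSTNAME from every existing mapping in FILE, then appends "IP HOSTNAME".
set_host_entry() {
  local file=$1 ip=$2 name=$3 tmp
  tmp=$(mktemp) || return 1
  # Keep comments and short lines unchanged; drop HOSTNAME from other lines and
  # drop a line entirely if HOSTNAME was its only name.
  awk -v n="$name" '
    /^[ \t]*#/ || NF < 2 { print; next }
    {
      out = $1; kept = 0
      for (i = 2; i <= NF; i++) if ($i != n) { out = out " " $i; kept++ }
      if (kept > 0) print out
    }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s %s\n' "$ip" "$name" >> "$tmp"
  # Write back through cat so the original file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example (as root, on both ASCS nodes, using the sample addresses):
#   set_host_entry /etc/hosts 10.0.3.220 ascsha
#   set_host_entry /etc/hosts 10.0.3.2 ersha
```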


- Check that the /var/log/cluster directory exists on both the active and standby ASCS nodes. If it does not exist, create it.
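A minimal sketch of the directory check, assuming a POSIX-compatible shell; ensure_log_dir is a hypothetical helper name. mkdir -p does nothing if the directory already exists, so the check and the creation collapse into one step.

```shell
# ensure_log_dir [DIR] (hypothetical helper)
# Creates DIR (default: /var/log/cluster) if it does not exist yet.
ensure_log_dir() {
  local dir=${1:-/var/log/cluster}
  [ -d "$dir" ] || mkdir -p "$dir"
}

# Example (as root, on each ASCS node):
#   ensure_log_dir
```

Because mutual trust is already set up, the same step can be run on the peer node over SSH, for example ssh netweaver-0002 mkdir -p /var/log/cluster (the hostname is taken from the sample hosts file).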

- Update the SAP resource-agents package on the active and standby ASCS nodes.

- Run the following command to check whether the patched resource-agents package (which adds the IS_ERS parameter to the SAPInstance resource agent) has been installed:

sudo grep 'parameter name="IS_ERS"' /usr/lib/ocf/resource.d/heartbeat/SAPInstance

- If the output contains the following line, the patch package has been installed and no further action is required:

<parameter name="IS_ERS" unique="0" required="0">

- If the line is not displayed, install the patch package as described in the next step.
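The check can be wrapped in a function so that the install decision is scripted. A minimal sketch, assuming a POSIX-compatible shell; ers_patch_installed is a hypothetical helper, and its default path is the SAPInstance agent path used in the grep command of this step.

```shell
# ers_patch_installed [AGENT_FILE] (hypothetical helper)
# Succeeds if the SAPInstance resource agent declares the IS_ERS parameter,
# i.e. the patched resource-agents package is already installed.
ers_patch_installed() {
  local agent=${1:-/usr/lib/ocf/resource.d/heartbeat/SAPInstance}
  grep -q 'parameter name="IS_ERS"' "$agent" 2>/dev/null
}

# Example:
#   if ers_patch_installed; then echo "patch present"; else echo "install the patch"; fi
```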

- Install the resource-agents package.

If the image is SLES 12 SP1, run the following command:

sudo zypper in -t patch SUSE-SLE-HA-12-SP1-2017-885=1

If the image is SLES 12 SP2, run the following command:

sudo zypper in -t patch SUSE-SLE-HA-12-SP2-2018-1923=1

If the image is SLES 12 SP3, run the following command:

sudo zypper in -t patch SUSE-SLE-HA-12-SP3-2018-1922=1
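Selecting the right patch can be automated by mapping the service pack to the patch names listed above. A minimal sketch, assuming that VERSION_ID in /etc/os-release reads 12.1, 12.2, or 12.3 on SLES 12 SP1, SP2, and SP3 (verify this on your image); sles_ha_patch is a hypothetical helper.

```shell
# sles_ha_patch VERSION_ID (hypothetical helper)
# Prints the zypper patch listed in this guide for the given SLES 12 service pack.
# Assumption: VERSION_ID is "12.N" for SLES 12 SPN.
sles_ha_patch() {
  case $1 in
    12.1) echo SUSE-SLE-HA-12-SP1-2017-885=1 ;;
    12.2) echo SUSE-SLE-HA-12-SP2-2018-1923=1 ;;
    12.3) echo SUSE-SLE-HA-12-SP3-2018-1922=1 ;;
    *) return 1 ;;  # service pack not covered by this guide
  esac
}

# Example:
#   . /etc/os-release && patch=$(sles_ha_patch "$VERSION_ID") && sudo zypper in -t patch "$patch"
```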


- Update the sap_suse_cluster_connector package on the active and standby ASCS nodes.

- Ensure that the dependency packages patterns-ha-ha_sles and sap-suse-cluster-connector have been installed.

Run the following commands to check whether the dependency packages have been installed:

rpm -qa | grep patterns-ha-ha_sles

rpm -qa | grep sap-suse-cluster-connector

If either package is missing, run the following commands to install it:

zypper in -y patterns-ha-ha_sles

zypper in -y sap-suse-cluster-connector
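The check and installation can be combined so that zypper runs only for missing packages. A minimal sketch, assuming a POSIX-compatible shell and that each rpm -qa line has the form name-version-release.arch with a version starting with a digit; missing_packages is a hypothetical helper that reads the rpm -qa listing from standard input.

```shell
# missing_packages PKG... (hypothetical helper)
# Reads an "rpm -qa" listing on stdin and prints each PKG that is not in it.
missing_packages() {
  local installed pkg
  installed=$(cat)
  for pkg in "$@"; do
    # Match "name-<digit>..." so that a longer package name is not a false hit.
    printf '%s\n' "$installed" | grep -q "^${pkg}-[0-9]" || printf '%s\n' "$pkg"
  done
}

# Example (as root):
#   missing=$(rpm -qa | missing_packages patterns-ha-ha_sles sap-suse-cluster-connector)
#   [ -n "$missing" ] && zypper in -y $missing   # unquoted on purpose: one word per package
```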

Procedure

- Log in to the ASCS instance node, obtain the ha_auto_script.zip package, and decompress it to any directory.

- Obtain the ha_auto_script.zip package by running the command for the region where your ECSs are located:

CN-Hong Kong: wget https://obs-sap-ap-southeast-1.obs.ap-southeast-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

AP-Bangkok: wget https://obs-sap-ap-southeast-2.obs.ap-southeast-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

AF-Johannesburg: wget https://obs-sap-af-south-1.obs.af-south-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

LA-Santiago: wget https://obs-sap-la-south-2.obs.la-south-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

LA-Sao Paulo1: wget https://obs-sap-sa-brazil-11.obs.sa-brazil-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

LA-Mexico City1: wget https://obs-sap-na-mexico-1.obs.na-mexico-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt

LA-Mexico City2: wget https://obs-sap-la-north-2.obs.la-north-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip -P /sapmnt
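To avoid copying the wrong URL, the region list can be kept in one lookup function. A minimal sketch; ha_script_url is a hypothetical helper, and the URLs are copied verbatim from the list in this step.

```shell
# ha_script_url REGION (hypothetical helper)
# Prints the ha_auto_script.zip download URL for a region name used in this guide.
ha_script_url() {
  case $1 in
    "CN-Hong Kong")    echo https://obs-sap-ap-southeast-1.obs.ap-southeast-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "AP-Bangkok")      echo https://obs-sap-ap-southeast-2.obs.ap-southeast-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "AF-Johannesburg") echo https://obs-sap-af-south-1.obs.af-south-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "LA-Santiago")     echo https://obs-sap-la-south-2.obs.la-south-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "LA-Sao Paulo1")   echo https://obs-sap-sa-brazil-11.obs.sa-brazil-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "LA-Mexico City1") echo https://obs-sap-na-mexico-1.obs.na-mexico-1.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    "LA-Mexico City2") echo https://obs-sap-la-north-2.obs.la-north-2.myhuaweicloud.com/ha_auto_script/ha_auto_script.zip ;;
    *) return 1 ;;  # region not listed in this guide
  esac
}

# Example:
#   url=$(ha_script_url "AP-Bangkok") && wget "$url" -P /sapmnt
```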

- Run the following commands to decompress the package:

cd /sapmnt

unzip ha_auto_script.zip


- Set parameters in the ascs_ha.cfg file based on the site requirements. Table 1 describes the parameters in the file.

Table 1 Parameters in the ascs_ha.cfg file

| Type | Name | Description |
|---|---|---|
| masterNode | masterName | ASCS instance node name |
| masterNode | masterHeartbeatIP1 | Heartbeat plane IP address 1 of the ASCS instance node |
| masterNode | masterHeartbeatIP2 | Service plane IP address of the ASCS instance node |
| slaveNode | slaveName | ERS instance node name |
| slaveNode | slaveHeartbeatIP1 | Heartbeat plane IP address 1 of the ERS instance node |
| slaveNode | slaveHeartbeatIP2 | Service plane IP address of the ERS instance node |
| ASCSInstance | ASCSFloatIP | Service IP address of the ASCS instance node |
| ASCSInstance | ASCSInstanceDir | Directory of the ASCS instance |
| ASCSInstance | ASCSDevice | Disk partition used by the ASCS instance directory |
| ASCSInstance | ASCSProfile | Profile file of the ASCS instance |
| ERSInstance | ERSFloatIP | Service IP address of the ERS instance node |
| ERSInstance | ERSInstanceDir | Directory of the ERS instance |
| ERSInstance | ERSDevice | Disk partition used by the ERS instance directory |
| ERSInstance | ERSProfile | Profile file of the ERS instance |
| trunkInfo | SBDDevice | Disk partitions used by SBD. A maximum of three partitions are supported. Separate multiple partitions with commas (,), for example, /dev/sda, /dev/sdb, /dev/sdc. |

NOTE: Log in to the ERS instance node to obtain the values of the ERSInstanceDir, ERSDevice, and ERSProfile parameters.
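Before running the deployment script, the SBDDevice value can be checked against the rule in Table 1 (one to three comma-separated partitions). A minimal sketch, assuming each entry must be a /dev/... path and that spaces around commas are allowed; check_sbd_devices is a hypothetical helper and does not parse ascs_ha.cfg itself, whose exact syntax is not described here.

```shell
# check_sbd_devices LIST (hypothetical helper)
# Succeeds if LIST holds 1 to 3 comma-separated /dev/... paths.
check_sbd_devices() {
  printf '%s\n' "$1" | awk -F',' '{
    if (NF < 1 || NF > 3) exit 1            # empty value or more than three devices
    for (i = 1; i <= NF; i++) {
      d = $i
      gsub(/^[ \t]+|[ \t]+$/, "", d)        # trim spaces around each entry
      if (d !~ /^\/dev\/[^ \t]+$/) exit 1   # every entry must be a /dev path
    }
  }'
}

# Example:
#   check_sbd_devices "/dev/sda, /dev/sdb, /dev/sdc" && echo "SBDDevice looks valid"
```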

- Run the following command to perform automatic HA deployment:

sh ascs_auto_ha.sh

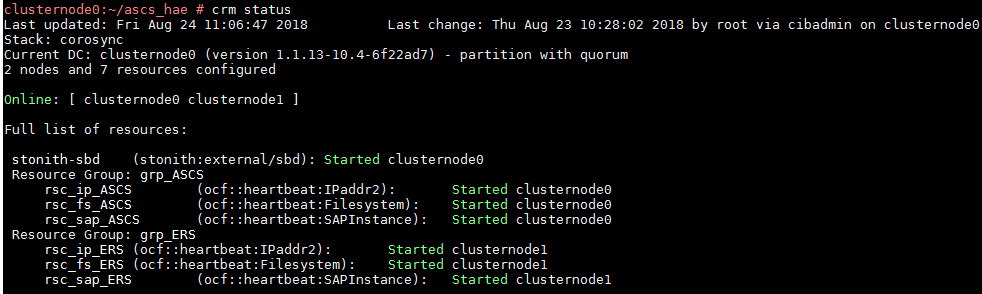

- Run the crm status command to check the resource status.

After HA is configured, the SUSE Linux Enterprise High Availability Extension (HAE) manages the resources. Do not start or stop the resources by any other means. To perform tests or modifications manually, first switch the cluster to maintenance mode:

crm configure property maintenance-mode=true

Exit maintenance mode after the modification is complete:

crm configure property maintenance-mode=false
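The enter/modify/exit sequence can be wrapped so that maintenance mode is not left on by accident. A minimal sketch; with_maintenance_mode is a hypothetical helper that uses only the two crm commands shown above. As a design choice, it exits maintenance mode only if the wrapped command succeeds, so that after a failure the cluster stays in maintenance mode and HAE does not act on a half-finished change.

```shell
# with_maintenance_mode COMMAND [ARGS...] (hypothetical helper)
# Enables maintenance mode, runs COMMAND, and disables maintenance mode only if
# COMMAND succeeded. Returns COMMAND's exit status on failure.
with_maintenance_mode() {
  crm configure property maintenance-mode=true || return 1
  "$@"
  local rc=$?
  if [ "$rc" -ne 0 ]; then
    echo "command failed (exit $rc); cluster left in maintenance mode" >&2
    return "$rc"
  fi
  crm configure property maintenance-mode=false
}

# Example (as root, on a cluster node):
#   with_maintenance_mode vi /sapmnt/ascs_ha.cfg
```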

Before stopping or restarting a node, stop the cluster service manually:

systemctl stop pacemaker

After the ECS is started or restarted, run the following command to start the cluster service:

systemctl start pacemaker
