MRS Job Introduction

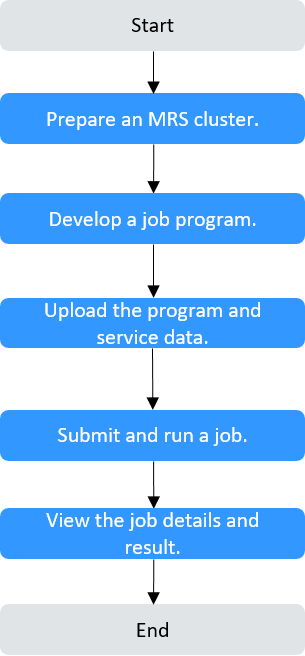

After an MRS cluster is created, you can develop upper-layer applications or scripts based on service requirements to process service data. You can develop job programs or scripts based on big data component capabilities and actual service requirements, upload the programs or scripts together with service data to the cloud platform, and run the programs or scripts for specific services.

- MRS clusters enable online job management. You can create and run jobs on the MRS console. The MRS service supports MapReduce, Spark, Hive SQL, and Spark SQL jobs. You can also use the cluster client to submit jobs to a big data component server.

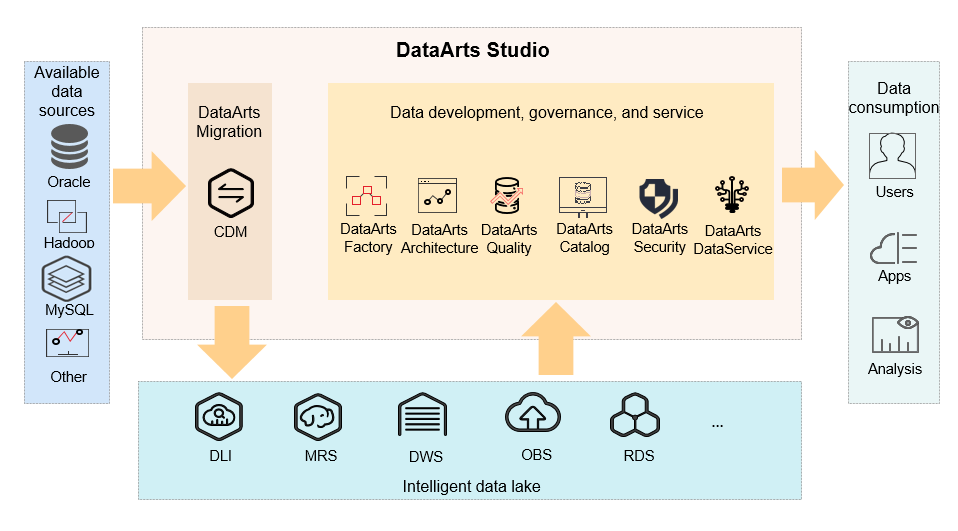

- DataArts Studio provides a one-stop big data collaboration development environment and fully managed big data scheduling capabilities. With DataArts Studio, you can develop and debug MRS Hive SQL/Spark SQL scripts online, develop MRS application jobs by performing drag-and-drop operations, and migrate and integrate data between MRS and other heterogeneous data sources.

DataArts Studio and MRS can be used together to build a full-link big data solution encompassing data ingestion, processing, and analytics.

- During enterprise data lake building, DataArts Studio can use the data integration module to collect data from multi-source heterogeneous systems, use the data development module to compile Hive, Spark, and Flink scripts and submit the scripts to the MRS cluster for distributed computing, and use MRS HDFS or HBase to store massive amounts of raw data. Meanwhile, the data governance function provided by DataArts Studio can also be used to manage metadata, monitors data quality, and traces data lineage.

- During real-time data processing, DataArts Studio can orchestrate streaming jobs, connect to MRS Kafka to obtain real-time data streams, and perform real-time data cleaning and aggregation using the MRS Spark Streaming or Flink cluster. The results are stored in the MRS Hive data warehouse. The report module of DataArts Studio then performs dynamic and visualized analysis based on the results.

- During offline batch processing, DataArts Studio can schedule MRS Hadoop MapReduce jobs to complete ETL processing of large-scale data, such as transaction reconciliation in the financial industry and device log analysis in the manufacturing industry. In addition, you can use the intelligent data modeling function of DataArts Studio to build business models for data stored in MRS, achieving integrated operation of data value mining and compliance governance.

Data processed by MRS jobs is usually stored in OBS or HDFS. Before creating a job, you need to upload the data to be analyzed to an OBS file system or the HDFS in the MRS cluster. The MRS console also allows you to quickly import data from OBS to HDFS. HDFS and OBS can store compressed data in .bz2 and .gz formats.

MRS clusters support the following job types:

- MapReduce can quickly process large-scale data in parallel. It is a distributed data processing model and execution environment. MRS supports the submission of MapReduce JAR programs.

- Spark is a distributed in-memory computing framework. MRS supports SparkSubmit, Spark Script, and Spark SQL jobs.

- SparkSubmit: You can submit Spark JAR and Spark Python programs, execute the Spark Application, and compute and process user data.

- SparkScript: You can submit SparkScript scripts and batch execute Spark SQL statements.

- Spark SQL: You can use Spark SQL statements (similar to SQL statements) to query and analyze user data in real time.

- Hive is an open-source data warehouse based on Hadoop. MRS allows you to submit HiveScript scripts and directly execute Hive SQL statements.

- Flink is a distributed big data processing engine that can perform stateful computations over both unbounded and bounded data streams.

- Hadoop Streaming works similarly to a standard Hadoop job, where you can define the input and output HDFS paths, as well as the mapper and reducer executable programs.

User Permissions Required for Running Jobs on the MRS Console

Before submitting a job on the MRS console, you need to synchronize IAM users to the user system of the MRS cluster. Whether a synchronized IAM user has the permission to submit jobs depends on the IAM policy associated with the user during IAM synchronization. For details about the job submission policy, see Table 1 in Synchronizing IAM Users to MRS.

When a user submits a job that involves the resource usage of a specific component, such as accessing HDFS directories and Hive tables, the FusionInsight Manager administrator (for example, user admin) must grant the required permissions to the user.

- Log in to FusionInsight Manager of the cluster as user admin. For details, see Accessing MRS Manager.

- Add the role of the component whose permission is required by the user. For details, see Managing MRS Cluster Roles.

- Change the user group to which the user who submits the job belongs and add the new component role to the user group. For details, see Managing MRS Cluster User Groups.

After the component role associated with the user group to which the user belongs is modified, it takes at least 5 minutes for the role permissions to take effect.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot