ALM-14022 NameNode Average RPC Queuing Time Exceeds the Threshold

Alarm Description

The system checks the average RPC queuing time of NameNode every 30 seconds and compares it with the threshold (default value: 200 ms). This alarm is generated when the average RPC queuing time exceeds the threshold in a number of consecutive checks (10 by default).

To change the threshold, choose O&M > Alarm > Thresholds > HDFS.

When Trigger Count is 1, this alarm is cleared when the average RPC queuing time of NameNode is less than or equal to the threshold. When Trigger Count is greater than 1, this alarm is cleared when the average RPC queuing time of NameNode is less than or equal to 90% of the threshold.
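The trigger and clear rules above can be modeled as a small state machine. The following is a minimal sketch, not the product's implementation. It assumes one sample per 30-second check and assumes that a sample at or below the threshold resets the consecutive-exceedance count; `QueueTimeAlarm` and its methods are hypothetical names used only for illustration.

```python
class QueueTimeAlarm:
    """Hypothetical model of the ALM-14022 trigger/clear rules (illustration only)."""

    def __init__(self, threshold_ms: float = 200.0, trigger_count: int = 10) -> None:
        self.threshold_ms = threshold_ms      # default threshold: 200 ms
        self.trigger_count = trigger_count    # default Trigger Count: 10
        self.active = False
        self._consecutive = 0                 # consecutive checks above the threshold

    def clear_bound(self) -> float:
        # Trigger Count 1: clear at or below the threshold; otherwise at or below 90% of it.
        return self.threshold_ms if self.trigger_count == 1 else 0.9 * self.threshold_ms

    def observe(self, avg_queue_time_ms: float) -> bool:
        """Feed one 30-second sample and return whether the alarm is active afterwards."""
        if self.active:
            if avg_queue_time_ms <= self.clear_bound():
                self.active = False
            return self.active
        if avg_queue_time_ms > self.threshold_ms:
            self._consecutive += 1
        else:
            self._consecutive = 0             # assumption: a normal sample resets the count
        if self._consecutive >= self.trigger_count:
            self.active = True
            self._consecutive = 0
        return self.active


alarm = QueueTimeAlarm()
states = [alarm.observe(250.0) for _ in range(10)]
print(states[-1])            # raised on the 10th consecutive sample above 200 ms
print(alarm.observe(190.0))  # still active: 190 ms is above 90% of 200 ms
```

With the default settings, the alarm can therefore only be raised after about 5 minutes (10 checks x 30 seconds) of sustained high queuing time, and with Trigger Count greater than 1 the gap between the raise and clear bounds prevents the alarm from flapping around the threshold.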

Alarm Attributes

| Alarm ID | Alarm Severity | Auto Cleared |
|---|---|---|
| 14022 | Major | Yes |

Alarm Parameters

| Parameter | Description |
|---|---|
| Source | Specifies the cluster for which the alarm is generated. |
| ServiceName | Specifies the service for which the alarm is generated. |
| RoleName | Specifies the role for which the alarm is generated. |
| HostName | Specifies the host for which the alarm is generated. |
| NameServiceName | Specifies the NameService for which the alarm is generated. |
| Trigger condition | Specifies the threshold for triggering the alarm. If the current metric value exceeds this threshold, the alarm is generated. |

Impact on the System

NameNode cannot process RPC requests from HDFS clients, upper-layer services that depend on HDFS, and DataNodes in a timely manner. As a result, services that access HDFS run slowly, or the HDFS service becomes unavailable.

Possible Causes

- The CPU performance of the NameNode nodes is insufficient, so NameNode cannot process messages in a timely manner.

- The memory configured for NameNode is too small, and the JVM stalls because of frequent full garbage collections.

- NameNode parameters are not configured properly, so NameNode cannot make full use of the system's performance.

- The volume of service requests that access HDFS is too large, overloading NameNode.

Handling Procedure

Obtain alarm information.

- On the FusionInsight Manager portal, choose O&M > Alarm > Alarms. In the alarm list, click the alarm.

- View the alarm details. Obtain the alarm generation time from Generated, the host name of the NameNode involved in this alarm from HostName under Location, and the name of the NameService involved in this alarm from NameServiceName under Location.

Check whether the threshold is too small.

- Check the status of the services that depend on HDFS. Check whether the services run slowly or task execution times out.

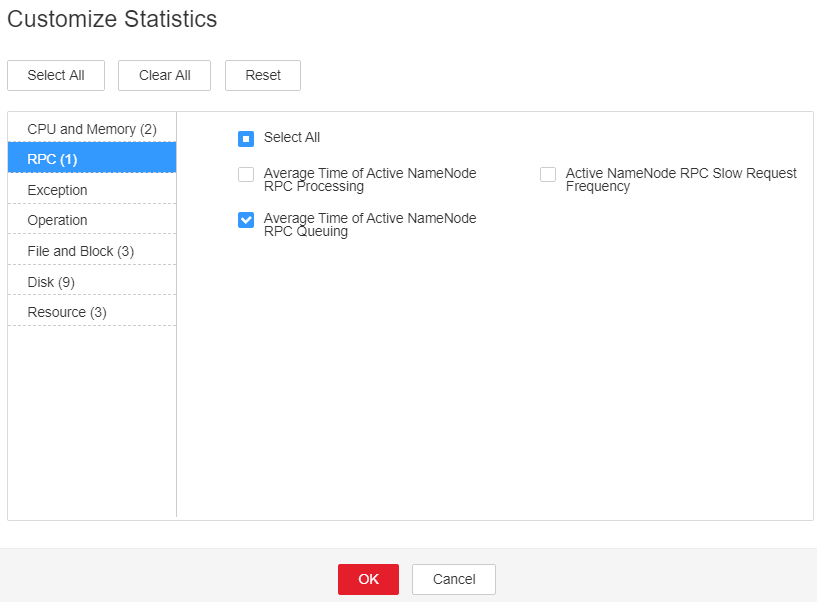

- On the FusionInsight Manager homepage, choose Cluster > Services > HDFS. Click the drop-down menu in the upper right corner of the Chart area, choose Customize, select Average Time of Active NameNode RPC Queuing, and click OK.

Figure 1 Average Time of Active NameNode RPC Queuing

- On the Average Time of Active NameNode RPC Queuing monitoring page, obtain the current value for the NameService involved in this alarm.

- On the FusionInsight Manager homepage, choose O&M > Alarm > Thresholds > HDFS. Locate Average Time of Active NameNode RPC Queuing and click Modify in the Operation column of the default rule. On the Modify Rule page that is displayed, change Threshold to 150% of the monitored value and click OK to save the new threshold.

- Wait for 1 minute and then check whether the alarm is automatically cleared.

- If yes, no further action is required.

- If no, go to Step 8 (check whether the CPU performance of the NameNode node is sufficient).
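The threshold adjustment in this check is simple arithmetic, but it also shifts the clearing bound described in the Alarm Description. The following minimal sketch computes both values; `rebased_threshold` is a hypothetical helper, and the monitored value is whatever you read from the monitoring page.

```python
def rebased_threshold(monitored_ms: float, trigger_count: int = 10) -> tuple[float, float]:
    """Return (new_threshold, clear_bound) when Threshold is set to 150% of the monitored value."""
    if monitored_ms <= 0:
        raise ValueError("the monitored queuing time must be positive")
    new_threshold = 1.5 * monitored_ms
    # Trigger Count 1: clear at or below the threshold; otherwise at or below 90% of it.
    clear_bound = new_threshold if trigger_count == 1 else 0.9 * new_threshold
    return new_threshold, clear_bound


print(rebased_threshold(240.0))  # new threshold 360 ms, clears at or below about 324 ms
```

For example, if the monitoring page shows 240 ms, the new threshold is 360 ms, and with the default Trigger Count the alarm clears only once the queuing time drops to about 324 ms or less.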

Check whether the CPU performance of the NameNode node is sufficient.

- On the FusionInsight Manager portal, choose O&M > Alarm > Alarms and check whether ALM-12016 CPU Usage Exceeds the Threshold is generated.

- If it is, rectify the fault by following the handling procedure of ALM-12016 CPU Usage Exceeds the Threshold.

- Wait for 10 minutes and check whether alarm 14022 is automatically cleared.

- If yes, no further action is required.

- If no, go to Step 11 (check whether the memory of the NameNode node is too small).

Check whether the memory of the NameNode node is too small.

- On the FusionInsight Manager portal, choose O&M > Alarm > Alarms and check whether ALM-14007 NameNode Heap Memory Usage Exceeds the Threshold is generated.

- If it is, rectify the fault by following the handling procedure of ALM-14007 NameNode Heap Memory Usage Exceeds the Threshold.

- Wait for 10 minutes and check whether alarm 14022 is automatically cleared.

- If yes, no further action is required.

- If no, go to Step 14 (check whether NameNode parameters are configured properly).

Check whether NameNode parameters are configured properly.

- On the FusionInsight Manager homepage, choose Cluster > Services > HDFS > Configurations > All Configurations. Search for parameter dfs.namenode.handler.count and view its value.

If the value is less than or equal to 128, change it to 128. If the value is greater than 128 but less than 192, change it to 192.

- Search for parameter ipc.server.read.threadpool.size and view its value.

If the value is less than 5, change it to 5.

- Click Save and then OK to save the settings.

- On the Instance page of HDFS, select the standby NameNode of the NameService involved in this alarm and choose More > Restart Instance. Enter the required password and click OK. Wait until the standby NameNode starts.

Services are not affected after the standby NameNode is restarted.

- On the Instance page of HDFS, select the active NameNode of the NameService involved in this alarm and choose More > Restart Instance. Enter the required password and click OK. Wait until the active NameNode starts.

- Wait for 1 hour and then check whether the alarm is automatically cleared.

- If yes, no further action is required.

- If no, go to Step 20 (check whether the HDFS workload has changed).
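The value rules for dfs.namenode.handler.count and ipc.server.read.threadpool.size in the steps above can be expressed as the following minimal sketch. The function names and the plain dictionary of current values are hypothetical; the actual changes are still made on the All Configurations page. Values of dfs.namenode.handler.count at or above 192 are left unchanged because the procedure gives no rule for them.

```python
def recommended_handler_count(current: int) -> int:
    """dfs.namenode.handler.count: <= 128 -> 128; 129 to 191 -> 192; otherwise unchanged."""
    if current <= 128:
        return 128
    if current < 192:
        return 192
    return current


def recommended_reader_threads(current: int) -> int:
    """ipc.server.read.threadpool.size: raise values below 5 to 5."""
    return max(current, 5)


def tuning_changes(config: dict[str, int]) -> dict[str, int]:
    """Return only the parameters whose recommended value differs from the current one."""
    rules = {
        "dfs.namenode.handler.count": recommended_handler_count,
        "ipc.server.read.threadpool.size": recommended_reader_threads,
    }
    changes: dict[str, int] = {}
    for name, rule in rules.items():
        if name in config:
            new_value = rule(config[name])
            if new_value != config[name]:
                changes[name] = new_value
    return changes


print(tuning_changes({"dfs.namenode.handler.count": 64, "ipc.server.read.threadpool.size": 1}))
```

An empty result means no restart is needed for these two parameters, so the rolling restart (standby NameNode first, then active) can be skipped.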

Check whether the HDFS workload has changed, and reduce it if necessary.

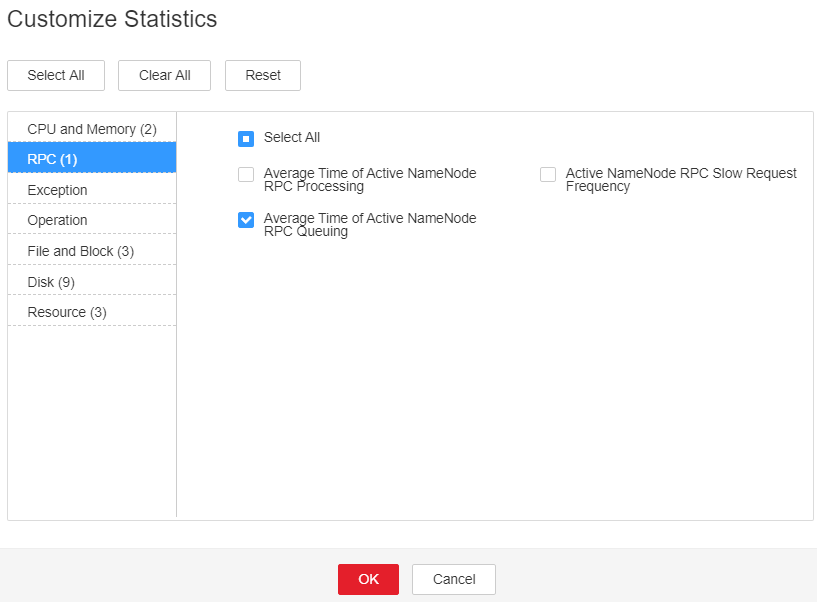

- On the FusionInsight Manager homepage, choose Cluster > Services > HDFS. Click the drop-down menu in the upper right corner of the Chart area, choose Customize > RPC, select Average Time of Active NameNode RPC Queuing, and click OK.

Figure 2 Average Time of Active NameNode RPC Queuing

- Click the icon. The Details page is displayed.

- Set the monitoring data display period to run from 5 days before the alarm generation time to the alarm generation time, and click OK.

- On the Average RPC Queuing Time monitoring page, check whether there is a point in time at which the queuing time increases abruptly.

- If there is, check whether a new task that frequently accesses HDFS started at that point in time and whether its access frequency can be reduced.

- If a Balancer task started at that point in time, stop the task or specify nodes for it to reduce the HDFS workload.

- Wait for 1 hour and then check whether the alarm is automatically cleared.

- If yes, no further action is required.

- If no, go to Step 27 (collect fault information).
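Locating the abrupt increase is done by eye on the Details page. If you have the 5-day data as (time, value) pairs, the following sketch shows one possible way to automate the check. It is a hypothetical heuristic, not a product feature: a sample counts as abrupt when it exceeds `factor` times the median of the `window` samples before it. The data format, the function name, and the default values of `window` and `factor` are all assumptions.

```python
from datetime import datetime, timedelta
from statistics import median


def find_abrupt_increase(samples, alarm_time, window=12, factor=3.0):
    """Return the time of the first abrupt increase in the 5-day display period, or None.

    samples: (datetime, avg_queue_time_ms) tuples sorted by time (hypothetical export format).
    """
    start = alarm_time - timedelta(days=5)        # same period as the Details page setting
    period = [(t, v) for t, v in samples if start <= t <= alarm_time]
    for i in range(window, len(period)):
        baseline = median(v for _, v in period[i - window:i])
        if baseline > 0 and period[i][1] > factor * baseline:
            return period[i][0]
    return None


alarm = datetime(2024, 1, 10, 12, 0)              # hypothetical alarm generation time
base = alarm - timedelta(hours=10)
data = [(base + timedelta(minutes=5 * i), 20.0 if i < 60 else 250.0) for i in range(121)]
print(find_abrupt_increase(data, alarm))          # time at which the jump from 20 ms to 250 ms occurs
```

A median baseline is used instead of a mean so that a single earlier outlier does not mask a later sustained jump; the returned time is the point to compare against task start times and Balancer runs.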

Collect fault information.

- On the FusionInsight Manager portal, choose O&M > Log > Download.

- Select HDFS in the required cluster from Service.

- Click the icon in the upper right corner and select a time span starting 10 minutes before and ending 10 minutes after the alarm generation time. Then, click Download to collect the logs.

- Contact the O&M personnel and send the collected logs.
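The log time span is the alarm generation time plus or minus 10 minutes. The following minimal sketch computes it; `log_collection_window` is a hypothetical helper, and the alarm time in the usage line is an example value. Using `datetime` arithmetic avoids mistakes when the window crosses an hour or midnight boundary.

```python
from datetime import datetime, timedelta


def log_collection_window(alarm_time: datetime, margin_minutes: int = 10) -> tuple[datetime, datetime]:
    """Return (start, end) covering margin_minutes before and after the alarm generation time."""
    margin = timedelta(minutes=margin_minutes)
    return alarm_time - margin, alarm_time + margin


start, end = log_collection_window(datetime(2024, 1, 10, 23, 55))  # example alarm time
print(start.strftime("%Y-%m-%d %H:%M:%S"), "to", end.strftime("%Y-%m-%d %H:%M:%S"))
```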

Alarm Clearance

After the fault is rectified, the system automatically clears this alarm.

Related Information

None
