Horizontal Pod Autoscaler (HPA)

A HorizontalPodAutoscaler (HPA) is a resource object that dynamically scales the number of pods in a Deployment based on specified metrics, so that services running on the Deployment can adapt to changes in those metrics. HPA automatically increases or decreases the number of pods according to the policy you configure.

Constraints

Each pod in CCI 2.0 runs in an environment where system components are installed. These components consume some resources, so the resource usage a pod can reach may be lower than its expected limits, which can affect the trigger conditions of the HPA policy you configure. To avoid this, refer to Reserved System Overhead.

Creating and Managing an HPA Policy on the Console

- Log in to the CCI 2.0 console.

- In the navigation pane, choose Workloads. On the Deployments tab, locate the target Deployment and click its name.

- On the Deployment details page, click the Auto Scaling tab.

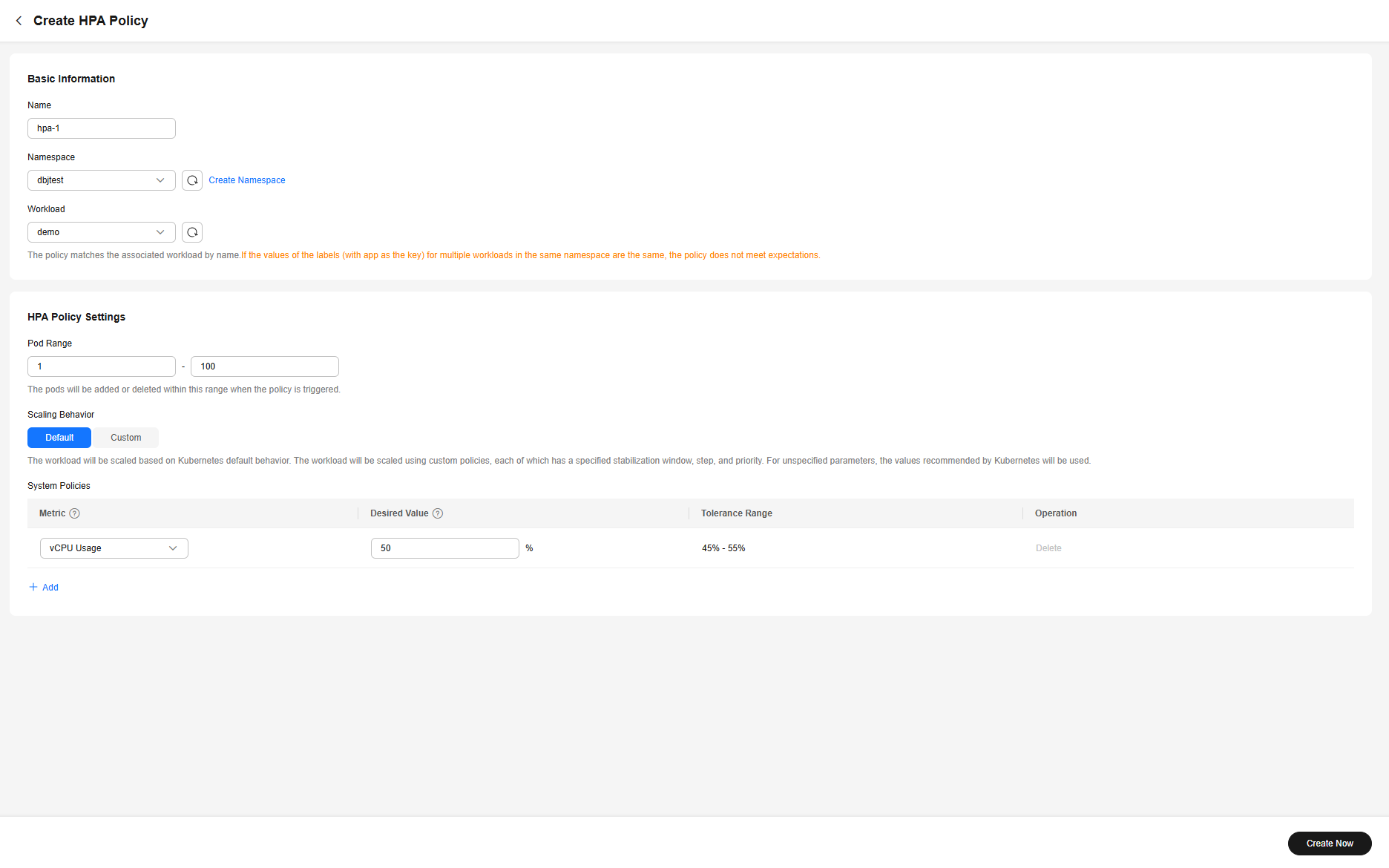

- Click Create HPA Policy and complete the settings as prompted. For details about the parameters, see Table 1.

Table 1 Parameters for creating an HPA policy

| Parameter | Description |
| --- | --- |
| Name | Enter an HPA policy name. |
| Namespace | Select a namespace. If you need to create a namespace, click Create Namespace. |
| Workload | CCI automatically matches the associated workload. |
| Pod Range | Enter the minimum and maximum numbers of pods that HPA can scale the workload to. |
| Scaling Behavior | Default: Workloads are scaled using the Kubernetes default behavior. Custom: Workloads are scaled using custom policies such as the stabilization window, steps, and priorities. Unspecified parameters use the values recommended by Kubernetes. |
| System Policies | Select the required metrics and set the desired values. |


- Optional: Click Update HPA Policy or Delete HPA Policy to update or delete a created HPA policy.

Creating an HPA Policy Using a YAML File

- If spec.metrics.resource.target.type is set to Utilization, you must specify resource requests when creating the workload.

- If spec.metrics.resource.target.type is set to Utilization, the resource usage is calculated as follows: Resource usage = Used resource/Available pod flavor. To determine the actual flavor of a pod, see Pod Flavor.
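As a rough illustration of the formula above, the following Python sketch computes the utilization of a single pod and compares it with the policy threshold. The function name and the sample figures (a 2048 MiB memory flavor with 1229 MiB in use) are hypothetical and chosen only for illustration. Actual flavors are described in Pod Flavor.

```python
def utilization_percent(used: float, pod_flavor: float) -> float:
    """Resource usage = Used resource / Available pod flavor, as a percentage."""
    if pod_flavor <= 0:
        raise ValueError("pod_flavor must be positive")
    return used / pod_flavor * 100


# Hypothetical sample values, both in MiB (not taken from CCI documentation).
used_memory_mib = 1229
flavor_memory_mib = 2048
target_utilization = 50  # corresponds to averageUtilization in the HPA policy

usage = utilization_percent(used_memory_mib, flavor_memory_mib)
print(f"memory utilization: {usage:.1f}%")
print("above target" if usage > target_utilization else "at or below target")
```

Both values must use the same unit, because the result is a ratio.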

A properly configured auto scaling policy specifies the metric, threshold, and step. It removes the need to adjust resources manually in response to service changes and traffic bursts, which reduces both manual effort and resource consumption. Currently, CCI supports only one type of auto scaling policy.

- Log in to the CCI 2.0 console.

- In the navigation pane, choose Workloads. On the Deployments tab, locate the target Deployment and click its name.

- On the Auto Scaling tab, click Create from YAML to configure a policy.

The following describes the auto scaling policy file format:

- Resource description in the hpa.yaml file

  ```yaml
  kind: HorizontalPodAutoscaler
  apiVersion: cci/v2
  metadata:
    name: nginx                      # HPA name
    namespace: test                  # HPA namespace
  spec:
    scaleTargetRef:                  # Reference to the resource to be automatically scaled
      kind: Deployment               # Type of the target resource, for example, Deployment
      name: nginx                    # Name of the target resource
      apiVersion: cci/v2             # Version of the target resource
    minReplicas: 1                   # Minimum number of replicas for HPA scaling
    maxReplicas: 5                   # Maximum number of replicas for HPA scaling
    metrics:
      - type: Resource               # Resource metrics are used.
        resource:
          name: memory               # Resource name, for example, cpu or memory
          target:
            type: Utilization        # Metric type. The value can be Utilization (percentage) or AverageValue (absolute value).
            averageUtilization: 50   # Target average utilization (%). For example, scale-out is triggered when memory usage exceeds 50%.
    behavior:
      scaleUp:
        stabilizationWindowSeconds: 30   # Scale-out stabilization duration, in seconds
        policies:
          - type: Pods               # Scale by an absolute number of pods
            value: 1                 # At most 1 pod is added per period
            periodSeconds: 30        # Window, in seconds, over which the limit above applies
      scaleDown:
        stabilizationWindowSeconds: 30   # Scale-in stabilization duration, in seconds
        policies:
          - type: Percent            # Scale by a percentage of the existing pods
            value: 50                # At most 50% of the pods are removed per period
            periodSeconds: 30        # Window, in seconds, over which the limit above applies
  ```

- Resource description in the hpa.json file

  JSON does not support comments. The fields have the same meanings as in the hpa.yaml file.

  ```json
  {
    "kind": "HorizontalPodAutoscaler",
    "apiVersion": "cci/v2",
    "metadata": {
      "name": "nginx",
      "namespace": "test"
    },
    "spec": {
      "scaleTargetRef": {
        "kind": "Deployment",
        "name": "nginx",
        "apiVersion": "cci/v2"
      },
      "minReplicas": 1,
      "maxReplicas": 5,
      "metrics": [
        {
          "type": "Resource",
          "resource": {
            "name": "memory",
            "target": {
              "type": "Utilization",
              "averageUtilization": 50
            }
          }
        }
      ],
      "behavior": {
        "scaleUp": {
          "stabilizationWindowSeconds": 30,
          "policies": [
            { "type": "Pods", "value": 1, "periodSeconds": 30 }
          ]
        },
        "scaleDown": {
          "stabilizationWindowSeconds": 30,
          "policies": [
            { "type": "Percent", "value": 50, "periodSeconds": 30 }
          ]
        }
      }
    }
  }
  ```


- Click OK.

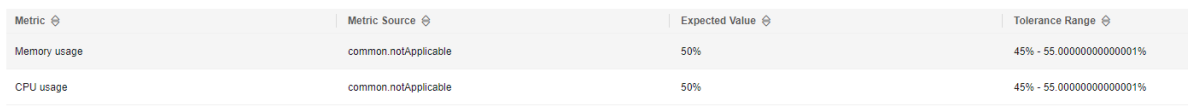

You can view the policy on the Auto Scaling tab.Figure 1 Auto scaling policy

When the trigger condition is met, the auto scaling policy will be executed.
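To make the trigger condition more concrete, the following Python sketch applies the replica calculation documented for the upstream Kubernetes HPA, ceil(currentReplicas × currentMetric / targetMetric), and then the per-period limits from the example policy (at most 1 pod added, at most 50% of pods removed). It assumes that CCI follows the upstream behavior, which this page does not confirm. It is also simplified: it ignores the tolerance band and the stabilization windows. The function names and sample values are hypothetical.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Upstream HPA ratio, clamped to the [min_replicas, max_replicas] range."""
    raw = math.ceil(current * current_util / target_util)
    return max(min_replicas, min(max_replicas, raw))


def apply_rate_limits(current: int, desired: int,
                      up_pods: int = 1, down_percent: int = 50) -> int:
    """Limit a single step: add at most up_pods, remove at most down_percent."""
    if desired > current:
        return min(desired, current + up_pods)
    if desired < current:
        lowest_allowed = math.floor(current * (1 - down_percent / 100))
        return max(desired, lowest_allowed)
    return current


# Hypothetical situation: 2 replicas averaging 80% memory utilization, target 50%.
want = desired_replicas(2, 80, 50, min_replicas=1, max_replicas=5)
step = apply_rate_limits(2, want)
print(want, step)
```

In this example the ratio suggests 4 replicas, but the scale-out policy allows only one additional pod per 30-second period, so the first step goes from 2 to 3 replicas.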
