FAQ

Symptom 1: Pods cannot be scheduled to CCI. When the kubectl get node command is run against the CCE cluster, the output shows that the virtual-kubelet node is in the SchedulingDisabled state.

Cause: CCI resources are sold out, so scheduling to CCI fails. The bursting node is then locked (in the SchedulingDisabled state) for 30 minutes, and during this period pods cannot be scheduled to CCI.

Solution: Use kubectl to check the status of the bursting node in the CCE cluster. If the node is locked, you can unlock it manually instead of waiting for the 30-minute lock to expire.
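The check-and-unlock step can be sketched as a small shell helper. This is a hedged sketch, not the add-on's own procedure: it assumes the bursting node is named virtual-kubelet (as in the kubectl get node output above) and that the lock is the standard Kubernetes spec.unschedulable flag, which kubectl displays as SchedulingDisabled and which kubectl uncordon clears. The function name unlock_if_locked is hypothetical.

```shell
#!/bin/sh
# Sketch only. Assumptions: the bursting node is named "virtual-kubelet"
# and the lock is the standard spec.unschedulable flag that kubectl shows
# as SchedulingDisabled. unlock_if_locked is a hypothetical helper name.

# Unlock the given node only if it is currently marked unschedulable.
unlock_if_locked() {
  node=$1
  # Prints "true" when the node is unschedulable; prints nothing otherwise.
  state=$(kubectl get node "$node" -o jsonpath='{.spec.unschedulable}')
  if [ "$state" = "true" ]; then
    echo "Node $node is SchedulingDisabled; uncordoning it."
    kubectl uncordon "$node"
  else
    echo "Node $node is schedulable; nothing to do."
  fi
}

# Usage:
#   kubectl get node virtual-kubelet
#   unlock_if_locked virtual-kubelet
```

Unlocking the node does not free up CCI capacity. If CCI resources are still sold out, scheduling to CCI can fail again and the node can be locked again.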

Symptom 2: Elastic scheduling to CCI is unavailable.

Cause: The subnet where the CCE cluster resides overlaps with 10.247.0.0/16, the CIDR block reserved for Services in the CCI namespace.

Solution: Change the subnet of the CCE cluster to one that does not overlap with 10.247.0.0/16.
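Before applying a new subnet, you can check it with a standard IPv4 CIDR overlap test: two blocks overlap exactly when their network addresses are equal under the shorter of the two prefix lengths. The POSIX shell sketch here is not a CCE tool. The function names ip_to_int and cidr_overlaps and the example subnet are hypothetical, and only plain IPv4 CIDR notation is handled.

```shell
#!/bin/sh
# Sketch: test whether a subnet overlaps the CCI Service CIDR 10.247.0.0/16.
# ip_to_int, cidr_overlaps, and the example subnet are hypothetical.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  o1=${1%%.*}; rest=${1#*.}
  o2=${rest%%.*}; rest=${rest#*.}
  o3=${rest%%.*}; o4=${rest#*.}
  echo $(( (o1 << 24) + (o2 << 16) + (o3 << 8) + o4 ))
}

# Exit status 0 if the two CIDR blocks overlap, 1 otherwise.
cidr_overlaps() {
  net1=${1%/*}; len1=${1#*/}
  net2=${2%/*}; len2=${2#*/}
  # Compare the network addresses on the shorter (less specific) prefix.
  if [ "$len1" -lt "$len2" ]; then len=$len1; else len=$len2; fi
  mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$net1")
  b=$(ip_to_int "$net2")
  [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Usage with a hypothetical subnet; replace it with your cluster's subnet.
subnet="192.168.0.0/24"
if cidr_overlaps "$subnet" 10.247.0.0/16; then
  echo "$subnet overlaps 10.247.0.0/16; choose another subnet"
else
  echo "$subnet does not overlap 10.247.0.0/16"
fi
```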

Symptom 3: After the bursting add-on is rolled back from 1.5.18 or later to a version earlier than 1.5.18, pods cannot be accessed through the Service.

Cause: After the add-on is upgraded to 1.5.18 or later, the sidecar in each pod newly scaled to CCI is incompatible with add-on versions earlier than 1.5.18. Therefore, after the add-on is rolled back, these pods cannot be accessed normally. Pods that were scaled to CCI while the add-on was still earlier than 1.5.18 are not affected.

Solutions:

- Solution 1: Upgrade the add-on to 1.5.18 or later again.

- Solution 2: Delete the pods that cannot be accessed through the Service and create new ones. New pods scaled to CCI after the rollback can be accessed normally.
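Solution 2 can be sketched with two shell helpers: one lists the pods in a namespace that run on the bursting node, and one deletes the pods you name. This is a hedged sketch under stated assumptions: the bursting node is named virtual-kubelet, and each affected pod is owned by a controller (for example, a Deployment) that creates a replacement after deletion. A bare pod is not recreated, so create it again yourself. The helper names list_burst_pods and delete_pods are hypothetical.

```shell
#!/bin/sh
# Sketch only. Assumptions: the bursting node is named "virtual-kubelet",
# and affected pods are owned by a controller that recreates them.
# list_burst_pods and delete_pods are hypothetical helper names.

BURST_NODE=virtual-kubelet

# Print the names of pods in namespace $1 that run on the bursting node.
list_burst_pods() {
  kubectl get pod -n "$1" \
    --field-selector "spec.nodeName=$BURST_NODE" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'
}

# Delete each pod named after the namespace argument $1.
delete_pods() {
  ns=$1
  shift
  for pod in "$@"; do
    kubectl delete pod "$pod" -n "$ns"
  done
}

# Usage (hypothetical namespace and pod name):
#   list_burst_pods default
#   delete_pods default my-app-5f7c9d-abcde
```

Deleting only the pods that fail Service access, rather than every pod on the node, limits disruption to the workloads that are actually affected.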

Symptom 4: The add-on cannot be uninstalled.

Scenario: The add-on fails to be uninstalled because swr_addr and swr_user were modified incorrectly.

Cause: Uninstalling the add-on depends on gc-jobs. If the gc-jobs image cannot be pulled (for example, because of the incorrect swr_addr and swr_user values), gc-jobs cannot run successfully, and the uninstallation fails.

Solution:

- Log in to the node where kubectl is configured for the CCE cluster, and click Uninstall for the add-on on the CCE console.

- Within 210 seconds after clicking Uninstall, run the following commands on that node.

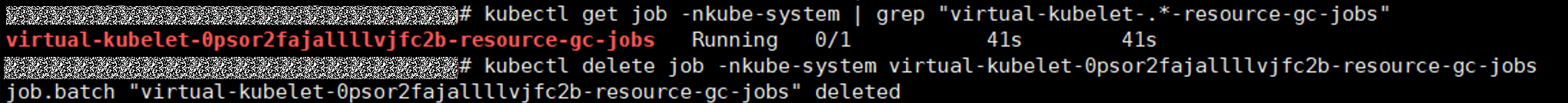

- Delete resource-gc-jobs.

kubectl get job -n kube-system | grep "virtual-kubelet-.*-resource-gc-jobs"

kubectl delete job -n kube-system xxx

(Replace xxx with the job name returned by the first command.)

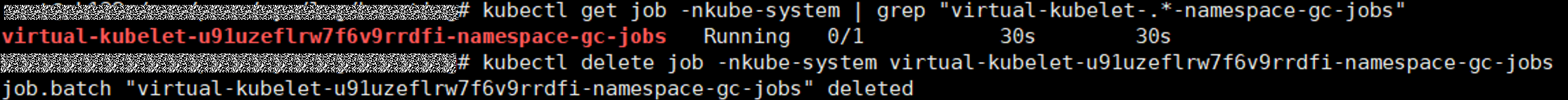

- Delete namespace-gc-jobs.

kubectl get job -n kube-system | grep "virtual-kubelet-.*-namespace-gc-jobs"

kubectl delete job -n kube-system yyy

(Replace yyy with the job name returned by the first command.)


- For other exceptions, submit a service ticket.
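Because both deletions must finish within the 210-second window, they can be combined into one helper. This is a hedged sketch: it reuses the job-name patterns from the steps above, relies on kubectl get -o name printing entries of the form job.batch/&lt;name&gt;, and uses a hypothetical function name, delete_gc_jobs. Run it on the node where kubectl is configured, right after clicking Uninstall.

```shell
#!/bin/sh
# Sketch only: delete the add-on's resource and namespace gc jobs.
# The grep patterns come from the manual steps; delete_gc_jobs is a
# hypothetical helper name.

delete_gc_jobs() {
  for kind in resource namespace; do
    # -o name prints one entry per job, such as job.batch/<job-name>.
    kubectl get job -n kube-system -o name \
      | grep "virtual-kubelet-.*-${kind}-gc-jobs" \
      | while read -r job; do
          kubectl delete -n kube-system "$job"
        done
  done
}

# Usage:
#   delete_gc_jobs
```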
