What Should I Do If Error "HoodieException: Duplicate fileID" Occurs During Data Synchronization to Hudi?

Symptom

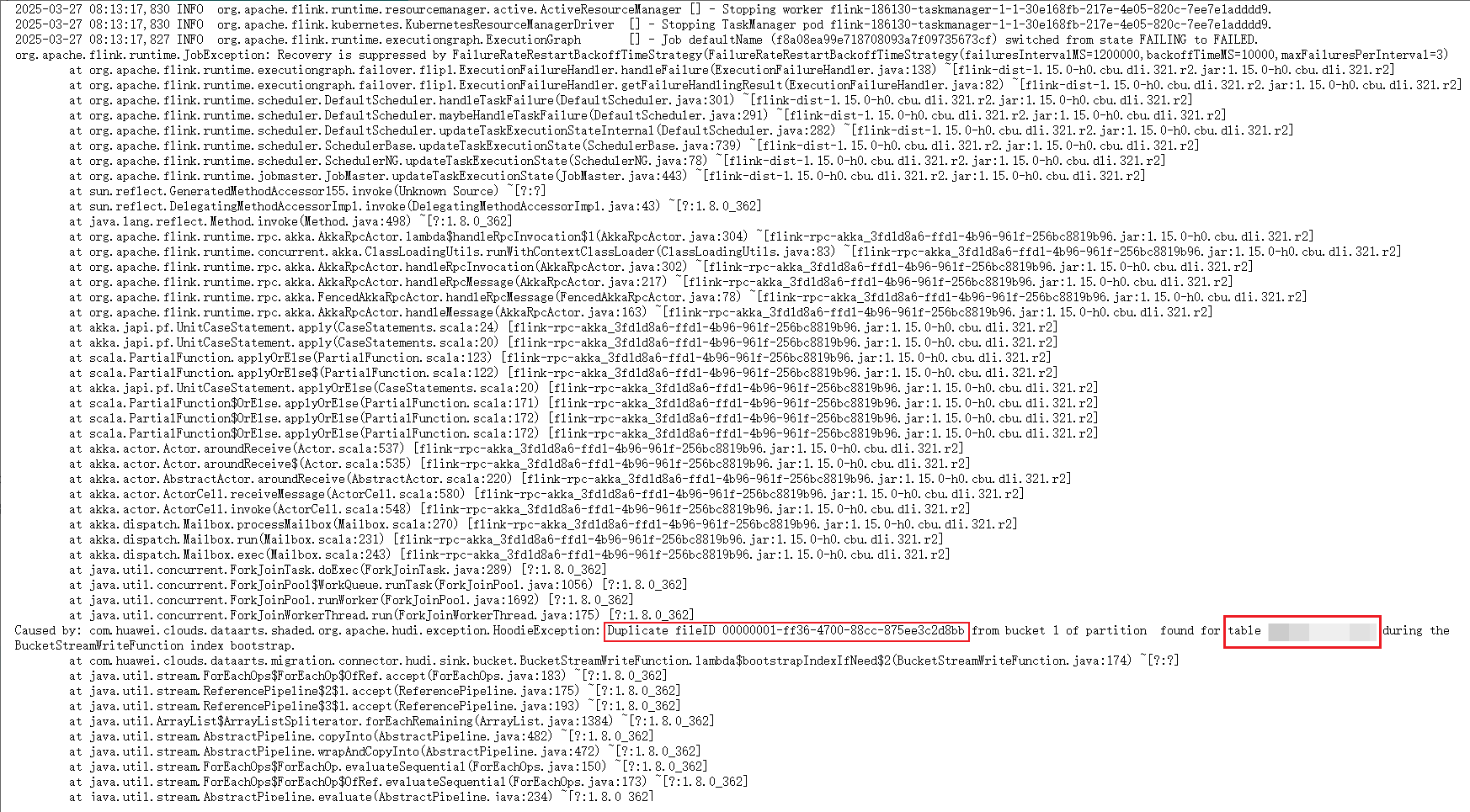

A job that migrates data to a Hudi table whose index type is BUCKET fails. The keyword "HoodieException: Duplicate fileID" appears in both the JobManager and TaskManager logs.

In the Hudi table directory, the same bucket ID in the faulty partition corresponds to different file IDs.

Possible Causes

For the BUCKET index, a Hudi FileGroupId consists of a bucket ID and a UUID, and within a partition each bucket ID must map to exactly one UUID. If a bucket ID maps to multiple UUIDs when new data is written to the partition, the error "Duplicate fileID" is reported. This indicates that there is dirty data in the Hudi table directory.
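As a rough illustration of this condition, the following Python sketch groups the data file names of one partition by bucket ID and reports any bucket that maps to more than one file ID. It assumes that base file names follow the pattern fileId_writeToken_instantTime.parquet and that the bucket ID is the first eight characters of the file ID. Both assumptions are inferred from the example file names in this topic, the function names are hypothetical, and log files are not handled.

```python
from collections import defaultdict


def parse_base_file_name(name):
    """Split '<fileId>_<writeToken>_<instantTime>.<ext>' into its three parts."""
    stem = name.rsplit(".", 1)[0]          # drop the file extension
    file_id, write_token, instant_time = stem.rsplit("_", 2)
    return file_id, write_token, instant_time


def find_conflicting_buckets(file_names):
    """Return {bucket_id: sorted file IDs} for buckets with more than one file ID."""
    ids_by_bucket = defaultdict(set)
    for name in file_names:
        file_id, _, _ = parse_base_file_name(name)
        bucket_id = file_id[:8]            # assumption: first 8 characters = bucket ID
        ids_by_bucket[bucket_id].add(file_id)
    return {b: sorted(ids) for b, ids in ids_by_bucket.items() if len(ids) > 1}


# File names taken from the example in this topic.
files = [
    "00000000-3d29-44d4-b603-47c36a23d406_1-12-45_20250326094529742.parquet",
    "00000000-419f-4468-a249-ca6c3c86b1db-00_0-6-16_20250326172153881.parquet",
]
conflicts = find_conflicting_buckets(files)
for bucket_id, file_ids in conflicts.items():
    print(bucket_id, file_ids)
```

A bucket that appears in the result is a candidate for the manual check of commit records. The sketch only detects the conflict and does not decide which file is dirty.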

Possible causes of dirty data:

- Multiple Spark or Flink processes write the Hudi table at the same time.

- In an MRS cluster of an early version, using the Spark SQL statement insert overwrite or insert into xxx select * from to write data to a Hudi table may generate dirty data files in the directory.

- There are residual historical files in the directory, or dirty data files are migrated to the directory by a file migration job.

Solution

- Back up data.

Use OBS to back up the data in the faulty partition before you manually delete any dirty data from the Hudi table path.

- Confirm the dirty data.

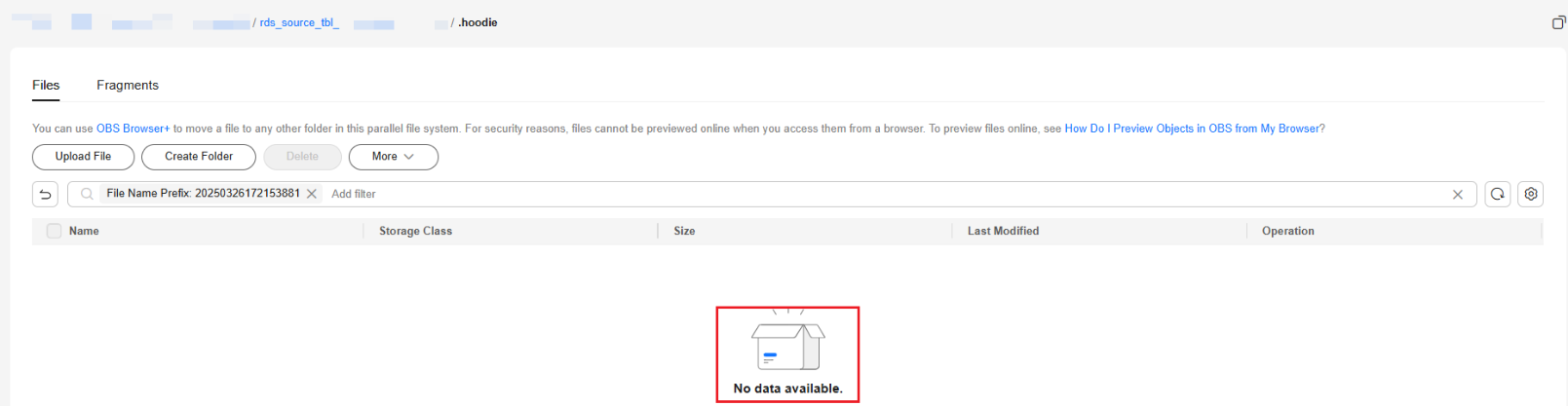

- In the .hoodie directory of the Hudi table, search for the commit times of the two conflicting files shown in Figure 3. The commit time is the timestamp at the end of each file name, in this example [20250326094529742] and [20250326172153881].

If a deltacommit file is found for a timestamp, the corresponding data file is normal. If no commit file is found for a timestamp, the corresponding data file is dirty and can be deleted.

Figure 4 Checking whether to delete conflicting files

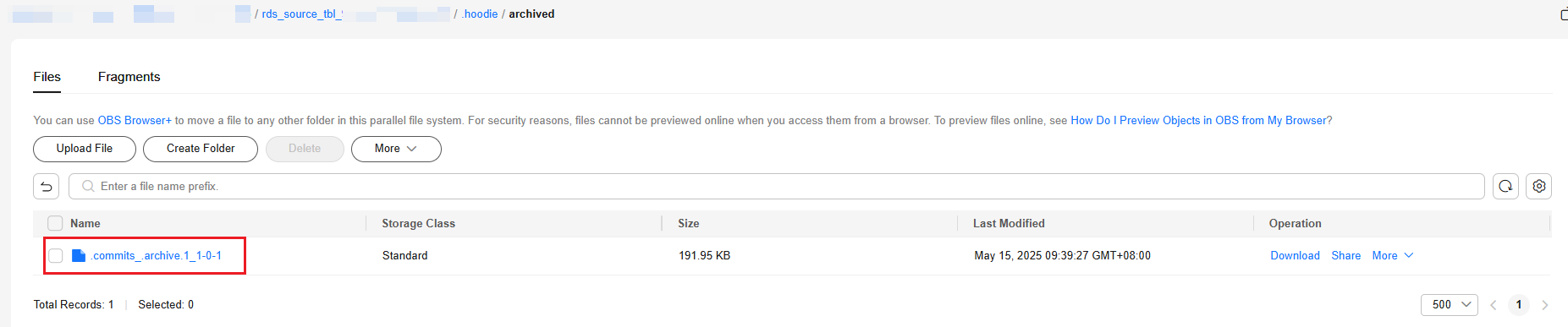

- If no commit record can be found for either of the two timestamps, the commit files may have been archived. In this case, download the archived file in the .hoodie/archive directory and run the vi command to search for commit records. If a commit record can be found, the data file is normal. If no commit record can be found, the data file is dirty and needs to be manually deleted.

Figure 5 Viewing the Hudi commit archived file

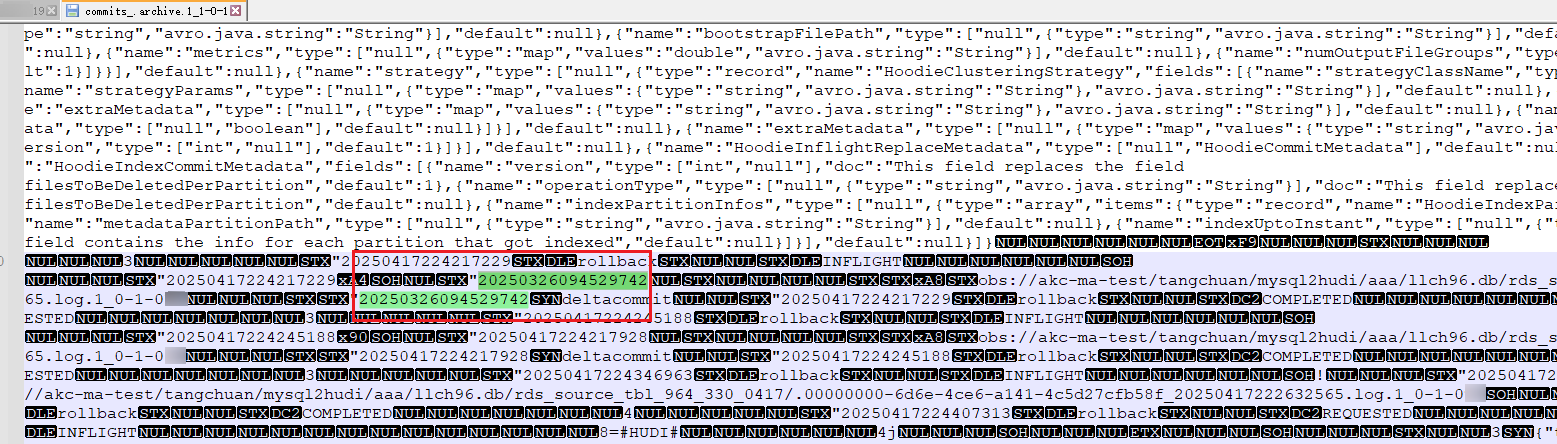

Figure 6 Viewing the Hudi commit archived file using the vi command

- Manually delete the dirty data file.

In this example, a commit record (20250326094529742) can be found for the 00000000-3d29-44d4-b603-47c36a23d406_1-12-45_20250326094529742.parquet file. This file is a normal data file and must be retained.

No commit record associated with timestamp 20250326172153881 can be found for the 00000000-419f-4468-a249-ca6c3c86b1db-00_0-6-16_20250326172153881.parquet file. This file is a dirty data file and needs to be deleted.

You can log in to OBS and manually delete the dirty data file.
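The timestamp check described in this solution can be sketched in Python as follows. The sketch assumes that completed commits appear in the .hoodie directory as files named instantTime.commit, instantTime.deltacommit, or instantTime.replacecommit, which may differ between Hudi versions. The timeline listing below is hypothetical sample data and the function names are illustrative. A file is only marked as suspect, because the archived commit records still need to be checked before anything is deleted.

```python
# Assumption: a completed instant is stored as '<instantTime><suffix>' in .hoodie.
COMPLETED_SUFFIXES = (".commit", ".deltacommit", ".replacecommit")


def committed_instants(timeline_file_names):
    """Collect the instant times that have a completed commit file."""
    instants = set()
    for name in timeline_file_names:
        # Files ending in .requested or .inflight are not completed commits.
        if name.endswith(COMPLETED_SUFFIXES):
            instants.add(name.split(".", 1)[0])
    return instants


def classify_data_files(data_file_names, timeline_file_names):
    """Map each data file to 'normal' (commit found) or 'suspect' (no commit found)."""
    committed = committed_instants(timeline_file_names)
    result = {}
    for name in data_file_names:
        # The instant time is the last '_'-separated field before the extension.
        instant = name.rsplit(".", 1)[0].rsplit("_", 1)[-1]
        result[name] = "normal" if instant in committed else "suspect"
    return result


# Hypothetical listing of the .hoodie directory for this example.
timeline = [
    "20250326094529742.deltacommit.requested",
    "20250326094529742.deltacommit.inflight",
    "20250326094529742.deltacommit",
]
data_files = [
    "00000000-3d29-44d4-b603-47c36a23d406_1-12-45_20250326094529742.parquet",
    "00000000-419f-4468-a249-ca6c3c86b1db-00_0-6-16_20250326172153881.parquet",
]
status = classify_data_files(data_files, timeline)
for name, state in status.items():
    print(state, name)
```

For a file reported as suspect, confirm that the archived commit files also contain no record for its timestamp before deleting it.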
