Configuring Spark Dynamic Masking

Scenarios

Dynamic masking is a security feature that partially or fully masks sensitive data based on user permissions or roles, so that unauthorized users cannot see the actual values.

Enabling Spark dynamic masking allows data within masked columns to be used for computations while keeping the actual values concealed in the output. The cluster's masking policy is dynamically propagated based on data lineage, ensuring that sensitive information remains protected throughout the query lifecycle. This approach optimizes data utility without compromising privacy.

Notes and Constraints

- This section applies only to MRS 3.3.1-LTS or later.

- The dynamic data masking feature cannot be enabled if jobs are submitted on the console.

- Dynamic data masking is not applicable to Hudi tables.

- Dynamic data masking is not supported for non-SQL access methods.

- Dynamic data masking is not supported for direct HDFS read/write operations.

- Dynamic data masking does not support complex data types such as array, map, and struct.

- Dynamic data masking is supported only for Spark jobs submitted via spark-beeline using a JDBC connection.

- During masking policy transfer, if the incoming policy conflicts with an existing policy on the target table, the existing policy will be overridden and marked as Custom: ***.

- Dynamic data masking is supported only for the following data types: int, char, varchar, date, decimal, float, bigint, timestamp, tinyint, smallint, double, string, and binary. When masking policies are applied to data types such as int, date, decimal, float, bigint, timestamp, tinyint, smallint, and double, query results from Spark Beeline may not behave as expected. In some cases, the output may not accurately reflect the original values, leading to inconsistencies. To ensure that query results align with masking policy expectations, it is recommended that you use the Nullify masking policy.

- For data types not supported by dynamic data masking policies, or when masking logic is transferred to an output column, the system defaults to the Nullify masking policy.
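The data type rules in the notes above can be summarized as a small lookup. The following is a minimal sketch that only restates those rules; the function name `masking_advice` and its return labels are hypothetical and are not part of MRS, Spark, or Ranger.

```shell
# Illustrative only: encodes the data type rules from the notes above.
# masking_advice and its labels are hypothetical names, not an MRS or Spark API.
masking_advice() {
  # Lowercase the type and drop any parameters, e.g. "DECIMAL(10,2)" -> "decimal".
  ma_base=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  ma_base=${ma_base%%"("*}
  ma_base=${ma_base%%"<"*}
  case "$ma_base" in
    char|varchar|string|binary)
      # Supported types with no special recommendation in the notes.
      echo "supported" ;;
    int|date|decimal|float|bigint|timestamp|tinyint|smallint|double)
      # Supported, but the notes recommend the Nullify policy for these types.
      echo "nullify-recommended" ;;
    *)
      # Not in the supported list: the notes say Nullify is applied by default.
      echo "nullify-default" ;;
  esac
}

for t in "int" "varchar(32)" "decimal(10,2)" "array<int>"; do
  printf '%s -> %s\n' "$t" "$(masking_advice "$t")"
done
```

A lookup like this can be handy when reviewing a table schema before defining masking policies, since it flags the columns for which Nullify is the safer choice.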

Procedure

- Modify JDBCServer instance parameters. Log in to FusionInsight Manager and choose Cluster > Services > Spark. On the displayed page, click Configurations and then All Configurations, choose JDBCServer(Role), modify the following parameters, save the settings, and restart the Spark service.

- If Ranger is used for authentication, add the following custom parameters in the custom area.

| Parameter | Example Value | Description |
| --- | --- | --- |
| spark.dynamic.masked.enabled | true | Whether to enable dynamic data masking. Enabling this function can enhance data security. true: Dynamic data masking is enabled. false: Dynamic data masking is disabled. |
| spark.ranger.plugin.authorization.enable | true | Whether to enable Ranger authentication. Enabling this function can enhance data access security. If you require fine-grained access control, it is strongly recommended to enable and configure this feature. true: Ranger authentication is enabled. false: Ranger authentication is disabled. |

Modify the following parameters.

| Parameter | Example Value | Description |
| --- | --- | --- |
| spark.ranger.plugin.masking.enable | true | Whether to enable the column masking function of the Ranger plugin. Enabling this function can enhance data security. However, you need to ensure that the Ranger service has been correctly configured and a proper masking policy has been defined. true: The column masking function of the Ranger plugin is enabled. false: The column masking function of the Ranger plugin is disabled. |
| spark.sql.authorization.enabled | true | Whether authorization is enabled for Spark SQL operations. After this function is enabled, Spark executes SQL queries based on user roles and permissions to enforce fine-grained access control. true: Authorization for Spark SQL operations is enabled. false: Authorization for Spark SQL operations is disabled. |

- If you use Hive metadata authentication instead of Ranger authentication, add the following custom parameters in the custom area.

| Parameter | Example Value | Description |
| --- | --- | --- |
| spark.ranger.plugin.use.hive.acl.enable | true | Whether to enable Spark to use Hive ACLs for authorization checks. true: Spark uses Hive ACLs for authorization checks. false: Spark does not use Hive ACLs for authorization checks. |
| spark.dynamic.masked.enabled | true | Whether to enable dynamic data masking. Enabling this function can enhance data security. true: Dynamic data masking is enabled. false: Dynamic data masking is disabled. |
| spark.ranger.plugin.authorization.enable | true | Whether to enable Ranger authentication. Enabling this function can enhance data access security. If you require fine-grained access control, it is strongly recommended to enable and configure this feature. true: Ranger authentication is enabled. false: Ranger authentication is disabled. |

Modify the following parameters.

| Parameter | Example Value | Description |
| --- | --- | --- |
| spark.ranger.plugin.masking.enable | true | Whether to enable the column masking function of the Ranger plugin. true: The column masking function of the Ranger plugin is enabled. false: The column masking function of the Ranger plugin is disabled. |

If you use Hive metadata authentication instead of Ranger authentication and Hive policy initialization has not been completed in Ranger, perform the following operations:

- Enable the Ranger authentication function for Hive and restart Hive and Spark.

- Enable the Ranger authentication function for Spark and restart Spark.

- Disable the Ranger authentication function on Hive and restart Hive.

- Disable the Ranger authentication function on Spark and restart Spark.

- Log in to the Ranger web UI. If the Hive component exists under Hadoop SQL, the Hive policy has been initialized. Otherwise, the Hive policy has not been initialized.

- If your cluster also includes HetuEngine, and you want the corresponding dynamic masking policies in Ranger and HetuEngine to automatically update when Spark transfers a dynamic masking policy, set spark.dynamic.masked.hetu.policy.sync.update.enable to true and change the Ranger user type of built-in user Spark to admin.
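Before restarting the service, it can help to double-check that every parameter from the tables above is set to true. The following is a minimal sketch, assuming you have copied the effective JDBCServer settings into a plain settings file with one `key=value` or `key value` entry per line. The function name `check_masking_params` and the file are hypothetical; the parameters themselves are configured in FusionInsight Manager as described above.

```shell
# Hypothetical helper: lists dynamic masking parameters that are missing or
# not set to "true" in a settings file. Mode is "ranger" or "hive", matching
# the two parameter sets described above. Returns 0 only if all are "true".
check_masking_params() {
  cmp_file=$1
  cmp_mode=$2
  cmp_keys="spark.dynamic.masked.enabled spark.ranger.plugin.authorization.enable spark.ranger.plugin.masking.enable"
  case "$cmp_mode" in
    ranger) cmp_keys="$cmp_keys spark.sql.authorization.enabled" ;;
    hive)   cmp_keys="$cmp_keys spark.ranger.plugin.use.hive.acl.enable" ;;
    *) echo "unknown mode: $cmp_mode" >&2; return 2 ;;
  esac
  cmp_rc=0
  for cmp_key in $cmp_keys; do
    # Take the last value assigned to the key, ignoring comment lines.
    cmp_value=$(awk -v k="$cmp_key" '
      /^[ \t]*#/ { next }
      {
        line = $0
        sub(/^[ \t]+/, "", line)
        n = match(line, /[ \t=]+/)
        if (n > 0 && substr(line, 1, n - 1) == k) {
          v = substr(line, n + RLENGTH)
          sub(/[ \t]+$/, "", v)
          found = v
        }
      }
      END { print found }' "$cmp_file")
    if [ "$cmp_value" != "true" ]; then
      echo "not enabled: $cmp_key"
      cmp_rc=1
    fi
  done
  return $cmp_rc
}

# Example run against a throwaway file (the values here are illustrative).
tmp_conf=$(mktemp)
printf '%s\n' 'spark.dynamic.masked.enabled=true' \
              'spark.ranger.plugin.masking.enable=true' > "$tmp_conf"
check_masking_params "$tmp_conf" ranger || echo "review the parameters listed above"
rm -f "$tmp_conf"
```

The check only compares values against true. It does not confirm that the JDBCServer instance has been restarted or that the settings have taken effect.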


- Log in to the Spark client node and run the following commands.

Navigate to the directory where the client is installed.

cd Client installation directory

Load the environment variables.

source bigdata_env

Load the component environment variables.

source Spark/component_env

In security mode, additionally perform the security authentication. Authentication is not required in normal mode.

kinit test

Enter the password for authentication. (Change the password upon your first login.)

- Submit a task via Spark Beeline to create a Spark table.

spark-beeline

create table sparktest(a int, b string);

insert into sparktest values (1,"test01"), (2,"test02");

- Configure a masking policy for the sparktest table and check whether the masking takes effect. For details, see Adding a Ranger Access Permission Policy for Spark2x.

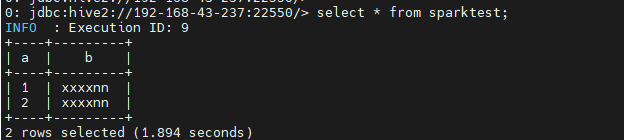

select * from sparktest;

- Verify the transfer of the data masking policy.

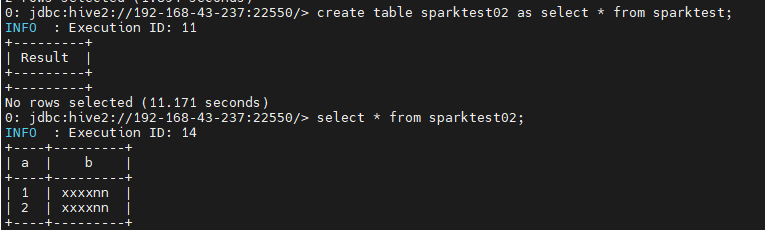

create table sparktest02 as select * from sparktest;

select * from sparktest02;

The presence of the preceding information confirms that dynamic masking is successfully configured. After logging in to the Ranger masking policy management page, you can view the automatically generated masking policy for the sparktest02 table.
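The verification queries can also be replayed from a file instead of being typed interactively. The following is a minimal sketch, assuming the masking policy for sparktest already exists in Ranger, the client environment has been loaded as described earlier, and spark-beeline passes the standard Beeline `-f` option through unchanged. The function name `write_masking_check_sql` and the file path are hypothetical.

```shell
# Hypothetical helper: writes the verification statements from the steps
# above to a SQL file so they can be replayed in a single Beeline session.
write_masking_check_sql() {
  cat > "$1" <<'SQL'
select * from sparktest;
create table sparktest02 as select * from sparktest;
select * from sparktest02;
SQL
}

sql_file=${TMPDIR:-/tmp}/masking_check.sql
write_masking_check_sql "$sql_file"

# Run the file only where the Spark client is available.
# Assumption: spark-beeline accepts -f <file> like standard Beeline.
if command -v spark-beeline >/dev/null 2>&1; then
  spark-beeline -f "$sql_file"
else
  echo "spark-beeline not found; statements saved to $sql_file"
fi
```

Because the file creates sparktest02, a second run fails unless that table is dropped first.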
