Enabling Dynamic Route Acceleration for Training Jobs

Distributed training is often limited by inter-node communication: when nodes exchange data, transfers can be slow and the available bandwidth poorly used. To address this, ModelArts provides dynamic routing acceleration, which optimizes the network paths used by a training job to improve overall performance. This guide explains how to enable the feature for training jobs that use a preset framework or a custom image, and how to confirm in the training logs that it is enabled.

Notes and Constraints

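- Dynamic routing acceleration can only be enabled in the training scenarios described in this guide: the Ascend-Powered-Engine preset image with MindSpore on NPUs (Scenario 1), and a custom image with PyTorch on NPUs (Scenario 2).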

- The training job must use Python 3.7, 3.8, 3.9, or 3.10.

- Before enabling dynamic routing acceleration, contact ModelArts technical support to ensure that the cabinet plug-in and scheduling permissions of the cluster are enabled.
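The Python version constraint above can be checked at the top of a boot script before any training code runs. The following is a minimal sketch, not part of ModelArts: the function name `python_version_supported` is hypothetical, and the version set is copied from the constraint in this section.

```python
import sys

# Interpreter versions listed in the constraints of this guide.
SUPPORTED_VERSIONS = {(3, 7), (3, 8), (3, 9), (3, 10)}


def python_version_supported(version_info=None):
    """Return True if (major, minor) is one of the supported versions.

    version_info defaults to the running interpreter; pass a tuple such as
    (3, 8, 0) to check another version.
    """
    major, minor = (version_info or sys.version_info)[:2]
    return (major, minor) in SUPPORTED_VERSIONS
```

A boot script could call `python_version_supported()` first and exit with a clear message if it returns `False`, rather than failing later in an unrelated place.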

Scenario 1: Using the Ascend-Powered-Engine Preset Image, MindSpore, and NPUs for Training

To create a training job with the Ascend-Powered-Engine preset image and enable dynamic routing acceleration, configure the parameters described in Table 1. The table lists only the key parameters. Configure the other parameters as needed.

| Step | Parameter | Description |
|---|---|---|
| Environment settings | Algorithm Type | Select Custom algorithm. |
| Environment settings | Boot Mode | Select Preset image. |
| Environment settings | Engine and Version | Select Ascend-Powered-Engine and a MindSpore-related engine version. |
| Environment settings | Code Directory | Select the OBS directory where the training code file is stored. Dynamic routing acceleration improves network communication by adjusting the rank ID. To prevent communication issues, unify the rank usage in the code. |
| Environment settings | Boot File | Select the Python boot script of the training job in the code directory. |
| Training settings | Environment Variable | Add the following environment variable: ROUTE_PLAN = true. Do not configure the environment variable MA_RUN_METHOD. Ensure that the boot file of the training job is started using the rank table file. |
| Resource settings | Resource Pool | Select a dedicated resource pool. |
| Resource settings | Specifications | Select instance specifications that meet the following requirements: |
| Resource settings | Compute Nodes | Select at least three compute nodes. |
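Three of the settings in Table 1 can be verified by a script: the ROUTE_PLAN variable, the absence of MA_RUN_METHOD, and the number of compute nodes. The sketch below collects those checks in one hypothetical helper, `check_route_plan_settings`. It assumes that variables configured on the console are visible to the boot script as ordinary process environment variables and that the expected value is the literal string `true`. The node count is passed in by the caller, because this guide does not say how a job discovers it.

```python
import os


def check_route_plan_settings(env, node_count):
    """Return a list of problems; an empty list means the checks passed.

    env        -- mapping of environment variables (for example os.environ)
    node_count -- number of compute nodes selected for the job
    """
    problems = []
    # Table 1: ROUTE_PLAN = true must be set.
    if env.get("ROUTE_PLAN") != "true":
        problems.append("ROUTE_PLAN must be set to true")
    # Table 1: MA_RUN_METHOD must not be configured at all.
    if "MA_RUN_METHOD" in env:
        problems.append("MA_RUN_METHOD must not be configured")
    # Table 1: at least three compute nodes are required.
    if node_count < 3:
        problems.append("at least three compute nodes are required")
    return problems
```

For example, `check_route_plan_settings(os.environ, node_count=4)` could be called at the start of the boot script, with the returned messages logged before training begins.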

Scenario 2: Using a Custom Image, PyTorch, and NPUs for Training

To create a training job with a custom image on an Ascend resource pool and enable dynamic routing acceleration, configure the parameters described in Table 2. The table lists only the key parameters. Configure the other parameters as needed.

| Step | Parameter | Description |
|---|---|---|
| Environment settings | Algorithm Type | Select Custom algorithm. |
| Environment settings | Boot Mode | Select Custom image. |
| Environment settings | Image | Select a custom image for training. The training image must use the PyTorch framework. |
| Environment settings | Code Directory (Optional) | Select the OBS directory where the training code file is stored. Dynamic routing acceleration improves network communication by adjusting the rank ID. To prevent communication issues, unify the rank usage in the code. |
| Environment settings | Boot Command | Enter the Python boot command of the image. Modify the following code in the training boot script. The values vary according to the NPU hardware. |
| Training settings | Environment Variable | Add the following environment variable: ROUTE_PLAN = true |
| Resource settings | Resource Pool | Select a dedicated resource pool. |
| Resource settings | Specifications | Select instance specifications that meet the following requirements: |
| Resource settings | Compute Nodes | Select at least three compute nodes. |
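One way to read the advice to unify rank usage is to resolve the rank in a single place and have all other code call that helper, so that an adjusted rank ID is seen consistently everywhere. The sketch below illustrates the idea in plain Python. It assumes the launcher exports the rank in a `RANK` environment variable, as `torchrun` does. Whether ModelArts exposes the adjusted rank this way is not stated in this guide, and the helper names are hypothetical.

```python
import os

# Assumption: the launcher exports the global rank under this name.
RANK_ENV_VAR = "RANK"


def global_rank(env=None):
    """Single place where the process rank is resolved."""
    env = os.environ if env is None else env
    try:
        return int(env[RANK_ENV_VAR])
    except KeyError:
        raise RuntimeError(f"{RANK_ENV_VAR} is not set for this process") from None


def is_primary(env=None):
    """True on rank 0, for example to decide which process writes checkpoints."""
    return global_rank(env) == 0


def shard_for_rank(items, rank, world_size):
    """Round-robin slice of items assigned to the given rank."""
    return items[rank::world_size]
```

Data sharding, logging, and checkpointing code would then all call `global_rank()` instead of reading the rank from different sources, which is the inconsistency the Code Directory note warns about.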

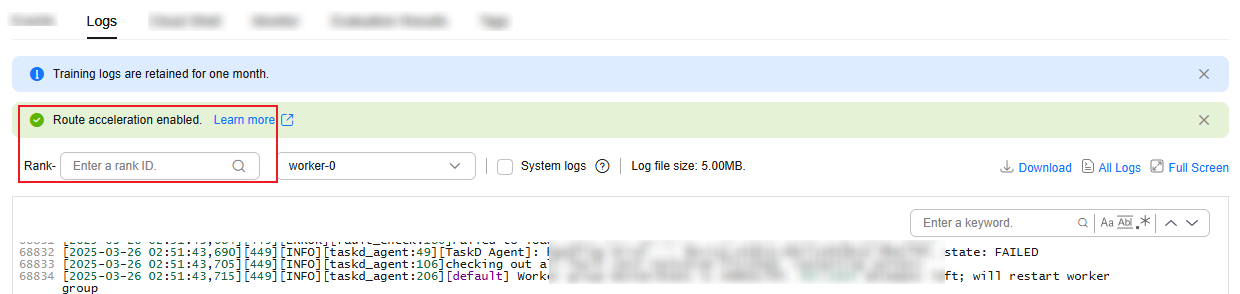

Viewing Training Logs of Dynamic Route Acceleration

When training on an Ascend resource pool, you can check whether dynamic routing is enabled and view the logs of each rank on the Logs tab of the training job details page.

1. Log in to the ModelArts console.

2. In the navigation pane on the left, choose Model Training > Training Jobs.

3. In the training job list, click the target job to access its details page.

4. Click the Logs tab.

The logs show whether dynamic routing has been enabled for the training job, and you can search them by rank ID.

Figure 1 Viewing dynamic route acceleration logs
