Using Ascend FaultDiag to Diagnose Logs in the ModelArts Lite Cluster Resource Pool

Description

This section describes how to use Ascend FaultDiag to diagnose logs in the ModelArts Lite environment, including log collection, log cleaning, and fault diagnosis.

Logs are collected and cleaned node by node, and the cleaning results of all nodes are then combined for fault diagnosis. For example, for a task running on a cluster with eight nodes and 64 cards, logs are collected on each of the eight nodes and stored in eight directories, worker-0 to worker-7. The logs in each directory are then cleaned separately, and the cleaning results are stored in the corresponding directories, output/worker-0 to output/worker-7. Finally, fault diagnosis is performed on the output directory, which produces the diagnosis result.

To download Ascend FaultDiag, see Ascend Community.

Step 1: Collecting Logs

You need to collect the following types of logs: user training logs (printed to the screen), host OS logs (host logs), device logs, CANN logs, host resource information, and NPU network port resource information.

- User training logs: log information output to the standard output (screen) during training sessions. Set environment variables to enable logging to the screen.

- Host OS logs: logs generated by user processes on the host during the execution of training jobs.

- Device logs: AI CPU and HCCP logs generated on the device when user processes run on the host. These logs are sent back to the host.

- CANN logs: runtime information from the Compute Architecture for Neural Networks (CANN) module within the Ascend computing architecture. They are useful for diagnosing issues during model conversion, such as errors like "Convert graph to om failed."

- Host resource information: statistics on resources used by AI applications or services running on the host.

- NPU network port resource information: statistics on the NPU network ports used by AI applications or services running on the host.

If the logs were already output and dumped during training (for example, to OBS) and they meet the file name and path requirements in Constraints, skip this step and go to Step 2.

- Constraints

- The CANN logs must be stored in the process_log folder, for example, worker-0/…/process_log/.

- The device logs must be stored in the device_log folder, for example, worker-0/…/device_log/.

- The host resource information and NPU network port resource information must be stored in the environment_check folder, for example, worker-0/…/environment_check/.

- Logs collected on a single node are stored in a single worker directory. The total file size must be smaller than 5 GB and there cannot be more than one million files. Otherwise, the log cleaning efficiency will be affected.

- There is no size limit on the user training logs printed to the screen. By default, only the last 100 KB of these logs is read.

- A single CANN log file must be smaller than 20 MB.

- The NPU status monitoring indicator file, NPU network port monitoring indicator file, and host resource information file must be smaller than 512 MB.

- The host logs must be the messages log files from the /var/log directory. Each dumped file must be smaller than 512 MB.
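The size and count limits above can be checked before cleaning. The following is a minimal sketch in POSIX shell, not part of Ascend FaultDiag: the function name check_worker_limits and its messages are hypothetical, and it only covers the 5 GB total size, the one million file count, and the 20 MB CANN log limits. Total size is measured with du, which reports disk usage, so treat the result as an approximation.

```shell
# Sketch only: check_worker_limits is a hypothetical helper, not an Ascend FaultDiag command.
MAX_TOTAL_KB=$((5 * 1024 * 1024))      # 5 GB, expressed in KB
MAX_FILES=1000000                      # one million files
CANN_MAX_BYTES=$((20 * 1024 * 1024))   # 20 MB per CANN log file

check_worker_limits() {
    worker_dir=$1
    status=0

    # Total disk usage of the worker directory, in KB.
    total_kb=$(du -sk "$worker_dir" | awk '{print $1}')
    if [ "$total_kb" -ge "$MAX_TOTAL_KB" ]; then
        echo "total size ${total_kb} KB is not smaller than 5 GB: $worker_dir"
        status=1
    fi

    # Number of regular files under the worker directory.
    file_count=$(find "$worker_dir" -type f | wc -l | tr -d ' ')
    if [ "$file_count" -gt "$MAX_FILES" ]; then
        echo "file count $file_count exceeds one million: $worker_dir"
        status=1
    fi

    # Files of 20 MB or more inside any process_log folder.
    oversized=$(find "$worker_dir" -type f -path '*/process_log/*' \
        -size +"$((CANN_MAX_BYTES - 1))"c)
    if [ -n "$oversized" ]; then
        echo "CANN log files of 20 MB or more:"
        echo "$oversized"
        status=1
    fi

    return "$status"
}

# Example call (the path is a placeholder):
#   check_worker_limits /path/to/worker-0
```

The function returns a non-zero status when any limit is violated, so it can gate the cleaning step in a script.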

Step 2: Cleaning Logs

The collected logs should be organized by node path. For example, the logs collected on the worker-0 node must be stored in the worker-0 directory. Check whether the device_log, process_log, and environment_check subdirectories exist in the directory and whether the names are correct.
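The directory check described above can be scripted. The sketch below is an illustration under assumptions: check_worker_layout is a hypothetical helper name, and because the intermediate path inside each worker directory is not fixed, it searches the whole worker directory for the three folder names.

```shell
# Sketch only: check_worker_layout is a hypothetical helper, not an Ascend FaultDiag command.
check_worker_layout() {
    worker_dir=$1
    missing=0
    for name in device_log process_log environment_check; do
        # Look for a directory with the exact expected name anywhere under the worker directory.
        if [ -z "$(find "$worker_dir" -type d -name "$name" | head -n 1)" ]; then
            echo "missing directory: $name under $worker_dir"
            missing=1
        fi
    done
    return "$missing"
}

# Example call (the path is a placeholder):
#   check_worker_layout /path/to/worker-0
```

A misspelled folder name, such as device_logs, is reported as missing because the match is exact.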

- Data mounting

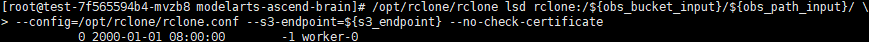

If the collected logs are stored on OBS, mount the log data in OBS using the rclone tool.

- Download and install rclone.

- Configure the credentials required for accessing OBS.

# Hard-coded or plaintext AK/SK is risky. For security, encrypt your AK/SK and store them in the configuration file or environment variables.
# In this example, the AK/SK is stored in environment variables for identity authentication. Before running this example, set environment variables HUAWEICLOUD_SDK_AK and HUAWEICLOUD_SDK_SK.
export AWS_ACCESS_KEY=${HUAWEICLOUD_SDK_AK}
export AWS_SECRET_KEY=${HUAWEICLOUD_SDK_SK}
export AWS_SESSION_TOKEN=${TOKEN}

- Fill in the rclone configuration file rclone.conf.

[rclone]
type = s3
provider = HuaweiOBS
env_auth = true
acl = private

- Run the lsd command to view the directory in the log path and check whether the configuration is successful.

rclone lsd rclone:/${obs_bucket_name}/${path_to_logs} --config=${path_to_rclone.config} --s3-endpoint=${obs_endpoint} --no-check-certificate

- The following shows a log directory example. The task has only one node, worker-0.

- Run the mount command to mount the log directory to the local host.

rclone mount rclone:/${obs_bucket_name}/${path_to_logs} /${path_to_local_dir} --config=${path_to_rclone.config} --s3-endpoint=${obs_endpoint} --no-check-certificate

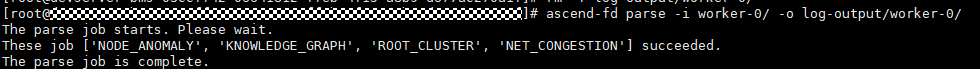
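The credential step in the mounting procedure assumes that HUAWEICLOUD_SDK_AK and HUAWEICLOUD_SDK_SK are already set. A small guard can catch a missing variable before rclone is invoked. This is a sketch in POSIX shell: require_env is a hypothetical helper name and is not part of rclone or Ascend FaultDiag.

```shell
# Sketch only: require_env is a hypothetical helper.
# It succeeds only if every named environment variable is set and non-empty.
require_env() {
    for name in "$@"; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "environment variable $name is not set" >&2
            return 1
        fi
    done
    return 0
}

# Example: export the credentials for rclone only when both source variables exist.
#   require_env HUAWEICLOUD_SDK_AK HUAWEICLOUD_SDK_SK &&
#       export AWS_ACCESS_KEY=${HUAWEICLOUD_SDK_AK} AWS_SECRET_KEY=${HUAWEICLOUD_SDK_SK}
```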

- Node log cleaning

Specify the log path of a single node as the input and the log cleaning storage path as output. The output path must be empty. Run the ascend-fd parse command to clean the logs of each node.

ascend-fd parse -i ${path_to_worker_logs} -o ${path_to_parse_output}

The cleaning results are organized in the same way as the input logs: the results for each node must be stored in a separate worker directory, for example, output/worker-0.

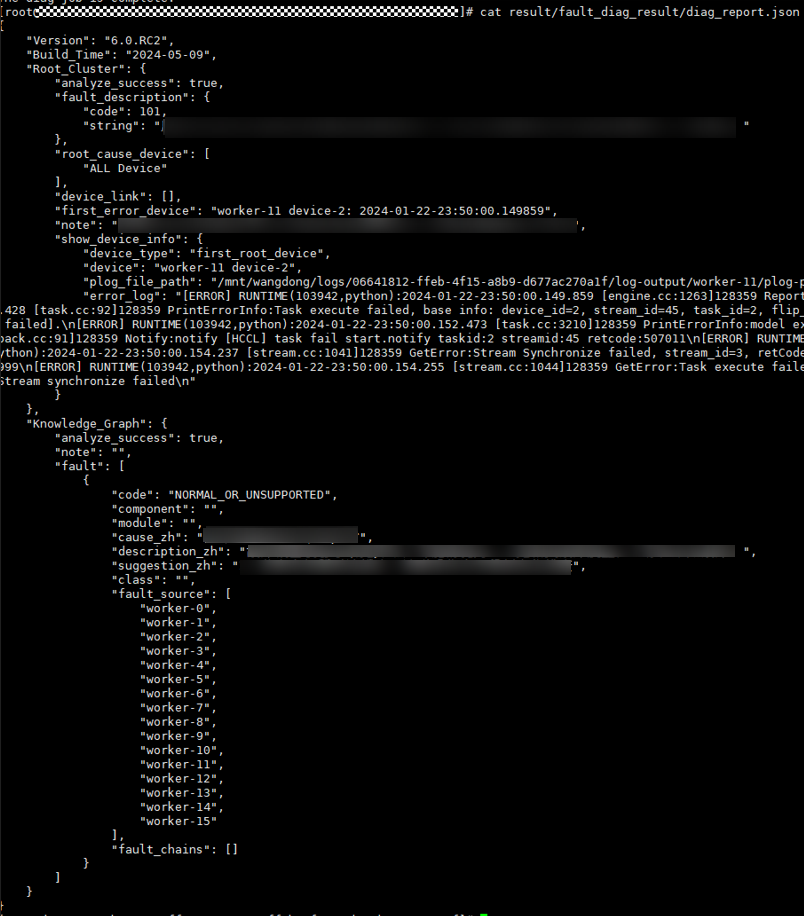
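For a task with several nodes, the per-node cleaning can be run in a loop. The following sketch assumes that the collected logs are in directories named worker-* under one root, as in the example at the start of this section. The function name parse_all_workers and the ASCEND_FD variable are hypothetical; only the ascend-fd parse -i ... -o ... invocation comes from this document.

```shell
# Sketch only: parse_all_workers and ASCEND_FD are hypothetical names.
# ASCEND_FD lets you point at a different ascend-fd executable if needed.
ASCEND_FD=${ASCEND_FD:-ascend-fd}

parse_all_workers() {
    logs_root=$1      # directory that contains worker-0, worker-1, ...
    output_root=$2    # directory that receives output/worker-0, ...

    for worker_dir in "$logs_root"/worker-*; do
        [ -d "$worker_dir" ] || continue
        worker=$(basename "$worker_dir")
        out_dir="$output_root/$worker"
        mkdir -p "$out_dir"

        # The output path must be empty before cleaning.
        if [ -n "$(ls -A "$out_dir")" ]; then
            echo "output directory is not empty: $out_dir" >&2
            return 1
        fi

        "$ASCEND_FD" parse -i "$worker_dir" -o "$out_dir" || return 1
    done
}

# Example call (paths are placeholders):
#   parse_all_workers /path/to/logs /path/to/output
```

The loop stops at the first failure so that a partially cleaned node is noticed before diagnosis.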

Step 3: Fault Diagnosis

Linux limits the number of file descriptors that a process can open (1,024 by default). With the default limit, a cluster should therefore contain no more than 128 servers (1,024 cards). If the cluster is larger, run the ulimit -n ${num} command to raise the file descriptor limit. The value of ${num} must be greater than the number of cards. For example, for a cluster with 6,000 cards, set the value to 8192.
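As an illustration of choosing the value for ulimit -n, the sketch below doubles from 1,024 until the value exceeds the number of cards. Rounding to a power of two is an assumption that generalizes the 6,000-card example above; the document only requires a value greater than the number of cards. The function name suggest_fd_limit is hypothetical.

```shell
# Sketch only: suggest_fd_limit is a hypothetical helper.
# It prints the smallest value of the form 1024 * 2^k that is greater than the card count.
suggest_fd_limit() {
    cards=$1
    limit=1024
    while [ "$limit" -le "$cards" ]; do
        limit=$((limit * 2))
    done
    echo "$limit"
}

# Example: raise the limit for a 6,000-card cluster in the current shell.
#   ulimit -n "$(suggest_fd_limit 6000)"
```

Raising the limit above the hard limit of the current user fails, so check ulimit -Hn first if the command is rejected.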

To perform fault diagnosis, you need to specify the path for storing the cleaning results of all nodes. The output path must be empty. Run the ascend-fd diag command to perform fault diagnosis.

ascend-fd diag -i ${path_to_parse_outputs} -o ${path_to_diag_output}

The diagnosis results are displayed in the following two ways.
