Service Verification

Service verification is crucial for cloud migration and consists mainly of function verification and performance verification. It is required at two stages of the migration. First, after services are deployed at the target end, function and performance verification must be completed before the switchover. Second, during the switchover, after service traffic has been redirected to the target end, function and performance verification must be performed again.

Function Verification

- Function testing content

Function tests ensure that the application system runs properly prior to launch. Below are the details of function tests:

Table 1 Function testing content

| Testing Content | Description |
| --- | --- |
| Application functions | The test content depends heavily on the functions of the application system. For example, in an e-commerce system, the core function test cases must cover online and offline browsing, shopping, placing orders, making payments (using various payment methods and coupons), printing bills, issuing invoices, promotional activities, inventory synchronization, new member registration, member account cancellation, order refunds, and coupon returns, among other essential features. |
| Peripheral system integration functions | The test content depends heavily on how the application system integrates with peripheral systems. For example, a major retail e-commerce platform integrates with a group-buying platform, a food delivery platform, a home-service platform, and a short-video platform. The integration test cases should at least verify placing orders, using coupons, shipment notifications, and leaving reviews on these integrated platforms. |

- Function testing purposes

- Verify that application functions work properly after the technical components are replaced during migration of the application system to Huawei Cloud.

- Confirm that the application integrates properly with surrounding systems after migration to the target environment, and that all modifications required for collaboration with external systems have been implemented.

- Function testing methods

- Smoke testing: Smoke testing is a straightforward form of function testing aimed at verifying the system's availability through the execution of a minimal set of critical test cases. Upon completion of deployment on the target end, conducting a smoke test first ensures the fundamental functions of the system are operational.

- Full-business function testing: This thorough examination verifies the proper functioning of all system features by running test cases tailored to diverse business workflows, thereby ensuring the integrity of every function module.

- Log analysis: After services are deployed at the destination end, analyze the system logs for errors. Log analysis proactively uncovers latent issues and vulnerabilities so that they can be fixed and optimized promptly.

- DNS hijacking test: Services deployed on the cloud are typically configured with the domain names of the production environment, so testing service functions from a mobile app or browser requires DNS hijacking. For example, set up an internal Wi-Fi network whose DNS resolution points the production domain names to an APISIX gateway modified for O&M use, which redirects the traffic to the test environment for internal network testing.
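The smoke test and log analysis steps above can be partly scripted. The following is a minimal sketch, not a prescribed tool: `SmokeCase`, `run_smoke`, `scan_log`, and the URLs are hypothetical, a case passes only if the returned HTTP status equals the expected one, and "suspicious" log lines are assumed to be those containing `ERROR`, `FATAL`, or a Java-style `...Exception` class name.

```python
import re
import urllib.error
import urllib.request
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple


@dataclass
class SmokeCase:
    name: str                  # hypothetical case name, e.g. "home page"
    url: str                   # hypothetical URL of a critical page or API
    expected_status: int = 200


def http_status(url: str, timeout: float = 5.0) -> int:
    """Send a GET request and return the HTTP status code (0 if unreachable)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status
        return err.code
    except OSError:  # DNS failure, refused connection, or timeout
        return 0


def run_smoke(cases: Iterable[SmokeCase],
              fetch: Callable[[str], Optional[int]] = http_status
              ) -> List[Tuple[str, Optional[int]]]:
    """Run the critical cases and return (name, status) for every failure."""
    failures = []
    for case in cases:
        status = fetch(case.url)
        if status != case.expected_status:
            failures.append((case.name, status))
    return failures


# Lines containing ERROR/FATAL level markers or Java-style exception class names.
ERROR_PATTERN = re.compile(r"\b(ERROR|FATAL)\b|\b(\w*Exception)\b")


def scan_log(lines: Iterable[str]) -> Counter:
    """Count suspicious log lines by the first error marker found on each line."""
    counts: Counter = Counter()
    for line in lines:
        match = ERROR_PATTERN.search(line)
        if match:
            counts[match.group(1) or match.group(2)] += 1
    return counts
```

The `fetch` parameter is injectable so the runner can be exercised without a network, and `scan_log` accepts any iterable of lines, such as an open log file. Real log formats vary, so the pattern would need adjusting to the application's logging conventions.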

- Function testing process

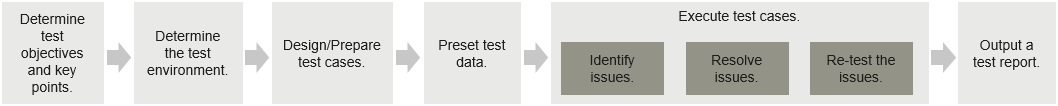

Figure 1 Function testing workflow

- Determine test objectives and key points: Specify the application functions and scenarios to be tested, as well as the key points and focuses of the test.

- System functions: such as promotion activities, coupon payments, returns, and refunds of coupons.

- Batch processing job function: If the migration involves multiple batch processing jobs, focus on their execution during testing, for example, inventory data pushing.

- Third-party service integration function: Verify integrations like those with a group-buying platform, food delivery platform, home service platform, short-video platform, and in-store POS payment functionality.

- Determine the test environment: Identify the environment to use for testing and ensure it does not impact production services. Note: For services calling third-party APIs, isolate external networks to avoid contaminating production data. Set up a dedicated internal WiFi for testers to simulate third-party function tests securely.

Table 2 Comparison and analysis of test environments

| Scenario | Suggestion on Test Environment Selection | Advantage | Disadvantage |
| --- | --- | --- | --- |
| Some applications are already online in the Huawei Cloud production environment at the destination end. | Solution 1: Use the destination Huawei Cloud production environment for testing. | 1. After testing, the test environment is directly transitioned to production, saving workload. 2. All parameters have been tuned to optimal values during the test. | Network isolation is required, and there is a risk of affecting live-network services. |
| Some applications are already online in the Huawei Cloud production environment at the destination end. | Solution 2: Set up a new test environment on the destination Huawei Cloud for testing. | No impact on the live network. | 1. Setting up an environment incurs certain costs. 2. The configuration parameters tuned in the test environment must be replicated exactly (1:1) to the production environment, which requires some work. |
| The destination Huawei Cloud production environment is brand-new. | Use the destination Huawei Cloud production environment for testing. | 1. After testing, the test environment is directly transitioned to production, saving workload. 2. All parameters have been tuned to optimal values during the test. | None |

- Design test cases: Design and prepare test cases based on testing objectives. The test cases before the switchover should achieve maximum coverage; during the switchover, due to limited testing time, it is advisable to categorize the test cases into three priority levels: P0, P1, and P2.

- P0 definition: core function test cases. If the cases pass the test, rollback is not required.

- P1 definition: critical function test cases. If the cases pass the test, all fundamental functions are available. Once these test cases pass, the switchover is successful, allowing the cancellation of external maintenance notifications.

- P2 definition: supplementary test cases. If the switchover time window is sufficient, the cases can be tested on the night of the switchover. If the switchover time window is insufficient, the cases can be tested on the next day.

Table 3 Test case execution descriptions

| Phase | Test Case | Coverage Rate |
| --- | --- | --- |
| Pre-switchover test | All | Test all application functions and third-party integration functions. Special scenarios that cannot be tested must be discussed separately to create a simulation test solution. |
| Test during the switchover | P0, P1, and P2 levels. At least P0 and P1 test cases must be tested in the switchover time window. | Depends on the switchover time window: if the window is sufficient, all test cases are completed; if it is insufficient, at least P0 and P1 test cases are completed. |
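The priority rules above can be expressed as a small planning helper. This is a hedged sketch under stated assumptions: each case's execution time in minutes is assumed to be estimated in advance, P2 cases are greedily added in their listed order while time remains, and the decision mapping (P0 failure leads to rollback; P1 failure keeps the maintenance notice in place) is an interpretation of the P0/P1 definitions, not a rule stated by the source. `SwitchoverCase`, `plan_switchover`, and `switchover_decision` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

PRIORITY_ORDER = {"P0": 0, "P1": 1, "P2": 2}


@dataclass
class SwitchoverCase:
    case_id: str
    priority: str   # "P0", "P1", or "P2"
    minutes: int    # estimated execution time (assumed known in advance)


def plan_switchover(cases: List[SwitchoverCase], window_minutes: int
                    ) -> Tuple[List[SwitchoverCase], List[SwitchoverCase]]:
    """Return (cases to run in the window, P2 cases deferred to the next day)."""
    # P0 and P1 are mandatory and run first, P0 before P1.
    mandatory = sorted((c for c in cases if c.priority in ("P0", "P1")),
                       key=lambda c: PRIORITY_ORDER[c.priority])
    needed = sum(c.minutes for c in mandatory)
    if needed > window_minutes:
        raise ValueError(f"P0 and P1 cases need {needed} min, window is {window_minutes} min")
    selected: List[SwitchoverCase] = list(mandatory)
    deferred: List[SwitchoverCase] = []
    remaining = window_minutes - needed
    # Add P2 cases in their listed order while time remains.
    for case in cases:
        if case.priority != "P2":
            continue
        if case.minutes <= remaining:
            selected.append(case)
            remaining -= case.minutes
        else:
            deferred.append(case)
    return selected, deferred


def switchover_decision(results: Dict[str, Tuple[str, bool]]) -> str:
    """Map results (case_id -> (priority, passed)) to an assumed decision."""
    def all_passed(priority: str) -> bool:
        return all(ok for prio, ok in results.values() if prio == priority)

    if not all_passed("P0"):
        return "rollback"                 # core functions failed
    if not all_passed("P1"):
        return "keep-maintenance-notice"  # core OK, critical functions still failing
    return "switchover-succeeded"         # external maintenance notices can be cancelled
```

With a 50-minute window and cases `login` (P0, 10 min), `pay` (P1, 20 min), `coupon` (P2, 15 min), and `invoice` (P2, 30 min), the plan runs `login`, `pay`, and `coupon`, and defers `invoice` to the next day.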

When selecting test cases for the test environment, enterprises need to assess, based on their application scenarios, whether the test conditions are met. For example, if a third-party inventory synchronization interface can connect only between the production environments of both parties, and not between their test environments, that test case cannot be executed. Identify such infeasible test cases, evaluate the overall test case coverage, and discuss simulation test methods for the test cases that cannot be covered. The following table lists typical scenarios.

| Scenario | Test Condition | Solution |
| --- | --- | --- |
| Order placement in a third-party system | The third-party system cannot connect to the test environment, so this scenario cannot be tested there. | Discuss mitigation solutions, such as directly calling the corresponding interface to simulate the test. |
| Inventory synchronization | The third-party inventory system cannot connect to the inventory system in the test environment, so this scenario cannot be tested there. | Discuss mitigation solutions, such as directly calling the inventory synchronization interface to simulate the test. |
| Payment | Online payment can be tested. Offline POS payment cannot be tested because the POS network cannot connect to the test environment. | Discuss mitigation solutions, such as directly calling the relevant interfaces to simulate the test. |
| ... | ... | ... |
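As an illustration of "directly calling the interface to simulate testing", the sketch below builds an HTTP request that imitates a third-party inventory push against the test environment. The `/inventory/sync` path, the payload fields, and the function names are hypothetical assumptions; the real interface contract agreed with the third party must be used instead.

```python
import json
import urllib.request


def build_inventory_sync_request(base_url: str, sku: str, quantity: int) -> urllib.request.Request:
    """Build a POST that imitates a third-party inventory push (hypothetical contract)."""
    payload = {"sku": sku, "quantity": quantity, "source": "migration-test"}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/inventory/sync",  # hypothetical path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> int:
    """Send the simulated call to the test environment and return the HTTP status.

    urllib raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status
```

Building the request separately from sending it lets the payload be reviewed before anything touches the test environment, and the same request can be replayed after each fix.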

- Preset test data: To ensure the authenticity and effectiveness of the tests, it is necessary to preset the test data in advance. You can use data from the source test environment or use masked production data.

- Execute test cases: Enterprises that adopted test automation late still need to execute a large number of test cases manually. During manual execution, the implementer must record details such as the test time, testers, and test case execution results. Enterprises that already have automated testing capabilities only need to add the new test cases to their automation platform for automatic execution during cloud migration.

- Output a test report: After all test cases are tested, output a test report. In general, functional testing needs to ensure that the test environment is as consistent as possible with the production environment, with 100% test case coverage, to guarantee the proper functionality of applications after they are migrated to the cloud.

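For the "masked production data" option in the preset test data step, a masking pass can be scripted before the data is loaded into the test environment. This minimal sketch assumes a hypothetical member record with `member_id`, `name`, and `phone` fields. IDs are replaced with a salted SHA-256 token so that the same ID maps to the same token in every table and joins still work. The salt must be kept secret, because low-entropy IDs could otherwise be guessed.

```python
import hashlib
import re
from typing import Dict


def mask_phone(phone: str) -> str:
    """Keep the first 3 and last 4 digits, e.g. 13812345678 -> 138****5678."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) < 8:
        return "*" * len(digits)  # too short to keep any digits safely
    return digits[:3] + "*" * (len(digits) - 7) + digits[-4:]


def mask_name(name: str) -> str:
    """Keep only the first character of a name."""
    return name[:1] + "*" * max(len(name) - 1, 0)


def pseudonymize(value: str, salt: str) -> str:
    """Deterministic, non-reversible token for an identifier."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def mask_member(record: Dict[str, str], salt: str) -> Dict[str, str]:
    """Mask one hypothetical member record; other fields are copied unchanged."""
    masked = dict(record)  # never modify the source record in place
    if "member_id" in masked:
        masked["member_id"] = pseudonymize(masked["member_id"], salt)
    if "name" in masked:
        masked["name"] = mask_name(masked["name"])
    if "phone" in masked:
        masked["phone"] = mask_phone(masked["phone"])
    return masked
```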

Performance Verification

- Performance verification

After an application system is migrated to the cloud, the underlying technical components are replaced. The default parameters of the cloud technical components may differ from those of the source technical components, or the implementation mechanisms of the source and destination technical components may vary. As a result, performance issues may occur during cloud migration. Therefore, performance testing is required, which includes the following three categories:

Table 4 Performance testing content

| Testing Content | Description |
| --- | --- |
| Cloud service performance | Perform performance tests on cloud services, such as databases, HBase, and storage system IOPS. |
| API performance | Perform targeted stress tests on specific APIs as part of system performance evaluation. |
| Overall application performance | Perform overall performance tests based on application scenarios, such as a promotion in which thousands of users browse and purchase a product simultaneously. |

The following table lists the purposes of the three types of performance tests.

Table 5 Performance test purposes

| Test Content | Purpose |
| --- | --- |
| Cloud service performance | Evaluate whether the cloud service specifications meet the performance requirements under high concurrency and whether the parameters are set to optimal values. |
| API performance | Evaluate the maximum load capacity of specific APIs. |
| Overall application performance | (1) Determine the maximum load capacity of the cloud-based business system: use high-concurrency, high-load tests to find the maximum load the system can handle, along with its performance and response times at peak load. Increase the stress step by step, compare the cloud-based system's metrics with those of the source system under the same stress, and use the differences to pinpoint potential issues. (2) Verify system stability and reliability: run long-term, high-load tests to verify the stability and reliability of the cloud-based system across diverse scenarios, including system resource management, data transfer, and exception handling. (3) Assess system scalability: increase the load gradually to determine whether the cloud-based system can scale to support more users and service requirements. (4) Identify system performance bottlenecks: stress-test the cloud-based system to locate bottlenecks and performance issues caused by environmental changes after migration, enabling targeted optimization. |
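The step-by-step comparison between the source system and the cloud-based system can be reduced to a simple check. This sketch assumes that P95 latency (in milliseconds) has been measured on both sides at the same load levels, keyed here by concurrent users, and it treats anything more than 10% slower as a regression. Both the metric and the 10% tolerance are illustrative choices, not values from this document.

```python
from typing import Dict, List


def find_regressions(source_p95_ms: Dict[int, float],
                     target_p95_ms: Dict[int, float],
                     tolerance: float = 0.10) -> List[int]:
    """Return the load levels at which the cloud (target) P95 latency is more than
    `tolerance` worse than the source P95 latency measured at the same load."""
    common_levels = sorted(set(source_p95_ms) & set(target_p95_ms))
    return [level for level in common_levels
            if target_p95_ms[level] > source_p95_ms[level] * (1 + tolerance)]


source = {100: 200.0, 200: 250.0, 400: 400.0}
target = {100: 210.0, 200: 300.0, 400: 800.0}
print(find_regressions(source, target))  # [200, 400]
```

The lowest flagged level (here 200 users) is where bottleneck analysis would start, since it is the first point at which the migrated system diverges from the source under equal stress.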

The methods for testing the preceding three types of performance are as follows:

- Cloud service performance testing (using databases as an example)

For most application systems, the bottleneck often lies in the database. While other components like network bandwidth, load balancers, application servers, and middleware can be scaled horizontally relatively easily, databases typically rely on primary-standby configurations due to high data consistency requirements, rather than adopting a distributed architecture.

Common database-related metrics include:

- TPS/QPS: Transactions per second and queries per second, used to measure database throughput.

- Response time: includes the average, minimum, and maximum response times and percentile response times. Percentiles are especially useful; for example, the P95 response time is the time within which 95% of requests complete.

- Concurrency: the number of query requests being processed simultaneously.

- Success rate: the proportion of requests successfully returned within a specified period.
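To make these metric definitions concrete, the sketch below computes them from raw request samples. It assumes each sample records a start time, an end time, and a success flag; QPS is computed here over all completed requests in the test window, P95 uses the nearest-rank method, and concurrency is reported as the peak number of requests in flight. Stress testing tools may define these slightly differently.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    start_s: float  # request start, seconds since the test began
    end_s: float    # response received, seconds since the test began
    ok: bool        # True if the request succeeded


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value covering pct% of observations."""
    ordered = sorted(values)
    rank = max(math.ceil(pct / 100 * len(ordered)), 1)
    return ordered[rank - 1]


def peak_concurrency(samples: List[Sample]) -> int:
    """Maximum number of requests in flight at once (sweep over start/end events)."""
    # Tuples sort by time, then delta, so an end (-1) precedes a start (+1) at equal times.
    events = sorted([(s.start_s, 1) for s in samples] + [(s.end_s, -1) for s in samples])
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


def summarize(samples: List[Sample], duration_s: float) -> Dict[str, float]:
    latencies_ms = [(s.end_s - s.start_s) * 1000 for s in samples]
    return {
        "qps": len(samples) / duration_s,
        "avg_ms": sum(latencies_ms) / len(latencies_ms),
        "min_ms": min(latencies_ms),
        "max_ms": max(latencies_ms),
        "p95_ms": percentile(latencies_ms, 95),
        "peak_concurrency": peak_concurrency(samples),
        "success_rate": sum(s.ok for s in samples) / len(samples),
    }
```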

Huawei Cloud RDS offers standard performance baselines such as TPS and QPS, and enterprises can also run stress tests tailored to their own business data. A widely used stress testing tool is Sysbench, which supports multi-threading and multiple databases. Its tests include:

- CPU performance

- Disk I/O performance

- Scheduler performance

- Memory allocation and transfer speeds

- POSIX thread performance

- Database performance (OLTP benchmark tests)
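A common pattern for the OLTP test is to prepare data once, run the workload at increasing thread counts, and then clean up. The sketch below wraps the sysbench 1.x `oltp_read_write` command for a MySQL-compatible RDS instance. The host, credentials, table counts, and thread ladder are placeholder assumptions to be sized to the real business data. Passing the password on the command line exposes it to other local users, so a dedicated test account is advisable.

```python
import subprocess
from typing import List, Sequence

PHASES = ("prepare", "run", "cleanup")


def sysbench_oltp(phase: str, host: str, port: int, user: str, password: str, db: str,
                  threads: int = 32, tables: int = 10, table_size: int = 100_000,
                  duration_s: int = 300) -> List[str]:
    """Build a sysbench 1.x oltp_read_write command line for a MySQL-compatible database."""
    if phase not in PHASES:
        raise ValueError(f"phase must be one of {PHASES}")
    return [
        "sysbench", "oltp_read_write",
        "--db-driver=mysql",
        f"--mysql-host={host}", f"--mysql-port={port}",
        f"--mysql-user={user}", f"--mysql-password={password}",
        f"--mysql-db={db}",
        f"--tables={tables}", f"--table-size={table_size}",
        f"--threads={threads}", f"--time={duration_s}",
        "--report-interval=10",  # print intermediate statistics every 10 seconds
        phase,
    ]


def run_thread_ladder(host: str, port: int, user: str, password: str, db: str,
                      ladder: Sequence[int] = (8, 16, 32, 64)) -> None:
    """Prepare test tables once, run at increasing thread counts, then clean up."""
    conn = dict(host=host, port=port, user=user, password=password, db=db)
    subprocess.run(sysbench_oltp("prepare", **conn), check=True)
    try:
        for threads in ladder:
            subprocess.run(sysbench_oltp("run", threads=threads, **conn), check=True)
    finally:
        subprocess.run(sysbench_oltp("cleanup", **conn), check=True)
```

Comparing the TPS, QPS, and latency reported at each rung against the RDS performance baseline shows where throughput stops scaling with added threads.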

- API performance stress testing

You can use either of the following methods to test API performance: Huawei Cloud's cloud-native performance testing tool CodeArts PerfTest, or GoReplay. The following table compares the advantages and disadvantages of the two methods.

Table 6 Comparison of API performance stress test methods

| Test Tool | Stress Test Method | Advantage | Disadvantage |
| --- | --- | --- | --- |
| CodeArts PerfTest | Use the Huawei Cloud performance test tool to perform API stress tests. | (1) Supports tests in multi-protocol, high-concurrency, and complex scenarios. (2) Provides professional performance test reports, making application performance clear at a glance. (3) Does not interact with core services in the production environment, so the live network is not affected. (4) API tests depend little on other services and can be conducted on a single service system. | (1) High execution cost, because extensive up-front business analysis and script writing are required. (2) Requires highly skilled testers who are proficient with test tools and have relevant testing knowledge; otherwise, the test results may be unreliable. |
| GoReplay | Deploy GoReplay on the service gateway to replicate live network traffic and replay it at the destination end. | (1) Low cost and high efficiency: there is no need to sort out the interfaces and service logic of each system, because testing is based on actual traffic. (2) The large volume of real live traffic provides comprehensive coverage, and intermediate process verification is supported, such as comparing all objects in sent messages and intermediate calculations, which is difficult to achieve with manually created verification points. | (1) Plugins must be installed on the traffic entry gateway in the production environment, consuming some CPU and storage resources. (2) In batch migration scenarios, traffic is recorded for all service requests, so if some services are not yet deployed at the destination end, replayed requests may return 404 errors that require labor-intensive manual fault localization. (3) Only HTTP traffic can be recorded; HTTPS, TCP, UDP, and other traffic cannot be captured. |

For details about how to use CodeArts PerfTest to perform a performance stress test, refer to the CodeArts PerfTest documentation. The following describes how to perform performance stress tests through GoReplay traffic replication.

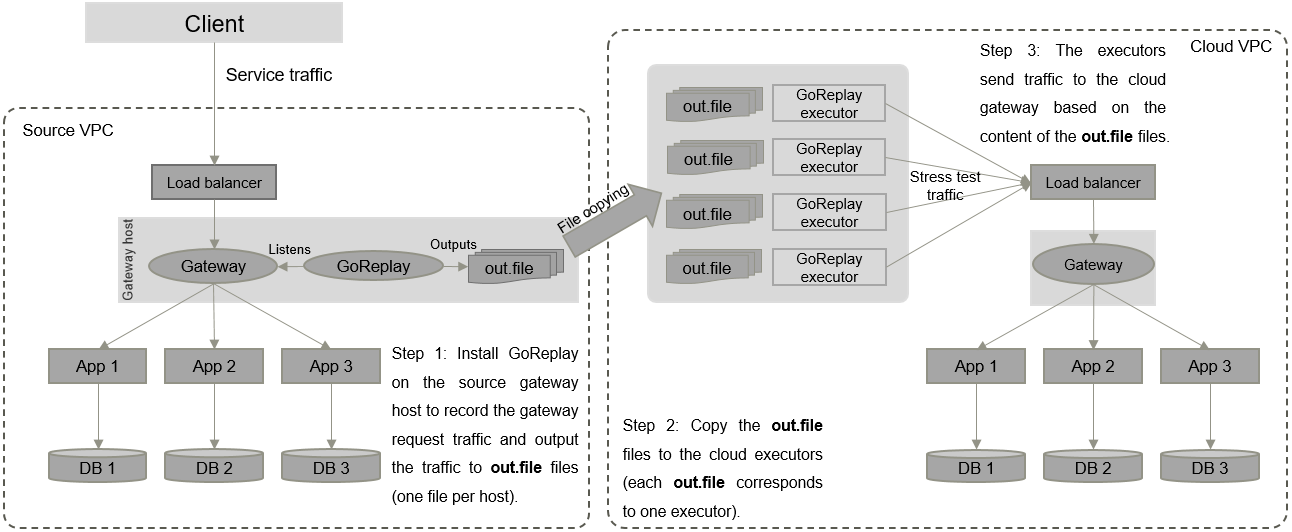

GoReplay is an open-source tool for replicating, replaying, and manipulating HTTP traffic. It captures real-time traffic and sends it to one or more target servers to achieve traffic replication and replay. Using GoReplay, actual HTTP request and response traffic can be replicated to test, development, or production environments for testing, monitoring, and analysis. During the lift and shift migration, we can use GoReplay to replicate request data from the traffic entry point of the live network's service gateway at the source end and replay the service requests on the target cloud executors to test the load on relevant service interfaces on the cloud. The detailed solution is shown in the following figure.

Figure 2 GoReplay traffic replication stress test solution

When using GoReplay for performance stress testing, pay attention to the following points:

- When GoReplay is used to record request traffic on the source gateway, monitor its impact on host performance and observe relevant host metrics in real time, such as CPU usage and memory usage. Additionally, the out.file files generated by GoReplay consume a significant amount of disk space. Monitor disk usage to prevent the gateway application from becoming unavailable due to full disk space. It is advisable to use network storage for storing output files.

- During traffic replay, if the destination service requires access to a third-party interface, it may impact production services. Ensure proper network isolation is in place.
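The record, replay, and disk-monitoring steps above can be wrapped in a small helper. This sketch assumes the GoReplay binary is available on the PATH as `gor` and uses its `--input-raw`, `--output-file`, `--input-file`, and `--output-http` options, including the `file|200%` suffix for replaying at a multiple of the recorded speed. The port, the network-storage path, the target address, and the 20% free-space threshold are hypothetical, and output file naming (for example, chunking) should be checked against the GoReplay version in use.

```python
import shutil
from typing import List


def record_cmd(listen_port: int, output_file: str) -> List[str]:
    """Capture HTTP traffic arriving on the gateway port into a file."""
    return ["gor", "--input-raw", f":{listen_port}", "--output-file", output_file]


def replay_cmd(input_file: str, target_url: str, speed_pct: int = 100) -> List[str]:
    """Replay a recorded file against the (network-isolated) target end.

    speed_pct=200 replays at twice the recorded rate.
    """
    return ["gor", "--input-file", f"{input_file}|{speed_pct}%", "--output-http", target_url]


def disk_too_full(total_bytes: int, free_bytes: int, min_free_ratio: float = 0.2) -> bool:
    """True when free space falls below the threshold and recording should be stopped."""
    return free_bytes < total_bytes * min_free_ratio


def check_output_volume(path: str, min_free_ratio: float = 0.2) -> bool:
    """Check the volume holding the recorded files (e.g. mounted network storage)."""
    usage = shutil.disk_usage(path)
    return disk_too_full(usage.total, usage.free, min_free_ratio)
```

A scheduler or monitoring script could call `check_output_volume` periodically while the `record_cmd` process runs and stop recording before the gateway host's disk fills up.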

- Overall application performance stress testing

Overall application performance stress testing involves comprehensive stress testing of all business processes and functions of the service system to assess its stability and performance in real production environments. During the testing, real user behaviors are simulated, and high-load scenarios are generated to evaluate the system's performance and stability under heavy loads, confirming that the service system meets actual user needs.

Common scenarios for overall application performance stress testing include:

- Normal service load: Simulates the system's business load under normal usage conditions, including the quantity, frequency, and types of user requests. Verifies the system's performance under normal load to ensure it meets user requirements.

- Peak load: Simulates the highest load the system may face, typically during periods when user requests reach their peak. This scenario determines if the system's scalability can handle peak-hour requests and ensures no performance bottlenecks or crashes occur.

- Burst load: Simulates exceptional situations, such as sudden surges in user requests or large-scale data processing. Evaluates the system's stability and fault tolerance under sudden increased stress, ensuring graceful handling of abnormal loads.

- Long-term load: Simulates extended system operation, often lasting several hours or more. Tests for issues like memory leaks and resource depletion after prolonged operation to ensure system stability and reliability.

- Exceptional scenarios: Simulates various system anomalies, such as network failures, server outages, and database disconnections. Tests the system's fault tolerance and recovery capabilities under these conditions to ensure correct exception handling and continued availability.
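The first four scenarios differ mainly in the shape of the load over time, which can be written down as a per-step schedule of concurrent virtual users and fed into whichever stress testing tool is used. In this sketch the peak (3x normal) and burst (10x normal for one step) multipliers are hypothetical placeholders for values derived from real business peaks. The long-term scenario reuses the normal level with much longer steps. Exceptional scenarios require fault injection rather than a load shape and are not covered.

```python
from typing import List

PEAK_FACTOR = 3    # hypothetical: peak load is 3x the normal load
BURST_FACTOR = 10  # hypothetical: a burst is 10x the normal load for one step


def load_schedule(scenario: str, base_users: int, steps: int) -> List[int]:
    """Return the target number of concurrent virtual users for each test step."""
    if steps < 2:
        raise ValueError("at least two steps are needed")
    if scenario in ("normal", "long_term"):
        # Same level; a long-term test differs only in how long each step lasts.
        return [base_users] * steps
    if scenario == "peak":
        # Ramp linearly from the normal load up to the peak at the final step.
        top = base_users * PEAK_FACTOR
        return [base_users + (top - base_users) * i // (steps - 1) for i in range(steps)]
    if scenario == "burst":
        # Normal load with a single sudden spike in the middle of the run.
        schedule = [base_users] * steps
        schedule[steps // 2] = base_users * BURST_FACTOR
        return schedule
    raise ValueError(f"unknown scenario: {scenario}")
```

For example, `load_schedule("peak", 100, 3)` ramps through 100, 200, and 300 users, and `load_schedule("burst", 100, 5)` holds 100 users except for a single 1,000-user step in the middle.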
