Data Disk Space Allocation

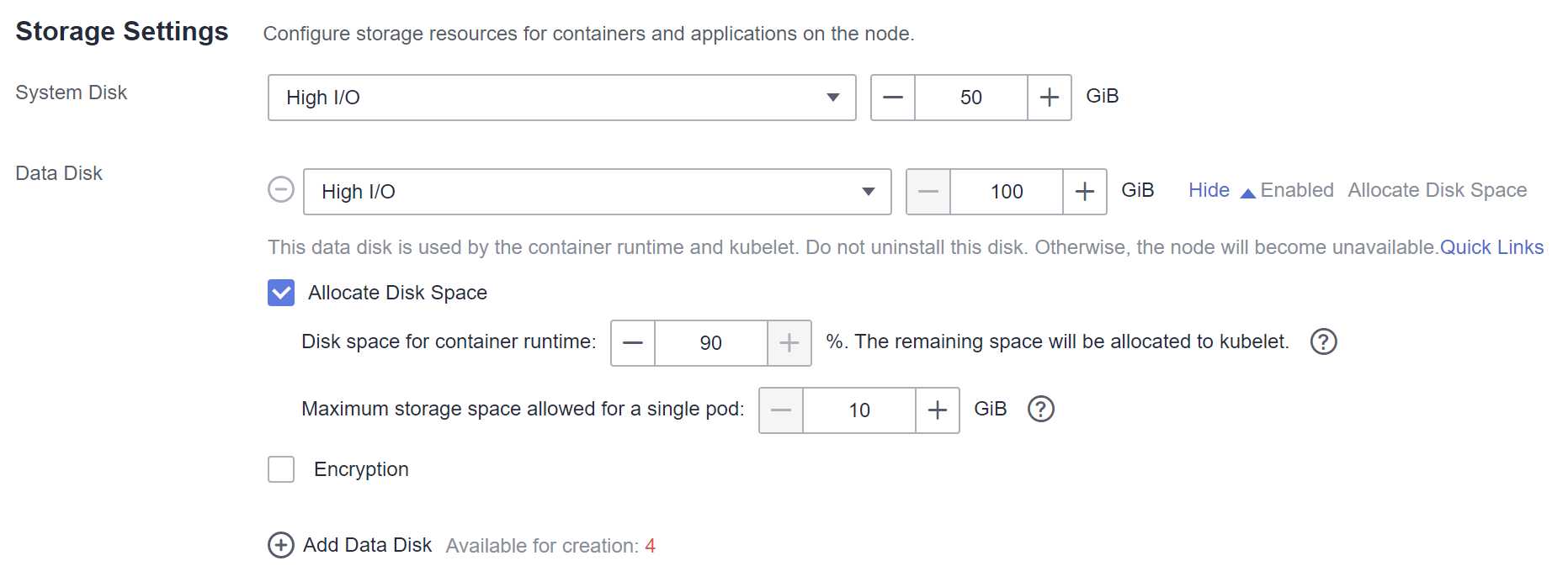

When creating a node, you need to configure data disks for the node.

A node requires one data disk whose size is greater than or equal to 100 GB. This data disk is allocated to the container runtime and kubelet.

- Container runtime space (90% by default): functions as the container runtime working directory and stores container image data and image metadata.

- kubelet space (10% by default): stores pod configuration files, secrets, and mounted storage such as emptyDir volumes.
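The default split can be worked through with a few lines of arithmetic. The sketch below is illustrative only: the function name `split_data_disk` and its parameters are hypothetical, and it assumes the 90%/10% defaults and the 100 GB minimum described above.

```python
def split_data_disk(disk_gb, runtime_pct=90):
    """Split a data disk between the container runtime and kubelet.

    runtime_pct is the share given to the container runtime (90 by default);
    kubelet receives the remainder.
    """
    if disk_gb < 100:
        raise ValueError("the data disk must be at least 100 GB")
    if not 0 < runtime_pct < 100:
        raise ValueError("runtime_pct must be between 0 and 100")
    runtime_gb = disk_gb * runtime_pct / 100
    kubelet_gb = disk_gb - runtime_gb
    return runtime_gb, kubelet_gb


runtime_gb, kubelet_gb = split_data_disk(100)
print(f"container runtime: {runtime_gb} GB, kubelet: {kubelet_gb} GB")
```

For a 100 GB disk with the defaults, this gives 90 GB to the container runtime and 10 GB to kubelet.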

The size of the container runtime space affects image downloads and how containers start and run. This section describes how the container runtime space is used so that you can configure the data disk space accordingly.

Container Runtime Space Size

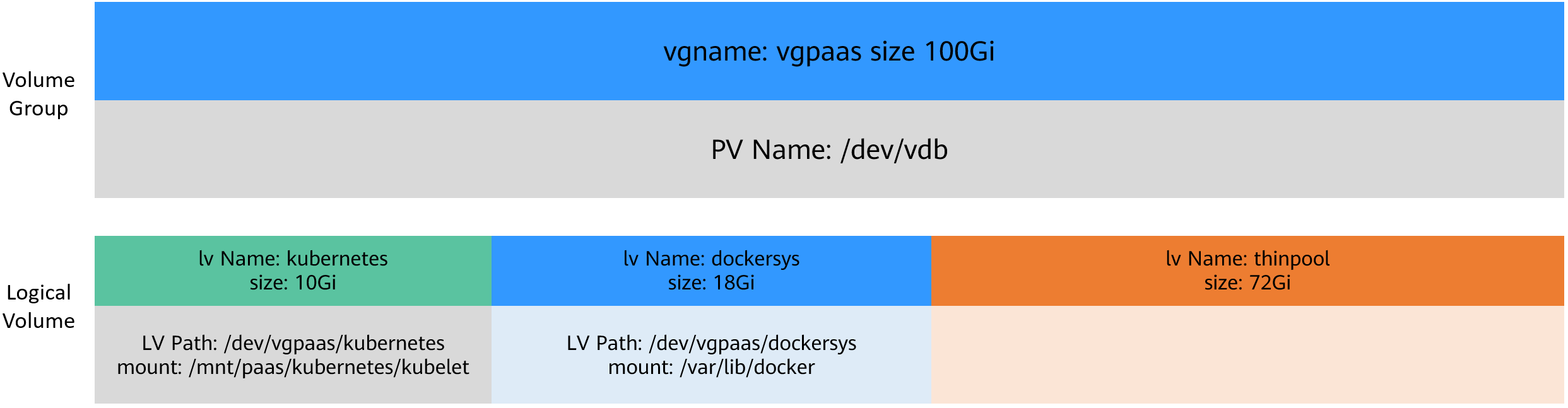

By default, a data disk (100 GB, for example) is divided as follows, depending on the container storage Rootfs:

- Rootfs (Device Mapper)

- The /var/lib/docker directory is used as the Docker working directory and occupies 20% of the container runtime space by default. (Space size of the /var/lib/docker directory = Data disk space x 90% x 20%)

- The thin pool is used to store container image data, image metadata, and container data, and occupies 80% of the container runtime space by default. (Thin pool space = Data disk space x 90% x 80%)

The thin pool is dynamically mounted. It appears in the output of the lsblk command on a node, but not in the output of the df -h command.

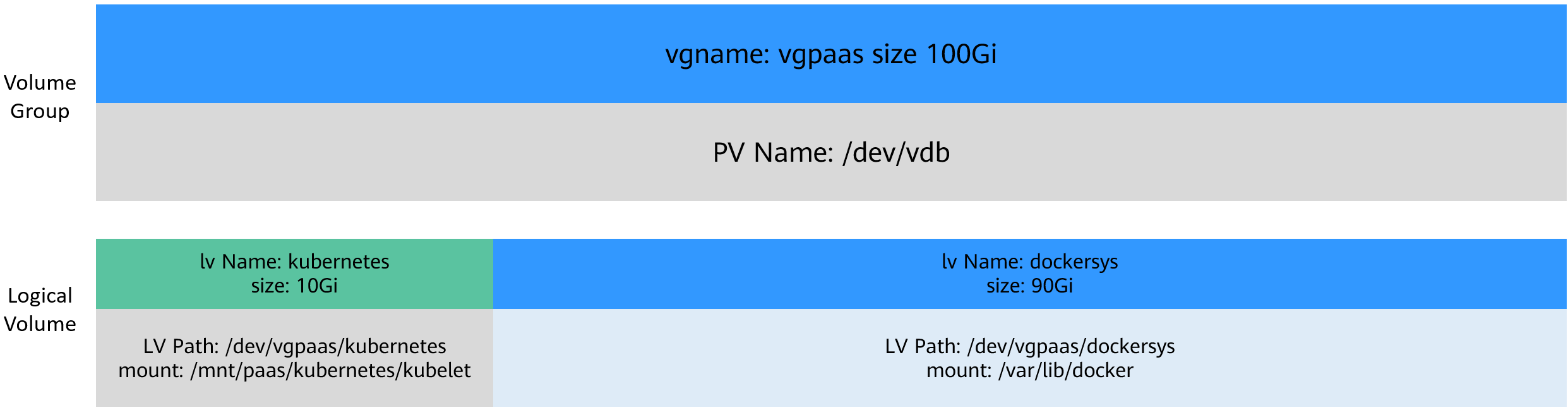

- Rootfs (OverlayFS)

There is no separate thin pool. The entire container runtime space is in the /var/lib/docker directory.
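The two layouts can be compared with a small calculation. This is a minimal sketch under the default percentages stated above; the function name `runtime_layout` and the `rootfs` strings are hypothetical labels chosen for illustration.

```python
def runtime_layout(disk_gb, rootfs, runtime_pct=90, docker_dir_pct=20):
    """Return the default container runtime layout in GB for a data disk.

    rootfs is "devicemapper" or "overlayfs" (labels used only in this sketch).
    """
    runtime_gb = disk_gb * runtime_pct / 100
    if rootfs == "overlayfs":
        # No thin pool: everything lives under /var/lib/docker.
        return {"/var/lib/docker": runtime_gb, "thin pool": 0.0}
    if rootfs == "devicemapper":
        docker_dir_gb = runtime_gb * docker_dir_pct / 100
        return {
            "/var/lib/docker": docker_dir_gb,
            "thin pool": runtime_gb - docker_dir_gb,
        }
    raise ValueError(f"unknown rootfs: {rootfs!r}")


for rootfs in ("devicemapper", "overlayfs"):
    print(rootfs, runtime_layout(100, rootfs))
```

With a 100 GB data disk, Device Mapper yields 18 GB for /var/lib/docker and 72 GB for the thin pool, while OverlayFS places all 90 GB under /var/lib/docker.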

Using Rootfs for Container Storage in CCE

- CCE cluster: EulerOS 2.5 nodes use Device Mapper, and Ubuntu 18.04 and EulerOS 2.9 nodes use OverlayFS. CentOS 7.6 nodes use Device Mapper in clusters earlier than v1.21 and OverlayFS in clusters of v1.21 and later. EulerOS 2.8 nodes use Device Mapper in clusters earlier than v1.19.16-r2 and OverlayFS in clusters of v1.19.16-r2 and later.

- When Device Mapper is used, the limit on the available data space of a single container (basesize) is enabled and defaults to 10 GB. When OverlayFS is used, basesize is not enabled by default. In clusters of recent versions (1.19.16, 1.21.3, 1.23.3, and later), EulerOS 2.9 supports basesize if the Docker engine is used.

- When Docker is used on EulerOS 2.9 nodes, basesize does not take effect if CAP_SYS_RESOURCE or privileged is configured for a container.

- CCE Turbo cluster: BMSs use Device Mapper, and ECSs (CentOS 7.6 and Ubuntu 18.04) use OverlayFS.

You can log in to the node and run the docker info command to view the storage engine type.

```
# docker info
Containers: 20
Running: 17
Paused: 0
Stopped: 3
Images: 16
Server Version: 18.09.0
Storage Driver: devicemapper
```
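If you need to check many nodes, the Storage Driver line can be extracted from the command output programmatically. The helper below is a hypothetical sketch that only parses text in the `key: value` shape shown above; it does not call Docker itself.

```python
def storage_driver(docker_info_text):
    """Return the value of the 'Storage Driver' line, or None if it is absent."""
    for line in docker_info_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key.strip() == "Storage Driver":
            return value.strip()
    return None


# Sample text copied from the output above.
sample = """Containers: 20
Running: 17
Paused: 0
Stopped: 3
Images: 16
Server Version: 18.09.0
Storage Driver: devicemapper"""

print(storage_driver(sample))
```

On a node, the input text could come from `subprocess.run(["docker", "info"], capture_output=True, text=True).stdout`. A value of devicemapper indicates Device Mapper; OverlayFS nodes typically report overlay or overlay2.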

Relationship Between the Container Runtime Space and the Number of Containers

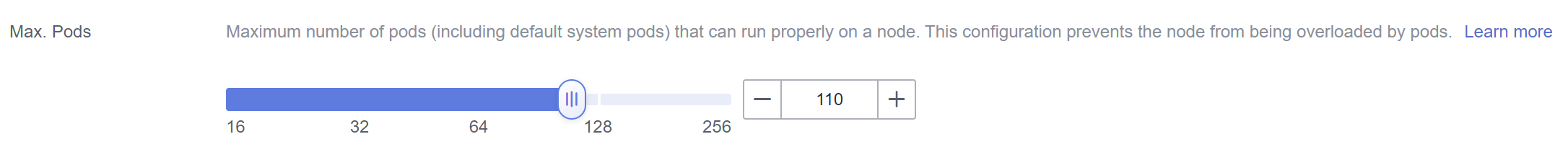

The number of pods and the space configured for each container determine whether the container runtime space of a node is sufficient.

The container runtime space should be greater than the total disk space used by containers. Formula: Container runtime space > Number of containers x Available data space for a single container (basesize)

For nodes that support basesize, when Device Mapper is used, you can limit the size of the /home directory of a single container (to 10 GB by default), but all containers on the node still share the thin pool of the node for storage. They are not completely isolated. When the total thin pool space used by some containers reaches the upper limit, other containers cannot run properly.

In addition, after a file is deleted from the /home directory of a container, the thin pool space occupied by the file is not released immediately. As a result, even if basesize is set to 10 GB, the thin pool space occupied by a container keeps growing toward 10 GB as files are created in it. The space freed by file deletion is eventually reused, but only after a delay. If the number of containers on the node multiplied by basesize is greater than the thin pool space of the node, the thin pool space may be used up.
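The condition in the last sentence can be checked directly. The following sketch assumes whole-GB values and the 10 GB default basesize; the function names are hypothetical, and the 72 GB figure is the default thin pool of a 100 GB data disk (100 GB x 90% x 80%).

```python
def max_containers(space_gb, basesize_gb=10):
    """Largest container count n that satisfies space > n * basesize."""
    n = space_gb // basesize_gb
    if n * basesize_gb >= space_gb:
        n -= 1  # the inequality in the formula is strict
    return max(n, 0)


def thin_pool_may_run_out(containers, thin_pool_gb, basesize_gb=10):
    """True if the containers could together fill the thin pool."""
    return containers * basesize_gb >= thin_pool_gb


thin_pool_gb = 72  # default thin pool of a 100 GB data disk
print(max_containers(thin_pool_gb))            # containers that always fit
print(thin_pool_may_run_out(8, thin_pool_gb))  # 8 x 10 GB exceeds 72 GB
```

This is a worst-case bound: it assumes every container eventually occupies its full basesize, which the delayed release of deleted files makes more likely than actual file sizes suggest.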

Garbage Collection Policies for Container Images

When the container runtime space is insufficient, image garbage collection is triggered.

The image garbage collection policy takes two factors into consideration: HighThresholdPercent and LowThresholdPercent. Disk usage above the high threshold (default: 85%) triggers garbage collection. Garbage collection then deletes the least recently used images until disk usage falls to the low threshold (default: 80%).
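The threshold behavior can be illustrated with a simplified model. This is a sketch of the policy as described here, not the actual kubelet implementation: the function name, the tuple layout, and the sample images are hypothetical, and it ignores details such as images still in use by containers.

```python
def collect_images(capacity_gb, used_gb, images, high_pct=85, low_pct=80):
    """Simulate image garbage collection.

    images is a list of (name, size_gb, last_used) tuples, where a smaller
    last_used value means the image was used longer ago.
    Returns (names of deleted images, disk usage in GB afterwards).
    """
    # Nothing happens unless usage is above the high threshold.
    if used_gb * 100 <= capacity_gb * high_pct:
        return [], used_gb
    target_gb = capacity_gb * low_pct / 100
    deleted = []
    # Delete least recently used images first until the low threshold is met.
    for name, size_gb, _last_used in sorted(images, key=lambda img: img[2]):
        if used_gb <= target_gb:
            break
        used_gb -= size_gb
        deleted.append(name)
    return deleted, used_gb


images = [("app:v3", 5, 100), ("app:v2", 4, 50), ("app:v1", 6, 10)]
print(collect_images(capacity_gb=90, used_gb=80, images=images))
```

In this example, 80 GB of 90 GB is above the 85% threshold (76.5 GB), so the two least recently used images are removed, bringing usage to 70 GB, which is below the 80% threshold (72 GB).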

Recommended Configuration for the Container Runtime Space

- The container runtime space should be greater than the total disk space used by containers. Formula: Container runtime space > Number of containers x Available data space for a single container (basesize)

- You are advised to create and delete files of containerized services in local storage volumes (such as emptyDir and hostPath volumes) or cloud storage directories mounted to the containers. In this way, the thin pool space is not occupied. emptyDir volumes occupy the kubelet space. Therefore, properly plan the size of the kubelet space.

- On nodes that use OverlayFS (CentOS 7.6 and Ubuntu 18.04 ECS nodes in CCE Turbo clusters, CentOS 7.6 nodes in clusters of v1.19.16 or later, and Ubuntu 18.04 nodes in CCE clusters), the disk space occupied by files that are created and then deleted in containers is released immediately. You can deploy services that frequently create and delete files on these nodes.
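The first recommendation can be turned around to estimate a data disk size from a planned number of containers. The sketch below assumes a Device Mapper node with the default percentages, so that the thin pool (data disk x 90% x 80%) must exceed the number of containers multiplied by basesize. The function name is hypothetical, and rounding up to whole GB is a simplification made for this example.

```python
def min_data_disk_gb(containers, basesize_gb=10, runtime_pct=90,
                     thin_pool_pct=80, floor_gb=100):
    """Smallest whole-GB data disk whose thin pool exceeds containers * basesize."""
    needed_pool_gb = containers * basesize_gb
    # Solve disk * runtime_pct/100 * thin_pool_pct/100 > needed_pool_gb
    # using integer arithmetic to avoid floating-point rounding.
    disk_gb = needed_pool_gb * 10000 // (runtime_pct * thin_pool_pct) + 1
    # A data disk is never smaller than the 100 GB minimum.
    return max(disk_gb, floor_gb)


for n in (7, 20, 36):
    print(n, "containers ->", min_data_disk_gb(n), "GB")
```

For 20 containers with a 10 GB basesize, the thin pool must exceed 200 GB, which requires a data disk of at least 278 GB under these assumptions. Workloads that write to emptyDir volumes also need kubelet space, which this estimate does not cover.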