Selecting a Network Model

CCE uses proprietary, high-performance container networking add-ons to support the tunnel, Cloud Native 2.0, and VPC network models.

After a cluster is created, the network model cannot be changed.

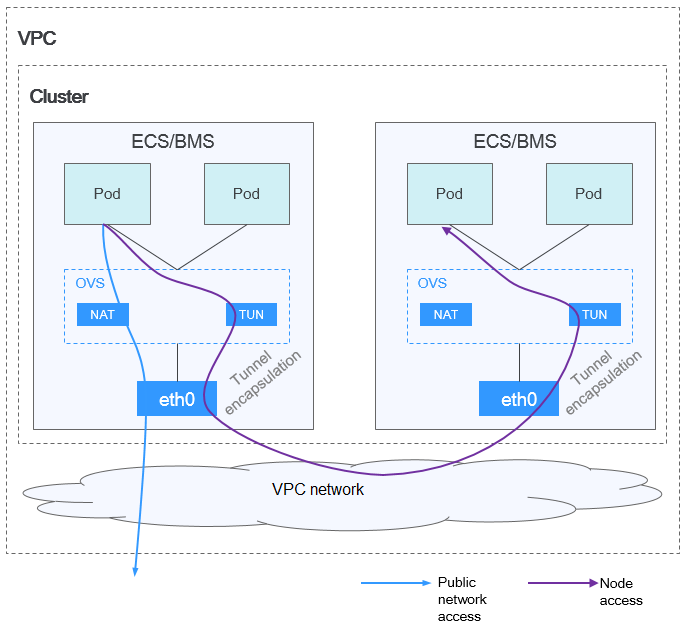

- Tunnel network: The container network is an overlay tunnel network built on top of a VPC network using VXLAN. This model suits scenarios without high performance requirements. VXLAN encapsulates Ethernet frames in UDP packets for tunnel transmission. Although encapsulation costs some performance, it provides better interoperability and compatibility with advanced features (such as network policy-based isolation), meeting the requirements of most applications.

Figure 1 Container tunnel network

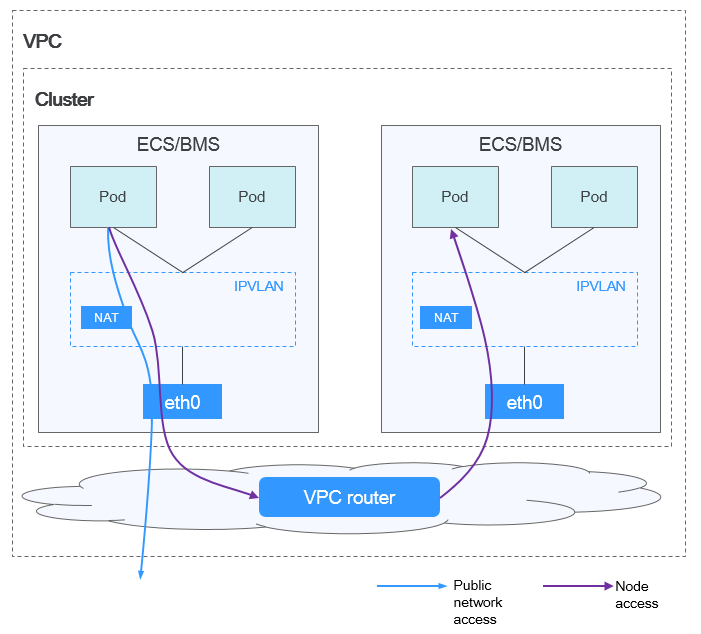
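
To make the encapsulation cost concrete, the following Python sketch builds the 8-byte VXLAN header defined in RFC 7348 and computes the header overhead for VXLAN over IPv4 without an outer VLAN tag. The header layout and sizes come from the RFC. Which VXLAN options, ports, or MTU settings CCE actually uses is not stated in this document, so treat the numbers as illustrative.

```python
import struct

# Header sizes in bytes for VXLAN over IPv4 without an outer VLAN tag (RFC 7348).
OUTER_ETHERNET = 14
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

# Bytes added on the wire to every encapsulated inner Ethernet frame.
WIRE_OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER  # 50


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: a flags byte with the I bit set,
    24 reserved bits, a 24-bit VNI, and 8 reserved bits."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag: the VNI field is valid
    return struct.pack("!B3xI", flags, vni << 8)


def inner_ip_mtu(underlay_ip_mtu: int = 1500) -> int:
    """Largest inner IP packet that fits in one underlay IP packet.

    The underlay IP payload must carry the outer IPv4, UDP, and VXLAN headers
    plus the inner Ethernet header in front of the inner IP packet.
    """
    return underlay_ip_mtu - (OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET)


def overhead_ratio(inner_frame_len: int) -> float:
    """Fraction of wire bytes spent on encapsulation headers for one inner frame."""
    return WIRE_OVERHEAD / (inner_frame_len + WIRE_OVERHEAD)


if __name__ == "__main__":
    print(vxlan_header(42).hex())  # 0800000000002a00
    print(inner_ip_mtu(1500))      # 1450
```

Header bytes are only one part of the cost, but they show why small packets suffer most: a 64-byte inner frame spends roughly 44% of its wire bytes on encapsulation, while a full-size 1514-byte frame spends about 3%.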

- VPC network: The container network uses VPC routing to integrate with the underlying network. This model suits performance-intensive scenarios. Each node is assigned a container CIDR block of a fixed size, and the maximum number of nodes in a cluster depends on the VPC route quota. Because there is no tunnel encapsulation overhead, VPC networks outperform container tunnel networks. In addition, because VPC routing includes routes to node IP addresses and container CIDR blocks, pods in the cluster can be accessed directly from outside the cluster.

Figure 2 VPC network

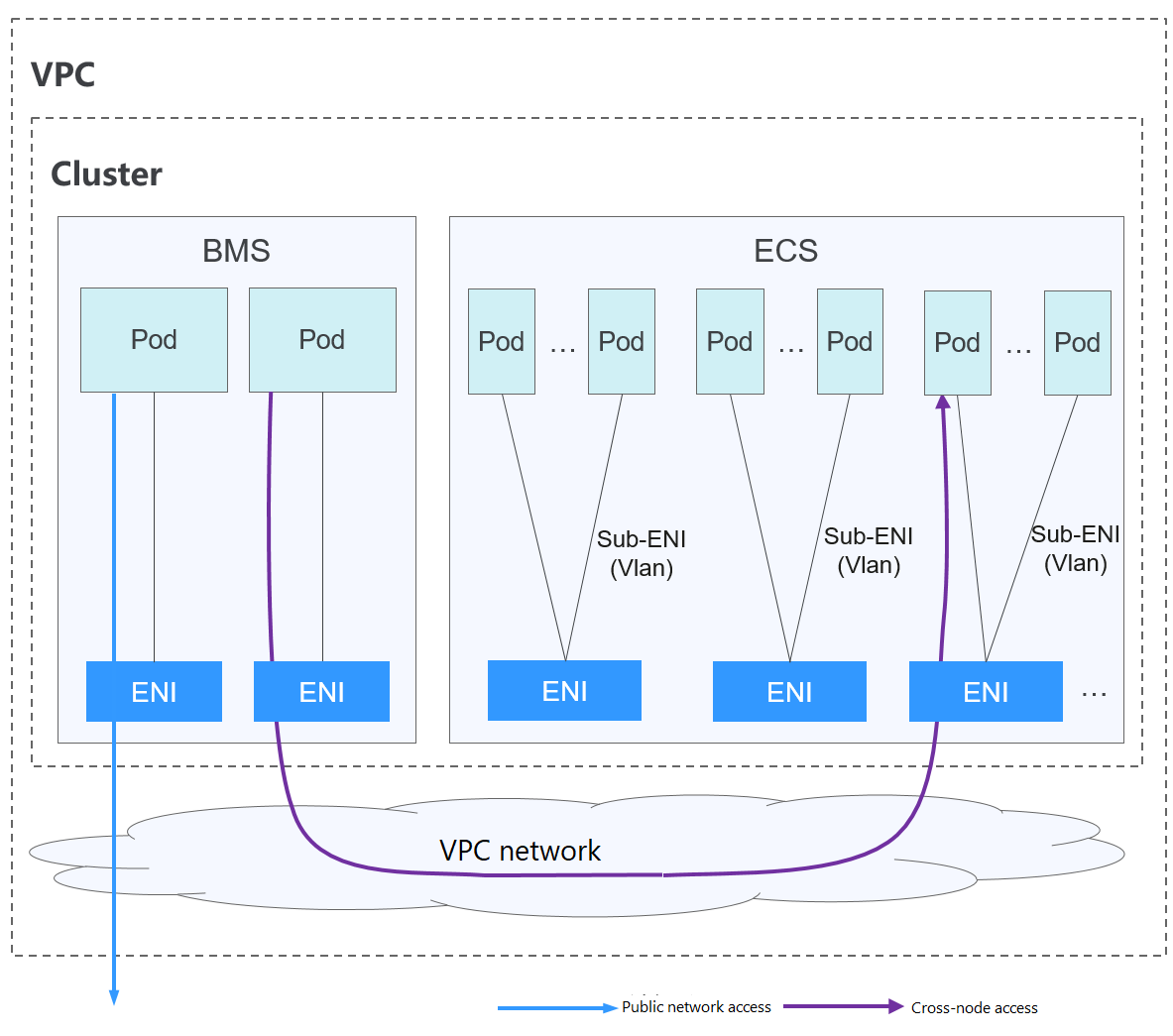
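
The following Python sketch models how VPC routing reaches pods without a tunnel: a container CIDR block is split into fixed-size per-node blocks, one route per node is added to a route table, and a destination pod IP is resolved by longest-prefix match. All CIDR blocks, node IP addresses, and the dictionary-based route table are hypothetical; this is a conceptual model, not how CCE or the VPC service is implemented.

```python
import ipaddress
from typing import Dict, List, Optional

Network = ipaddress.IPv4Network


def build_node_routes(container_cidr: str, node_prefix: int,
                      node_ips: List[str]) -> Dict[Network, str]:
    """Give each node a fixed-size block of the container CIDR and return
    one route per node: container block -> node IP (the next hop)."""
    blocks = ipaddress.IPv4Network(container_cidr).subnets(new_prefix=node_prefix)
    routes = {block: node_ip for block, node_ip in zip(blocks, node_ips)}
    if len(routes) < len(node_ips):
        raise ValueError("container CIDR has too few blocks for all nodes")
    return routes


def next_hop(dst_ip: str, routes: Dict[Network, str]) -> Optional[str]:
    """Resolve a destination by longest-prefix match, as a router would."""
    addr = ipaddress.IPv4Address(dst_ip)
    matches = [net for net in routes if addr in net]
    if not matches:
        return None
    return routes[max(matches, key=lambda net: net.prefixlen)]


if __name__ == "__main__":
    # Hypothetical values: three nodes, one /24 container block per node.
    routes = build_node_routes("172.16.0.0/16", 24,
                               ["192.168.0.10", "192.168.0.11", "192.168.0.12"])
    routes[ipaddress.IPv4Network("192.168.0.0/24")] = "local"  # node subnet
    print(next_hop("172.16.1.25", routes))  # 192.168.0.11
```

Because every node consumes one route in this model, the route quota directly caps how many nodes the cluster can hold.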

- Cloud Native Network 2.0: The container network deeply integrates the elastic network interface (ENI) capability of VPC, allocates container addresses from the VPC CIDR block, and supports passthrough networking from a load balancer directly to containers.

Figure 3 Cloud Native 2.0 network
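
In Cloud Native Network 2.0, pods draw addresses from the VPC subnet rather than from a separate container CIDR block. The Python sketch below models that with a single hypothetical address pool shared by nodes and pods. The `reserved` parameter stands in for addresses the platform keeps for itself; the actual number of reserved addresses in a VPC subnet is an assumption here, as is the `SubnetPool` class itself.

```python
import ipaddress
from collections import deque
from typing import Dict


class SubnetPool:
    """Hypothetical allocator: nodes and pods share one VPC subnet."""

    def __init__(self, cidr: str, reserved: int = 3):
        hosts = list(ipaddress.ip_network(cidr).hosts())
        # Skip the first `reserved` host addresses (assumed platform-reserved).
        self._free = deque(hosts[reserved:])
        self.owners: Dict[str, str] = {}

    def allocate(self, owner: str) -> str:
        """Hand the next free subnet address to a node or a pod."""
        if not self._free:
            raise RuntimeError("VPC subnet exhausted: no address for " + owner)
        ip = str(self._free.popleft())
        self.owners[ip] = owner
        return ip

    def release(self, ip: str) -> None:
        """Return an address to the pool (raises KeyError if not allocated)."""
        self.owners.pop(ip)
        self._free.append(ipaddress.ip_address(ip))

    def remaining(self) -> int:
        return len(self._free)


if __name__ == "__main__":
    pool = SubnetPool("192.168.1.0/28", reserved=1)  # hypothetical subnet
    print(pool.allocate("node-1"))  # 192.168.1.2
    print(pool.allocate("pod-a"))   # 192.168.1.3
    print(pool.remaining())         # 11
```

Nodes and pods end up in one flat address space, which is why a load balancer can send traffic straight to a pod IP address, and why the subnet size bounds how many containers the cluster can run.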

The following table lists the differences between the network models.

| Dimension | Tunnel Network | VPC Network | Cloud Native Network 2.0 |
|---|---|---|---|
| Application scenarios | | | |
| Core technology | OVS | IPvlan and VPC route | VPC ENI/sub-ENI |
| Applicable clusters | CCE standard cluster | CCE standard cluster | CCE Turbo cluster |
| Container network isolation | Kubernetes native NetworkPolicy for pods | No | Pods support security group isolation. |
| Interconnecting pods to a load balancer | Interconnected through a NodePort | Interconnected through a NodePort | Directly interconnected when using a dedicated load balancer; interconnected through a NodePort when using a shared load balancer |
| Managing container IP addresses | | | Container CIDR blocks divided from a VPC subnet (no separate container CIDR blocks need to be configured) |
| Network performance | Performance loss due to VXLAN encapsulation | No tunnel encapsulation; cross-node traffic is forwarded through VPC routers. Performance is comparable to that of the host network, with some loss caused by NAT. | Container network integrated with the VPC network, eliminating performance loss |
| Networking scale | A maximum of 2000 nodes is supported. | Suitable for small- and medium-scale networks due to VPC route table limits; no more than 1000 nodes is recommended. Each node added to the cluster adds a route to the VPC route tables, so evaluate the scale limit imposed by the route tables before creating the cluster. | A maximum of 2000 nodes is supported. Container IP addresses are assigned from VPC CIDR blocks, so the number of containers is limited by those blocks. Evaluate the cluster's scale limits before creating it. |
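
For the VPC network model, the networking-scale row reduces to a simple bound: each node needs one fixed-size container CIDR block and one VPC route, and no more than 1000 nodes is recommended. The Python sketch below takes the smallest of those three limits. The route quota and the number of routes already in use are inputs you would look up for your own VPC; the values in the example are hypothetical.

```python
import ipaddress
from typing import Tuple

RECOMMENDED_MAX_NODES = 1000  # recommendation for the VPC network model


def vpc_model_capacity(container_cidr: str, node_prefix: int,
                       route_quota: int, routes_in_use: int) -> Tuple[int, int]:
    """Return (max_nodes, addresses_per_node) for a VPC-network-model cluster.

    Each node consumes one container CIDR block and one VPC route, so the node
    count is bounded by whichever runs out first, capped at the recommendation.
    addresses_per_node counts every address in a node block, not only those
    usable by pods.
    """
    net = ipaddress.ip_network(container_cidr)
    if not net.prefixlen <= node_prefix <= net.max_prefixlen:
        raise ValueError("node prefix must lie within the container CIDR")
    node_blocks = 2 ** (node_prefix - net.prefixlen)
    free_routes = max(route_quota - routes_in_use, 0)
    addresses_per_node = 2 ** (net.max_prefixlen - node_prefix)
    return min(node_blocks, free_routes, RECOMMENDED_MAX_NODES), addresses_per_node


if __name__ == "__main__":
    # Hypothetical inputs: /16 container CIDR, /24 per node, 200-route quota, 10 used.
    print(vpc_model_capacity("172.16.0.0/16", 24, 200, 10))  # (190, 256)
```

A longer `node_prefix` yields more node blocks but fewer addresses per node; this trade-off is why the scale should be evaluated before the cluster is created.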

- The scale of a cluster that uses the VPC network model is limited by the number of custom routes allowed in the VPC. Estimate the number of required nodes before creating the cluster.

- By default, the VPC network model supports direct communication between containers and hosts in the same VPC. If a VPC peering connection is configured between this VPC and another VPC, containers can communicate directly with hosts in the peer VPC. In hybrid networking scenarios such as Direct Connect and VPN, containers can also communicate with hosts on the peer end, provided the network is properly planned.
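
The guidance above does not spell out what proper planning involves. One common prerequisite for routing between a cluster and a peer VPC or an on-premises network is that the address ranges involved do not overlap. The Python sketch below checks a hypothetical address plan for overlapping CIDR blocks; it does not check routes, security groups, or any CCE-specific requirement.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def find_overlaps(plan: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of named CIDR blocks in `plan` that overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [(a, b)
            for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
            if net_a.version == net_b.version and net_a.overlaps(net_b)]


if __name__ == "__main__":
    # Hypothetical address plan for a cluster using the VPC network model.
    plan = {
        "cluster VPC": "192.168.0.0/16",
        "container CIDR": "172.16.0.0/16",
        "peer VPC": "10.0.0.0/16",
        "on-premises (VPN)": "172.16.8.0/22",
    }
    print(find_overlaps(plan))  # [('container CIDR', 'on-premises (VPN)')]
```

In this example the on-premises range falls inside the container CIDR block, so traffic to those addresses would be ambiguous; one of the two ranges would need to change before the networks are connected.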
