Cilium Overview

Why Cilium?

Cilium is a high-performance, high-reliability solution for securing network connectivity between containers. It is built on a Linux kernel technology, the extended Berkeley Packet Filter (eBPF). Cilium handles transport layer protocols such as TCP and UDP as well as application layer protocols such as HTTP, and provides security features such as application-layer access control and support for service meshes. Cilium also supports Kubernetes network policies and provides global networking and service discovery to help administrators manage and deploy cloud-native applications.

Cilium uses eBPF to monitor network traffic inside the kernel in real time, which enables efficient, secure packet exchange. eBPF shines in many scenarios such as network functions virtualization, container networks, and edge computing. It helps enterprises improve network performance and security and provides better infrastructure support for cloud-native applications.

Basic Functions

- Network connectivity: Cilium allocates a unique IP address to each container for communications between containers. Cilium also supports multiple network protocols.

- Network intrusion detection: Cilium can integrate with third-party network intrusion detection services, such as Snort, to inspect network traffic.

- Automatic security policy management: Cilium automatically creates security policies for each container using the Kubernetes custom resource definition (CRD) mechanism to ensure container security.

- Load balancing: Cilium provides multiple load balancing algorithms to route traffic across containers.

- Service discovery: Cilium uses the Kubernetes service discovery mechanism to automatically detect services running in containers and register them with the Kubernetes API so that other containers can access them.
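To make the application-layer access control mentioned above more concrete, the following is a minimal, hand-written sketch of a CiliumNetworkPolicy, the CRD that upstream Cilium uses for its policies. The policy name, labels, port, and path are hypothetical, and the policies that the platform creates automatically may look different.

```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: allow-frontend-get        # hypothetical policy name
  namespace: default
spec:
  # Pods that the policy protects (hypothetical label).
  endpointSelector:
    matchLabels:
      app: backend
  ingress:
    # Only pods labeled app=frontend may connect.
    - fromEndpoints:
        - matchLabels:
            app: frontend
      toPorts:
        - ports:
            - port: "8080"        # hypothetical service port
              protocol: TCP
          # Layer 7 rule: only HTTP GET requests under /api/ are allowed.
          rules:
            http:
              - method: GET
                path: "/api/.*"
```

With a policy like this applied, a POST request from a frontend pod, or any request from an unlabeled pod, would be rejected, while matching GET requests pass.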

Constraints

Only new on-premises clusters support Cilium. Existing on-premises clusters do not support Cilium even after they are upgraded.

Cilium Underlay

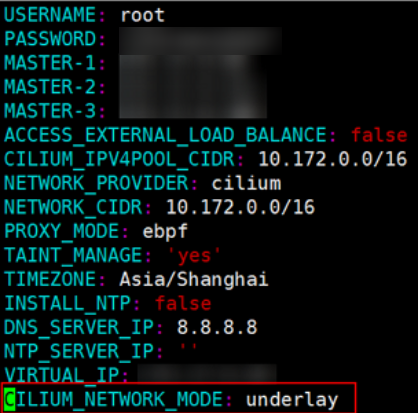

Add the following setting to the on-premises cluster configuration file cluster-[Cluster name].yaml:

CILIUM_NETWORK_MODE: underlay

Example:
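A minimal sketch, assuming a hypothetical cluster named demo, so that the file is cluster-demo.yaml. Any other keys that the file contains are omitted here.

```yaml
# cluster-demo.yaml (hypothetical file name for a cluster called "demo")
# Other cluster settings are omitted from this sketch.

# Run Cilium in underlay mode.
CILIUM_NETWORK_MODE: underlay
```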

Advantages

- In underlay mode, Cilium hands all packets that are not addressed to another local container to the routing system of the Linux kernel. The packets are then routed as if a local process had sent them, which avoids packet encapsulation and conversion. This mode is well suited to heavy traffic.

- ipv4-native-routing-cidr is configured automatically, and Cilium automatically enables IP forwarding in the Linux kernel.
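For orientation, in upstream Cilium the behavior described above corresponds to agent options in the cilium-config ConfigMap, roughly as sketched below. This assumes Cilium 1.14 or later, where the routing-mode option exists. The CIDR is a placeholder, and on this platform the values are set for you, so the sketch is for reference only.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cilium-config
  namespace: kube-system
data:
  # Use the kernel routing table instead of tunnel encapsulation.
  routing-mode: "native"
  # Destinations inside this CIDR are routed natively, without masquerading.
  # Placeholder value; it normally covers the container CIDR block.
  ipv4-native-routing-cidr: "10.0.0.0/16"
```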

Dependency

The network that connects the hosts running Cilium must be able to forward traffic using the IP addresses allocated to pods or other workloads. The source and destination address check must be disabled on each node, and the security group of each node must allow traffic to and from the container CIDR block over the ports and protocols that the node uses.

Enabling BGP for Cilium

Use Cilium BGP Control Plane to manage the cluster network. For details, see Cilium BGP Control Plane.
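As an illustration, a peering configuration for the upstream Cilium BGP Control Plane can look like the sketch below. It assumes a Cilium version that provides the CiliumBGPPeeringPolicy CRD (newer releases use CiliumBGPClusterConfig instead). The policy name, node label, ASNs, and peer address are placeholders.

```yaml
apiVersion: cilium.io/v2alpha1
kind: CiliumBGPPeeringPolicy
metadata:
  name: rack0-peering             # hypothetical policy name
spec:
  # Nodes that should run a BGP speaker (hypothetical label).
  nodeSelector:
    matchLabels:
      bgp-policy: rack0
  virtualRouters:
    - localASN: 64512             # placeholder private ASN
      # Advertise each node's pod CIDR to the peer.
      exportPodCIDR: true
      neighbors:
        - peerAddress: "192.0.2.1/32"   # placeholder router address
          peerASN: 64512
```

Advertising pod CIDRs over BGP lets the upstream routers learn how to reach pod IP addresses, which fits the forwarding requirement of underlay mode.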
