Overview

On-premises clusters are Kubernetes clusters that are provisioned by UCS but run in your on-premises data center. You only need to prepare the required physical resources. The cloud platform installs the Kubernetes software and connects your clusters to UCS.

On-premises clusters (BMSs)

On-premises clusters can be deployed on bare metal servers (BMSs) to run workloads, helping you modernize applications.

Deploying applications on BMSs speeds up computing, reduces network latency, cuts virtualization overhead, and improves resource utilization. On BMSs, containerized applications can directly access hardware such as GPUs, NPUs, and local disks for optimized performance.

On-premises clusters (VMs)

On-premises clusters are compatible with multiple underlying infrastructures. They can be deployed on virtualized platforms such as VMware and OpenStack. The container network can be connected to the underlying network, and the Container Storage Interface (CSI) can be used to access multiple underlying storage services (such as VMware vSphere) for persistent storage.

Figure 1 shows the on-premises cluster management process.

Advantages

Compatible with various infrastructures and oriented to diverse service scenarios

- On-premises clusters can be deployed on BMSs to reduce virtualization overhead and improve performance.

- On-premises clusters can be deployed on mainstream virtualized platforms such as OpenStack and VMware.

- On-premises clusters are compatible with multiple OSs, including Huawei Cloud EulerOS, Ubuntu, Kylin, UOS, CentOS, and FusionOS.

- On-premises clusters use KubeVirt to deploy, run, and manage VMs.

- On-premises clusters support mainstream high-speed hardware. Containers can use GPUs, NPUs, and InfiniBand network interfaces to achieve high performance.

- On-premises clusters support multiple heterogeneous processors, including x86 and Kunpeng.
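As a rough illustration of how a container consumes such hardware, the sketch below builds a standard Kubernetes Pod manifest that requests whole GPUs. It assumes the NVIDIA device plugin, which advertises GPUs as the extended resource `nvidia.com/gpu`; NPUs and other accelerators are exposed under different, vendor-specific resource names, and UCS may use its own conventions. The function name, Pod name, and image are hypothetical.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a generic Kubernetes Pod manifest that requests whole GPUs.

    Assumption: GPUs are advertised by the NVIDIA device plugin as the
    extended resource "nvidia.com/gpu". Other accelerators use other names.
    """
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {
                        # For extended resources, only limits is needed:
                        # Kubernetes uses the limit as the request by default.
                        "limits": {"nvidia.com/gpu": gpus},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical Pod name and image, for illustration only.
    manifest = gpu_pod_manifest("train-job", "example.com/ai/train:1.0", 1)
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output can be saved to a file and applied with `kubectl apply -f`.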

Cloud-native, secure container network

- Flexible application access: Ingresses support popular industry load balancers.

- Network QoS: Refined traffic control prevents network congestion.

- Network policies control and isolate network access.
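Network policies are standard Kubernetes `NetworkPolicy` objects. The following minimal sketch, using hypothetical names, labels, and port, generates a policy that allows only Pods labeled `app=web` to reach Pods labeled `app=api` on TCP port 8080 within the same namespace. Once a Pod is selected by a policy of type `Ingress`, inbound traffic that no policy allows is denied. Enforcement depends on the cluster's network plugin supporting network policies.

```python
import json


def allow_from_app_policy(name: str, namespace: str, target_app: str,
                          allowed_app: str, port: int) -> dict:
    """Build a NetworkPolicy that admits traffic to target_app Pods
    only from allowed_app Pods in the same namespace, on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods that this policy isolates.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # A podSelector without a namespaceSelector matches
                    # Pods in the policy's own namespace.
                    "from": [{"podSelector": {"matchLabels": {"app": allowed_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names and labels, for illustration only.
    policy = allow_from_app_policy("allow-web-to-api", "demo", "api", "web", 8080)
    print(json.dumps(policy, indent=2))
```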

Fully compatible with the community ecosystem and Huawei Cloud software

- The native Kubernetes PersistentVolumeClaim (PVC) mechanism provides persistent storage for stateful applications, so data persists when Pods restart or are rescheduled.

- Comprehensive support for container storage standards such as CSI enables diverse storage solutions.
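A stateful application requests persistent storage through a PVC, and the CSI driver behind the referenced storage class provisions the volume. The sketch below builds a generic PVC manifest. The storage class name `csi-example` and the claim name are placeholder assumptions; list the classes actually available in your cluster with `kubectl get storageclass` before using it.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str) -> dict:
    """Build a generic PersistentVolumeClaim manifest.

    size uses Kubernetes quantity notation, for example "10Gi".
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume can be mounted read-write by one node.
            "accessModes": ["ReadWriteOnce"],
            # Hypothetical class; replace with one from `kubectl get storageclass`.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("app-data", "csi-example", "10Gi"), indent=2))
```

A Pod then mounts the claim by name through a `persistentVolumeClaim` volume, so the data outlives any single Pod.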

Diverse, refined O&M capabilities

- Prometheus ecosystem: Standard cloud-native monitoring and log collection APIs support custom extensions and cover metrics, logs, alarms, and traces for clusters, containers, and applications.

- Online O&M: Routine system inspections can be performed online. O&M personnel in the data center (IDC) can quickly access the system to rectify faults.

Network Access Methods

UCS connects clusters through a cluster network agent, as shown in Figure 2. You do not need to open any inbound ports on the firewall. Only the cluster agent program is required; it establishes outbound sessions with UCS.

- Public network access: flexibility, cost-effectiveness, and easy access

- Private network access: high speed, low latency, stability, and security

Offline Mode

UCS on-premises clusters can run in offline mode (also called CCE Agile), disconnected from Huawei Cloud and managed by a local control plane. This mode suits scenarios with strict security requirements or where regulatory and compliance requirements mandate data localization.

The local control plane provides:

- Local lifecycle management for clusters, add-ons, and templates.

- A local console that offers an experience similar to the public cloud console.

- Local image repositories for storing and pulling container images and artifacts.

- A Keycloak-based local system authentication and authorization solution that offers identity authentication, user management, fine-grained authorization, and other functions.

- An O&M center for local cloud-native observability. It tracks applications and resources in real time, collects metrics, logs, and events to analyze application health, and generates alarms and comprehensive, easy-to-understand multidimensional data.

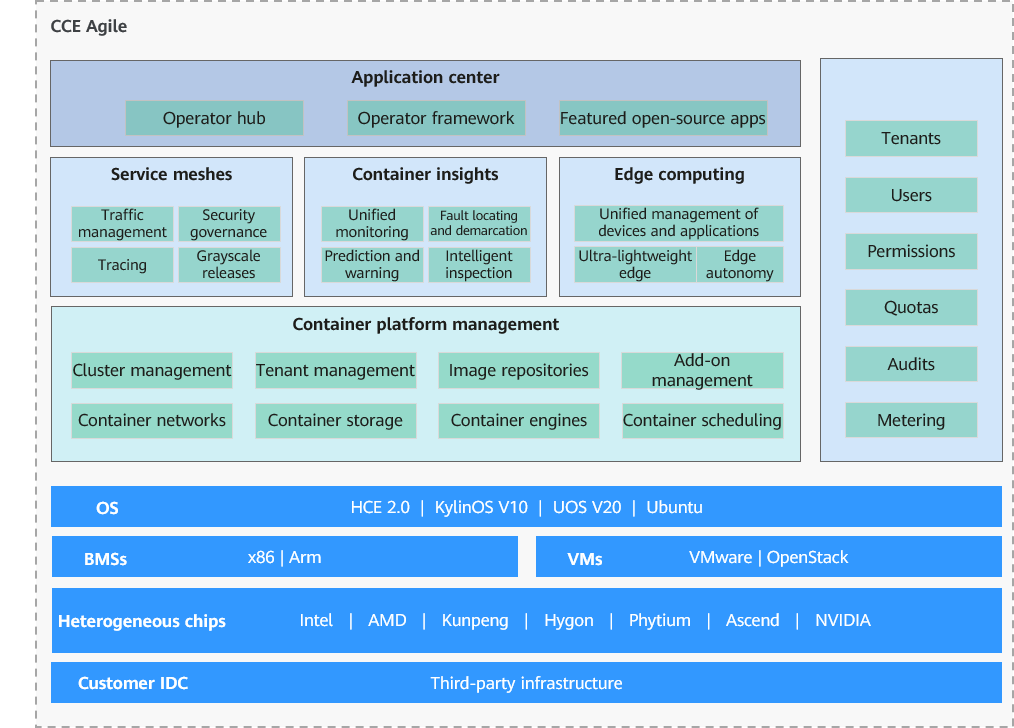

Figure 3 CCE Agile architecture

Scenarios

- AI computing

CCE Agile provides a container platform for AI computing. Volcano intelligent scheduling, GPU virtualization, and Huawei Ascend processors greatly reduce AI computing costs.

- DevOps

CCE Agile automates coding, image building, grayscale releases, and deployment from code sources, helping you containerize legacy applications.

- Traffic management

CCE Agile provides service traffic monitoring and management capabilities, such as load balancing, outlier detection, and fault injection, that enable non-intrusive microservice governance. Grayscale release approaches such as canary releases and blue-green deployments enable one-stop, automated application release.

- Edge computing

CCE Agile uses KubeEdge as its core to build a platform with cloud-edge-device synergy. This platform extends cloud applications to edge nodes and associates edge and device data to enable remote control, data processing, analysis, decision-making, and intelligent use of edge computing resources.
