Viewing Training Job Logs

Overview

Training logs record the runtime process and exception information of training jobs and provide useful details for fault location. The standard output and standard error information in your code are displayed in training logs. If you encounter an issue during the execution of a ModelArts training job, view logs first. In most scenarios, you can locate the issue based on the error information reported in logs.

Training logs include common training logs and Ascend logs.

- Common Logs: When resources other than Ascend are used for training, only common training logs are generated. Common logs include the logs for pip-requirement.txt, training process, and ModelArts.

- Ascend Logs: When Ascend resources are used for training, device logs, plog logs, proc logs (single-card training logs), MindSpore logs, and common logs are generated.

Separate MindSpore logs are generated only in the MindSpore+Ascend training scenario. Logs of other AI engines are contained in common logs.

Retention Period

Logs are classified into the following types based on the retention period:

- Real-time logs: These logs are generated while a training job is running and can be viewed on the ModelArts training job details page.

- Historical logs: After a training job is completed, you can view its historical logs on the ModelArts training job details page. ModelArts automatically stores the logs for 30 days.

- Permanent logs: These logs are dumped to your OBS bucket. When creating a training job, you can enable persistent log saving and set a job log path for dumping.

Figure 2 Enabling persistent log saving

Real-time logs and historical logs have no difference in content. In the Ascend training scenario, permanent logs contain Ascend logs, which are not displayed on ModelArts.

Common Logs

Common logs include the logs for pip-requirement.txt, training process, and ModelArts Standard.

| Type | Description |
|---|---|
| Training process log | Standard output of your training code. |
| Installation logs for pip-requirement.txt | If pip-requirement.txt is defined in training code, pip package installation logs are generated. |
| ModelArts logs | ModelArts logs are used by O&M personnel to locate service faults. |

The format of a common log file is as follows. task id is the node ID of a training job.

Unified log format: modelarts-job-[job id]-[task id].log

Example: log/modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-worker-0.log

- A single-node training job generates one log file, and task id defaults to worker-0.

- Distributed training generates multiple node log files, which are distinguished by task id, such as worker-0 and worker-1.
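The naming rule above can be sketched as a small parser. This is only an illustration: the helper name parse_log_name is hypothetical, and the pattern assumes that the job ID is a UUID (as in the example) and that the task ID always has the form worker-<n>.

```python
import re
from typing import NamedTuple, Optional

# Pattern for modelarts-job-[job id]-[task id].log.
# Assumptions: the job ID is a UUID and the task ID looks like worker-<n>.
LOG_NAME = re.compile(
    r"^modelarts-job-(?P<job_id>[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})"
    r"-(?P<task_id>worker-\d+)\.log$"
)


class LogName(NamedTuple):
    job_id: str
    task_id: str


def parse_log_name(path: str) -> Optional[LogName]:
    """Return the job ID and task ID encoded in a common log file name."""
    name = path.rsplit("/", 1)[-1]  # drop a directory prefix such as "log/"
    match = LOG_NAME.match(name)
    if match is None:
        return None  # not a common log file name
    return LogName(match["job_id"], match["task_id"])


parsed = parse_log_name(
    "log/modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-worker-0.log"
)
print(parsed)
```

For a distributed job, grouping the parsed results by task_id gives one entry per node.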


ModelArts logs can be filtered in the common log file modelarts-job-[job id]-[task id].log using the following keywords: [ModelArts Service Log] or Platform=ModelArts-Service.

- Type 1: [ModelArts Service Log] xxx

[ModelArts Service Log][init] download code_url: s3://dgg-test-user/snt9-test-cases/mindspore/lenet/

- Type 2: time="xxx" level="xxx" msg="xxx" file="xxx" Command=xxx Component=xxx Platform=xxx

time="2021-07-26T19:24:11+08:00" level=info msg="start the periodic upload task, upload period = 5 seconds " file="upload.go:46" Command=obs/upload Component=ma-training-toolkit Platform=ModelArts-Service
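After a common log file has been downloaded, the two keywords can be used to separate ModelArts logs from the output of your own code. The following is a minimal sketch, not a ModelArts tool: the function names are hypothetical, and the Type 2 parser assumes that every field is a key=value pair whose value is either bare or wrapped in double quotes, as in the sample line above.

```python
import shlex

# Keywords that mark ModelArts logs inside a common log file.
SERVICE_MARKERS = ("[ModelArts Service Log]", "Platform=ModelArts-Service")


def is_service_log(line: str) -> bool:
    """Return True if the line contains one of the two ModelArts keywords."""
    return any(marker in line for marker in SERVICE_MARKERS)


def parse_key_value_line(line: str) -> dict:
    """Parse a Type 2 line into a dict of its key=value fields.

    Assumption: values are bare or double-quoted. Call this only on lines
    already identified as ModelArts logs, because shlex.split raises
    ValueError on unbalanced quotes.
    """
    fields = {}
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        if sep:  # skip tokens that are not key=value pairs
            fields[key] = value
    return fields


sample_lines = [
    "[ModelArts Service Log][init] download code_url: "
    "s3://dgg-test-user/snt9-test-cases/mindspore/lenet/",
    'time="2021-07-26T19:24:11+08:00" level=info '
    'msg="start the periodic upload task, upload period = 5 seconds " '
    'file="upload.go:46" Command=obs/upload '
    "Component=ma-training-toolkit Platform=ModelArts-Service",
    "epoch 1 finished",  # hypothetical line printed by user training code
]

user_lines = [line for line in sample_lines if not is_service_log(line)]
type2_fields = parse_key_value_line(sample_lines[1])
print(user_lines)
print(type2_fields["level"], type2_fields["Component"])
```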

Ascend Logs

Ascend logs are generated when Ascend resources are used for training. In this scenario, device logs, plog logs, proc logs (single-card training logs), MindSpore logs, and common logs are generated.

Common logs in the Ascend training scenario include the logs for pip-requirement.txt, ma-pre-start, davincirun, training process, and ModelArts.

obs://dgg-test-user/snt9-test-cases/log-out/ # Job log path
├──modelarts-job-9ccf15f2-6610-42f9-ab99-059ba049a41e
    ├── ascend
        ├── process_log
            ├── rank_0
                ├── plog # Plog logs
                    ...
                ├── device-0 # Device logs
                    ...
    ├── mindspore # MindSpore logs
├──modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-worker-0.log # Common logs
├──modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-proc-rank-0-device-0.txt # proc log for single-card training logs

| Type | Description | Name |
|---|---|---|
| Device logs | User process AICPU and HCCP logs generated on the device and sent back to the host (training container). If any of the following situations occur, device logs cannot be obtained: After the training process ends, the log is generated in the training container. | ~/ascend/log/device-{device-id}/device-{pid}_{timestamp}.log In this path, pid indicates the user process ID on the host. Example: device-166_20220718191853764.log |
| Plog logs | User process logs, for example, ACL/GE. Plog logs are generated in the training container. | ~/ascend/log/plog/plog-{pid}_{timestamp}.log In this path, pid indicates the user process ID on the host. Example: plog-166_20220718191843620.log |
| proc log | proc log is a redirection file of single-node training logs, helping you quickly obtain logs of a compute node. For training that uses a preset MindSpore image and ranktable, proc log is generated in the training container and automatically saved in OBS. Training jobs using custom MindSpore images or other frameworks do not involve proc log. | [modelarts-job-uuid]-proc-rank-[rank id]-device-[device logic id].txt Example: modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-proc-rank-0-device-0.txt |
| MindSpore logs | Separate MindSpore logs are generated in the MindSpore+Ascend training scenario. MindSpore logs are generated in the training container. | For details about MindSpore logs, visit the MindSpore official website. |
| Common training logs | Common training logs are generated in the /home/ma-user/modelarts/log directory of the training container and automatically uploaded to OBS. In the Ascend training scenario, they include the logs for pip-requirement.txt, ma-pre-start, davincirun, training process, and ModelArts. | Contained in the modelarts-job-[job id]-[task id].log file. task id indicates the instance ID. If a single node is used, the value is worker-0. If multiple nodes are used, the value is worker-0, worker-1, ..., or worker-{n-1}. n indicates the number of instances. Example: modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-worker-0.log |
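When browsing the OBS log directory, it can help to read the process ID and creation time out of device and plog file names. The sketch below is an illustration only: parse_ascend_log_name is a hypothetical helper, and the timestamp layout (14 digits of YYYYMMDDHHMMSS followed by sub-second digits) is an assumption inferred from the two example file names, not a documented format.

```python
import re
from datetime import datetime

# Matches device-{pid}_{timestamp}.log and plog-{pid}_{timestamp}.log.
ASCEND_LOG_NAME = re.compile(
    r"^(?P<kind>device|plog)-(?P<pid>\d+)_(?P<timestamp>\d+)\.log$"
)


def parse_ascend_log_name(name: str) -> dict:
    """Split a device or plog file name into kind, pid, and creation time."""
    match = ASCEND_LOG_NAME.match(name)
    if match is None:
        raise ValueError(f"not a device or plog log file name: {name!r}")
    stamp = match["timestamp"]
    # Assumption: the first 14 digits are YYYYMMDDHHMMSS and any remaining
    # digits are a sub-second part, which is returned unparsed.
    created = datetime.strptime(stamp[:14], "%Y%m%d%H%M%S")
    return {
        "kind": match["kind"],
        "pid": int(match["pid"]),  # user process ID on the host
        "created": created,
        "subsecond": stamp[14:],
    }


for example in ("device-166_20220718191853764.log",
                "plog-166_20220718191843620.log"):
    print(parse_ascend_log_name(example))
```

Because both example files carry the same pid, matching on pid is one way to pair the plog and device logs of a single user process.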

In the Ascend training scenario, after the training process exits, ModelArts uploads the log files in the training container to the OBS directory specified by Job Log Path. On the job details page, you can obtain the job log path and click the OBS address to go to the OBS console to check logs.

You can run the ma-pre-start script to modify the default environment variable configurations.

ASCEND_GLOBAL_LOG_LEVEL=3 # Log level, 0 for debug, 1 for info, 2 for warning, and 3 for error.
ASCEND_SLOG_PRINT_TO_STDOUT=1 # Whether to display plog logs. The value 1 indicates that plog logs are displayed by default.
ASCEND_GLOBAL_EVENT_ENABLE=1 # Event log level, 0 for disabling event logging and 1 for enabling event logging.

Place the ma-pre-start.sh or ma-pre-start.py script in the directory at the same level as the training boot file.

Before the training boot file is executed, the system executes the ma-pre-start script in /home/work/user-job-dir/. This method can be used to update the Ascend RUN package installed in the container image or set some additional global environment variables required for training.
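To confirm which values are actually in effect, the training code can read the three variables at startup and print them to the training log. The following is a minimal sketch under that assumption. describe_ascend_logging is a hypothetical helper, not part of ModelArts or the Ascend toolkit, and it only reads the variables. It does not change them.

```python
import os

# Meaning of ASCEND_GLOBAL_LOG_LEVEL values, as listed above.
LOG_LEVELS = {"0": "debug", "1": "info", "2": "warning", "3": "error"}


def describe_ascend_logging(env=None) -> dict:
    """Summarize the Ascend logging variables found in the environment."""
    env = os.environ if env is None else env
    level = env.get("ASCEND_GLOBAL_LOG_LEVEL")
    return {
        "log_level": LOG_LEVELS.get(level, "unset or unknown"),
        # 1 means plog logs are displayed in standard output.
        "plog_to_stdout": env.get("ASCEND_SLOG_PRINT_TO_STDOUT") == "1",
        # 1 enables event logging, 0 disables it.
        "event_logging": env.get("ASCEND_GLOBAL_EVENT_ENABLE") == "1",
    }


# Printed to standard output, so it appears in the training process log.
print(describe_ascend_logging())
```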

Viewing Training Job Logs

On the training job details page, you can preview logs, download logs, search for logs by keyword, and filter system logs in the log pane.

- Previewing logs

You can preview training logs on the system log pane. If multiple compute nodes are used, you can choose the target node from the drop-down list on the right.

Figure 4 Viewing logs of different compute nodes

If a log file is oversized, the system displays only the latest logs in the log pane. To view all logs, click the link in the upper part of the log pane. You will be redirected to a new page that shows the full logs.

Figure 5 Viewing all logs

- If the total size of all logs exceeds 500 MB, the log page may be frozen. In this case, download the logs to view them locally.

- A log preview link can be accessed by anyone within one hour after it is generated. You can share the link with others.

- Ensure that no privacy information is contained in the logs. Otherwise, information leakage may occur.

- Downloading logs

Training logs are retained for only 30 days. To permanently store logs, click the download icon in the upper right corner of the log pane. You can download the logs of multiple compute nodes in a batch. You can also enable Persistent Log Saving and set a log path when you create a training job. In this way, the logs will be automatically stored in the specified OBS path.

If a training job is created on Ascend compute nodes, certain system logs cannot be downloaded in the training log pane. To obtain these logs, go to the Job Log Path you set when you created the training job.

Figure 6 Downloading logs

- Searching for logs by keyword

In the upper right corner of the log pane, enter a keyword in the search box to search for logs, as shown in Figure 7.

The system highlights the keyword and lets you jump between search results. Only the logs loaded in the log pane can be searched. If the logs are not fully displayed (see the message displayed on the page), first obtain all the logs by downloading them or by clicking the full log link, and then search them. On the page opened by the full log link, press Ctrl+F to search.
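For logs that have been downloaded, the same keyword search can be done locally. The sketch below is one possible approach, with a hypothetical helper name and file path. It reads the file line by line, so large log files do not have to fit in memory.

```python
from typing import Iterator, Tuple


def search_log(path: str, keyword: str,
               ignore_case: bool = True) -> Iterator[Tuple[int, str]]:
    """Yield (line number, line) for every line that contains the keyword."""
    needle = keyword.lower() if ignore_case else keyword
    # errors="replace" keeps the search going if the log has invalid bytes.
    with open(path, encoding="utf-8", errors="replace") as handle:
        for number, line in enumerate(handle, start=1):
            haystack = line.lower() if ignore_case else line
            if needle in haystack:
                yield number, line.rstrip("\n")


if __name__ == "__main__":
    # Hypothetical local path of a downloaded common log file.
    log_path = "modelarts-job-95f661bd-1527-41b8-971c-eca55e513254-worker-0.log"
    try:
        for number, text in search_log(log_path, "error"):
            print(f"{number}: {text}")
    except FileNotFoundError:
        print(f"download the log file first: {log_path}")
```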

- Filtering system logs

Figure 8 System logs

If System logs is selected, system logs and user logs are displayed. If System logs is deselected, only user logs are displayed.

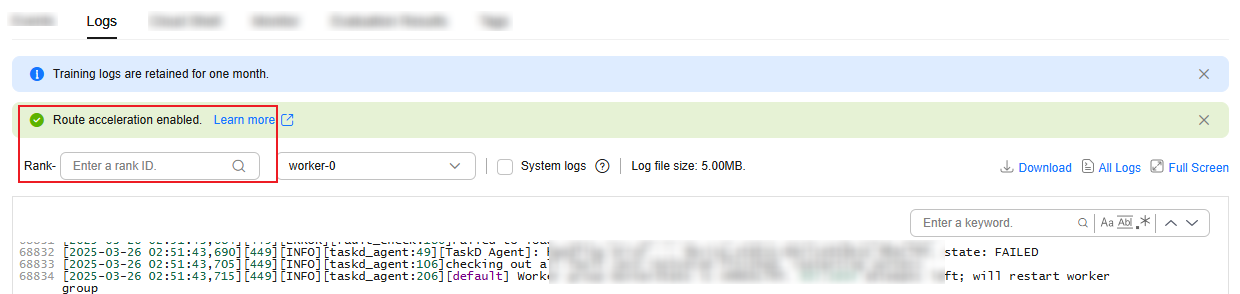

- Viewing dynamic route acceleration logs

If your training job has three or more instances, the ROUTE_PLAN environment variable is set to true, and you use Ascend resources, you can view the dynamic route acceleration logs by rank ID and check if dynamic route acceleration is enabled.

Figure 9 Viewing dynamic route acceleration logs
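The three conditions above can be written as a simple check. This sketch only restates the documented preconditions for viewing the logs. The function name and parameters are hypothetical, and the check does not tell you whether dynamic route acceleration actually took effect, which still has to be confirmed in the logs.

```python
def route_acceleration_logs_expected(instance_count: int,
                                     uses_ascend: bool,
                                     env: dict) -> bool:
    """Return True if all three documented preconditions are met."""
    return (
        instance_count >= 3                  # three or more instances
        and uses_ascend                      # Ascend resources are used
        and env.get("ROUTE_PLAN") == "true"  # ROUTE_PLAN is set to true
    )


print(route_acceleration_logs_expected(4, True, {"ROUTE_PLAN": "true"}))
print(route_acceleration_logs_expected(2, True, {"ROUTE_PLAN": "true"}))
```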
