Using Cloud Eye to Monitor NPU Resources of Lite Server

Scenario

Lite Server relies on Cloud Eye for monitoring. This section describes how to connect Lite Server to Cloud Eye so that you can monitor resources and events on Lite Server.

Constraints

- Monitoring requires the Cloud Eye Agent plug-in, which is subject to strict resource usage limits. If the Agent's resource usage exceeds the threshold, its circuit breaker is triggered. For details about the resource usage, see Cloud Eye Server Monitoring.

- If you run an NPU stress test using Ascend-dmi, some NPU metric data may be lost.

- The monitoring agent has been fully tested on the public images provided by Lite Server. If you use your own image, test it before deploying it in the production environment to prevent incorrect monitoring data.

Prerequisites

The Cloud Eye Agent has been installed on the Lite Server. For details about how to check whether the Cloud Eye Agent is installed and how to install it, see Installing Cloud Eye Agent Monitoring Plug-ins.

Overview

For details, see Bare Metal Server (BMS) Server Monitoring. In addition to the images listed in the document, Ubuntu 20.04 is also supported.

Metrics are sampled every minute. Do not change the sampling period, because doing so may cause monitoring to malfunction. The current monitoring metrics cover the CPU, memory, disks, and network. Accelerator metrics can be collected only after the accelerator driver is installed on the host.

NPU metric collection depends on the Linux system tool lspci. Some events also depend on the blkid and grub2-editenv system tools. Ensure that these tools are installed and work properly.

| Tool | Check Method | Installation Method |
|---|---|---|
| lspci | Run `lspci` in a shell to list the PCI devices in the system. Example:<br>`$ sudo lspci`<br>`00:00.0 PCI bridge: Huawei Technologies Co., Ltd. HiSilicon PCIe Root Port with Gen4 (rev 21)`<br>`00:08.0 PCI bridge: Huawei Technologies Co., Ltd. HiSilicon PCIe Root Port with Gen4 (rev 21)`<br>`00:10.0 PCI bridge: Huawei Technologies Co., Ltd. HiSilicon PCIe Root Port with Gen4 (rev 21)` | lspci displays PCI device information. It is usually part of the pciutils package, which most Linux distributions install by default. If lspci is not installed, use the package manager to install pciutils.<br>Debian/Ubuntu:<br>`sudo apt-get update`<br>`sudo apt-get install pciutils`<br>Red Hat/CentOS/EulerOS:<br>`sudo yum install pciutils` |
| blkid | Run `blkid` in a shell to list the block devices in the system. Example:<br>`$ sudo blkid`<br>`/dev/sda1: UUID="123e4567-e89b-12d3-a456-426614174000" TYPE="vfat" PARTUUID="56789abc-def0-1234-5678-9abcd3f2c0a1"`<br>`/dev/sda2: UUID="a1b2c3d4-e5f6-789a-bcde-f0123456789a" TYPE="swap" PARTUUID="edcba98-7654-3210-fedc-ba9876543210"`<br>`/dev/sda3: UUID="01234567-89ab-cdef-0123-456789abcdef" TYPE="ext4" PARTUUID="fedcba09-8765-4321-fedc-ba0987654321"` | blkid displays block device attributes in Linux. It is usually part of the util-linux package, which most Linux distributions install by default. If blkid is not installed, use the package manager to install util-linux.<br>Debian/Ubuntu:<br>`sudo apt-get update`<br>`sudo apt-get install util-linux`<br>Red Hat/CentOS/EulerOS:<br>`sudo yum install util-linux` |
| grub2-editenv (required only for Red Hat, CentOS, and EulerOS) | Run `grub2-editenv list` in a shell to list the GRUB environment variables. Example:<br>`$ sudo grub2-editenv list`<br>`timeout=5`<br>`default=0`<br>`saved_entry=Red Hat Enterprise Linux Server, with Linux 4.18.0-305.el8.x86_64` | grub2-editenv is part of GRUB2 and manages GRUB environment variables. GRUB2 is installed by default in most Linux distributions. If grub2-editenv is not installed, use the package manager to install it.<br>Debian/Ubuntu:<br>`sudo apt-get update`<br>`sudo apt-get install grub2`<br>Red Hat/CentOS/EulerOS:<br>`sudo yum install grub2` |
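
The presence checks described in the table can be scripted. The following is a minimal sketch, assuming a POSIX shell. The helper name `check_tool` is hypothetical and not part of Cloud Eye. The sketch only tests whether each tool can be found on `PATH`; it does not validate the tool's output.

```shell
#!/bin/sh
# check_tool is a hypothetical helper: it succeeds when the named
# command can be resolved on PATH by the current shell.
check_tool() {
    command -v "$1" >/dev/null 2>&1
}

# lspci and blkid are needed on all systems; grub2-editenv is needed
# only on Red Hat, CentOS, and EulerOS.
for tool in lspci blkid grub2-editenv; do
    if check_tool "$tool"; then
        echo "$tool: found"
    else
        echo "$tool: missing"
    fi
done
```

On some systems these tools live in a directory such as /sbin or /usr/sbin that is not on a regular user's `PATH`, so a "missing" result as a non-root user may be a false alarm; running the sketch as root is one way to rule this out.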

Installing Cloud Eye Agent Monitoring Plug-ins

OS-level, proactive, and fine-grained server monitoring is provided after the Cloud Eye Agent is installed on the Lite Server (ECS or BMS).

Cloud Eye Agent is installed in the preset OS on the Lite Server by default. You can check the Agent status and version on the Cloud Eye console.

If Cloud Eye Agent is not installed or the Cloud Eye Agent version does not meet the requirements, use either of the following methods:

Method 1: Install or upgrade the Cloud Eye Agent plug-in on a Lite Server.

Method 2: Manually install Cloud Eye Agent.

- Create an agency for Cloud Eye. For details, see Creating a User and Granting Permissions. If you have enabled Cloud Eye host monitoring authorization when creating a Lite Server, skip this step.

- One-click agent installation from the Cloud Eye console is currently not supported. Log in to the server and run the following command to install and configure the agent. The command downloads the installation script from the cn-north-4 region. For details about how to install the agent in other regions, see Installing the Agent on a Linux Server.

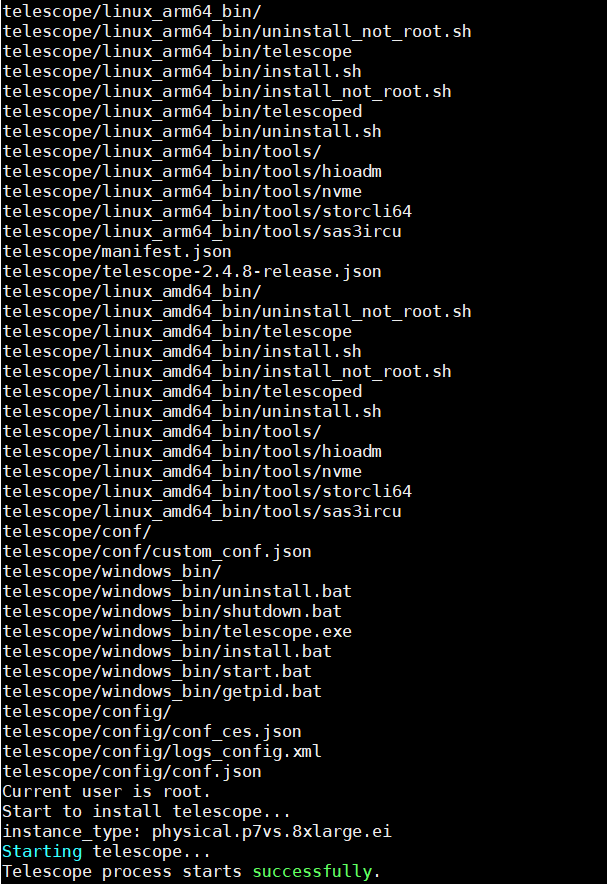

cd /usr/local && curl -k -O https://obs.cn-north-4.myhuaweicloud.com/uniagent-cn-north-4/script/agent_install.sh && bash agent_install.sh

If the following information is displayed, the installation is successful.

Figure 1 Installation succeeded

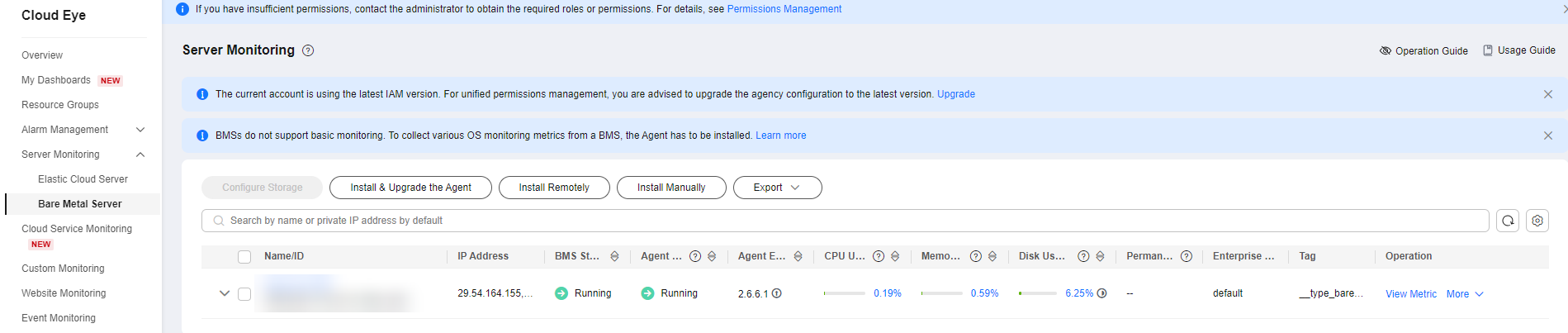

- View the monitoring items on the Cloud Eye console. Accelerator PU monitoring items are available only after the accelerator PU driver is installed on the host.

Figure 2 Monitoring page

The monitoring plug-in is now installed. You can view the collected metrics on the UI or configure alarms based on the metric values.

Metric Namespace

AGT.ECS and SERVICE.BMS

Lite Server Monitoring Metrics

The table below lists only NPU metrics. For details about other metrics, see What Metrics Are Supported by the Agent?

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Overall | npu_device_health | NPU Health Status | Health status of the NPU | - | N/A | 0: normal; 1: minor alarm; 2: major alarm; 3: critical alarm | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | Overall | npu_driver_health | NPU Driver Health Status | Health status of the NPU driver | - | N/A | 0: normal; 3: critical alarm | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | Overall | npu_power | NPU Power | NPU power | W | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | Overall | npu_temperature | NPU Temperature | NPU temperature | °C | N/A | Natural number | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | Overall | npu_voltage | NPU Voltage | NPU voltage | V | N/A | Natural number | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 6 | Overall | npu_util_rate_general | Overall NPU usage | Overall NPU usage, which covers AI Core and Vector Core statistics | % | N/A | 0%–100% | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
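
When health values are handled in scripts, for example in alarm notifications, a small lookup keeps the numeric codes readable. The following is a minimal sketch that maps an `npu_device_health` value to the meaning listed in the Value Range column above. The helper name `health_label` is hypothetical, and the input value is a made-up sample rather than real monitoring data.

```shell
#!/bin/sh
# health_label is a hypothetical helper that maps an npu_device_health
# value to the meaning given in the Value Range column.
health_label() {
    case "$1" in
        0) echo "normal" ;;
        1) echo "minor alarm" ;;
        2) echo "major alarm" ;;
        3) echo "critical alarm" ;;
        *) echo "unknown" ;;   # value not listed in the table
    esac
}

# Made-up sample value, for illustration only.
health_label 2
```

Note that `npu_driver_health` uses only the values 0 and 3 from the same scale.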

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | HBM | npu_util_rate_hbm | NPU HBM Usage | HBM usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | HBM | npu_hbm_freq | HBM Frequency | NPU HBM frequency | MHz | N/A | >0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | HBM | npu_freq_hbm | HBM Frequency | NPU HBM frequency | MHz | N/A | >0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | HBM | npu_hbm_usage | HBM Usage | NPU HBM usage | MB | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | HBM | npu_hbm_temperature | HBM Temperature | NPU HBM temperature | °C | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 6 | HBM | npu_hbm_bandwidth_util | HBM Bandwidth Usage | NPU HBM bandwidth usage (old version) | % | N/A | 0%–100% | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 7 | HBM | npu_util_rate_hbm_bw | HBM Bandwidth Usage | NPU HBM bandwidth usage (new version) | % | N/A | 0%–100% | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 8 | HBM | npu_hbm_mem_capacity | NPU HBM Memory Capacity | HBM memory capacity of the NPU | MB | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 9 | HBM | npu_hbm_ecc_enable | HBM ECC Status | NPU HBM ECC status | - | N/A | 0: ECC detection is disabled; 1: ECC detection is enabled | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 10 | HBM | npu_hbm_single_bit_error_cnt | Single-bit Errors on HBM | Current number of single-bit errors on the NPU HBM | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 11 | HBM | npu_hbm_double_bit_error_cnt | Double-bit Errors on HBM | Current number of double-bit errors on the NPU HBM | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 12 | HBM | npu_hbm_total_single_bit_error_cnt | Single-bit Errors in HBM Lifecycle | Number of single-bit errors in the NPU HBM lifecycle | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 13 | HBM | npu_hbm_total_double_bit_error_cnt | Double-bit Errors in HBM Lifecycle | Number of double-bit errors in the NPU HBM lifecycle | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 14 | HBM | npu_hbm_single_bit_isolated_pages_cnt | Isolated NPU Memory Pages with HBM Single-bit Errors | Number of isolated NPU memory pages with HBM single-bit errors | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 15 | HBM | npu_hbm_double_bit_isolated_pages_cnt | Isolated NPU Memory Pages with HBM Multi-bit Errors | Number of isolated NPU memory pages with HBM double-bit errors | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | DDR | npu_usage_mem | Used NPU Memory | Used NPU memory | MB | N/A | ≥0 | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | DDR | npu_util_rate_mem | NPU Memory Usage | NPU memory usage | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | DDR | npu_freq_mem | NPU Memory Frequency | NPU memory frequency | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | DDR | npu_util_rate_mem_bandwidth | NPU Memory Bandwidth Usage | NPU memory bandwidth usage | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | DDR | npu_sbe | NPU Single-bit Errors | Number of single-bit errors on the NPU | count | N/A | ≥0 | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 6 | DDR | npu_dbe | NPU Double-bit Errors | Number of double-bit errors on the NPU | count | N/A | ≥0 | instance_id, npu | Snt3P 300IDuo | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI Core | npu_freq_ai_core | AI Core Frequency of the NPU | AI core frequency of the NPU | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | AI Core | npu_freq_ai_core_rated | Rated Frequency of the NPU AI Core | Rated frequency of the NPU AI core | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | AI Core | npu_util_rate_ai_core | AI Core Usage of the NPU | AI core usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI Vector | npu_util_rate_vector_core | NPU Vector Core Usage | Vector core usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
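
Several metrics require telescope 2.7.5.9 or later. The following is a minimal sketch of an "or later" comparison for dotted version strings, assuming `awk` is available. The helper name `version_ge` is hypothetical, and how you obtain the installed agent version is outside this sketch. It covers only the "or later" rule; for metrics that also list specific earlier versions (2.7.4.3, 2.7.5.3, 2.7.5.4), an exact-match check would be needed in addition.

```shell
#!/bin/sh
# version_ge A B (hypothetical helper): succeeds when dotted numeric
# version A is greater than or equal to version B. Fields are compared
# numerically from left to right; a missing field counts as 0.
version_ge() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        na = split(a, x, ".")
        nb = split(b, y, ".")
        n = (na > nb) ? na : nb
        for (i = 1; i <= n; i++) {
            if (x[i] + 0 > y[i] + 0) exit 0
            if (x[i] + 0 < y[i] + 0) exit 1
        }
        exit 0
    }'
}

# Made-up sample: an agent at 2.7.5.4 checked against the 2.7.5.9 minimum.
if version_ge "2.7.5.4" "2.7.5.9"; then
    echo "meets 2.7.5.9 or later"
else
    echo "older than 2.7.5.9"
fi
```

Comparing field by field avoids the pitfall of plain string comparison, which would rank 2.7.10.1 below 2.7.5.9.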

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI CPU | npu_aicpu_num | Number of AI CPUs of the NPU | Number of AI CPUs of the NPU | count | N/A | ≥0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | AI CPU | npu_util_rate_ai_cpu | NPU AI CPU Usage | AI CPU usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | AI CPU | npu_aicpu_avg_util_rate | Average AI CPU Usage of the NPU | Average AI CPU usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | AI CPU | npu_aicpu_max_freq | Maximum AI CPU Frequency of the NPU | Maximum AI CPU frequency of the NPU | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | AI CPU | npu_aicpu_cur_freq | AI CPU Frequency of the NPU | AI CPU frequency of the NPU | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | CTRL CPU | npu_util_rate_ctrl_cpu | Control CPU Usage of the NPU | Control CPU usage of the NPU | % | N/A | 0%–100% | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | CTRL CPU | npu_freq_ctrl_cpu | Control CPU Frequency of the NPU | Control CPU frequency of the NPU | MHz | N/A | >0 | instance_id, npu | Snt3P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | PCIe link | npu_link_cap_speed | Max. NPU Link Speed | Maximum link speed of the NPU | GT/s | N/A | ≥0 | instance_id, npu | 310P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | PCIe link | npu_link_cap_width | Max. NPU Link Width | Maximum link width of the NPU | count | N/A | ≥0 | instance_id, npu | 310P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | PCIe link | npu_link_status_speed | NPU Link Speed | Link speed of the NPU | GT/s | N/A | ≥0 | instance_id, npu | 310P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | PCIe link | npu_link_status_width | NPU Link Width | Link width of the NPU | count | N/A | ≥0 | instance_id, npu | 310P 300IDuo Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
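
One way to use the PCIe link metrics is to compare the current link values with the maximum values. The following is a minimal sketch, assuming `awk` is available and assuming that a current speed or width below the maximum is worth flagging; the source does not define such a rule. The helper name `link_degraded` is hypothetical and the input values are made-up samples.

```shell
#!/bin/sh
# link_degraded CAP_SPEED CAP_WIDTH CUR_SPEED CUR_WIDTH (hypothetical helper)
# Arguments correspond to npu_link_cap_speed, npu_link_cap_width,
# npu_link_status_speed, and npu_link_status_width.
# Succeeds (exit status 0) when the current speed or width is below
# the maximum. awk is used so that fractional speeds such as 2.5 GT/s
# compare correctly.
link_degraded() {
    awk -v cs="$1" -v cw="$2" -v s="$3" -v w="$4" \
        'BEGIN { exit !((s + 0 < cs + 0) || (w + 0 < cw + 0)) }'
}

# Made-up sample: maximum 16 GT/s x16, current 8 GT/s x16.
if link_degraded 16 16 8 16; then
    echo "link below maximum speed or width"
else
    echo "link at maximum speed and width"
fi
```

Whether a lower value indicates a fault depends on the platform, so treat the result as a prompt for further investigation rather than a diagnosis.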

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | RoCE network | npu_device_network_health | NPU Network Health Status | Connectivity of the IP address of the RoCE NIC on the NPU | - | N/A | 0: The network health status is normal. Other values: The network status is abnormal. | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | RoCE network | npu_network_port_link_status | NPU Network Port Link Status | Link status of the NPU network port | - | N/A | 0: up; 1: down | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | RoCE network | npu_roce_tx_rate | NPU NIC Uplink Rate | Uplink rate of the NPU NIC | MB/s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | RoCE network | npu_roce_rx_rate | NPU NIC Downlink Rate | Downlink rate of the NPU NIC | MB/s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | RoCE network | npu_mac_tx_mac_pause_num | PAUSE Frames Sent from MAC | Total number of PAUSE frames sent from the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 6 | RoCE network | npu_mac_rx_mac_pause_num | PAUSE Frames Received by MAC | Total number of PAUSE frames received by the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 7 | RoCE network | npu_mac_tx_pfc_pkt_num | PFC Frames Sent from MAC | Total number of PFC frames sent from the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 8 | RoCE network | npu_mac_rx_pfc_pkt_num | PFC Frames Received by MAC | Total number of PFC frames received by the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 9 | RoCE network | npu_mac_tx_bad_pkt_num | Bad Packets Sent from MAC | Total number of bad packets sent from the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 10 | RoCE network | npu_mac_rx_bad_pkt_num | Bad Packets Received by MAC | Total number of bad packets received by the MAC address corresponding to the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 11 | RoCE network | npu_roce_tx_err_pkt_num | Bad Packets Sent by RoCE | Total number of bad packets sent by the RoCE NIC on the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 12 | RoCE network | npu_roce_rx_err_pkt_num | Bad Packets Received by RoCE | Total number of bad packets received by the RoCE NIC on the NPU | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 13 | RoCE network | npu_roce_tx_all_pkt_num | Packets Transmitted by NPU RoCE | Number of packets transmitted by the NPU's RoCE | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 14 | RoCE network | npu_roce_rx_all_pkt_num | Packets Received by NPU RoCE | Number of packets received by the NPU's RoCE | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 15 | RoCE network | npu_roce_new_pkt_rty_num | Packets Retransmitted by NPU RoCE | Number of packets retransmitted by the NPU's RoCE | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 16 | RoCE network | npu_roce_out_of_order_num | Abnormal PSN Packets Received by NPU RoCE | Number of packets received by the NPU's RoCE whose PSN is greater than the expected PSN or duplicates an earlier PSN. Out-of-order or lost packets trigger retransmission. | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 17 | RoCE network | npu_roce_rx_cnp_pkt_num | CNP Packets Received by NPU RoCE | Number of CNP packets received by the NPU's RoCE | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 18 | RoCE network | npu_roce_tx_cnp_pkt_num | CNP Packets Transmitted by NPU RoCE | Number of CNP packets transmitted by the NPU's RoCE | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | RoCE optical module | npu_opt_temperature | NPU Optical Module Temperature | NPU optical module temperature | °C | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 2 | RoCE optical module | npu_opt_temperature_high_thres | Upper Limit of the NPU Optical Module Temperature | Upper limit of the NPU optical module temperature | °C | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 3 | RoCE optical module | npu_opt_temperature_low_thres | Lower Limit of the NPU Optical Module Temperature | Lower limit of the NPU optical module temperature | °C | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 4 | RoCE optical module | npu_opt_voltage | NPU Optical Module Voltage | NPU optical module voltage | mV | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 5 | RoCE optical module | npu_opt_voltage_high_thres | Upper Limit of the NPU Optical Module Voltage | Upper limit of the NPU optical module voltage | mV | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 6 | RoCE optical module | npu_opt_voltage_low_thres | Lower Limit of the NPU Optical Module Voltage | Lower limit of the NPU optical module voltage | mV | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 7 | RoCE optical module | npu_opt_tx_power_lane0 | TX Power of the NPU Optical Module in Channel 0 | Transmit power of the NPU optical module in channel 0 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 8 | RoCE optical module | npu_opt_tx_power_lane1 | TX Power of the NPU Optical Module in Channel 1 | Transmit power of the NPU optical module in channel 1 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 9 | RoCE optical module | npu_opt_tx_power_lane2 | TX Power of the NPU Optical Module in Channel 2 | Transmit power of the NPU optical module in channel 2 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 10 | RoCE optical module | npu_opt_tx_power_lane3 | TX Power of the NPU Optical Module in Channel 3 | Transmit power of the NPU optical module in channel 3 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 11 | RoCE optical module | npu_opt_rx_power_lane0 | RX Power of the NPU Optical Module in Channel 0 | Receive power of the NPU optical module in channel 0 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 12 | RoCE optical module | npu_opt_rx_power_lane1 | RX Power of the NPU Optical Module in Channel 1 | Receive power of the NPU optical module in channel 1 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 13 | RoCE optical module | npu_opt_rx_power_lane2 | RX Power of the NPU Optical Module in Channel 2 | Receive power of the NPU optical module in channel 2 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 14 | RoCE optical module | npu_opt_rx_power_lane3 | RX Power of the NPU Optical Module in Channel 3 | Receive power of the NPU optical module in channel 3 | mW | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 15 | RoCE optical module | npu_opt_tx_bias_lane0 | TX Bias Current of the NPU Optical Module in Channel 0 | Transmit bias current of the NPU optical module in channel 0 | mA | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 16 | RoCE optical module | npu_opt_tx_bias_lane1 | TX Bias Current of the NPU Optical Module in Channel 1 | Transmit bias current of the NPU optical module in channel 1 | mA | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 17 | RoCE optical module | npu_opt_tx_bias_lane2 | TX Bias Current of the NPU Optical Module in Channel 2 | Transmit bias current of the NPU optical module in channel 2 | mA | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 18 | RoCE optical module | npu_opt_tx_bias_lane3 | TX Bias Current of the NPU Optical Module in Channel 3 | Transmit bias current of the NPU optical module in channel 3 | mA | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 19 | RoCE optical module | npu_opt_tx_los | TX Los of the NPU Optical Module | TX loss-of-signal (LOS) flag of the NPU optical module | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 20 | RoCE optical module | npu_opt_rx_los | RX Los of the NPU Optical Module | RX loss-of-signal (LOS) flag of the NPU optical module | count | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.4.3, 2.7.5.3, 2.7.5.4, 2.7.5.9 or later |
| 21 | RoCE optical module | npu_opt_media_snr_lane0 | NPU Optical Module Channel 0 Optical SNR | Signal-to-noise ratio (SNR) on the media (optical) side of channel 0 in the NPU optical module | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 22 | RoCE optical module | npu_opt_media_snr_lane1 | NPU Optical Module Channel 1 Optical SNR | Signal-to-noise ratio (SNR) on the media (optical) side of channel 1 in the NPU optical module | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 23 | RoCE optical module | npu_opt_media_snr_lane2 | NPU Optical Module Channel 2 Optical SNR | Signal-to-noise ratio (SNR) on the media (optical) side of channel 2 in the NPU optical module | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 24 | RoCE optical module | npu_opt_media_snr_lane3 | NPU Optical Module Channel 3 Optical SNR | Signal-to-noise ratio (SNR) on the media (optical) side of channel 3 in the NPU optical module | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
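
The optical module metrics include a current value together with upper and lower limits for temperature and voltage. The following is a minimal sketch of a range check, assuming `awk` is available and assuming that a healthy reading lies between the lower and upper limits inclusive; the source lists the metrics but does not state this rule. The helper name `within_limits` is hypothetical and the sample values are made up.

```shell
#!/bin/sh
# within_limits VALUE LOW HIGH (hypothetical helper)
# For temperature, the arguments correspond to npu_opt_temperature,
# npu_opt_temperature_low_thres, and npu_opt_temperature_high_thres.
# Succeeds (exit status 0) when LOW <= VALUE <= HIGH.
within_limits() {
    awk -v v="$1" -v lo="$2" -v hi="$3" \
        'BEGIN { exit !((v + 0 >= lo + 0) && (v + 0 <= hi + 0)) }'
}

# Made-up sample: 45 °C with limits of -10 °C and 80 °C.
if within_limits 45 -10 80; then
    echo "temperature within limits"
else
    echo "temperature out of limits"
fi
```

The same helper can be applied to `npu_opt_voltage` with its `npu_opt_voltage_low_thres` and `npu_opt_voltage_high_thres` values, all of which are in mV.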

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | HCCS Lane mode | npu_macro1_0lane_max_consec_sec | Maximum Duration of NPU Macro1 in Lane 0 Mode | Maximum time NPU Macro1 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 2 | HCCS Lane mode | npu_macro2_0lane_max_consec_sec | Maximum Duration of NPU Macro2 in Lane 0 Mode | Maximum time NPU Macro2 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 3 | HCCS Lane mode | npu_macro3_0lane_max_consec_sec | Maximum Duration of NPU Macro3 in Lane 0 Mode | Maximum time NPU Macro3 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 4 | HCCS Lane mode | npu_macro4_0lane_max_consec_sec | Maximum Duration of NPU Macro4 in Lane 0 Mode | Maximum time NPU Macro4 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 5 | HCCS Lane mode | npu_macro5_0lane_max_consec_sec | Maximum Duration of NPU Macro5 in Lane 0 Mode | Maximum time NPU Macro5 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 6 | HCCS Lane mode | npu_macro6_0lane_max_consec_sec | Maximum Duration of NPU Macro6 in Lane 0 Mode | Maximum time NPU Macro6 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 7 | HCCS Lane mode | npu_macro7_0lane_max_consec_sec | Maximum Duration of NPU Macro7 in Lane 0 Mode | Maximum time NPU Macro7 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 8 | HCCS Lane mode | npu_macro1_0lane_total_sec | Total Duration of NPU Macro1 in Lane 0 Mode | Total time NPU Macro1 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 9 | HCCS Lane mode | npu_macro2_0lane_total_sec | Total Duration of NPU Macro2 in Lane 0 Mode | Total time NPU Macro2 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 10 | HCCS Lane mode | npu_macro3_0lane_total_sec | Total Duration of NPU Macro3 in Lane 0 Mode | Total time NPU Macro3 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 11 | HCCS Lane mode | npu_macro4_0lane_total_sec | Total Duration of NPU Macro4 in Lane 0 Mode | Total time NPU Macro4 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 12 | HCCS Lane mode | npu_macro5_0lane_total_sec | Total Duration of NPU Macro5 in Lane 0 Mode | Total time NPU Macro5 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 13 | HCCS Lane mode | npu_macro6_0lane_total_sec | Total Duration of NPU Macro6 in Lane 0 Mode | Total time NPU Macro6 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 14 | HCCS Lane mode | npu_macro7_0lane_total_sec | Total Duration of NPU Macro7 in Lane 0 Mode | Total time NPU Macro7 operates in Lane 0 mode during a detection period | s | N/A | ≥0 | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |

| No. | Category | Metric | Display Name | Description | Unit | Conversion Rule | Value Range | Dimension | Supported Model | Supported Cloud Eye Agent Version |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | HCCS Serdes SNR | npu_macro1_serdes_lane0_snr | NPU Macro1 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro1 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 2 | HCCS Serdes SNR | npu_macro1_serdes_lane1_snr | NPU Macro1 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro1 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 3 | HCCS Serdes SNR | npu_macro1_serdes_lane2_snr | NPU Macro1 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro1 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 4 | HCCS Serdes SNR | npu_macro1_serdes_lane3_snr | NPU Macro1 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro1 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 5 | HCCS Serdes SNR | npu_macro2_serdes_lane0_snr | NPU Macro2 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro2 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 6 | HCCS Serdes SNR | npu_macro2_serdes_lane1_snr | NPU Macro2 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro2 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 7 | HCCS Serdes SNR | npu_macro2_serdes_lane2_snr | NPU Macro2 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro2 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 8 | HCCS Serdes SNR | npu_macro2_serdes_lane3_snr | NPU Macro2 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro2 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 9 | HCCS Serdes SNR | npu_macro3_serdes_lane0_snr | NPU Macro3 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro3 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 10 | HCCS Serdes SNR | npu_macro3_serdes_lane1_snr | NPU Macro3 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro3 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 11 | HCCS Serdes SNR | npu_macro3_serdes_lane2_snr | NPU Macro3 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro3 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 12 | HCCS Serdes SNR | npu_macro3_serdes_lane3_snr | NPU Macro3 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro3 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 13 | HCCS Serdes SNR | npu_macro4_serdes_lane0_snr | NPU Macro4 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro4 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 14 | HCCS Serdes SNR | npu_macro4_serdes_lane1_snr | NPU Macro4 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro4 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 15 | HCCS Serdes SNR | npu_macro4_serdes_lane2_snr | NPU Macro4 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro4 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 16 | HCCS Serdes SNR | npu_macro4_serdes_lane3_snr | NPU Macro4 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro4 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 17 | HCCS Serdes SNR | npu_macro5_serdes_lane0_snr | NPU Macro5 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro5 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 18 | HCCS Serdes SNR | npu_macro5_serdes_lane1_snr | NPU Macro5 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro5 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 19 | HCCS Serdes SNR | npu_macro5_serdes_lane2_snr | NPU Macro5 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro5 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 20 | HCCS Serdes SNR | npu_macro5_serdes_lane3_snr | NPU Macro5 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro5 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 21 | HCCS Serdes SNR | npu_macro6_serdes_lane0_snr | NPU Macro6 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro6 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 22 | HCCS Serdes SNR | npu_macro6_serdes_lane1_snr | NPU Macro6 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro6 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 23 | HCCS Serdes SNR | npu_macro6_serdes_lane2_snr | NPU Macro6 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro6 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 24 | HCCS Serdes SNR | npu_macro6_serdes_lane3_snr | NPU Macro6 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro6 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 25 | HCCS Serdes SNR | npu_macro7_serdes_lane0_snr | NPU Macro7 SerDes Lane 0 SNR | SNR for SerDes Lane 0 in NPU Macro7 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 26 | HCCS Serdes SNR | npu_macro7_serdes_lane1_snr | NPU Macro7 SerDes Lane 1 SNR | SNR for SerDes Lane 1 in NPU Macro7 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 27 | HCCS Serdes SNR | npu_macro7_serdes_lane2_snr | NPU Macro7 SerDes Lane 2 SNR | SNR for SerDes Lane 2 in NPU Macro7 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
| 28 | HCCS Serdes SNR | npu_macro7_serdes_lane3_snr | NPU Macro7 SerDes Lane 3 SNR | SNR for SerDes Lane 3 in NPU Macro7 | dB | N/A | Natural number | instance_id, npu | Snt9b Snt9b23 | telescope: 2.7.5.9 or later |
