Accessing OBS Using Hudi Through Guardian

After interconnecting Guardian with OBS by referring to Disabling Ranger OBS Path Authentication for Guardian or Enabling Ranger OBS Path Authentication for Guardian, you can create a Hudi Copy-on-Write (COW) table in spark-shell and store it in OBS.

Prerequisites

If you interconnected Guardian with OBS by referring to Enabling Ranger OBS Path Authentication for Guardian, ensure that you have Read and Write permissions on the OBS path in Ranger. For details about how to grant the permissions, see Configuring Ranger Permissions.

Interconnecting Hudi with OBS

- Log in to the node where the client is installed as the client installation user.

- Run the following commands to configure environment variables:

Load the environment variables.

```shell
source Client installation directory/bigdata_env
```

Load the component environment variables.

```shell
source Client installation directory/Hudi/component_env
```

- Modify the configuration file.

```shell
vim Client installation directory/Hudi/hudi/conf/hdfs-site.xml
```

Set the following property. The dfs.namenode.acls.enabled parameter specifies whether to enable the HDFS ACL function.

```xml
<property>
  <name>dfs.namenode.acls.enabled</name>
  <value>false</value>
</property>
```

- If Kerberos authentication is enabled for the cluster (security mode), run the following command to authenticate as a user who has Read and Write permissions on the OBS path. If Kerberos authentication is disabled (normal mode), skip this command.

```shell
kinit Username
```

- Start spark-shell and run the following commands to create a COW table and store it in OBS:

```shell
spark-shell --master yarn
```

```scala
import org.apache.hudi.QuickstartUtils._
import scala.collection.JavaConversions._
import org.apache.spark.sql.SaveMode._
import org.apache.hudi.DataSourceReadOptions._
import org.apache.hudi.DataSourceWriteOptions._
import org.apache.hudi.config.HoodieWriteConfig._

val tableName = "hudi_cow_table"
val basePath = "obs://testhudi/cow_table/"
val dataGen = new DataGenerator
// Generate 10 sample records as JSON strings and load them into a DataFrame.
val inserts = convertToStringList(dataGen.generateInserts(10))
val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))
// Write the DataFrame to the OBS path as a Hudi table.
df.write.format("org.apache.hudi").
  options(getQuickstartWriteConfigs).
  option(PRECOMBINE_FIELD_OPT_KEY, "ts").
  option(RECORDKEY_FIELD_OPT_KEY, "uuid").
  option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").
  option(TABLE_NAME, tableName).
  mode(Overwrite).
  save(basePath)
```

In the preceding commands, obs://testhudi/cow_table/ is the OBS path and testhudi is the name of the OBS parallel file system. Change them as required.

- Use the DataSource API to check whether the table has been created and whether the data is correct.

```scala
val roViewDF = spark.
  read.
  format("org.apache.hudi").
  load(basePath + "/*/*/*/*")
roViewDF.createOrReplaceTempView("hudi_ro_table")
spark.sql("select * from hudi_ro_table").show()
```

- Exit the spark-shell CLI.

```
:q
```
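The verification step reads the table with the glob basePath + "/*/*/*/*": one /* for each partition level plus one for the data files. The Hudi quickstart DataGenerator writes records under three-level partition paths such as americas/united_states/san_francisco, which is why four wildcards are used. The following plain-Scala sketch captures that rule so the glob can be adapted to a table with a different partition depth. partitionDepth and readGlob are hypothetical helper names, not part of the Hudi or Spark API; the sketch also strips a trailing slash from the base path so the result does not contain "//".

```scala
object HudiReadGlob {
  // Hypothetical helper: number of directory levels in a partition path,
  // e.g. "americas/united_states/san_francisco" has 3.
  def partitionDepth(partitionPath: String): Int =
    partitionPath.split("/").count(_.nonEmpty)

  // Hypothetical helper: one "/*" per partition level plus one for the data files.
  // A trailing "/" on basePath is removed so the glob does not contain "//".
  def readGlob(basePath: String, depth: Int): String = {
    require(depth >= 0, "depth must be non-negative")
    basePath.stripSuffix("/") + "/*" * (depth + 1)
  }

  def main(args: Array[String]): Unit = {
    val depth = partitionDepth("americas/united_states/san_francisco")
    // Prints obs://testhudi/cow_table/*/*/*/*
    println(readGlob("obs://testhudi/cow_table/", depth))
  }
}
```

For example, a table whose partition paths are only one level deep would be read with readGlob(basePath, 1), which appends /*/* to the base path.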
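The write in the spark-shell step sets three key options: RECORDKEY_FIELD_OPT_KEY (uuid) identifies a record, PARTITIONPATH_FIELD_OPT_KEY (partitionpath) names its partition directory, and PRECOMBINE_FIELD_OPT_KEY (ts) decides which record is kept when one incoming batch contains several records with the same key. The following plain-Scala sketch models that deduplication rule, assuming Hudi's default payload behavior of keeping the record with the largest precombine value and grouping by record key plus partition path (as with the default non-global index). It is an illustration only; Trip and precombine are hypothetical names, not part of the Hudi API.

```scala
object PrecombineSketch {
  // Hypothetical record type holding the fields named in the write options.
  final case class Trip(uuid: String, partitionpath: String, ts: Long, fare: Double)

  // Models precombine: group by (record key, partition path) and keep the
  // record with the largest ts in each group. maxBy returns the first
  // maximum on ties; Hudi's own tie-breaking is not modeled here.
  def precombine(batch: Seq[Trip]): Seq[Trip] =
    batch
      .groupBy(t => (t.uuid, t.partitionpath))
      .values
      .map(_.maxBy(_.ts))
      .toSeq
      .sortBy(t => (t.uuid, t.partitionpath))

  def main(args: Array[String]): Unit = {
    val batch = Seq(
      Trip("a", "americas/brazil/sao_paulo", 1L, 10.0),
      Trip("a", "americas/brazil/sao_paulo", 5L, 12.5),
      Trip("b", "asia/india/chennai", 3L, 7.0)
    )
    // Prints the ts=5 version of "a" and the only version of "b".
    precombine(batch).foreach(println)
  }
}
```

This only models records within a single write batch; how an incoming record is merged with data already stored in the table depends on the configured payload class.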

Configuring Ranger Permissions

- Log in to FusionInsight Manager and choose System > Permission > User Group. On the displayed page, click Create User Group.

For details about how to log in to MRS Manager, see Accessing MRS Manager.

- Create a user group without any role, for example, obs_hudi, and bind the user group to the user who will access OBS.

- Log in to the Ranger management page as the rangeradmin user.

- On the home page, click the OBS component plug-in in the EXTERNAL AUTHORIZATION area.

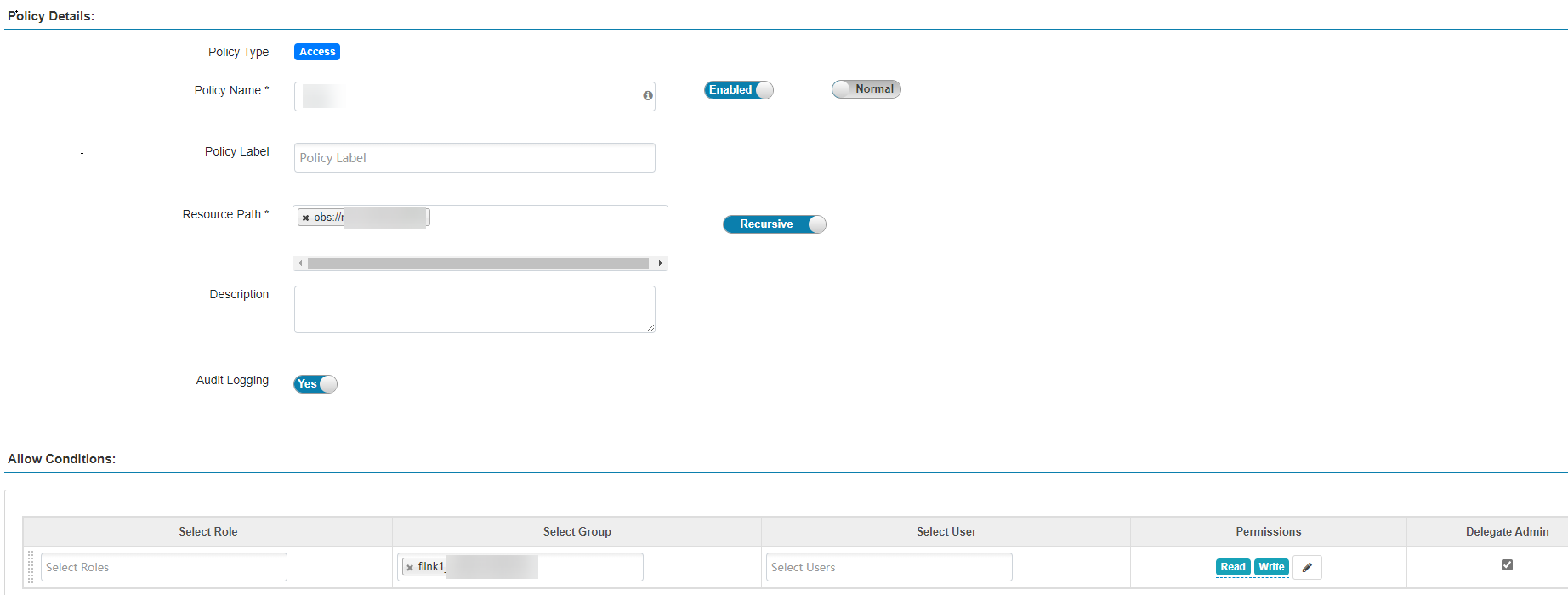

- Click Add New Policy to grant the user group created earlier (for example, obs_hudi) Read and Write permissions on the OBS path. If the OBS path does not exist, create it in advance. The wildcard character (*) is not allowed.

Figure 1 Granting the Hudi user group permissions for reading and writing OBS paths

Before configuring permission policies for OBS paths in Ranger, ensure that the AccessLabel function has been enabled for OBS. To enable it, contact the OBS O&M personnel.
