Interconnecting HDFS with OBS Using an IAM Agency

After configuring decoupled storage and compute for a cluster by referring to Interconnecting an MRS Cluster with OBS Using an IAM Agency, you can view and create OBS file directories on the HDFS client.

Interconnecting HDFS with OBS

- Log in to the node where the HDFS client is installed as the client installation user.

For details about how to download and install the cluster client, see Installing an MRS Cluster Client.

- Run the following command to switch to the client installation directory.

cd Client installation directory

- Run the following command to configure environment variables:

source bigdata_env

- If Kerberos authentication is enabled for the cluster, authenticate the component service user. Skip this step if Kerberos authentication is disabled.

kinit Component service user

- Access the target OBS file system using the HDFS CLI.

For example:

- Run the following command to access the OBS file system:

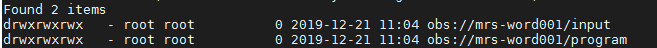

hdfs dfs -ls obs://OBS parallel file system name/pathFor example, run the following command to access the mrs-word001 parallel file system. If the file list is returned, OBS is successfully accessed.

hadoop fs -ls obs://mrs-word001/

Figure 1 Returned file list

- Run the following command to upload the /opt/test.txt file from the client node to the OBS file system path:

hdfs dfs -put /opt/test.txt obs://OBS parallel file system name/path

View the uploaded file in the corresponding OBS file system path.

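The client steps above can be collected into a small helper file. This is a minimal sketch, not an MRS tool: the function names `prepare_client_env`, `obs_uri`, `check_obs_access`, and `upload_to_obs` are hypothetical, and the sketch assumes bash, that `bigdata_env` sits in the client installation directory as described above, and that `kinit` prompts for the component service user's password. Because the file is meant to be sourced, the directory change and the variables loaded from `bigdata_env` stay in the current shell.

```shell
# Minimal sketch of the client steps above, meant to be sourced into bash.
# All function names are hypothetical helpers, not part of the MRS client.

# Switch to the client installation directory, load bigdata_env, and run
# kinit only when a principal is given (Kerberos-enabled clusters).
prepare_client_env() {
  local client_dir="$1" principal="${2:-}"
  cd "$client_dir" || return 1
  source bigdata_env || return 1
  if [ -n "$principal" ]; then
    kinit "$principal" || return 1   # prompts for the user's password
  fi
}

# Build obs://<file system name>/<path>. One leading slash is dropped from
# the path so that a path such as /data does not yield obs://name//data.
obs_uri() {
  local fs_name="$1" path="${2:-}"
  path="${path#/}"
  printf 'obs://%s/%s\n' "$fs_name" "$path"
}

# List an OBS path; a returned file list means OBS is reachable.
check_obs_access() {
  local uri
  uri="$(obs_uri "$1" "${2:-}")"
  hdfs dfs -ls "$uri"
}

# Upload a local file to an OBS path, then list the target to confirm it.
upload_to_obs() {
  local local_file="$1" uri
  uri="$(obs_uri "$2" "${3:-}")"
  hdfs dfs -put "$local_file" "$uri" && hdfs dfs -ls "$uri"
}
```

For example, after sourcing the file, `prepare_client_env /opt/client hdfsuser` (placeholder directory and user), then `check_obs_access mrs-word001` and `upload_to_obs /opt/test.txt mrs-word001 tmp/` run the same `hdfs dfs -ls` and `hdfs dfs -put` commands as the manual steps.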

Changing the Log Level of the OBS Client

If the OBS client prints a large number of logs, read and write performance on the OBS file system may be affected. To reduce logging, adjust the log level of the OBS client as follows:

- Go to the Hadoop configuration directory.

cd Client installation directory/HDFS/hadoop/etc/hadoop

- Edit the log4j.properties file.

vi log4j.properties

Add the following OBS log level configuration to the file and save it.

log4j.logger.org.apache.hadoop.fs.obs=WARN
log4j.logger.com.obs=WARN

- Run the following command:

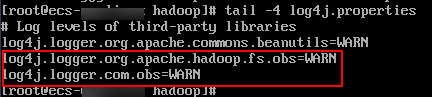

tail -4 log4j.properties

If the command output shown in Figure 2 is displayed, the log level has been changed.
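Instead of editing the file in vi, the same change can be scripted. The following is a minimal sketch, assuming a POSIX-like shell and the log4j 1.x `log4j.properties` format shown above; `set_obs_log_level` is a hypothetical helper name. It backs up the file, removes any existing entry for the two OBS loggers so that repeated runs do not pile up duplicates, appends the new level, and prints the last four lines as in the manual check.

```shell
# Hypothetical helper: set both OBS client loggers in log4j.properties.
# $1 = path to log4j.properties, $2 = log level (defaults to WARN).
set_obs_log_level() {
  local file="$1" level="${2:-WARN}"
  local logger
  if [ ! -f "$file" ]; then
    echo "log4j.properties not found: $file" >&2
    return 1
  fi
  cp -p "$file" "$file.bak" || return 1        # keep a backup before editing
  for logger in org.apache.hadoop.fs.obs com.obs; do
    # Keep every line that does not start with this logger's key...
    awk -v key="log4j.logger.${logger}=" 'index($0, key) != 1' "$file" > "$file.tmp" || return 1
    # ...then append the new setting for it.
    printf 'log4j.logger.%s=%s\n' "$logger" "$level" >> "$file.tmp"
    # Overwrite in place so the file keeps its owner and permissions.
    cat "$file.tmp" > "$file" && rm -f "$file.tmp"
  done
  tail -n 4 "$file"                            # same check as the manual step
}
```

For example, `set_obs_log_level "Client installation directory/HDFS/hadoop/etc/hadoop/log4j.properties"` applies WARN; pass a second argument such as `ERROR` to use a different level.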
