Viewing MRS Job Details and Logs

MRS is a service for deploying and managing Hadoop systems on Huawei Cloud. On the MRS console, you can manage, submit, and execute jobs, and view job status, detailed job configurations, and run logs. This helps you quickly check how a job is running, optimize its configuration, and rectify faults.

Notes and Constraints

Spark SQL and DistCp jobs do not generate logs on the backend nodes. Therefore, you cannot view the run logs of running Spark SQL and DistCp jobs on the console.

Viewing the Job Status

- Log in to the MRS console.

- On the Active Clusters page, select a running cluster and click its name to switch to the cluster details page.

- Click Job Management to view the list and status of jobs created in an MRS cluster.

By default, jobs in the job list are sorted by submission time. For details about the parameters in the job list, see Table 1. You can quickly filter jobs by job type and job status.

Table 1 Job list parameters

| Parameter | Description |
| --- | --- |
| Name/ID | Job name, which is set when a job is created. ID is the unique identifier of a job. After a job is added, the system automatically assigns a value to ID. |
| Username | Name of the user who submits a job. |
| Job Type | Job type. After importing and exporting files on the Files tab page, you can view the DistCp job on the Jobs page. |
| Status | Job status: Submitted (the job has been submitted); Accepted (initial status of a job after it is successfully submitted); Running (the job is running); Completed (the job is executed); Terminated (the job execution is stopped); Abnormal (an error is reported during job execution, or the job execution is complete but fails). |
| Result | Execution result of a job: Undefined (the job is being executed); Successful (the job has been executed); Killed (the job is manually terminated during execution); Failed (the job fails to be executed). After a job is successfully executed or fails to be executed, it cannot be executed again. You can only add a job again. |
| Queue Name | Name of the resource queue bound to the user who submits the job. |
| Submitted | Time when a job is submitted. |
| Ended | Time when a job is completed or manually stopped. |
| Operation | Operations that can be performed on the job. For details, see Managing MRS Cluster Jobs. |
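If you handle the Status values from Table 1 in your own scripts, a small lookup can separate jobs that are still in progress from jobs that have stopped. The following is a minimal sketch. The function name classify_job_status and the grouping into "active" and "finished" are assumptions made for illustration, not part of MRS.

```shell
# Sketch only: classify_job_status is a hypothetical helper, not an MRS command.
# It maps a Status value from Table 1 to "active", "finished", or "unknown".
classify_job_status() {
  case "${1:-}" in
    # The job has been submitted or accepted, or is still running.
    Submitted|Accepted|Running) echo "active" ;;
    # The job is executed, was stopped, or reported an error.
    Completed|Terminated|Abnormal) echo "finished" ;;
    # Any value that Table 1 does not list.
    *) echo "unknown" ;;
  esac
}
```

For example, classify_job_status Running prints active, and a value that Table 1 does not list prints unknown.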

Viewing Job Logs on the Console

- Log in to the MRS console.

- Choose Active Clusters in the navigation pane, select a running cluster, and click its name to switch to the cluster details page.

- Open the job list of the cluster.

- In the Operation column of the job to be viewed, choose More > View Details.

The View Details window displays the configuration of the selected job.

- Select a running job, and click View Log in the Operation column.

A new page opens and displays the real-time log information of the job.

- Each tenant can submit and view 10 jobs concurrently.

- If you choose to save job logs to OBS or HDFS, the system compresses the logs and saves them to the corresponding path after the job is executed. As a result, the status of such a job remains Running after execution finishes and changes to Completed only after the logs are successfully stored. The time needed to store the logs depends on the log size and is typically several minutes.

- When you create, stop, or delete a job in an MRS cluster on the management console or using the APIs, the operation will not be recorded on the Operation Logs page. You can check the operation records on the CTS console.
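Because a job whose logs are saved to OBS or HDFS stays in the Running state until the logs are stored, a script that waits for such a job should poll the status rather than assume the job ends as soon as execution finishes. The following is a minimal sketch of such a loop. Both wait_for_job_completion and get_job_status are hypothetical names: get_job_status stands for whatever mechanism you use to read the Status value of a job and must be supplied by you.

```shell
# Sketch only: wait_for_job_completion and get_job_status are hypothetical.
# get_job_status <job_id> must print the job's current Status value.
# Usage: wait_for_job_completion <job_id> [interval_seconds] [max_attempts]
wait_for_job_completion() {
  local job_id="${1:-}" interval="${2:-30}" max_attempts="${3:-20}"
  local attempt=1 status=""

  while [ "$attempt" -le "$max_attempts" ]; do
    status=$(get_job_status "$job_id") || return 1
    case "$status" in
      # Stop polling once the job has left the in-progress states.
      Completed|Terminated|Abnormal)
        echo "$status"
        return 0
        ;;
    esac
    sleep "$interval"
    attempt=$((attempt + 1))
  done

  echo "timed out waiting for job ${job_id}; last status: ${status}" >&2
  return 1
}
```

The default interval of 30 seconds and the limit of 20 attempts are arbitrary choices for this sketch. Adjust them to the log sizes you expect.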

Viewing Job Logs on the YARN Web UI

- Log in to FusionInsight Manager of the MRS cluster.

For details about how to log in to FusionInsight Manager, see Accessing MRS Manager.

- Choose Cluster > Services > Yarn and click the hyperlink on the right of ResourceManager Web UI.

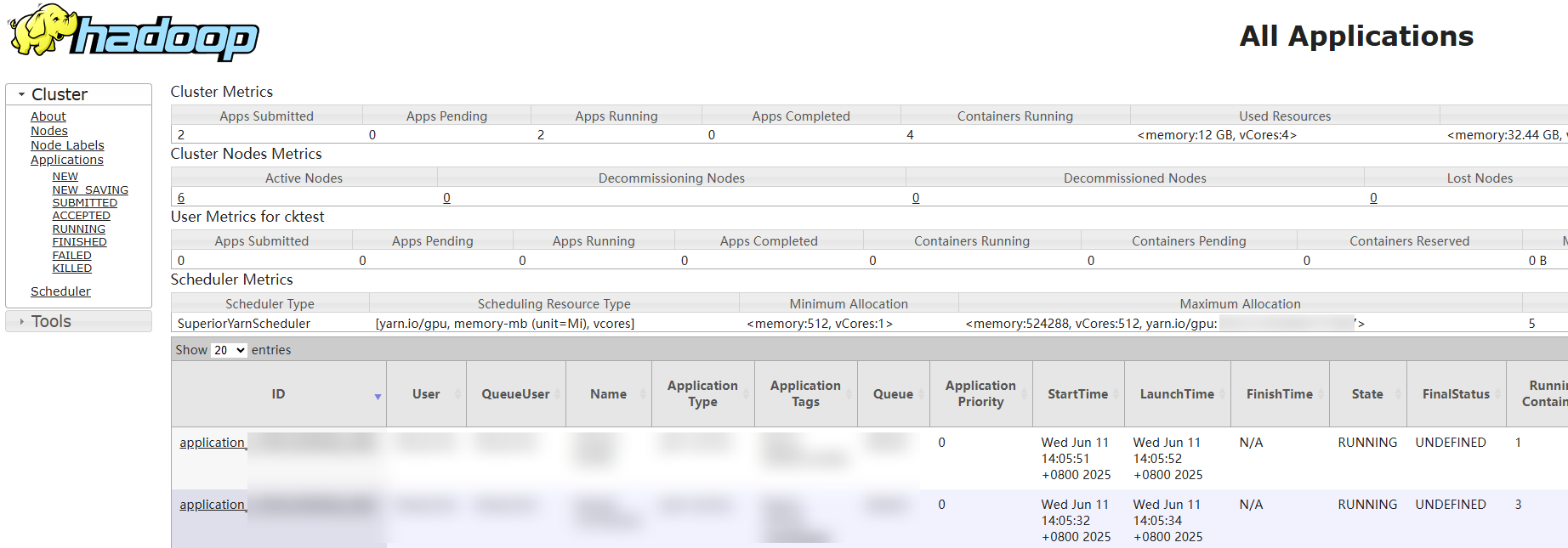

- On the displayed All Applications page, click Logout in the upper right corner. Then, log in again as a YARN administrator or as the user who submitted the job.

To create a YARN administrator, perform the following steps:

- Create a role with the YARN administrator permissions. For details, see Creating YARN Roles.

- Create a human-machine user and associate the role created in the previous step with the user. For details, see Creating an MRS Cluster User.

After the user is created, log in to FusionInsight Manager as that user and change the initial password as prompted.

- On the All Applications page, click the application ID of a job to view the job running information, and click Logs to view the logs.

Figure 1 Viewing YARN job logs

- View the logs of a specified YARN job on the client.

- Log in to the node where the cluster client is located as user root.

- Run the following command to initialize environment variables:

source Client installation directory/bigdata_env

- If Kerberos authentication is enabled for the current cluster, run the following command to authenticate the current user (YARN administrator or a user who submits jobs). If Kerberos authentication is disabled for the current cluster, skip this step.

kinit MRS cluster user

- Run the following command to obtain the logs of a specified job:

yarn logs -applicationId Application ID of the required job
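The client-side steps above can be combined into one helper when you fetch logs often. The following is a minimal sketch, assuming a client that provides the bigdata_env file and the kinit and yarn commands described above. The function name fetch_yarn_job_logs and its argument order are assumptions made for illustration.

```shell
# Sketch only: fetch_yarn_job_logs is a hypothetical wrapper around the
# commands in this section.
# Usage: fetch_yarn_job_logs <client_dir> <application_id> [kerberos_user]
fetch_yarn_job_logs() {
  local client_dir="${1:-}" app_id="${2:-}" krb_user="${3:-}"

  if [ -z "$client_dir" ] || [ -z "$app_id" ]; then
    echo "usage: fetch_yarn_job_logs <client_dir> <application_id> [kerberos_user]" >&2
    return 2
  fi

  if [ ! -f "${client_dir}/bigdata_env" ]; then
    echo "bigdata_env not found in ${client_dir}" >&2
    return 1
  fi

  # Initialize the client environment variables ("." is the portable
  # form of "source").
  . "${client_dir}/bigdata_env"

  # Authenticate only when a user is given. Omit the third argument on
  # clusters where Kerberos authentication is disabled.
  if [ -n "$krb_user" ]; then
    kinit "$krb_user" || return 1
  fi

  # Obtain the logs of the specified job.
  yarn logs -applicationId "$app_id"
}
```

A call passes the client installation directory, the application ID shown on the All Applications page, and, on a cluster with Kerberos authentication enabled, the MRS cluster user. The output can be redirected to a file for later inspection.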

Helpful Links

- You can manually stop a running MRS job or delete a completed job on the management console. For details, see Stopping and Deleting an MRS Cluster Job.
