Kafka-to-Kafka Data Synchronization

Constraints

- If the source is a Kafka instance:

  - The source and target must both be DMS Kafka instances of the same version. Versions 2.7 and 3.x are currently supported.

  - The source and target Kafka instances must have the same number of brokers, broker CPU, memory, and storage space.

  - A topic occupying one partition is created in both the source and target Kafka instances. Ensure that both instances have sufficient partitions available before you create the event stream job.

- If the source is a Kafka address:

  - The target must be a DMS Kafka instance of version 2.7 or 3.x. The source must be a DMS Kafka instance of version 2.7 or 3.x, or another cloud vendor's or a self-built Kafka instance that is compatible with open-source Kafka 2.7 or later.

  - The target Kafka must have the same number of brokers as the source. Its broker CPU, memory, storage space, and topics must be greater than or equal to the source's, and its partition count must be at least the source's partition count plus two.

  - The Kafka sync job creates two topics that occupy two partitions in total in the target Kafka. Ensure that sufficient partitions are available before you create the event stream job.
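The Kafka-address constraints above can be checked before you create a job. The following is a minimal sketch and not part of EG: the `KafkaSpec` fields and the `check_address_constraints` function are hypothetical names, and the sketch assumes that "topics" and "partitions" refer to the capacity of each Kafka instance.

```python
from dataclasses import dataclass


@dataclass
class KafkaSpec:
    """Hypothetical summary of a Kafka instance's specifications."""
    brokers: int
    broker_cpu: int
    broker_memory_gb: int
    storage_gb: int
    topics: int
    partitions: int


def check_address_constraints(source: KafkaSpec, target: KafkaSpec) -> list:
    """Return the constraints that the target violates (empty list if none)."""
    problems = []
    # The broker count must be identical.
    if target.brokers != source.brokers:
        problems.append("broker count must match the source")
    # These specifications must be greater than or equal to the source's.
    for field in ("broker_cpu", "broker_memory_gb", "storage_gb", "topics"):
        if getattr(target, field) < getattr(source, field):
            problems.append(f"{field} must be >= the source value")
    # The sync job needs two extra partitions in the target.
    if target.partitions < source.partitions + 2:
        problems.append("partitions must be >= source partitions + 2")
    return problems
```

An empty list means that, under these assumptions, the target meets the listed constraints. Any returned message names the specification to adjust.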

Prerequisites

- The source and target Kafka instances are available.

- The source and target have sufficient VPC and subnet resources, Kafka topic partitions, and storage space.

- The source and target instances can communicate with the VPC selected when you create the event stream cluster.

Procedure

- Log in to the EG console.

- In the navigation pane, choose Event Streams > Professional Event Stream Jobs.

- Click Create Job in the upper right corner. The Basic Settings page is displayed.

- Configure the basic settings. The following uses a non-whitelisted account as an example.

Table 1 Basic information parameters

| Parameter | Description |
| --- | --- |
| Cluster | Select a created cluster. If no cluster is created, create one by referring to Professional Event Stream Clusters. |
| Job Name | Enter a job name. |
| Scenario | Sync is selected by default. Data across sources can be synchronized in real time. |
| Description | Enter the description of the job. |

- Click Next: Configure Source and Target. The Configure Source and Target page is displayed.

Table 2 Parameters for configuring source and target data

| Parameter | Description |
| --- | --- |
| Type | Select the configuration type. Options: Kafka instance and Kafka address. The target data type is Kafka instance by default. |
| Instance Alias | Enter an instance alias, which identifies the source or target instance. You are advised to set only one alias for the same source or target instance. |
| Kafka Addresses | Required when Type is set to Kafka address. Enter Kafka addresses. |
| Region | Select a region. |
| Project | Select a project. |
| Instance | Select a Kafka instance. |
| Access Mode | Select Plaintext or Ciphertext. |
| Security Protocol | If Access Mode is Plaintext, the security protocol is PLAINTEXT. If Access Mode is Ciphertext, the security protocol can be SASL_SSL or SASL_PLAINTEXT. |
| Authentication Mechanism | Mandatory when Access Mode is set to Ciphertext. The authentication mechanism can be SCRAM-SHA-512 or PLAIN. |
| Username | Mandatory when Access Mode is set to Ciphertext. Enter a username. |
| Password | Mandatory when Access Mode is set to Ciphertext. Enter a password. |
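The access settings in Table 2 correspond closely to standard Kafka client security settings. The following sketch shows how the same choices could be validated and turned into client properties if you want to check connectivity with your own Kafka client. The function name `build_client_config` is hypothetical, and the property keys follow librdkafka-style naming, which is an assumption and not something the EG console exposes.

```python
def build_client_config(addresses, access_mode, security_protocol=None,
                        mechanism=None, username=None, password=None):
    """Validate Table 2 style settings and return Kafka client properties.

    Property keys use librdkafka-style names (an assumption for illustration).
    """
    config = {"bootstrap.servers": addresses}

    # Plaintext access always uses the PLAINTEXT security protocol.
    if access_mode == "Plaintext":
        config["security.protocol"] = "PLAINTEXT"
        return config

    if access_mode != "Ciphertext":
        raise ValueError("access_mode must be 'Plaintext' or 'Ciphertext'")

    # Ciphertext access requires a SASL protocol, a mechanism, and credentials.
    if security_protocol not in ("SASL_SSL", "SASL_PLAINTEXT"):
        raise ValueError("security_protocol must be SASL_SSL or SASL_PLAINTEXT")
    if mechanism not in ("SCRAM-SHA-512", "PLAIN"):
        raise ValueError("mechanism must be SCRAM-SHA-512 or PLAIN")
    if not username or not password:
        raise ValueError("username and password are mandatory for Ciphertext")

    config.update({
        "security.protocol": security_protocol,
        "sasl.mechanism": mechanism,
        "sasl.username": username,
        "sasl.password": password,
    })
    return config
```

For example, `build_client_config("host1:9092", "Plaintext")` returns only the address and the PLAINTEXT protocol, while a Ciphertext call without a username raises `ValueError`.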

- Click Test Connectivity. After confirming that the instance connectivity of the source and target is normal, click Next: Advanced Settings. The Advanced Settings page is displayed.

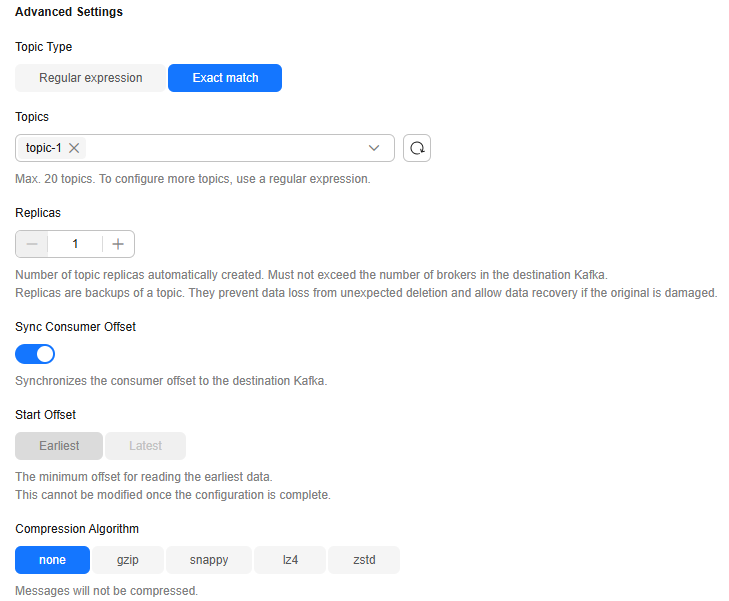

Figure 1 Advanced settings

Table 3 Configuration parameters

| Parameter | Description |
| --- | --- |
| Topic Type | Select Regular expression or Exact match. NOTE: If you select Regular expression, enter a regular expression in the Topics (Regular) text box. For example, `.*` matches all topics, and `topic.*` matches all topics with the topic prefix. If you select Exact match, you need to select topics. |
| Replicas | Set the number of replicas. The number of replicas of the automatically created topic cannot exceed the number of brokers in the target Kafka. |
| Sync Consumer Offset | Select whether to enable this function. If this function is enabled, the consumer offset will be synchronized to the target Kafka. |
| Start Offset | Select Earliest or Latest. |
| Compression Algorithm | Select none, gzip, snappy, lz4, or zstd as the compression algorithm. |
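To preview which topics a regular expression would select before you enter it, you can test it against a list of topic names. The sketch below uses Python's `re` module and assumes that the expression must match the whole topic name. Both the full-match behavior and the `select_topics` helper are assumptions for illustration, and the regular expression dialect used by the service may differ from Python's in edge cases.

```python
import re


def select_topics(pattern, topics):
    """Return the topics whose full name matches the regular expression."""
    compiled = re.compile(pattern)
    # fullmatch requires the entire topic name to match the pattern.
    return [name for name in topics if compiled.fullmatch(name)]


topics = ["topic-orders", "topic", "audit-log", "my-topic"]

# ".*" selects every topic.
all_topics = select_topics(".*", topics)

# "topic.*" selects only names that start with "topic".
prefixed = select_topics("topic.*", topics)
```

Under the full-match assumption, `my-topic` is not selected by `topic.*` because the name does not start with `topic`.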

- Click Next: Pre-check. On the displayed page, click Finish.

- Return to the professional event stream job list and click the name of the created event stream. Click Job Management to view the synchronization details.

Table 4 Parameter description

| Parameter | Description |
| --- | --- |
| Topic | Topic created when a Kafka instance is created. |
| Partitions | Number of partitions set when a topic is created. The larger the number of partitions, the higher the consumption concurrency. |
| Messages to Sync | Number of messages that have not been synchronized in the current topic partition. |
| Sync Rate | Rate at which messages are synchronized in the current job. You can click Limit Rate to configure flow control for the source Kafka instance. |
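As a rough illustration of the Messages to Sync value, the number of unsynchronized messages in a partition can be thought of as the gap between the latest offset in the source partition and the offset already synchronized. This is a sketch of that common definition of lag, not a description of how the console computes the figure. The `messages_to_sync` function and its inputs are hypothetical.

```python
def messages_to_sync(end_offsets, synced_offsets):
    """Return the per-partition backlog as end offset minus synced offset.

    Both arguments map a partition number to an offset. The result is
    clamped at zero so that a partition never reports a negative backlog.
    """
    return {
        partition: max(end - synced_offsets[partition], 0)
        for partition, end in end_offsets.items()
    }


# Partition 0 is 20 messages behind; partition 1 is fully synchronized.
backlog = messages_to_sync({0: 120, 1: 80}, {0: 100, 1: 80})
```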
