Collecting Logs

Cloud Native Log Collection is an add-on based on Fluent Bit and OpenTelemetry for collecting logs and Kubernetes events. This add-on supports CRD-based log collection policies. Based on the configured policies, it collects and forwards standard output logs, container file logs, and Kubernetes events in a cluster. It also reports all abnormal Kubernetes events and some normal Kubernetes events to AOM. These events can then be used to configure event alarms.

Constraints

- Up to 100 log collection policies can be created for each cluster.

- This add-on cannot collect .gz, .tar, or .zip log files, and it cannot collect logs through symbolic links.

- In each cluster, up to 10,000 single-line logs can be collected per second, and up to 2,000 multi-line logs can be collected per second.

- If a volume is attached to the data directory of a service container, this add-on cannot collect data from the parent directory of that data directory. In this case, set the collection directory to the complete data directory.

Billing

LTS does not charge you for creating log groups and offers a free quota for log collection every month. You pay only for log volume that exceeds the quota. For details, see Price Calculator.

Log Collection

- Enable log collection.

Enabling log collection during cluster creation

- Log in to the CCE console.

- In the upper right corner, click Buy Cluster.

- In the Select Add-on step, select Cloud Native Log Collection.

- Click Next: Configure Add-on in the lower right corner and select the required logs.

- Container logs: A log collection policy named default-stdout will be created, and standard output logs in all namespaces will be reported to LTS.

- Kubernetes events: A log collection policy named default-event will be created, and Kubernetes events in all namespaces will be reported to LTS.

- Click Next: Confirm Settings. On the displayed page, click Submit.

Enabling log collection for an existing cluster

- Log in to the CCE console, click the cluster name to access the cluster console, and choose Logging in the navigation pane.

- (Optional) Complete the authorization if you have not done so.

In the displayed dialog box, click Authorize.

Figure 1 Adding authorization

- Click Enable and wait for about 30 seconds until the log page is automatically displayed.

- Standard output logs: A log collection policy named default-stdout will be created, and standard output logs in all namespaces will be reported to LTS.

- Kubernetes events: A log collection policy named default-event will be created, and Kubernetes events in all namespaces will be reported to LTS.

Figure 2 Enabling log collection

- View and configure log collection policies.

- Log in to the CCE console, click the cluster name to access the cluster console, and choose Logging in the navigation pane.

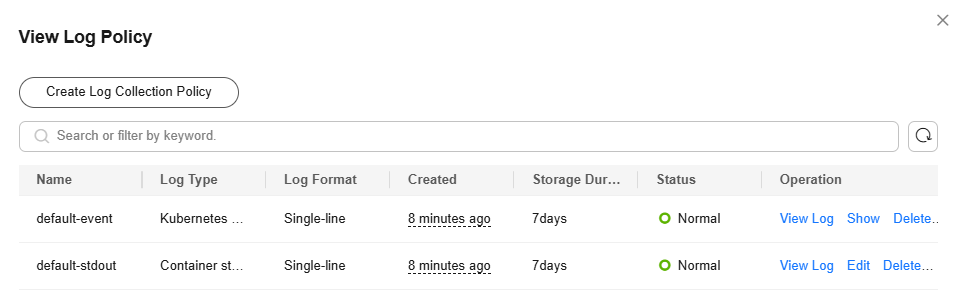

- Click View Log Policy in the upper right corner.

All log collection policies that report logs to LTS are displayed.

If Container standard output and Kubernetes events are selected when you enable logging, two log collection policies will be created, and the collected logs will be reported to the default log group and log streams.

- default-stdout: collects standard output logs. Default log group: k8s-log-{cluster-ID}; default log stream: stdout-{cluster-ID}

- default-event: collects Kubernetes events. Default log group: k8s-log-{cluster-ID}; default log stream: event-{cluster-ID}

Figure 3 Viewing log collection policies
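The default names follow a fixed pattern based on the cluster ID, so a script can derive them, for example to locate the right log stream when querying LTS. The sketch below assumes the k8s-log-{cluster-ID} group form used in this page's example names (such as k8s-log-bb7eaa87-07dd-11ed-ab6c-0255ac1001b3); default_lts_targets is a hypothetical helper, not part of any CCE or LTS API.

```python
from typing import Dict, Tuple


def default_lts_targets(cluster_id: str) -> Dict[str, Tuple[str, str]]:
    """Map each default policy to its (log group, log stream) pair.

    Hypothetical helper: the naming pattern is taken from this page,
    not from an official SDK.
    """
    group = f"k8s-log-{cluster_id}"
    return {
        "default-stdout": (group, f"stdout-{cluster_id}"),
        "default-event": (group, f"event-{cluster_id}"),
    }
```

For example, default_lts_targets("bb7eaa87-07dd-11ed-ab6c-0255ac1001b3")["default-event"] yields the group and stream that receive Kubernetes events for that cluster.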

- Click Create Log Collection Policy and configure parameters as needed.

- Policy template: If Container standard output and Kubernetes events are not selected when you enable logging or their log collection policies are deleted, you can use this option to create default log collection policies.

- Custom Policy: You can use this option to create a custom log collection policy.

Figure 4 Custom policy

The following are requirements for configuring the container log paths:

- Log directory: Enter an absolute path, for example, /log. The path must start with a slash (/) and contain a maximum of 512 characters. Only uppercase letters, lowercase letters, digits, hyphens (-), underscores (_), slashes (/), asterisks (*), and question marks (?) are allowed.

- Log file name: It can contain only uppercase letters, lowercase letters, digits, hyphens (-), underscores (_), asterisks (*), question marks (?), and periods (.). Logs in the format of .gz, .tar, and .zip are not supported.
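The character and length rules above can be checked locally before a policy is submitted. This is a minimal pre-check in Python, assuming the rules exactly as listed; validate_log_directory and validate_log_file_name are hypothetical names, and the console remains the authority on what is accepted. It does not check the wildcard-level limits described next.

```python
import re

# Allowed characters, taken from the path requirements above (hypothetical pre-check).
_DIR_RE = re.compile(r"/[A-Za-z0-9_\-/*?]*")   # must start with "/"
_FILE_RE = re.compile(r"[A-Za-z0-9_\-*?.]+")
_UNSUPPORTED = (".gz", ".tar", ".zip")


def validate_log_directory(directory: str) -> None:
    """Raise ValueError if the log directory breaks the documented rules."""
    if len(directory) > 512:
        raise ValueError("log directory exceeds 512 characters")
    if not _DIR_RE.fullmatch(directory):
        raise ValueError(f"invalid log directory: {directory!r}")


def validate_log_file_name(file_name: str) -> None:
    """Raise ValueError if the log file name breaks the documented rules."""
    if not _FILE_RE.fullmatch(file_name):
        raise ValueError(f"invalid log file name: {file_name!r}")
    if file_name.endswith(_UNSUPPORTED):
        raise ValueError(f"compressed or archived logs are not supported: {file_name!r}")
```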

The directory name and file name can be complete names or contain wildcard characters. A maximum of three levels of directories can contain wildcard characters, and the first-level directory cannot use wildcard characters. Wildcard characters can only be asterisks (*) and question marks (?). An asterisk (*) can match multiple characters. A question mark (?) can match only one character. The following are two examples:

- If the directory is /var/logs/* and the file name is *.log, the match expression is /var/logs/*/*.log, which matches any .log file in each first-level subdirectory of /var/logs. Note that this expression does not match .log files directly in /var/logs or in deeper subdirectories of /var/logs.

- If the directory is /var/logs/app_* and the file name is *.log, any log files with the extension .log in all directories that match app_* in the /var/logs directory will be reported.
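The matching behavior in these examples can be reproduced with per-level glob matching, where * and ? never cross a slash. The sketch below applies Python's fnmatch to each path level separately and also checks the wildcard limits stated above (no wildcards in the first-level directory, at most three wildcard levels). It only illustrates the documented semantics and is not the add-on's implementation; the function names are hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def _has_wildcard(part: str) -> bool:
    return "*" in part or "?" in part


def check_wildcard_levels(directory: str) -> None:
    """Enforce the documented limits on wildcard directory levels."""
    levels = PurePosixPath(directory).parts[1:]  # drop the leading "/"
    if levels and _has_wildcard(levels[0]):
        raise ValueError("the first-level directory cannot contain wildcards")
    if sum(_has_wildcard(p) for p in levels) > 3:
        raise ValueError("at most three directory levels can contain wildcards")


def build_expression(directory: str, file_name: str) -> str:
    """Join the directory and file name into a match expression."""
    return f"{directory.rstrip('/')}/{file_name}"


def matches(expression: str, path: str) -> bool:
    """Match level by level, so each * or ? stays within one directory level."""
    pattern_parts = PurePosixPath(expression).parts
    path_parts = PurePosixPath(path).parts
    if len(pattern_parts) != len(path_parts):
        return False  # a different depth can never match
    return all(fnmatchcase(name, pat) for name, pat in zip(path_parts, pattern_parts))
```

With expr = build_expression("/var/logs/*", "*.log"), matches(expr, "/var/logs/app/a.log") is True, while /var/logs/a.log and /var/logs/app/sub/a.log do not match, as in the first example.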

Table 1 Custom policy parameters

Log type description

- Container Standard Output: Used to collect container standard output logs. You can create a log collection policy by namespace, workload name, or instance label.

- Container File Log: Used to collect text logs. You can select Workload or Workload with target label when creating a log collection policy.

Log Source

- Container Standard Output:

  - All containers: You can specify all containers in a namespace. If no namespace is specified, logs of containers in all namespaces will be collected.

    Blocklist: The Cloud Native Log Collection add-on v1.7.6 or later supports this function. You can exclude pods from log collection based on pod label groups. Labels in the same label group are in the AND relationship, and labels in different label groups are in the OR relationship. If a pod matches all labels in any label group, the pod is excluded. You can create up to 10 label groups, and each label group can contain up to five labels.

    For example, to exclude all pods of a workload in a namespace, enter the workload label, for example, app:test.

  - Workload: You can specify a workload and its containers. If no container is specified, logs of all containers running the workload will be collected.

  - Workload with target label: You can specify a workload by label and its containers. If no container is specified, logs of all containers running the workload will be collected.

- Container File Log:

  - Workload: You can specify a workload and its containers. If no container is specified, logs of all containers running the workload will be collected.

  - Workload with target label: You can specify a workload by label and its containers. If no container is specified, logs of all containers running the workload will be collected.

  You also need to specify the log collection path. For details, see the log path configuration requirements.

Log Format

- Single-line

Each log contains only one line of text. The newline character \n denotes the start of a new log.

- Multi-line

Some programs (for example, Java programs) print a single log entry across multiple lines. By default, logs are collected line by line. To collect such a log as a single message, enable multi-line logging and enter a regular expression that matches the first line of each log.

Example:

If each log starts with a timestamp, enter the following expression:

\d{4}-\d{2}-\d{2} \d{2}\:\d{2}\:\d{2}.*

With this pattern, the following three lines, beginning with the dated line, are collected as one log:

2022-01-01 00:00:00 Exception in thread "main" java.lang.RuntimeException: Something has gone wrong, aborting!
    at com.myproject.module.MyProject.badMethod(MyProject.java:22)
    at com.myproject.module.MyProject.oneMoreMethod(MyProject.java:18)

Report to the Log Tank Service (LTS)

This parameter is used to configure the log group and log stream for log reporting.

- Default log groups or streams: The default log group (k8s-log-{cluster-ID}) and default log stream (stdout-{cluster-ID}) are automatically selected.

- Custom log groups or streams: You can select any log group and log stream.

- A log group is the basic unit for LTS to manage logs. If you do not have a log group, CCE prompts you to create one. The default name is k8s-log-{cluster-ID}, for example, k8s-log-bb7eaa87-07dd-11ed-ab6c-0255ac1001b3.

- A log stream is the basic unit for reading and writing logs. You can create log streams in a log group to store different types of logs for finer log management. When you install the add-on or create a log collection policy based on a template, the following log streams are automatically created:

stdout-{cluster-ID} for standard output logs, for example, stdout-bb7eaa87-07dd-11ed-ab6c-0255ac1001b3

event-{cluster-ID} for Kubernetes events, for example, event-bb7eaa87-07dd-11ed-ab6c-0255ac1001b3
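The Blocklist rule in the Log Source row of Table 1 (AND within a label group, OR across groups) can be expressed as a short function. This is an illustrative sketch assuming pod labels are plain key/value strings; is_excluded is a hypothetical name, and treating an empty label group as invalid is an assumption of this sketch, not documented behavior.

```python
from typing import Dict, List


def is_excluded(pod_labels: Dict[str, str], label_groups: List[Dict[str, str]]) -> bool:
    """Return True if the pod matches every label in at least one group."""
    if len(label_groups) > 10:
        raise ValueError("up to 10 label groups are allowed")
    for group in label_groups:
        # Assumption: an empty group is rejected so it cannot exclude every pod.
        if not group or len(group) > 5:
            raise ValueError("each label group must contain 1 to 5 labels")
    # AND inside a group (all), OR across groups (any).
    return any(
        all(pod_labels.get(key) == value for key, value in group.items())
        for group in label_groups
    )
```

With the groups [{"app": "test"}, {"tier": "cache", "env": "dev"}], a pod labeled app=test is excluded by the first group, while a pod labeled tier=cache, env=prod matches neither group completely and is still collected.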
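The multi-line rule in the Log Format row of Table 1 works as follows: a line that matches the configured pattern starts a new log, and the following lines that do not match are appended to it. This Python sketch applies the timestamp pattern from that example to a Java stack trace; group_multiline is a hypothetical name, and the add-on's own parser may treat edge cases (such as lines that arrive before the first match) differently.

```python
import re
from typing import Iterable, List

# First-line pattern from the example: a log starts with "YYYY-MM-DD HH:MM:SS".
FIRST_LINE = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}\:\d{2}\:\d{2}.*")


def group_multiline(lines: Iterable[str]) -> List[str]:
    """Join continuation lines onto the log whose first line matched FIRST_LINE."""
    records: List[str] = []
    current: List[str] = []
    for line in lines:
        # A matching line closes the previous log and opens a new one.
        if FIRST_LINE.match(line) and current:
            records.append("\n".join(current))
            current = []
        # Non-matching lines (and lines before any match) join the current log.
        current.append(line)
    if current:
        records.append("\n".join(current))
    return records
```

Fed the three-line exception plus one more timestamped line, it returns two records: the whole stack trace as the first record and the next entry as the second.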

- Click Edit to modify an existing log collection policy.

- Click Delete to delete an existing log collection policy.

- View the logs.

- On the CCE console, click the cluster name to access the cluster console and choose Logging in the navigation pane.

- View different types of logs:

- Container Logs: displays all logs in the default log stream stdout-{cluster-ID} of the default log group k8s-log-{cluster-ID}. You can search for logs by workload.

Figure 5 Querying container logs

- Kubernetes Events: displays all Kubernetes events in the default log stream event-{cluster-ID} of the default log group k8s-log-{cluster-ID}.

- Global Log Query: You can view logs in the log streams of all log groups. You can specify a log stream to view the logs. By default, the default log group k8s-log-{cluster-ID} is selected. You can click the edit icon on the right of Switching Log Groups to switch to another log group.

Figure 6 Global log query

- Container Logs: displays all logs in the default log stream stdout-{cluster-ID} of the default log group k8s-log-{cluster-ID}. You can search for logs by workload.

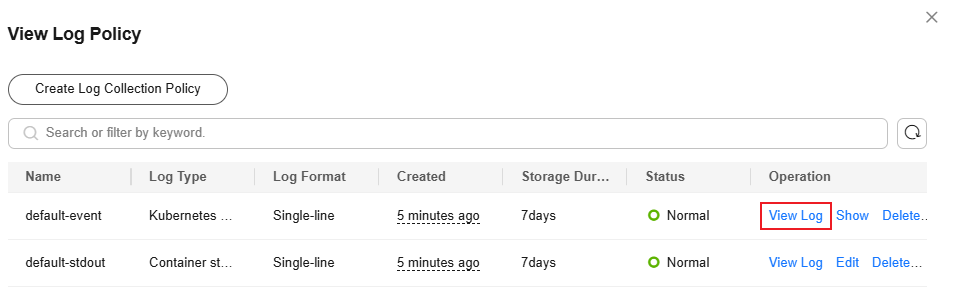

- Click View Log Policy in the upper right corner. Locate the log collection policy and click View Log to go to the log list.

Figure 7 Viewing logs

Troubleshooting

- All components except log-operator are not ready, and volumes fail to be mounted on the node.

Solution: Check the logs of log-operator. During add-on installation, log-operator generates the configuration files required by the other components. If these configuration files are invalid, none of the other components can start.

The log information is as follows:

MountVolume.SetUp failed for volume "otel-collector-config-vol":configmap "log-agent-otel-collector-config" not found

- "Failed to create log group, the number of log groups exceeds the quota" is reported in the standard output log of log-operator.

Example:

2023/05/05 12:17:20.799 [E] call 3 times failed, reason: create group failed, projectID: xxx, groupName: k8s-log-xxx, err: create groups status code: 400, response: {"error_code":"LTS.0104","error_msg":"Failed to create log group, the number of log groups exceeds the quota"}, url: https://lts.xxx.myhuaweicloud.com/v2/xxx/groups, process will retry after 45s

Solution: On the LTS console, delete unnecessary log groups. For details about the log group quota, see Managing Log Groups.
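When scanning log-operator output for this failure, the LTS error code can be pulled out of the "response: {...}" part of the line. A minimal sketch, assuming the line format shown in the example (a flat JSON object after "response: "); extract_lts_error is a hypothetical helper, and other log-operator messages may be formatted differently.

```python
import json
import re
from typing import Optional

# Non-greedy match of the flat JSON object that follows "response: ".
_RESPONSE_RE = re.compile(r"response: (\{.*?\})")


def extract_lts_error(line: str) -> Optional[dict]:
    """Return the parsed LTS error response in a log-operator line, if any."""
    match = _RESPONSE_RE.search(line)
    if match is None:
        return None
    try:
        return json.loads(match.group(1))
    except json.JSONDecodeError:
        return None
```

A result whose "error_code" is "LTS.0104" indicates the log group quota problem described in this item.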

- Logs cannot be reported, and "log's quota has full" is reported in the standard output log of the OTel component.

Solution:

LTS provides a free log quota, and log usage beyond the quota is billed. If this error is reported, the free quota has been used up. To continue collecting logs, log in to the LTS console, choose Configuration Center in the navigation pane, and enable Continue to Collect Logs When the Free Quota Is Exceeded.

Figure 8 Quota configuration

- Text logs cannot be collected because wildcards are configured for the collection directory.

Troubleshooting: Check the volume mounting status in the workload configuration. If a volume is attached to the data directory of a service container, this add-on cannot collect data from the parent directory. In this case, you need to set the collection directory to a complete data directory. For example, if the data volume is attached to the /var/log/service directory, logs cannot be collected from the /var/log or /var/log/* directory. In this case, you need to set the collection directory to /var/log/service.

Solution: If the log generation directory is /application/logs/{application-name}/*.log, attach the data volume to the /application/logs directory and set the collection directory in the log collection policy to /application/logs/*/*.log.
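The rule in this item can be expressed as a quick pre-check: the part of the collection path before any wildcard must be the volume mount path itself or a directory inside it. This sketch encodes only the examples given here, assuming that is the whole rule; is_collectable is a hypothetical name, and the add-on may apply additional conditions.

```python
from pathlib import PurePosixPath


def _fixed_prefix(collection_dir: str) -> PurePosixPath:
    """Return the part of the path before the first wildcard level."""
    fixed = []
    for part in PurePosixPath(collection_dir).parts:
        if "*" in part or "?" in part:
            break
        fixed.append(part)
    return PurePosixPath(*fixed)


def is_collectable(mount_path: str, collection_dir: str) -> bool:
    """True if the non-wildcard part of the path is the mount path or lies inside it."""
    prefix = _fixed_prefix(collection_dir)
    mount = PurePosixPath(mount_path)
    return prefix == mount or mount in prefix.parents
```

For a volume mounted at /var/log/service, the collection directories /var/log and /var/log/* are rejected and /var/log/service is accepted; with the volume at /application/logs, /application/logs/*/*.log is accepted.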
