Typical Deployment Architecture for Internal Knowledge Management Applications (99.9% Availability)

Internal knowledge management applications are proprietary and used internally. If these applications fail, they may cause disruptions in related operations and bring inconvenience to operators, but their RTO and RPO can be relatively long. These applications require an availability of 99.9%, that is, up to 8.76 hours of downtime per year.

The service interruption time includes the interruption time due to faults and the interruption time caused by upgrades, configuration, and maintenance. Assume that the interruption time is as follows:

- Fault-caused interruptions: Assume that there are four fault-caused interruptions each year. It takes 30 minutes to decide on the emergency recovery for each interruption and 30 minutes to recover application services. Thus, the total yearly interruption time is 240 minutes.

- Change-caused interruptions: Assume that an application is updated offline for eight times every year, and each update takes 30 minutes. Then the yearly update time is 240 minutes.

As estimated above, the application system will be unavailable for 480 minutes each year, which meets the availability design requirements.

An internal knowledge management application usually consists of two main parts: a stateless application layer at the frontend and a database at the backend. The frontend stateless application layer can be composed of ECS instances; while the backend typically uses RDS for MySQL, depending on the service type. Other common backend options include DDS and some middleware, such as DCS or Kafka. To meet the availability requirement, the recommended solution is as follows:

|

Item |

Solution |

|---|---|

|

Redundancy |

Deploy cloud service instances in HA mode, such as ELB, RDS, DCS, Kafka, and DDS instances. |

|

Backup |

Configure the RDS and DDS databases to automatically back up data, and configure the stateful ECS instances to automatically back up data through CBR. In case of a fault, the latest backup data can be used to restore services, meeting the availability requirements. |

|

DR |

Configure the applications to use services that support cross-AZ deployment. Deploy ELB and RDS across AZs. When a fault occurs in an AZ, services are automatically recovered. Configure the stateful ECS instances to use SDRS for cross-AZ DR. When a fault occurs in an AZ, a manual switchover can be performed. |

|

Monitoring metrics and alarms |

Monitor and check site statuses and instance workloads. If a site fails or an ECS or RDS instance is overloaded, an alarm is reported. |

|

Auto scaling |

Resources are sufficient for internal operations, making auto scaling unnecessary. For ECS instances, use ELB for fault detection and load balancing, and add or remove the ECS instances on demand based on the monitoring statistics, so as to enhance the application performance. For RDS, change the instance type or add read-only nodes during maintenance based on the workload monitoring statistics. |

|

Change error prevention |

Update software offline and replace it without changing its position. In addition, each application needs to be automatically deployed and rolled back as instructed by a runbook. The software is updated every one to two months. |

|

Emergency recovery |

Develop an emergency handling mechanism and designate related personnel to quickly make decisions and recover services. Provide solutions to common application and database problems as well as upgrade and deployment failures. |

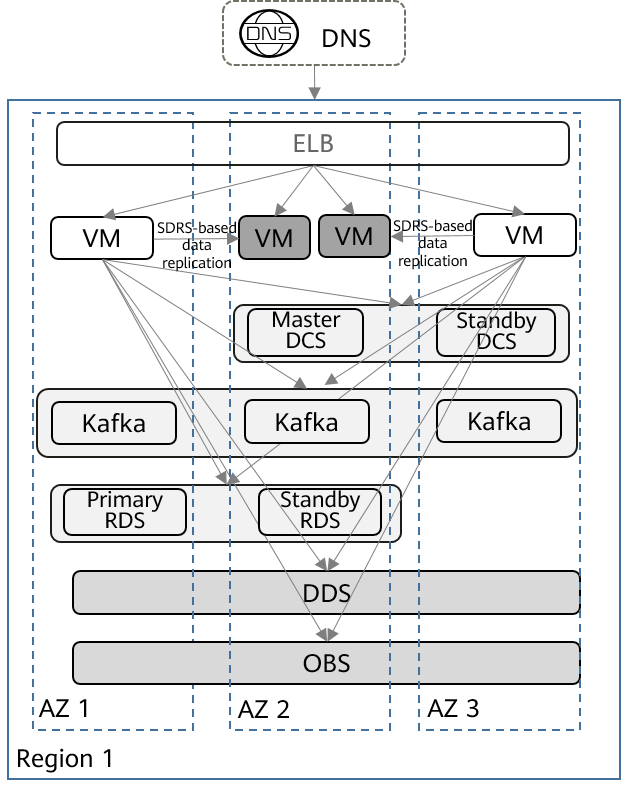

The typical deployment architecture is as follows:

This architecture has the following features:

- The application system is layered with stateful VMs and stateful databases.

- The application system is deployed across AZs in a single region of Huawei Cloud. Stateful VMs are deployed as active/standby pairs, with the active and standby ones in separate AZs and data replicated from the active one to the standby one. In this way, cross-DC application DR can be implemented on the cloud.

- Access layer (external DNS): The external DNS is used for domain name resolution and traffic load balancing. The failure of a single AZ does not affect services.

- Application layer (ELBs, application software, and VMs): For stateful applications, SDRS is used for cross-AZ data replication and DR failover between VMs, and CBR is used by applications for automatic data backup.

- Middleware layer: Redis and Kafka clusters are deployed across AZs in HA mode. The failure of a single AZ does not affect services.

- Data layer: RDS, DDS, and OBS are deployed across AZs in HA mode. The failure of a single AZ does not affect services.

- The RDS database data is automatically backed up to OBS periodically to ensure that data can be quickly restored in the case of data loss.

Feedback

Was this page helpful?

Provide feedbackThank you very much for your feedback. We will continue working to improve the documentation.See the reply and handling status in My Cloud VOC.

For any further questions, feel free to contact us through the chatbot.

Chatbot