Basic Concepts

RCU

The ROMA Compute Unit (RCU) is the unit of computing capacity in the new edition of ROMA Connect. RCUs can be allocated to different integration capabilities, including FDI, APIC, MQS, LINK, and composable applications. The performance specifications of each integration capability depend on the number of RCUs allocated to it: the more RCUs, the higher the specifications.

Connector

A connector is a custom data source plug-in. ROMA Connect supports common data source types, such as relational databases, big data storage, semi-structured storage, and message systems. If these data source types cannot meet your data integration requirements, you can develop a custom read/write plug-in. The plug-in connects to ROMA Connect through a standard RESTful API, which enables ROMA Connect to read from and write to these data sources.

Environment

An environment refers to the usage scope of an API. You can call an API only after you publish it in an environment. You can publish APIs in different custom environments, such as the development environment and test environment. RELEASE is the default environment for formal publishing.

Environment Variable

Environment variables are specific to environments. You can create environment variables in different environments to call different backend services by using the same API.
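The idea can be sketched in a few lines of Python. This is only an illustration, not ROMA Connect's implementation: the `#name#` placeholder syntax, the variable name `backend_host`, the host names, and the `resolve_backend` helper are all assumptions made for the example.

```python
import re

# Hypothetical per-environment variables; names and hosts are illustrative only.
ENV_VARIABLES = {
    "RELEASE": {"backend_host": "prod.example.com"},
    "test": {"backend_host": "test.example.com"},
}


def resolve_backend(template: str, environment: str) -> str:
    """Replace each #name# placeholder with the value defined for the environment."""
    variables = ENV_VARIABLES[environment]
    return re.sub(r"#(\w+)#", lambda match: variables[match.group(1)], template)


# One API definition; the backend address depends on where the API is published.
API_BACKEND = "https://#backend_host#/orders"

release_url = resolve_backend(API_BACKEND, "RELEASE")
test_url = resolve_backend(API_BACKEND, "test")
print(release_url)
print(test_url)
```

The API definition stays the same in both environments; only the variable values differ.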

Load Balance Channel

A load balance channel allows ROMA Connect to access ECSs in the same VPC and use the backend services deployed on the ECSs to expose APIs. In addition, the load balance channel can balance access requests sent to backend services.
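As a rough sketch of what balancing requests across backend servers can look like, the following Python example rotates through a list of servers in round-robin order. Round-robin is assumed here for illustration only; the class name, method name, and server addresses are hypothetical and do not describe how ROMA Connect distributes requests.

```python
from itertools import cycle


class RoundRobinChannel:
    """Hands out backend servers in turn (an assumed strategy, for illustration)."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one backend server is required")
        self._servers = cycle(servers)

    def next_server(self):
        """Return the server that should receive the next request."""
        return next(self._servers)


# Hypothetical ECS addresses in the same VPC.
channel = RoundRobinChannel(["192.168.0.11:8080", "192.168.0.12:8080"])
picked = [channel.next_server() for _ in range(4)]
print(picked)
```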

Producer

A producer is a party that publishes messages to topics. The messages are then delivered to other systems for processing.

Consumer

A consumer is a party that subscribes to messages from topics. The ultimate purpose of subscribing to messages is to process the message content. For example, in a log integration scenario, the alarm monitoring platform functions as a consumer to subscribe to log messages from topics, identify alarm logs, and send alarm messages or emails.

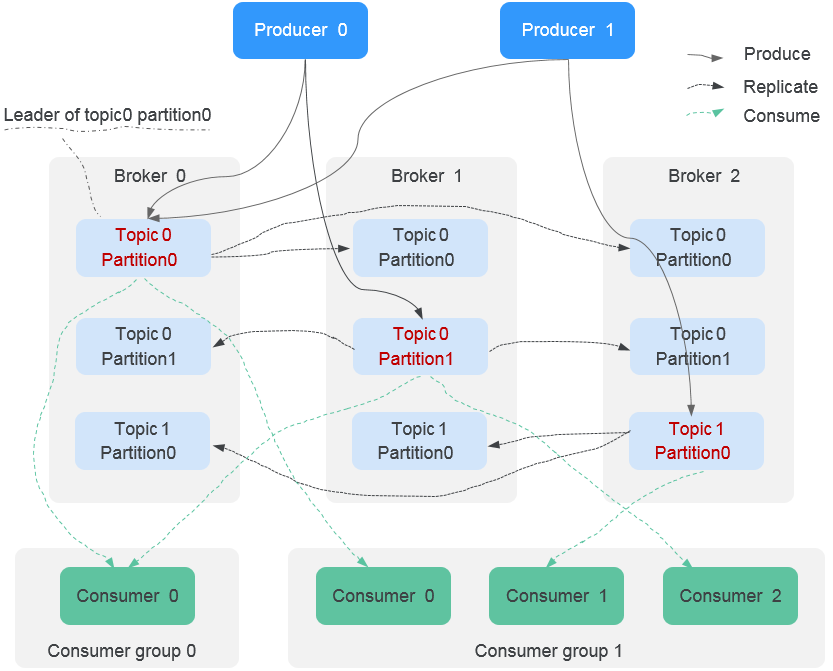

Partition

A topic is a placeholder for your messages in Kafka and is further divided into partitions. Messages are stored across different partitions in a distributed manner, which enables horizontal scaling and high availability of Kafka.
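One common way to spread messages across partitions is to hash the message key. The sketch below illustrates the principle only: it uses CRC32 as a stand-in hash, which is not the hash function Kafka's own partitioner uses, and the `choose_partition` helper and sample keys are made up for the example.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a message key to a partition index using a stable hash (CRC32 here)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 3
partitions = {index: [] for index in range(NUM_PARTITIONS)}

messages = [("device-1", "on"), ("device-2", "off"), ("device-1", "off")]
for key, value in messages:
    partitions[choose_partition(key, NUM_PARTITIONS)].append((key, value))

# Messages with the same key always land in the same partition, in send order.
device_1_partition = partitions[choose_partition("device-1", NUM_PARTITIONS)]
print([value for key, value in device_1_partition if key == "device-1"])
```

Because the hash is stable, adding more partitions spreads different keys over more storage while keeping each key's messages together.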

Replica

To improve message reliability, each Kafka partition has multiple replicas that back up its messages. Each replica stores all data of a partition and synchronizes messages with the other replicas. One replica of a partition acts as the leader, which handles the creation and retrieval of all messages. The remaining replicas are followers, which replicate the leader.
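The leader and follower roles can be pictured with a small in-memory model. This is a deliberately simplified sketch: followers here are copied to synchronously on every write, and the `fail_over` method is a hypothetical helper added to show why replicas improve reliability. It is not how Kafka replication is implemented.

```python
class Partition:
    """Toy partition with one leader replica and follower replicas."""

    def __init__(self, replica_count: int):
        if replica_count < 1:
            raise ValueError("a partition needs at least one replica")
        self.replicas = [[] for _ in range(replica_count)]
        self.leader = 0  # index of the replica that serves reads and writes

    def append(self, message: str) -> None:
        """Write to the leader, then copy the message to every follower."""
        self.replicas[self.leader].append(message)
        for index, log in enumerate(self.replicas):
            if index != self.leader:
                log.append(message)

    def read(self, offset: int) -> str:
        """All reads are served by the current leader."""
        return self.replicas[self.leader][offset]

    def fail_over(self, new_leader: int) -> None:
        """Promote a follower to leader (hypothetical helper for this sketch)."""
        if not 0 <= new_leader < len(self.replicas):
            raise IndexError("no such replica")
        self.leader = new_leader


partition = Partition(replica_count=3)
partition.append("order-created")
partition.fail_over(1)
print(partition.read(0))  # the message is still available from the new leader
```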

The topic is a logical concept, whereas the partition and broker are physical concepts. The following figure shows the relationship between partitions, brokers, and topics of Kafka based on the message production and consumption directions.

Topic

A topic is a model for publishing and subscribing to messages in a message queue. Messages are produced, consumed, and managed based on topics. A producer publishes a message to a topic. Multiple consumers subscribe to the topic. The producer does not have a direct relationship with the consumers.
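The decoupling between producers and consumers can be illustrated with a minimal in-memory publish/subscribe sketch. The `Broker` class and the topic name `logs` are invented for the example, and messages are pushed to handlers immediately, which is a simplification compared with a real message queue where consumers fetch messages themselves.

```python
from collections import defaultdict


class Broker:
    """Toy broker: routes each published message to every subscriber of its topic."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        """Register a consumer callback for a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        """Deliver a message to all consumers subscribed to the topic."""
        for handler in self._subscribers[topic]:
            handler(message)


broker = Broker()
alarms, archive = [], []

# Two independent consumers of the same topic: an alarm monitor and an archiver.
broker.subscribe("logs", lambda msg: alarms.append(msg) if "ERROR" in msg else None)
broker.subscribe("logs", archive.append)

# The producer only knows the topic name, not who consumes the messages.
broker.publish("logs", "INFO service started")
broker.publish("logs", "ERROR disk full")
print(alarms)
print(archive)
```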

Product

A product is a collection of devices with the same capabilities or features. Each device belongs to a product. You can define a product to determine the functions and attributes of a device.

Thing Model

A thing model defines the service capabilities of a device, that is, what the device can do and what information the device can provide for external systems. After the capabilities of a device are divided into multiple thing model services, define the attributes, commands, and command fields of each thing model service.
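To make the structure concrete, the sketch below models a product, its thing model services, and their attributes, commands, and command fields as plain Python data classes. The class names, the thermostat example, and the data type strings are assumptions chosen for illustration and do not reflect ROMA Connect's actual schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Command:
    """A command a device accepts, with the names of its command fields."""
    name: str
    fields: List[str] = field(default_factory=list)


@dataclass
class ThingModelService:
    """One capability of a device: reported attributes plus accepted commands."""
    name: str
    attributes: Dict[str, str] = field(default_factory=dict)  # attribute name -> data type
    commands: List[Command] = field(default_factory=list)


@dataclass
class Product:
    """A collection of devices that share the same thing model services."""
    name: str
    services: List[ThingModelService] = field(default_factory=list)


# Hypothetical product: a thermostat with a single "temperature" service.
thermostat = Product(
    name="thermostat",
    services=[
        ThingModelService(
            name="temperature",
            attributes={"current_temperature": "decimal"},
            commands=[Command(name="set_target", fields=["target_temperature"])],
        )
    ],
)
print(thermostat.services[0].commands[0].fields)
```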

Rule Engine

A rule engine allows you to configure forwarding rules so that data reported by devices can be forwarded to other cloud services for storage or further analysis.
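A forwarding rule can be thought of as a match condition plus a destination. The following sketch assumes rules match on the product name and that destinations are simple callables; the `ForwardingRule` class, the `forward` function, and the report layout are hypothetical and serve only to show the data flow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ForwardingRule:
    """Forward reports from devices of one product to a destination."""
    product: str
    destination: Callable[[Dict], None]


def forward(report: Dict, rules: List[ForwardingRule]) -> int:
    """Send the report to every matching destination; return the match count."""
    matched = 0
    for rule in rules:
        if rule.product == report["product"]:
            rule.destination(report)
            matched += 1
    return matched


storage: List[Dict] = []  # stands in for a storage or analysis service
rules = [ForwardingRule(product="thermostat", destination=storage.append)]

forwarded = forward({"product": "thermostat", "current_temperature": 21.5}, rules)
ignored = forward({"product": "camera", "status": "online"}, rules)
print(forwarded, ignored, storage)
```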
