What Should I Do If Error "The connector is trying to read binlog starting at Struct xxx but this is no longer available on the server" Is Reported During Incremental Synchronization of MySQL Data?

Symptom

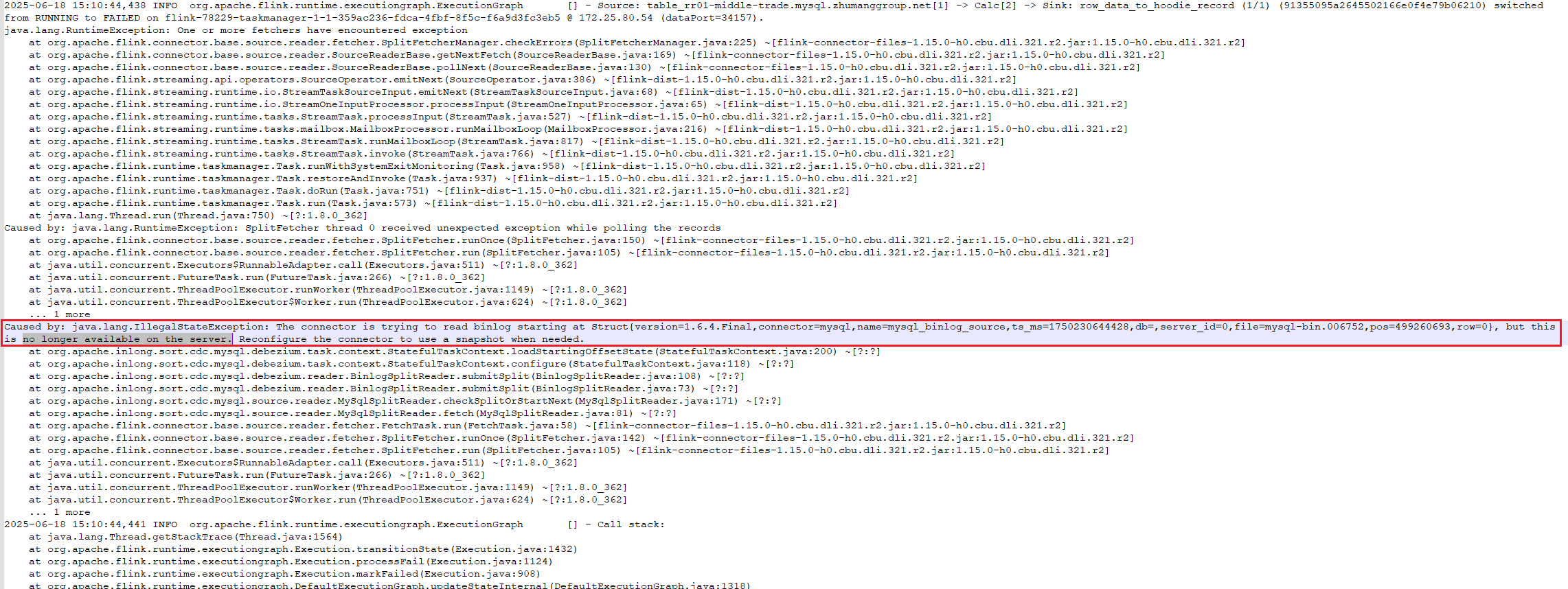

During incremental synchronization of MySQL data, or when a job is resumed after being suspended for a period, the job becomes abnormal and reports the following error: "The connector is trying to read binlog starting at Struct [xxx] but this is no longer available on the server", where [xxx] contains the binlog position information. The error appears in the taskmanager and jobmanager logs.

Possible Causes

The migration job tries to read a specific binlog file from the MySQL database, but that file has already been deleted from the server.
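To confirm this cause, you can compare the binlog file named in the error with the files the server still holds, which the MySQL statement `SHOW BINARY LOGS` lists in its `Log_name` column. The sketch below assumes the Struct is printed as comma-separated key=value pairs that include `file`, `pos`, and `ts_ms`; the sample string and the function names are hypothetical, so check the exact format in your own logs.

```python
import re

# Assumption: the Struct in the error text looks like
#   Struct{...,ts_ms=1750230644428,file=mysql-bin.000010,pos=2373,...}
# Keys are word characters; values run until the next comma or closing brace.
_PAIR = re.compile(r"(\w+)=([^,{}\]]+)")


def parse_binlog_struct(text: str) -> dict:
    """Extract key=value pairs (file, pos, ts_ms, ...) from the Struct text.

    Keys with empty values (for example "db=") are skipped.
    """
    return {key: value.strip() for key, value in _PAIR.findall(text)}


def is_binlog_available(required_file: str, available_files: list) -> bool:
    """Return True if the file the job needs is still listed by SHOW BINARY LOGS."""
    return required_file in available_files
```

If `is_binlog_available` returns False for the file in the error, the binlog was purged before the job could read it, which matches this cause.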

Binary logging must be enabled for real-time data extraction from a MySQL database. If binlog is disabled, or if the binlog retention period is too short (for example, shorter than the time a job may stay suspended), the binlog files the job still needs are purged before it reads them, and the job fails. For other required settings and permissions, see Constraints on the MySQL Source.
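A minimal sketch for checking the binlog settings behind this cause, assuming you have already fetched the server variables into a dict of strings. The ROW format and FULL row image checks reflect common requirements of Debezium-style CDC connectors and are assumptions here; the authoritative list is in Constraints on the MySQL Source. `min_retention_seconds` is a hypothetical threshold you choose, for example the longest period a job may stay suspended. On managed MySQL instances, local binlog files may also be purged by the provider's own retention policy, which these variables do not show.

```python
# Variables could be fetched with, for example:
#   SHOW VARIABLES WHERE Variable_name IN
#     ('log_bin', 'binlog_format', 'binlog_row_image',
#      'binlog_expire_logs_seconds', 'expire_logs_days');


def binlog_retention_seconds(variables: dict) -> int:
    """Effective retention in seconds; 0 is treated here as 'MySQL does not expire binlogs'."""
    # MySQL 8.0 uses binlog_expire_logs_seconds; 5.7 uses expire_logs_days.
    seconds = int(variables.get("binlog_expire_logs_seconds", "0") or 0)
    if seconds > 0:
        return seconds
    days = int(variables.get("expire_logs_days", "0") or 0)
    return days * 86400


def check_binlog_settings(variables: dict, min_retention_seconds: int) -> list:
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    if str(variables.get("log_bin", "")).upper() != "ON":
        problems.append("log_bin is not ON: binary logging is disabled")
    # Assumption: the connector needs row-based events with full row images.
    if str(variables.get("binlog_format", "")).upper() != "ROW":
        problems.append("binlog_format is not ROW")
    if str(variables.get("binlog_row_image", "FULL")).upper() != "FULL":
        problems.append("binlog_row_image is not FULL")
    retention = binlog_retention_seconds(variables)
    if 0 < retention < min_retention_seconds:
        problems.append(
            f"binlog retention {retention}s is shorter than {min_retention_seconds}s"
        )
    return problems
```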

Solution

- Scenario 1: supplementing data for a primary key table

- After evaluating the impact on services, stop the job immediately and then restart it. When restarting, set the start point as early as possible to prevent further loss of incremental data.

- Check the data that has been lost.

When this error occurs, the binlog data is already lost and cannot be recovered by the real-time job. Identify the lost data and write it to the destination database using JDBC.

- You can determine the data to be supplemented based on your service logic.

- You can also obtain a relatively accurate range of the data that has been lost using the following method:

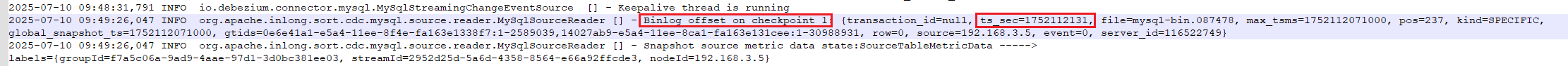

The start time of the data loss is given by the ts_ms timestamp in the error log. For example, ts_ms=1750230644428 in Figure 2 corresponds to 2025-06-18 15:10:44 (UTC+8), so data supplementation must start before 15:10:44 on June 18, 2025.

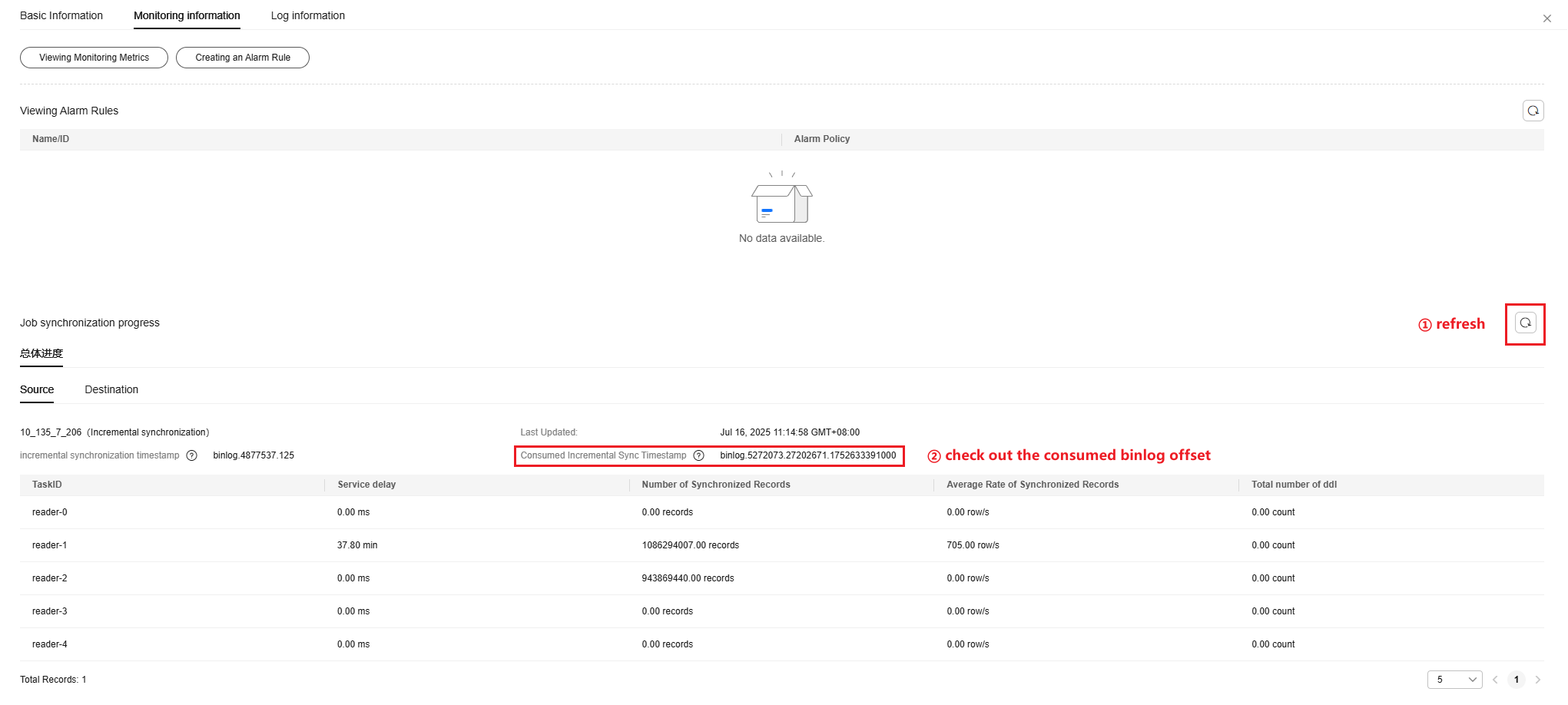

The end time of the data loss is approximately the consumption position at which the job resumes after the restart. For example, the consumption position in Figure 3 is 1752071512000 (2025-07-09 22:31:52, UTC+8), so data supplementation must end after 22:31:52 on July 9, 2025. To get the exact end time of the data loss, open the taskmanager log, search for the keyword "binlog offset", and read the ts_sec value of the first checkpoint.

The preceding values are examples only. Use the values from your own job logs.
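The timestamp conversions and the "start before / end after" rule above can be sketched as follows. ts_ms is in milliseconds and ts_sec in seconds. The sketch assumes the times should be read in UTC+8, which is the offset that matches the example values, and adds a hypothetical safety margin on both ends of the window; the function names are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Assumption: UTC+8, the offset that matches the example values above.
UTC8 = timezone(timedelta(hours=8))


def to_utc8(ts: int, unit: str = "ms") -> datetime:
    """Convert an epoch timestamp (ts_ms in ms, or ts_sec with unit="s") to UTC+8."""
    ms = ts if unit == "ms" else ts * 1000
    # Integer split avoids floating-point rounding of the millisecond part.
    return datetime.fromtimestamp(ms // 1000, tz=UTC8) + timedelta(milliseconds=ms % 1000)


def supplement_window(loss_start_ts_ms: int, resume_ts: int, resume_unit: str = "ms",
                      margin: timedelta = timedelta(minutes=10)):
    """Widen [loss start, resume position] by a margin so no lost row falls outside."""
    start = to_utc8(loss_start_ts_ms) - margin
    end = to_utc8(resume_ts, resume_unit) + margin
    return start, end
```

For example, `supplement_window(1750230644428, 1752071512000)` gives a window from 2025-06-18 15:00:44.428 to 2025-07-09 22:41:52 (UTC+8). Pass `resume_unit="s"` when using the ts_sec value from the taskmanager log.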

- Use an offline job to supplement data.

Select the lost data by a service time field (for example, create_time or update_time), and create an offline data migration job or a CDM job to migrate it to the destination.

Stop the real-time job before offline data supplementation.
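For a primary key table, writing the supplemented rows as an upsert keyed on the primary key makes the step safe to repeat, so a window that deliberately overlaps already-synchronized data does not create duplicates. The sketch below illustrates that logic only. It uses in-memory SQLite databases as stand-ins for the source and destination so it runs on its own; on a MySQL-compatible destination the equivalent statement is `INSERT ... ON DUPLICATE KEY UPDATE`. The `orders` table, its columns, and the window bounds are hypothetical, and the offline migration job or CDM job performs the real transfer.

```python
import sqlite3


def fetch_window(src, table, columns, time_col, start, end):
    """Select rows whose service time field falls inside [start, end]."""
    # Identifiers are interpolated for brevity; pass only trusted names.
    cols = ", ".join(columns)
    sql = f"SELECT {cols} FROM {table} WHERE {time_col} >= ? AND {time_col} <= ?"
    return src.execute(sql, (start, end)).fetchall()


def upsert_rows(dst, table, columns, pk, rows):
    """Insert rows, updating existing ones that share the primary key."""
    cols = ", ".join(columns)
    marks = ", ".join("?" for _ in columns)
    updates = ", ".join(f"{c} = excluded.{c}" for c in columns if c != pk)
    sql = (f"INSERT INTO {table} ({cols}) VALUES ({marks}) "
           f"ON CONFLICT({pk}) DO UPDATE SET {updates}")
    dst.executemany(sql, rows)
    dst.commit()


def demo():
    src = sqlite3.connect(":memory:")
    dst = sqlite3.connect(":memory:")
    for db in (src, dst):
        db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount INTEGER, update_time TEXT)")
    src.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
        (1, 10, "2025-06-18 15:05:00"),
        (2, 20, "2025-06-20 09:00:00"),
        (3, 30, "2025-07-10 08:00:00"),  # after the window: already synced by the real-time job
    ])
    # Row 1 reached the destination before the binlog was lost.
    dst.execute("INSERT INTO orders VALUES (1, 10, '2025-06-18 15:05:00')")
    cols = ["id", "amount", "update_time"]
    # Window bounds as ISO text (same local time zone as the data), so string comparison works.
    rows = fetch_window(src, "orders", cols, "update_time",
                        "2025-06-18 15:00:44", "2025-07-09 22:41:52")
    upsert_rows(dst, "orders", cols, "id", rows)
    upsert_rows(dst, "orders", cols, "id", rows)  # replaying the same range is harmless
    return dst.execute("SELECT id, amount, update_time FROM orders ORDER BY id").fetchall()


if __name__ == "__main__":
    print(demo())
```

Scenario 2 below differs because, without a primary key, replaying an overlapping range would insert duplicates.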

- Scenario 2: non-primary key table or no service time field

Identify the lost data by filtering on other fields, and then supplement it offline.

After evaluating the service impact, you can migrate the entire table again if necessary.
